Technical SEO for Rubber, Plastics & Silicone Products (2025): Crawl Budget, Faceted Navigation & Page Speed

If your catalog spans thousands of SKUs, variants, and spec sheets, technical SEO is won (or lost) in three places: how wisely bots crawl, how safely filters generate URLs, and how fast your product pages ship pixels. Based on hands-on work with industrial manufacturers, here’s a field-tested playbook—no fluff, just what actually fixes indexation and Core Web Vitals at scale.

When Crawl Budget Actually Matters for Industrial Catalogs

Crawl budget isn’t a vanity metric. It matters when you see symptoms like “Discovered – currently not indexed,” slow recrawl of updated specs, or Googlebot spending more time on sort/view parameters than on high-value categories. Google’s definition (updated 2024) splits crawl budget into a crawl capacity limit (how much fetching your server can absorb) and crawl demand (how much Google wants your content); large, frequently updated sites are most affected, especially with parameter sprawl and legacy URLs. See the official explanation in Google’s Managing crawl budget for large sites (2024) and Search Engine Land’s 2025 crawl budget overview.

Typical industrial triggers:

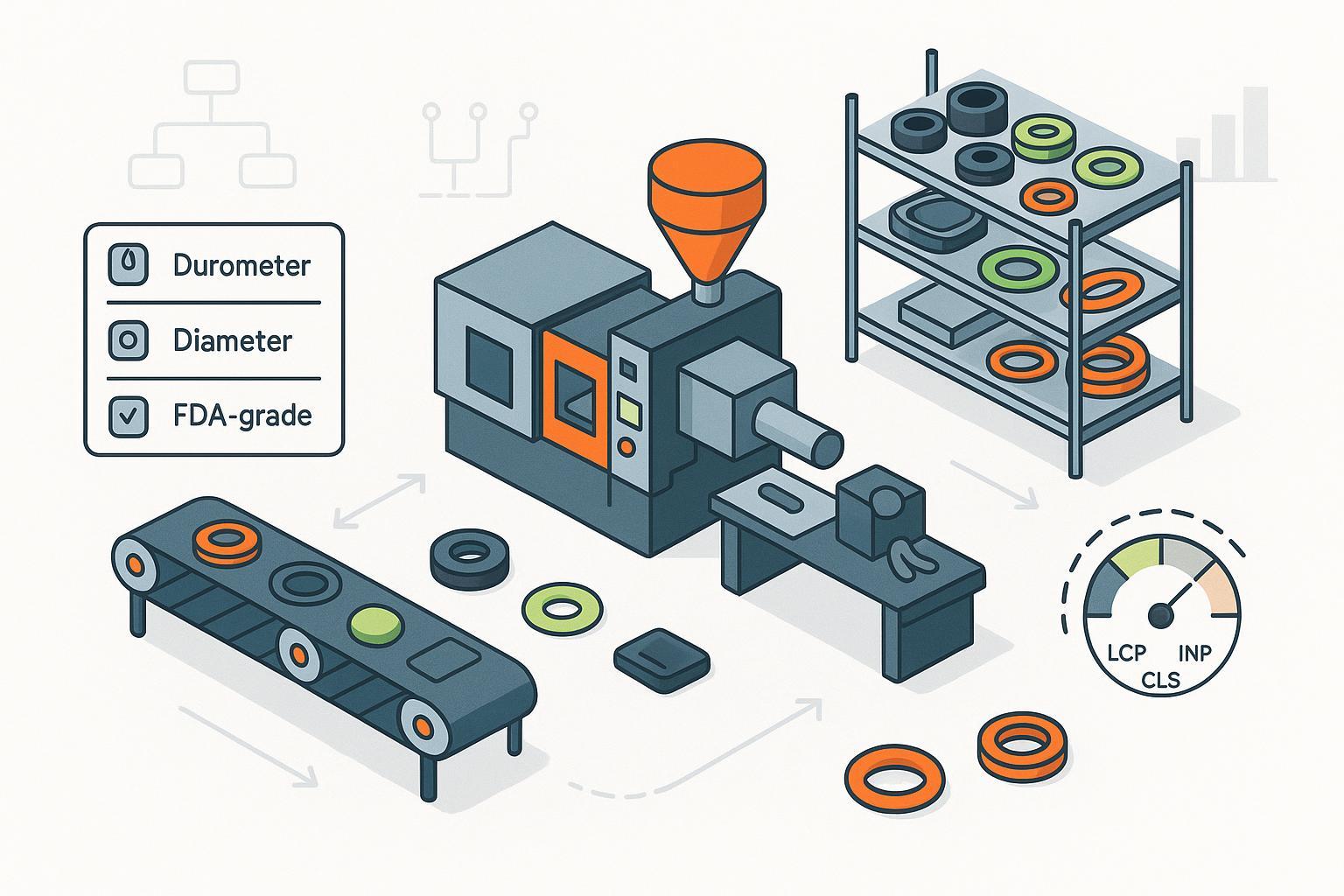

- Variant explosions (durometer, diameter, color, FDA grade) create near-infinite URL spaces.

- Technical documents (PDFs, CAD) soak up requests without adding index equity.

- Heavy JS/catalog frameworks cause slow responses that throttle crawl rate.

Crawl Budget—A Diagnostics-First Workflow

I’ve found the fastest wins come from disciplined triage, then surgical controls.

- Baseline with GSC Crawl Stats and logs

- In Google Search Console, review requests by type, average response time, and spikes in 5xx/429. Correlate crawl allocation with key categories/products. Google details this in Managing crawl budget (2024).

- Parse server logs (verify Googlebot via reverse DNS or Google’s published IP ranges) to quantify waste: high-frequency hits to ?sort=, ?view=, empty-result filters, or legacy paths. Sitebulb’s methodology for depth and efficiency helps structure the audit (Sitebulb guide on crawl depth, 2024+).

- Apply the right control for the right problem

- robots.txt controls crawling, not indexing. Don’t disallow pages that rely on a noindex directive; Google must fetch a page to see it. See Google’s robots.txt introduction and Block search indexing with noindex.

- Canonicalization consolidates duplicates (including non-HTML via HTTP header rel=canonical). Reference: Google—Consolidate duplicate URLs.

Example robots.txt for low-value params (tailor to your patterns):

User-agent: *

# Crawl waste

Disallow: /*?sort=

Disallow: /*&sort=

Disallow: /*?view=

Disallow: /*&view=

Disallow: /*?session=

Disallow: /*&session=

# Keep paginated discovery open

Allow: /*?page=
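Before shipping rules like these, it helps to test them against real URLs from your logs. Below is a minimal sketch of a matcher, assuming the commonly documented model: `*` matches any run of characters, a trailing `$` anchors the end, the longest matching pattern wins, and Allow wins ties. The helper names (`patternToRegExp`, `isAllowed`) and sample URLs are hypothetical, and this is not a full robots.txt parser.

```javascript
// Hypothetical helper: convert a robots.txt path pattern into a RegExp.
function patternToRegExp(pattern) {
  const anchored = pattern.endsWith("$");
  const body = anchored ? pattern.slice(0, -1) : pattern;
  const escaped = body
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp("^" + escaped + (anchored ? "$" : ""));
}

// Returns true when the path+query may be crawled under the given rules.
function isAllowed(pathAndQuery, rules) {
  let best = { allow: true, length: -1 }; // no matching rule means allowed
  for (const rule of rules) {
    if (!patternToRegExp(rule.pattern).test(pathAndQuery)) continue;
    const length = rule.pattern.length;
    // Longest pattern wins; on equal length the Allow rule wins.
    if (length > best.length || (length === best.length && rule.allow)) {
      best = { allow: rule.allow, length };
    }
  }
  return best.allow;
}

const rules = [
  { allow: false, pattern: "/*?sort=" },
  { allow: false, pattern: "/*?view=" },
  { allow: false, pattern: "/*?session=" },
  { allow: true, pattern: "/*?page=" },
];

const samples = [
  "/silicone-tubing?sort=price",
  "/silicone-tubing?page=2",
  "/silicone-tubing?diameter=6mm&sort=price",
];
for (const url of samples) {
  console.log(url, isAllowed(url, rules) ? "allowed" : "blocked");
}
```

The third sample is the one to watch: a pattern such as `/*?sort=` only matches when sort is the first parameter, so an extra `/*&sort=` pattern is one way to cover later positions.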

Example X-Robots-Tag header for non-HTML you don’t want indexed:

X-Robots-Tag: noindex, noarchive

HTTP header canonical for a PDF pointing to its HTML equivalent:

Link: <https://www.example.com/specs/silicone-tubing>; rel="canonical"

- Sitemaps as a prioritization signal

- List only canonical 200 URLs, split large files, gzip, and maintain accurate lastmod so Google knows what to recrawl. See Build and submit sitemaps and Large sitemaps & indexes. Google clarified lastmod usage in 2023 (Sitemaps lastmod guidance).

- Pagination and infinite scroll the SEO-safe way

- Google no longer uses rel=next/prev; focus on crawlable paginated URLs and progressive enhancement. Guidance in Search Engine Land’s 2025 pagination explainer and Google’s lazy loading/infinite scroll pattern.

- Retire legacy crutches

- The URL Parameters tool was deprecated in 2022; the modern stack is architecture + canonicals + robots + internal linking, monitored via Crawl Stats. See Google’s deprecation notice (2022).
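The sitemap rules above (canonical 200 URLs only, accurate lastmod, split large files) can be sketched as a small generator. This is an illustration, not a drop-in tool: the page record shape (`url`, `status`, `canonical`, `updatedAt`) and the function names are assumptions, and the 50,000-URL cap per file comes from the sitemaps protocol.

```javascript
// Escape characters that are not allowed raw inside XML text nodes.
function escapeXml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical input: [{ url, status, canonical, updatedAt }, ...]
function buildSitemap(pages) {
  const entries = pages
    // Keep only pages that respond 200 and are their own canonical.
    .filter((p) => p.status === 200 && p.canonical === p.url)
    .map((p) => {
      // lastmod as a UTC calendar date (YYYY-MM-DD).
      const lastmod = new Date(p.updatedAt).toISOString().slice(0, 10);
      return `  <url>\n    <loc>${escapeXml(p.url)}</loc>\n    <lastmod>${lastmod}</lastmod>\n  </url>`;
    });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// Split a large page list into chunks that each fit in one sitemap file.
function splitForSitemaps(pages, maxUrls = 50000) {
  const files = [];
  for (let i = 0; i < pages.length; i += maxUrls) {
    files.push(pages.slice(i, i + maxUrls));
  }
  return files;
}
```

Feed `updatedAt` from the timestamp of the last meaningful content change (a spec revision, a price update), not from the last template deploy, or lastmod stops being a useful signal.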

Crawl KPIs to track monthly

- % of HTML crawl requests reaching product/category URLs

- Reduction in parameterized URL crawl share

- Time-to-recrawl for updated pages (sitemap lastmod date vs. the next Googlebot hit in logs)
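The first two KPIs can be computed straight from a list of request paths pulled from your logs. Here is a rough sketch, assuming the list is already filtered to verified Googlebot HTML requests. The URL prefixes in `VALUE_PREFIXES` are hypothetical and need to match your own catalog layout.

```javascript
const WASTE_PARAMS = ["sort", "view", "session"];
// Hypothetical URL layout: adjust these prefixes to your own catalog.
const VALUE_PREFIXES = ["/products/", "/categories/"];

// Bucket one logged request path into waste, value, or other.
function classify(pathAndQuery) {
  const url = new URL(pathAndQuery, "https://www.example.com");
  if (WASTE_PARAMS.some((name) => url.searchParams.has(name))) return "waste";
  if (VALUE_PREFIXES.some((prefix) => url.pathname.startsWith(prefix))) return "value";
  return "other";
}

// Share of crawl requests per bucket, each between 0 and 1.
function crawlShares(paths) {
  const counts = { value: 0, waste: 0, other: 0 };
  for (const path of paths) counts[classify(path)] += 1;
  const total = paths.length || 1; // avoid dividing by zero on empty input
  return {
    valueShare: counts.value / total,
    wasteShare: counts.waste / total,
    otherShare: counts.other / total,
  };
}

console.log(
  crawlShares(["/products/a", "/products/a?sort=price", "/categories/b", "/about"])
);
```

Tracking these shares month over month shows whether the robots and canonical changes are actually moving crawl toward product and category URLs.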

Faceted Navigation—SEO-Safe Patterns for Industrial Filters

Manufacturers routinely need filters for Shore hardness (durometer), inner/outer diameter, temperature range, FDA/NSF grade, colorant, and more. The danger is combinatorial URL growth. Google’s guidance on faceted spaces remains clear: prevent infinite crawl, consolidate duplicates, and only index high-value states (Google: Managing faceted navigation crawling, 2024+). Industry patterns are well summarized in Ahrefs’ faceted navigation guide (2024).

A pragmatic approach that holds up in audits:

- Whitelist a tiny set (3–5) of indexable facets

- Only facets with proven search demand and buyer intent (e.g., “food-grade silicone tubing,” “70A durometer rubber sheet”) should be indexable.

- Give each indexable facet a self-referencing canonical and distinct content signals (title/H1, short intro, curated internal links).

- Path vs parameters

- Use tidy paths for permanent, demand-backed filters (e.g., /silicone-tubing/food-grade/). Keep combinatorial filters in query parameters controlled by robots/canonicals.

- Normalize parameter order server-side to avoid duplicates: always output ?diameter=6mm&durometer=70A&brand=acme in a consistent sequence.

- Apply controls consistently

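- Noindex low-value combinations or canonicalize near-duplicates to the parent; pick one per URL, since a page that is both noindexed and canonicalized elsewhere sends mixed signals.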

- Disallow obvious crawl-waste parameters (sort, view, session) in robots.txt; do not block pages that must serve noindex.

- Ensure empty-result filters return 404 (not 200) to avoid thin pages that attract crawl.

- Make JS/AJAX filters crawlable

- Use the History API to update URLs on filter changes (no hash-only states) and provide server-rendered HTML for indexable states. Start with Google’s JavaScript SEO basics and the official infinite scroll pattern.
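Parameter normalization and History API updates fit together naturally: normalize the URL first, then push it. A minimal sketch follows, assuming the parameter order from the example above (diameter, durometer, brand). `PARAM_ORDER`, `normalizeFacetUrl`, and `pushFilterState` are illustrative names, not part of any framework.

```javascript
// Assumed canonical order, taken from the example in the text; extend as needed.
const PARAM_ORDER = ["diameter", "durometer", "brand"];

// Rewrite a facet URL so its query parameters always appear in the same order.
function normalizeFacetUrl(rawUrl) {
  const url = new URL(rawUrl);
  const entries = [...url.searchParams.entries()];
  const rank = (name) => {
    const index = PARAM_ORDER.indexOf(name);
    return index === -1 ? PARAM_ORDER.length : index; // unknown params go last
  };
  // Known params follow PARAM_ORDER; unknown ones are sorted alphabetically.
  entries.sort(([a], [b]) => rank(a) - rank(b) || a.localeCompare(b));
  url.search = new URLSearchParams(entries).toString();
  return url.toString();
}

// Browser-only: reflect the current filter state in the address bar.
function pushFilterState(rawUrl) {
  if (typeof window === "undefined" || !window.history) return;
  window.history.pushState({}, "", normalizeFacetUrl(rawUrl));
}

console.log(
  normalizeFacetUrl(
    "https://www.example.com/silicone-tubing?brand=acme&durometer=70A&diameter=6mm"
  )
);
```

The same function can run server-side to 301 any non-normalized order to the normalized one, so both the client and the server agree on a single URL per filter state.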

Facet QA checklist

- Logs: which params siphon the most Googlebot hits?

- Crawlers: duplicate title/H1 clusters across facets; verify canonical targets.

- GSC: are facet URLs stuck as “Crawled – currently not indexed”? Tighten controls.
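To make the QA repeatable, I like to write the control rules down as one decision function that a crawler export can be checked against. The sketch below assumes demand-backed facets live on clean paths and everything combinatorial lives in query parameters. The `PRESENTATION_PARAMS` set and the returned object shape are hypothetical.

```javascript
// Parameters that only change presentation, not the result set (assumed list).
const PRESENTATION_PARAMS = new Set(["sort", "view"]);

// Expected response for a facet URL, given how many products it returns.
function facetPolicy(rawUrl, resultCount) {
  // Empty-result filters should not exist as pages at all.
  if (resultCount === 0) return { status: 404 };

  const url = new URL(rawUrl);
  const names = [...url.searchParams.keys()];
  const selfUrl = url.origin + url.pathname;

  // Clean paths (categories and whitelisted facets): indexable, self-canonical.
  if (names.length === 0) {
    return { status: 200, robots: "index, follow", canonical: selfUrl };
  }
  // Presentation-only variants: near-duplicates, canonicalized to the parent.
  if (names.every((name) => PRESENTATION_PARAMS.has(name))) {
    return { status: 200, robots: null, canonical: selfUrl };
  }
  // Any other filter combination: crawlable but kept out of the index.
  return { status: 200, robots: "noindex, follow", canonical: null };
}

console.log(facetPolicy("https://www.example.com/silicone-tubing/food-grade/", 42));
console.log(facetPolicy("https://www.example.com/silicone-tubing/?diameter=6mm&color=red", 3));
```

Comparing this expected output with what a crawl actually finds (status, meta robots, canonical) surfaces inconsistent controls quickly.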

Trade-offs to note

- Over-indexation risks duplicate content and crawl traps; over-pruning sacrifices long-tail demand. Pilot on one category, measure, then scale.

Page Speed & Core Web Vitals for Product-Heavy Pages

In March 2024 Google replaced FID with INP; current “good” thresholds at the 75th percentile are LCP ≤ 2.5 s, INP ≤ 200 ms, CLS ≤ 0.1. See web.dev on INP as a Core Web Vital (2024). The 2024 Web Almanac shows heavier images/JS on ecommerce/B2B correlating with worse CWV pass rates (Web Almanac 2024: Performance & Page Weight).
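If you collect your own RUM samples, the 75th-percentile check is easy to reproduce. The sketch below uses a nearest-rank percentile, which is an assumption on my part; CrUX and RUM vendors may interpolate differently, so expect small differences. Sample values are made up for illustration.

```javascript
// "Good" limits: LCP and INP in milliseconds, CLS unitless.
const GOOD_LIMITS = { lcp: 2500, inp: 200, cls: 0.1 };

// Nearest-rank percentile over a non-empty array of numbers.
function percentile(samples, p) {
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Rate each metric at p75; "good" means at or below the limit.
function rateVitals(samplesByMetric) {
  const report = {};
  for (const [metric, limit] of Object.entries(GOOD_LIMITS)) {
    const p75 = percentile(samplesByMetric[metric], 75);
    report[metric] = { p75, good: p75 <= limit };
  }
  return report;
}

console.log(
  rateVitals({
    lcp: [1800, 2100, 2400, 3900],
    inp: [80, 120, 250, 400],
    cls: [0.02, 0.05, 0.08, 0.3],
  })
);
```

In this made-up sample, LCP and CLS pass while INP fails at p75, which is a common pattern on filter-heavy category pages.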

LCP (largest contentful paint) playbook

- Preload critical assets and prioritize the LCP image.

- Use responsive AVIF/WebP via srcset/sizes; eliminate render-blocking CSS/JS.

- Improve TTFB with edge caching and efficient server processing. See web.dev’s top CWV techniques and optimize TTFB guidance.

HTML examples:

<!-- Preload critical CSS and font -->

<link rel="preload" as="style" href="/css/critical.css">

<link rel="preload" as="font" href="/fonts/brand.woff2" type="font/woff2" crossorigin>

<!-- LCP image with high priority and responsive sources -->

<img src="/img/hero.avif"

srcset="/img/hero-800.avif 800w, /img/hero-1200.avif 1200w, /img/hero-1600.avif 1600w"

sizes="(max-width: 1200px) 100vw, 1200px"

width="1200" height="800"

fetchpriority="high"

alt="Food-grade silicone tubing on production line"/>

INP (interaction to next paint) playbook

- Break up long tasks (>50 ms), defer non-critical JS, and prioritize input readiness. Techniques and APIs are covered in web.dev’s long task optimization and Chrome’s scheduler.yield() primer (2024).

Minimal JS example:

// Yield to the main thread between chunks so user input is handled promptly.

// getChunks() and doWork() are placeholders for your own hydration logic.

async function hydrateFilters() {

// Split big work into chunks

for (const chunk of getChunks()) {

doWork(chunk);

if ('scheduler' in window && scheduler.yield) {

await scheduler.yield();

} else {

await new Promise(r => setTimeout(r));

}

}

}

CLS (cumulative layout shift) hygiene

- Reserve space for images/cards, stabilize fonts (font-display, size-adjust), avoid late-loading banners that push content. See web.dev’s CWV overview.

Next-page speed wins

- Where applicable, Speculation Rules can prefetch/prerender likely next pages to cut perceived LCP on navigation; see the Ray-Ban case study on Speculation Rules (2024).
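A Speculation Rules block is just JSON in a script tag, so it can be generated from whatever "likely next page" signal you have. Here is a minimal sketch with hypothetical helper names and example URLs. It feature-detects support and does nothing in browsers (or server runtimes) without the API.

```javascript
// Build a list-based rule set; action is "prefetch" or "prerender".
function buildSpeculationRules(urls, action = "prefetch") {
  return { [action]: [{ source: "list", urls }] };
}

// Browser-only: inject the rules if the API is supported. Returns true on success.
function injectSpeculationRules(rules) {
  if (typeof HTMLScriptElement === "undefined") return false;
  if (!HTMLScriptElement.supports || !HTMLScriptElement.supports("speculationrules")) {
    return false;
  }
  const script = document.createElement("script");
  script.type = "speculationrules";
  script.textContent = JSON.stringify(rules);
  document.head.appendChild(script);
  return true;
}

// Example: prefetch the two products most often opened from this category.
const rules = buildSpeculationRules([
  "/silicone-tubing/food-grade/",
  "/silicone-tubing/70a-6mm/",
]);
injectSpeculationRules(rules);
```

Starting with prefetch on a short list is the cautious choice; prerender costs more bandwidth and CPU on the visitor's device, so it is worth reserving for links with a high click-through probability.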

PDFs, Spec Sheets, CAD: Indexation and Delivery Without Penalties

Industrial sites lean on non-HTML assets. Treat them explicitly.

Indexing controls

- You cannot place a meta robots tag inside a PDF. Use an HTTP header instead. Example and rationale in Google’s “Block search indexing” guide.

# For a PDF that shouldn’t be indexed

X-Robots-Tag: noindex

Consolidation and UX

- Prefer an HTML “spec page” for every key document, then canonicalize the PDF to that page using the HTTP header method in Google’s duplicate URL consolidation.
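In practice, both PDF controls come down to which response headers the file is served with. A small sketch of that decision follows, assuming a hypothetical document record with an optional `htmlEquivalent` URL and an `indexable` flag. Wire the result into whatever server or CDN rule sets your headers.

```javascript
// Decide the SEO-relevant headers for one PDF response.
function pdfResponseHeaders({ htmlEquivalent, indexable }) {
  const headers = { "Content-Type": "application/pdf" };
  if (htmlEquivalent) {
    // Consolidate signals onto the HTML spec page.
    headers["Link"] = `<${htmlEquivalent}>; rel="canonical"`;
  } else if (!indexable) {
    // No HTML equivalent and not meant for search: keep it out of the index.
    headers["X-Robots-Tag"] = "noindex";
  }
  return headers;
}

console.log(
  pdfResponseHeaders({
    htmlEquivalent: "https://www.example.com/specs/silicone-tubing",
    indexable: true,
  })
);
```

The function deliberately emits either a canonical or a noindex, never both, so each file sends one clear signal.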

Performance delivery

- Host downloads on a CDN, enable Brotli/gzip, support HTTP range requests, set Cache-Control, and trigger downloads only on user action so they don’t compete with critical page loads. See web.dev’s encoding & transfer optimization and MDN’s fast-loading guidance.

Discontinued or Out-of-Stock SKUs: Preserve Equity, Avoid Soft 404s

- Temporarily out of stock: keep the product page live and use Product structured data with Offer.availability = OutOfStock, per Google’s Product structured data documentation (2024+).

- Permanently discontinued: if you have a replacement or close equivalent, 301 to that product or the nearest relevant category. If not, return 404/410 (both acceptable; 410 can remove slightly faster). Avoid “soft 404s” that return 200 for gone content. See Google’s HTTP/network error guidance and this 2025 explainer on status codes and SEO.
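The two rules above reduce to a small lookup that the product route can call before rendering. This is a sketch under assumed names: the `state` values and the `replacementUrl` field are hypothetical, and 410 is picked here only as one of the two acceptable "gone" codes.

```javascript
// Decide the HTTP response for a SKU based on its lifecycle state.
function resolveSkuResponse(sku) {
  switch (sku.state) {
    case "active":
      return { status: 200, availability: "https://schema.org/InStock" };
    case "out_of_stock":
      // Keep the page live; only the structured data changes.
      return { status: 200, availability: "https://schema.org/OutOfStock" };
    case "discontinued":
      return sku.replacementUrl
        ? { status: 301, location: sku.replacementUrl }
        : { status: 410 };
    default:
      throw new Error(`Unknown SKU state: ${sku.state}`);
  }
}

console.log(resolveSkuResponse({ state: "out_of_stock" }));
console.log(
  resolveSkuResponse({ state: "discontinued", replacementUrl: "/silicone-tubing/70a-6mm/" })
);
console.log(resolveSkuResponse({ state: "discontinued" }));
```

Keeping this logic in one place makes it harder for a template to return 200 with a "no longer available" message, which is how soft 404s usually creep in.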

Optional JSON-LD fragment for out-of-stock signaling:

<script type="application/ld+json">

{

"@context": "https://schema.org/",

"@type": "Product",

"name": "Silicone Tubing 70A, 6mm ID",

"offers": {

"@type": "Offer",

"availability": "https://schema.org/OutOfStock"

}

}

</script>

Diagnostics Cadence and Acceptance Thresholds

Weekly

- Crawl allocation: GSC Crawl Stats vs logs (HTML vs parameters; 5xx/429 spikes).

- Indexation: “Crawled – currently not indexed” trend for products/categories and indexable facet sets.

- Performance: field data (CrUX or your own RUM) for LCP, INP, and CLS at the 75th percentile.

Monthly

- Sitemap hygiene: canonical-only, lastmod accuracy, new product coverage.

- Facet controls: verify canonical/noindex/robots consistency; empty-result filters return 404.

- Pagination/infinite scroll: crawlable pages linked; History API updates URL states.

Acceptance thresholds (pragmatic targets)

- ≥80% of Googlebot HTML hits land on product/category/indexable-facet URLs

- CWV “good” pass rate ≥75% for top revenue categories

- <5% of crawled URLs are obvious crawl-waste patterns (sort/view/session)

Toolbox

For content ops and SEO briefs, QuickCreator can streamline structured drafts and multilingual updates alongside tech fixes. Disclosure: QuickCreator is our own product. For crawling and diagnostics: Screaming Frog (fast site scans), Sitebulb (audit visualizations), and Lumar, formerly Deepcrawl (enterprise-scale monitoring). For keyword/SERP intelligence: Semrush and Ahrefs (both strong for competitive gaps). Choose based on scale, collaboration needs, and whether you require continuous monitoring or ad-hoc audits.

Common Pitfalls We See (and How to Avoid Them)

- Disallowing facet pages that also carry noindex, so Google never reads the directive. Fix: allow crawl to see noindex; block only pure crawl-waste.

- Infinite-scroll-only category views with no paginated URLs. Fix: progressive enhancement with discoverable ?page=N and History API updates per Google’s pattern.

- Indexing PDFs instead of HTML spec pages, fragmenting link equity. Fix: publish HTML equivalents and canonicalize PDFs via HTTP header.

- Shipping unbounded JS to product pages, tanking INP. Fix: split work, defer non-critical scripts, and apply scheduler.yield().

Final Checklist You Can Run This Week

Crawl budget

- [ ] Robots rules align with noindex strategy; parameters audited in logs

- [ ] Sitemaps: canonical-only, accurate lastmod, split and compressed

- [ ] Pagination discoverable; infinite scroll uses progressive enhancement

Faceted navigation

- [ ] 3–5 indexable facets per family; self-canonicals and unique copy

- [ ] Parameter normalization; sort/view disallowed; empty facets 404

- [ ] JS filter URLs use History API; SSR/hydration for indexable states

Page speed & assets

- [ ] LCP image prioritized; critical CSS/fonts preloaded; AVIF/WebP

- [ ] Long tasks broken up; async/defer; input responsiveness profiled

- [ ] PDFs/CAD: X-Robots-Tag where appropriate; CDN + range requests

Discontinued SKUs

- [ ] OOS signaled in Product schema; discontinued SKUs return a 301 or 404/410

Ship these changes in small batches, validate with logs and field data, and iterate. That’s how industrial catalogs compound traffic without courting crawl traps.