Top LLM Tracking Tools for AI Visibility (2025)

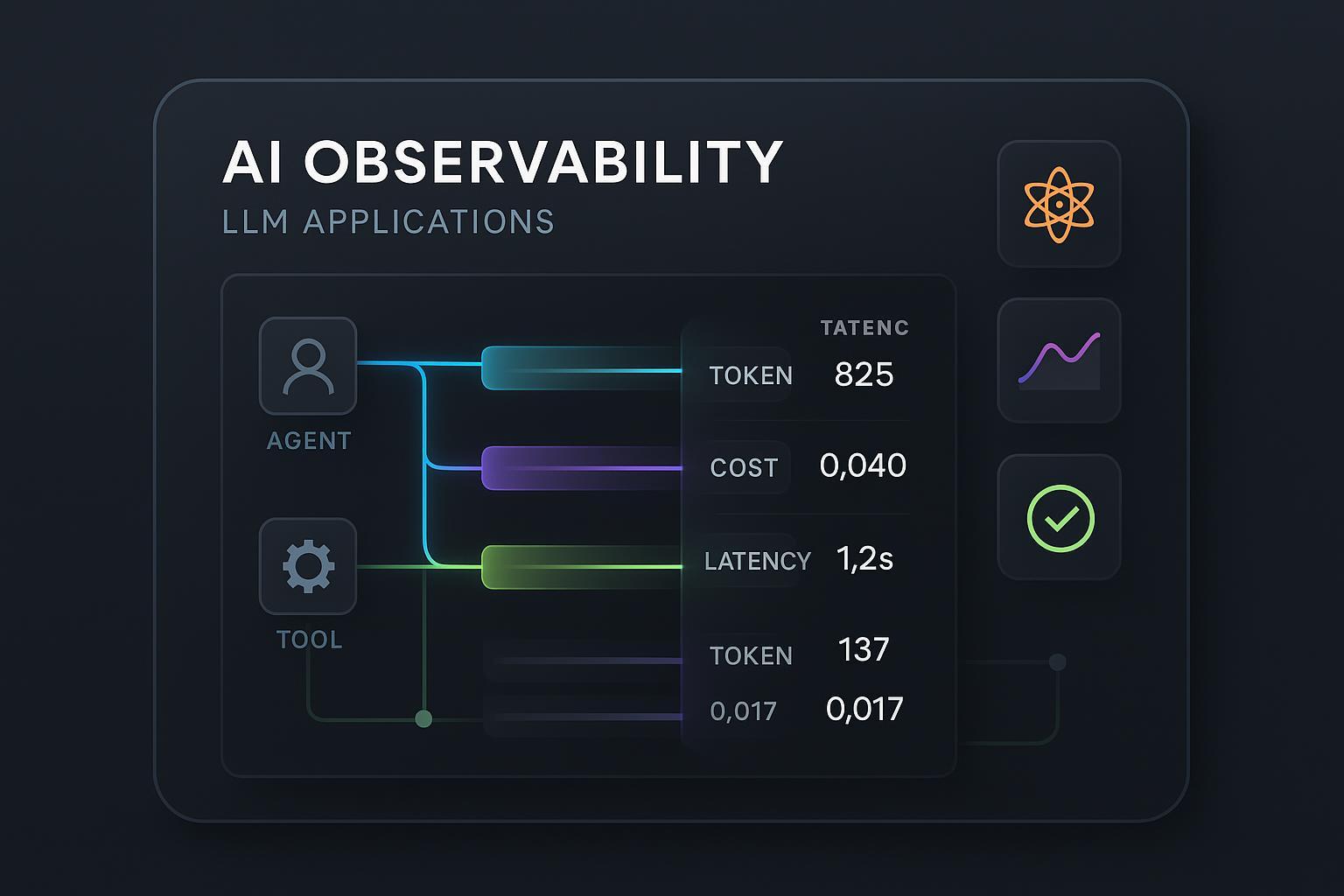

LLM features have outgrown simple prompt logs. In 2025, teams need end‑to‑end visibility across agents, tools, and RAG pipelines—complete with traces, cost/latency telemetry, evaluations, and compliance-ready audit trails. If you ship AI apps, the right tracking stack helps you catch regressions early, lower inference spend, and move faster without risking quality.

If your AI work overlaps with content and SEO workflows, you’ll also benefit from tight quality feedback loops alongside observability. For a primer on balancing AI writing quality with performance, see this practical overview of AI writing tools for SEO. And if you’re new to the space, this explainer on AI-generated content and where it’s headed sets helpful context for why measurement and guardrails matter.

How we chose (criteria we weighted)

We compared tools against real production needs:

- Capability coverage (tracing depth, evals, guardrails)

- Developer experience & integrations (SDKs, LangChain/LangGraph, OpenTelemetry alignment)

- Hosting & compliance (self‑host vs. SaaS, data controls)

- Cost/value (pricing clarity, free/OSS options)

- Reliability/community (docs, release cadence, adoption)

- Collaboration & workflow UX (feedback loops, experiment ops)

For background on the landscape, see Coralogix’s industry overview of LLM observability tools (2025, Coralogix). Below, picks are grouped by “best for” so you can shortlist quickly.

The short list: best LLM tracking tools by use case (2025)

1) Langfuse — Best open source foundation for full‑stack LLM observability

- Snapshot: OSS platform covering traces, evaluations, datasets, and feedback; cloud option available.

- Strengths

  - Clear, extensible data model for spans across generations, tools, retrievers, and evaluators.

  - Built‑in experiments and datasets enable repeatable regression testing.

  - Self‑host for data control; growing ecosystem integrations.

- Limitations

  - You manage infra at scale if self‑hosting.

  - Cloud pricing details are less prominent than some SaaS peers.

- Best for: Teams needing OSS + deep tracing/eval; data‑sensitive deployments.

- Not for: Orgs wanting fully managed, turnkey SaaS with fixed SLAs out of the box.

- Evidence: Langfuse’s 2024 documentation on the observability data model (Langfuse, 2024) details spans for multi‑step systems.

2) LangSmith — Best for LangChain/LangGraph apps and agent debugging

- Snapshot: LangChain’s managed platform for tracing, datasets, and evaluations; strong LangGraph Studio UX.

- Strengths

  - First‑class support for LangChain/LangGraph debugging and test datasets.

  - Clean dashboards for latency/cost/quality; supports OTel ingestion patterns.

- Limitations

  - Closed‑source; self‑hosting generally limited to enterprise special deployments.

  - Usage‑based retention can add cost as volume grows.

- Pricing note (subject to change): As of 2025, public tiers outline free and paid seats with retention add‑ons; see the official LangChain pricing page (2025, LangChain) for details.

- Best for: Teams already standardized on LangChain/LangGraph.

- Not for: Orgs requiring OSS or straightforward self‑host from day one.

3) Helicone — Best for cost‑centric analytics and easy gateway‑style logging

- Snapshot: Gateway + observability layer with request logging, cost tracking, caching, alerts; OSS core with cloud service.

- Strengths

  - Quick drop‑in for unified logging across providers; generous free tier to start.

  - Emphasis on cost visibility and alerting; handy caching to reduce spend.

- Limitations

  - Compliance and tier nuances should be confirmed on official pages.

  - Some enterprise features may require paid plans.

- Best for: Teams that want simple setup, cost insights, and routing/caching wins.

- Not for: Deep, bespoke evaluation pipelines without extra tooling.

- Evidence: Helicone’s 2024–2025 write‑ups comparing platforms and describing pricing/capabilities, e.g., the roundup of LangSmith alternatives (2024, Helicone).

4) Arize Phoenix — Best OSS for RAG diagnostics and OTel‑aligned tracing

- Snapshot: Open‑source toolkit with tracing and specialized RAG evaluation (groundedness, context relevance).

- Strengths

  - Useful diagnostics for retrieval pipelines; supports human/LLM feedback loops.

  - OpenTelemetry alignment fits enterprise observability standards.

- Limitations

  - More setup than fully managed SaaS; deepest features pair with the broader Arize platform.

- Best for: Data/ML teams needing RAG‑specific evals and OTel‑friendly traces.

- Not for: Teams wanting a hosted, point‑and‑click experience only.

- Evidence: The Phoenix documentation (Arize, 2024) details RAG and tracing workflows.

5) Traceloop OpenLLMetry — Best standards‑first approach on OpenTelemetry

- Snapshot: Open‑source semantic conventions and SDKs to instrument LLM spans, tokens, and costs into any OTel backend.

- Strengths

  - Vendor‑neutral; works with Jaeger, New Relic, Datadog, and other OTel targets.

  - Multi‑language SDKs and provider integrations; extensible for custom spans.

- Limitations

  - You must run or adopt an observability backend; fewer out‑of‑the‑box eval dashboards.

- Best for: Platform teams standardizing on OTel.

- Not for: Teams seeking a fully packaged UI with built‑in evals.

- Evidence: The official GitHub repository, openllmetry (2024, Traceloop), documents the conventions and SDKs.

6) HoneyHive — Best enterprise eval + HITL review workflows

- Snapshot: Evaluation‑forward platform with distributed tracing, human‑in‑the‑loop review modes, and enterprise deployment options.

- Strengths

  - Review Mode and dataset curation accelerate cross‑functional QA.

  - Deployment flexibility (SaaS, single‑tenant, on‑prem/VPC) for strict environments.

- Limitations

  - Closed‑source, sales‑led pricing.

- Best for: Enterprises formalizing AI QA with human + automated evaluators.

- Not for: Budget‑constrained teams that prefer OSS or transparent pricing.

- Evidence: HoneyHive’s funding post, “HoneyHive raises $7.4M” (2024, HoneyHive), and its product materials describe enterprise options and eval workflows.

7) Humanloop — Best for versioned prompts/flows with production feedback

- Snapshot: Code‑ and UI‑first platform for versioned prompts, evaluators, and flows, with production tracing and feedback capture.

- Strengths

  - Strong version discipline across prompts/tools/evaluators.

  - Options for managed cloud and self‑hosted runtimes.

- Limitations

  - Pricing and compliance details are less public; expect sales engagement.

- Best for: Teams blending prompt ops, evals, and production feedback loops.

- Not for: Those wanting OSS or purely gateway‑style logging.

- Evidence: The Humanloop GA announcement (2024, Humanloop) outlines capabilities for evals and production tracing.

8) Weights & Biases Weave — Best if you already use W&B for MLOps

- Snapshot: Lightweight LLM eval/monitor toolkit integrated with the broader W&B platform; includes safety guardrails.

- Strengths

  - Safety scorers (toxicity, PII, hallucinations) and dataset‑from‑traces workflows.

  - Benefits from W&B ecosystem, enterprise support, and integrations.

- Limitations

  - Can be hefty if you only need simple tracing; enterprise‑leaning pricing.

- Best for: Orgs standardizing on W&B across ML/LLM life cycles.

- Not for: Small teams needing only basic logs and cost tracking.

- Evidence: The W&B product page for Weave (2025, Weights & Biases) highlights guardrails and evaluation features.

9) Gentrace — Best for CI/CD‑style testing and release gates

- Snapshot: Purpose‑built environment for large‑scale LLM test suites, run history, experiments, and hallucination checks.

- Strengths

  - Designed to run thousands of evals in minutes; strong experiments UI.

  - Case studies report substantial test cadence improvements.

- Limitations

  - More focused on testing than general‑purpose tracing.

- Best for: Teams adding test gates to CI/CD for prompts, RAG params, and models.

- Not for: Those seeking a logging gateway or self‑hosted OSS.

- Evidence: The Quizlet + Gentrace case study (2024, Gentrace) reports dramatic cycle time improvements.

10) Portkey — Best gateway/control plane with observability and governance

- Snapshot: Unified API/gateway with routing, retries/failover, semantic caching, cost tracking, and governance features.

- Strengths

  - Centralized policy/routing with observability; supports hybrid/on‑prem data planes.

  - Useful for multi‑provider strategies and reliability.

- Limitations

  - Pricing is less transparent publicly; enterprise features are gated.

- Best for: Platform teams seeking control‑plane reliability plus cost/usage insights.

- Not for: Teams that only need lightweight logs without routing policies.

- Evidence: The enterprise and hybrid docs describe deployment options, including hybrid (customer data plane) (2025, Portkey).

Also consider (platform observability entrants)

- Datadog LLM Observability — If your org already centralizes infra and APM in Datadog, its LLM features let you track prompts, tokens, and model costs alongside existing telemetry; see the official LLM Observability documentation (2025, Datadog).

- Coralogix LLM Observability — For teams invested in Coralogix, the AI Center adds span‑level LLM traces and cost metrics on top of unified logs/metrics/traces; their 2025 LLM observability tools guide (2025, Coralogix) explains positioning and capabilities.

Quick comparison table (at a glance)

| Tool | Best For | Pros | Cons | Hosting |
|---|---|---|---|---|
| Langfuse | OSS full‑stack tracing + evals | Deep model; experiments; self‑host | Run your infra; cloud price clarity | OSS + Cloud |
| LangSmith | LangChain/Graph teams | Tight ecosystem fit; solid dashboards | Closed‑source; retention costs | SaaS (enterprise special deploy) |
| Helicone | Cost‑centric analytics; gateway | Easy drop‑in; caching; alerts | Confirm compliance/tier details | OSS + Cloud |
| Arize Phoenix | RAG diagnostics; OTel | Groundedness/context evals | Heavier setup than SaaS | OSS (hosted options via Arize) |
| OpenLLMetry | OTel‑first standards | Vendor‑neutral; multi‑lang SDKs | Bring your own backend; fewer eval UIs | OSS |
| HoneyHive | Enterprise evals + HITL | Review Mode; deployment choices | Opaque pricing; closed‑source | SaaS / Single‑tenant / On‑prem |
| Humanloop | Versioned prompts/flows | Strong versioning; prod feedback | Pricing less public | Cloud + self‑hosted runtime |
| W&B Weave | W&B‑centric MLOps | Guardrails; ecosystem | Overkill for simple needs | SaaS/Enterprise |
| Gentrace | CI/CD test gates | High‑scale evals; experiments | Not a general tracer | SaaS |
| Portkey | Gateway + governance | Routing/failover; hybrid/on‑prem | Pricing opaque | SaaS/Hybrid/On‑prem |

Choosing guide: What’s “best” for your stack?

- Need OSS and deep traces/evals with control of data? Start with Langfuse or Arize Phoenix. If your org already runs OTel at scale, OpenLLMetry is a natural, standards‑first extension.

- Living in the LangChain/LangGraph world? LangSmith’s tight integration and LangGraph Studio save hours in debugging and dataset‑driven evals.

- Fighting model spend and wanting faster wins? A gateway‑style layer like Helicone or Portkey can unify logs, add caching, and surface cost anomalies quickly.

- Building rigorous release workflows? Combine a tracer with a testing workbench like Gentrace to create CI/CD gates for prompts, RAG parameters, and model changes.

- Enterprise platform already selected for observability? Consider staying in‑estate with Datadog or Coralogix to centralize dashboards and controls.
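To make the release-workflow option above concrete, here is a minimal, vendor-neutral sketch of a CI/CD eval gate. It does not use any listed tool's API: `EvalResult`, `pass_rate`, `gate_release`, and the threshold values are hypothetical names and numbers chosen for illustration, and the scores are assumed to come from whatever evaluator you already run.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """One scored test case from an eval run (hypothetical schema)."""
    case_id: str
    score: float  # assumed range 0.0 to 1.0, e.g. from an LLM-as-judge check


def pass_rate(results: list[EvalResult], min_score: float) -> float:
    """Fraction of cases whose score meets the per-case minimum."""
    if not results:
        return 0.0
    passed = sum(1 for r in results if r.score >= min_score)
    return passed / len(results)


def gate_release(
    baseline: list[EvalResult],
    candidate: list[EvalResult],
    min_score: float = 0.7,        # assumed per-case pass threshold
    max_regression: float = 0.02,  # assumed tolerated drop in pass rate
) -> bool:
    """Allow the release only if the candidate's pass rate has not dropped
    more than `max_regression` below the baseline's pass rate."""
    base = pass_rate(baseline, min_score)
    cand = pass_rate(candidate, min_score)
    return cand >= base - max_regression
```

In a pipeline, a step would typically run the eval suite against the current production prompt (the baseline) and the proposed change (the candidate), then fail the build when `gate_release` returns `False`.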

Pro tip for content teams: Pair evaluation tools with human review on top‑priority pages. A light human‑in‑the‑loop (HITL) pass can reduce hallucinations and tone issues, and tools like a simple AI humanizer for copy review are a practical complement to automated scores.

FAQ

- What’s the difference between “tracing” and “evaluation” in LLM apps?

  - Tracing captures what happened (prompts, tool calls, latencies, costs). Evaluation measures how well it worked (quality, groundedness, policy compliance) via metrics, LLM‑as‑judge, and human reviews.

- Do I need OpenTelemetry (OTel)?

  - Not required, but OTel makes LLM spans a first‑class part of your overall observability. If you already run OTel, tools like OpenLLMetry integrate cleanly.

- Self‑host vs. SaaS?

  - Self‑hosting offers data control and cost predictability but adds ops overhead. SaaS gives speed and managed reliability but may raise retention and PII considerations.

- How do I control costs?

  - Add request‑level logging, sampling, caching, and alerts. Track spend by model, route, and tenant. Gate releases with evals to avoid costly regressions hitting production.
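As a rough illustration of tracking spend by model, route, and tenant, the sketch below aggregates request-level logs and flags anything over a budget. Everything in it is an assumption made for the example: the `RequestLog` fields, the model names, and especially the per-token prices in `PRICES_PER_1K`, which are placeholders rather than any provider's real rates.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RequestLog:
    """One logged LLM request (hypothetical schema)."""
    model: str
    route: str
    tenant: str
    input_tokens: int
    output_tokens: int


# Placeholder USD prices per 1,000 tokens as (input, output).
# These are made-up numbers, not real provider pricing.
PRICES_PER_1K = {
    "model-small": (0.001, 0.002),
    "model-large": (0.01, 0.03),
}


def request_cost(log: RequestLog) -> float:
    """Cost of a single request from its token counts."""
    input_price, output_price = PRICES_PER_1K[log.model]
    return (log.input_tokens / 1000) * input_price + (log.output_tokens / 1000) * output_price


def spend_by(logs: list[RequestLog], key: str) -> dict[str, float]:
    """Total spend grouped by one dimension: 'model', 'route', or 'tenant'."""
    totals: dict[str, float] = defaultdict(float)
    for log in logs:
        totals[getattr(log, key)] += request_cost(log)
    return dict(totals)


def over_budget(totals: dict[str, float], budget_usd: float) -> list[str]:
    """Names whose total spend exceeds the budget, sorted for stable alerts."""
    return sorted(name for name, usd in totals.items() if usd > budget_usd)
```

Calling `over_budget(spend_by(logs, "tenant"), budget_usd=50.0)` on a day's logs would give a list of tenants to alert on; the same two calls with `"model"` or `"route"` cover the other dimensions.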

Next steps

- Pick one tracer (Langfuse/LangSmith/Helicone) and one evaluator (Phoenix/Gentrace/HoneyHive) to pilot on a narrow workflow. Instrument a small dataset, define success metrics, and wire up alerting.

- Standardize spans across tools/agents early so you don’t lose observability in agentic paths.

- Add a basic QA check for high‑impact surfaces (RAG answers, public‑facing copy) and monitor both cost and quality weekly.
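One way to read "standardize spans" from the steps above is to agree on a single span shape that every agent, tool, and retriever call must fill in. The sketch below is a plain-Python illustration under that assumption. The `Span` class, its fields, and the `ALLOWED_KINDS` set are hypothetical and are not the schema of OpenTelemetry or of any tool listed here; in practice you would map these fields onto whichever tracer you pilot.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Optional

# Assumed set of span kinds for this example; adjust to your own stack.
ALLOWED_KINDS = {"agent", "generation", "tool", "retriever", "evaluator"}


@dataclass
class Span:
    """A shared span shape for every step in an agentic path (hypothetical)."""
    trace_id: str
    name: str
    kind: str
    parent_id: Optional[str] = None
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    start_time: float = field(default_factory=time.time)
    attributes: dict = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject unknown kinds early so dashboards never see ad hoc labels.
        if self.kind not in ALLOWED_KINDS:
            raise ValueError(f"unknown span kind: {self.kind!r}")

    def child(self, name: str, kind: str, **attributes) -> "Span":
        """Create a nested span that inherits the trace and links to its parent."""
        return Span(
            trace_id=self.trace_id,
            name=name,
            kind=kind,
            parent_id=self.span_id,
            attributes=attributes,
        )


# Usage: an agent step that calls a tool stays linked within one trace.
root = Span(trace_id="trace-001", name="support-agent", kind="agent")
lookup = root.child("search_docs", "tool", query="refund policy")
```

Because every child carries the parent's `trace_id` and `span_id`, a multi-step agent run can be reassembled into a tree even when the steps are logged by different components.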

If your team also ships AI‑generated articles, landing pages, or SEO content, you can streamline publishing while keeping quality standards inside one workspace using QuickCreator. Disclosure: QuickCreator is our product.