AI Visibility vs Topical Authority: How to Track Brand Authority in AI Search (2025)

AI search has changed where and how people encounter brands. In 2025, Google AI Overviews/AI Mode, Bing Copilot Search, Perplexity, and ChatGPT can answer questions directly—often with citations—reducing classic clicks and shifting what “visibility” means. This guide clarifies the difference between AI Visibility and Topical Authority and shows how to measure both without chasing vanity metrics.

Quick definitions (and why they matter now)

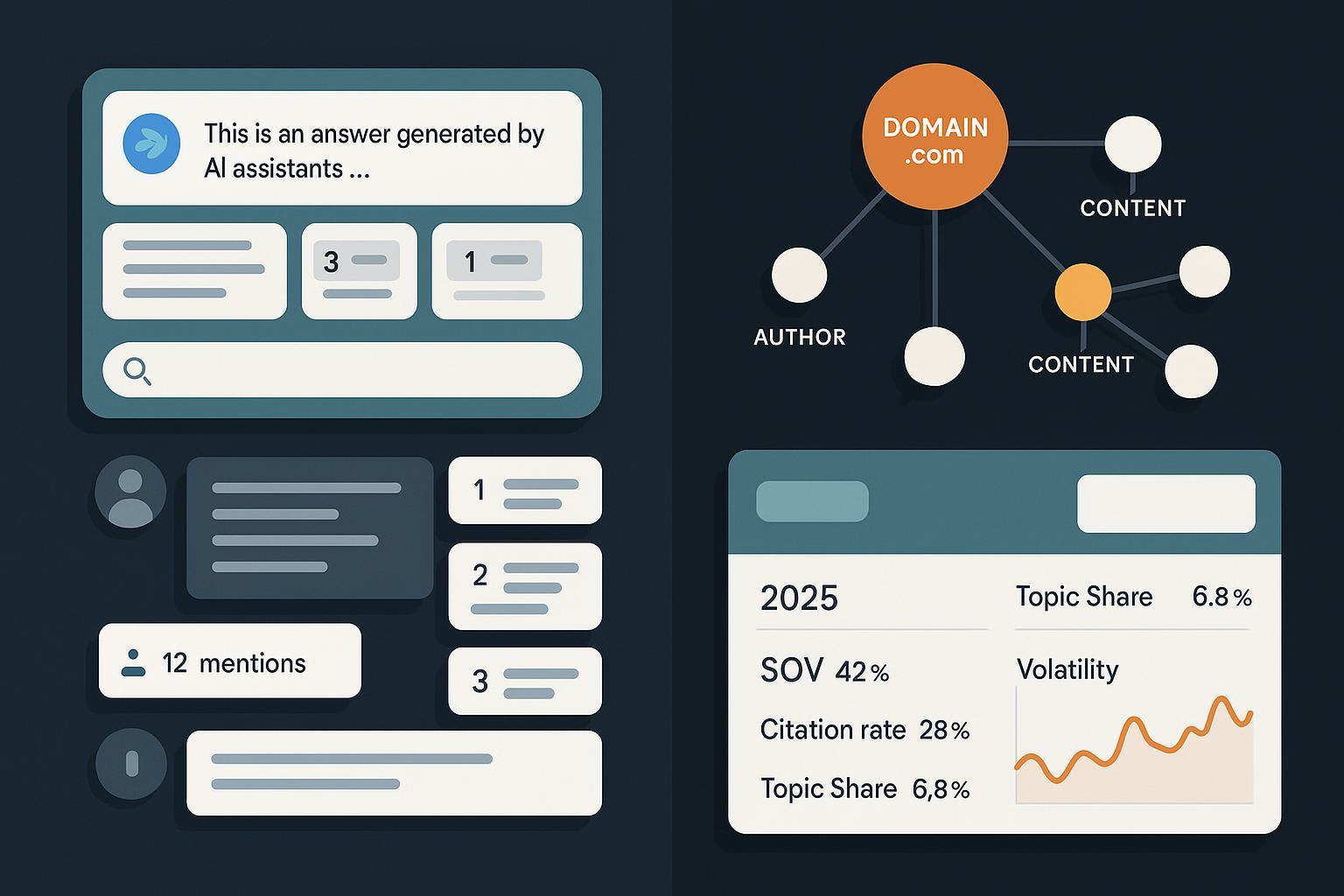

- AI Visibility: Your brand’s presence, mentions, and citations inside AI-generated answers across engines like Google AI Overviews/AI Mode, Bing Copilot Search, Perplexity, and ChatGPT. Think: “How often and how prominently do these systems select and attribute us?”

- Topical Authority: The depth, breadth, and credibility you’ve established on a subject via comprehensive cluster coverage, a strong internal link graph, clear entity signals, and E-E-A-T.

Google’s documentation on AI features describes how pages are included in AI answers but provides no official reporting API for AI Overviews. That gap is why practitioners have to build their own measurement and rely on third-party trackers. See the 2025 Search Central guidance, Google Search Central: AI features and your website.

Side-by-side at a glance

| Dimension | AI Visibility | Topical Authority |
|---|---|---|
| What it measures | Selection/attribution in AI answers now | Depth/credibility across a topic cluster |
| Time horizon | Short to mid term | Mid to long term |
| Core inputs | Extractable answers, freshness, citations, sentiment | Cluster completeness, internal linking, entity clarity, E-E-A-T |
| Typical KPIs | AI SOV, citation rate, prominence, volatility, sentiment | Topic Share, coverage depth, link graph strength, entity/E-E-A-T signals |
| Biggest risk | Optimizing to volatile prompts | Moving slowly without near-term wins |

They are complementary: topical authority makes you eligible and resilient; AI visibility tells you whether you’re being chosen and credited today.

The reality check: AI surfaces are changing clicks

Multiple 2025 studies suggest that AI summaries reduce clicks on traditional results. A July 2025 analysis found that users who see an AI summary are less likely to click links than those who don’t; see the Pew Research finding on lower clicks with AI summaries (2025). An April 2025 review of a large dataset reported that position-one CTR was 34.5% lower on queries with AI Overviews; see the Ahrefs analysis of AI Overviews reducing clicks (2025). And AI Overviews aren’t rare: in March 2025 they were observed in 13.14% of queries, mostly informational ones, per the Semrush AI Overviews study (2025).

Implication: You need to track both your presence in AI answers and the enduring signals of authority that help you earn citations consistently.

KPI matrix: what to measure for both lenses

AI Visibility KPIs

- AI Share of Voice (SOV) across prompt sets

- Citation presence and frequency by surface

- Citation prominence/order within the answer

- Answer sentiment/tone (where discernible)

- Volatility index (week-over-week changes)

- Downstream brand-demand indicators (branded search, direct traffic, assisted conversions)
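
The first three KPIs above can be computed from a plain observation log. The following is a minimal sketch, assuming each logged answer records the cited domains in the order they appear. The `Observation` shape, the domain names, and the choice to define SOV as a domain's share of all citations are illustrative assumptions, not an industry standard.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """One logged AI answer for one prompt on one engine (hypothetical shape)."""
    engine: str
    prompt: str
    cited_domains: list  # domains in the order the answer cites them


def ai_share_of_voice(observations):
    """Assumed definition: each domain's share of all logged citations."""
    counts = Counter(d for o in observations for d in o.cited_domains)
    total = sum(counts.values())
    return {d: n / total for d, n in counts.items()} if total else {}


def citation_rate(observations, domain):
    """Fraction of logged answers that cite the domain at least once."""
    if not observations:
        return 0.0
    hits = sum(domain in o.cited_domains for o in observations)
    return hits / len(observations)


def mean_citation_position(observations, domain) -> Optional[float]:
    """Average 1-based position of the domain's first citation; None if never cited."""
    positions = [o.cited_domains.index(domain) + 1
                 for o in observations if domain in o.cited_domains]
    return sum(positions) / len(positions) if positions else None


# Made-up sample log: two prompts checked on two engines.
log = [
    Observation("perplexity", "what is topical authority", ["example.com", "rival.com"]),
    Observation("perplexity", "how to track ai citations", ["rival.com"]),
    Observation("chatgpt", "what is topical authority", ["rival.com", "example.com"]),
    Observation("chatgpt", "how to track ai citations", []),
]
sov = ai_share_of_voice(log)
```

With this sample log, `example.com` holds 40% of citations, is cited in half of the answers, and has a mean first-citation position of 1.5. Filtering `log` by `engine` before calling the functions gives the per-surface view.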

Topical Authority KPIs

- Topic Share per cluster (your share of organic traffic within a topic)

- Coverage completeness (pillar + subtopics, freshness)

- Internal link graph strength (hub → spoke relevance and density)

- Entity clarity and consistency (schema.org Organization/Person, FAQs, NER alignment)

- E-E-A-T (authorship credentials, first-hand data, transparent sourcing)

- High-quality, niche-relevant mentions/backlinks and knowledge panel presence where applicable
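
Topic Share, as defined in the list above, is a simple ratio once you have traffic estimates per cluster. This sketch assumes you can export estimated organic traffic per domain for each cluster's keywords from an SEO suite; the cluster names and numbers are invented for illustration.

```python
def topic_share(traffic_by_domain, domain):
    """Domain's share of the estimated organic traffic within one topic cluster."""
    total = sum(traffic_by_domain.values())
    return traffic_by_domain.get(domain, 0.0) / total if total else 0.0


# Hypothetical monthly traffic estimates per cluster (illustrative numbers only).
clusters = {
    "ai-visibility": {"example.com": 1200, "rival.com": 2800},
    "topic-clusters": {"example.com": 900, "rival.com": 600, "other.com": 1500},
}
shares = {name: topic_share(traffic, "example.com")
          for name, traffic in clusters.items()}
```

Both sample clusters come out at a 30% share for `example.com`. Because traffic estimates differ between tools, the trend over time is likely more informative than the absolute figure.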

Compact mapping table

| KPI | Primary Lens | Where it shows up |
|---|---|---|
| AI SOV (by engine) | AI Visibility | Google AIO/AI Mode, Bing Copilot Search, Perplexity, ChatGPT |
| Citation rate + prominence | AI Visibility | Engine-specific answer modules |
| Sentiment | AI Visibility | Answer tone classification/logging |
| Topic Share | Topical Authority | Analytics + SEO suites, per cluster |
| Coverage depth | Topical Authority | Content inventory audits |
| Entity/E-E-A-T | Topical Authority | Schema, bios, first-party data, references |

Platform-by-platform tracking routines (2025)

Google AI Overviews / AI Mode

- What’s official: Google describes AI Search features but offers no AI Overview citation reporting/API as of 2025-10-18; refer to Google Search Central — AI features and your website (2025).

- Why track: Industry studies associate AI Overviews with reduced CTR and evolving prevalence (see the 2025 studies above). A blended measurement approach matters.

- How to track in practice:

  - Build a representative prompt/keyword slate per topic cluster; sample weekly.

  - Log AIO trigger/no-trigger, cited domains, citation order, and answer sentiment. Capture screenshots and timestamps.

  - Where acceptable, use trackers that report AIO triggers/citations (coverage varies and changes quickly). An industry study and vendor documentation in 2024–2025 reference tools like seoClarity, Advanced Web Ranking, Semrush signals, ZipTie, Keyword.com, thruuu, and Otterly; see the Search Engine Land analysis of AI search citations across 11 industries (2025) and this Keyword.com guide to tracking AI Overviews (2025).
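
The logging step above is easier to keep consistent with a fixed record format. Here is one possible sketch for a manual panel, writing one JSON object per line. The `AioCheck` fields, the file layout, and the screenshot path are assumptions chosen for illustration; adapt them to whatever you actually capture.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AioCheck:
    """One manual check of one keyword (hypothetical record shape)."""
    keyword: str
    triggered: bool                       # did an AI Overview appear?
    cited_domains: list = field(default_factory=list)  # in citation order
    sentiment: str = "n/a"                # analyst's label, where discernible
    screenshot_path: str = ""
    checked_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_jsonl_line(check):
    """Serialize one check as a single JSON line."""
    return json.dumps(asdict(check), ensure_ascii=False)


def append_check(path, check):
    """Append the check to a JSON Lines evidence log."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(to_jsonl_line(check) + "\n")


check = AioCheck(
    keyword="what is topical authority",
    triggered=True,
    cited_domains=["example.com", "rival.com"],
    sentiment="neutral",
    screenshot_path="evidence/topical-authority.png",  # made-up path
)
line = to_jsonl_line(check)
```

An append-only log with UTC timestamps keeps each weekly sample comparable and leaves an audit trail when results are questioned later.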

Bing Copilot Search

- What’s official: Microsoft presents Copilot Search summaries that include references/links.

- Programmatic note: Third-party providers report an ai_overview object exposed via the Bing SERP API; treat as credible but validate periodically because Microsoft hasn’t published a detailed schema page. See the DataForSEO update on Copilot Search in Bing SERP API (2025).

- How to track in practice:

  - Maintain a prompt slate and run periodic manual panels; log summary presence and citations.

  - If your toolchain ingests the Bing SERP API, capture and annotate ai_overview source references where available.

  - Triangulate with downstream indicators (Bing Webmaster Tools, brand search, direct traffic, conversions).
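
Because no detailed schema is published for the ai_overview object, any parser should be defensive. The sketch below deliberately assumes very little: it walks an arbitrary parsed JSON payload, looks for objects either stored under a key named `ai_overview` or carrying `"type": "ai_overview"`, and collects string values found under keys named `url`. Those key names and the sample payload are assumptions for illustration only; verify them against the responses your provider actually returns.

```python
from typing import Any, Iterator


def _walk(node: Any) -> Iterator[dict]:
    """Yield every dict nested anywhere inside a parsed JSON value."""
    if isinstance(node, dict):
        yield node
        for value in node.values():
            yield from _walk(value)
    elif isinstance(node, list):
        for item in node:
            yield from _walk(item)


def ai_overview_urls(payload: Any) -> list:
    """Collect URLs inside ai_overview-like objects, de-duplicated in order."""
    blocks = []
    for obj in _walk(payload):
        if obj.get("type") == "ai_overview":        # assumed marker
            blocks.append(obj)
        if isinstance(obj.get("ai_overview"), (dict, list)):  # assumed key
            blocks.append(obj["ai_overview"])
    urls = []
    for block in blocks:
        for obj in _walk(block):
            url = obj.get("url")                    # assumed field name
            if isinstance(url, str) and url not in urls:
                urls.append(url)
    return urls


# Invented payload; a real response may be shaped differently.
sample = {
    "items": [
        {"type": "organic", "url": "https://rival.com/guide"},
        {"type": "ai_overview", "references": [
            {"url": "https://example.com/topical-authority"},
            {"url": "https://rival.com/ai-visibility"},
        ]},
    ]
}
urls = ai_overview_urls(sample)
```

On the sample, only the two URLs inside the ai_overview item are returned; the organic result is ignored. If the function starts returning an empty list for queries where you can see a summary manually, that is a signal the payload shape has changed.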

Perplexity

- Behavior: Perplexity explicitly shows inline citations to sources; this is integral to its design, per the Perplexity “Getting started” explanation of real-time search with sources.

- Terms: Automated collection is restricted; align monitoring with the Acceptable Use Policy and API Terms.

- How to track in practice:

  - Build fixed Q&A panels per topic; perform ToS-compliant checks on a regular cadence and record citations/frequency.

  - If you have permitted API access, log citation presence across prompts and competitors; annotate changes after content updates/PR wins.

  - Where feasible, add UTMs to cited pages to observe referral quality.

ChatGPT (with Search/Browsing)

- Behavior: ChatGPT Search provides timely answers with links to relevant sources; there’s no public “brand-mention API.”

- How to track in practice:

  - Use controlled prompt panels; record whether/when you’re cited and how prominently.

  - Re-test after major content and PR updates to observe shifts.

  - Attribute impact with downstream signals (brand queries, direct traffic, assisted conversions) rather than expecting native visibility reports.
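
One way to relate panel results to downstream signals is a simple lagged correlation between weekly AI SOV and a brand-demand series. The sketch below is illustrative only: the weekly figures are invented, the one-week lag is an assumption, and with so few data points a correlation is a directional hint rather than evidence of causation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


def lagged_correlation(sov, demand, lag_weeks):
    """Correlate SOV in week t with demand in week t + lag_weeks."""
    if lag_weeks:
        sov, demand = sov[:-lag_weeks], demand[lag_weeks:]
    return pearson(sov, demand)


# Invented weekly series for illustration.
weekly_sov = [0.10, 0.12, 0.15, 0.14, 0.18, 0.21]
branded_searches = [400, 410, 430, 470, 460, 520]
r_same_week = lagged_correlation(weekly_sov, branded_searches, 0)
r_one_week_lag = lagged_correlation(weekly_sov, branded_searches, 1)
```

Comparing a few lags side by side helps show whether brand demand tends to move after visibility changes or alongside them.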

Building the measurement stack and governance

- Quarterly re-baselining: Refresh your prompt sets and topic clusters every quarter to reflect seasonality and model updates.

- Evidence log: Keep a time-stamped repository of screenshots/exports for all engines.

- Dashboards: Unite AI SOV, citation rates, and volatility with Topic Share, coverage depth, and entity/E-E-A-T status.

- ToS compliance check: Formalize guardrails for Perplexity and other engines; prefer official APIs and manual panels over scraping.

- Execution workflows: Strong topical authority depends on cluster completeness and consistent structure. If you need a primer, see how to design topic clusters and orchestrate end-to-end AI content workflows that reinforce entity clarity and E-E-A-T.
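
The dashboard item above includes volatility, which this guide leaves undefined. One simple definition, assumed here rather than taken from any vendor, is the mean absolute week-over-week change in AI SOV:

```python
def week_over_week_changes(series):
    """Differences between consecutive weekly values."""
    return [curr - prev for prev, curr in zip(series, series[1:])]


def volatility_index(series):
    """Assumed definition: mean absolute week-over-week change."""
    changes = week_over_week_changes(series)
    return sum(abs(c) for c in changes) / len(changes) if changes else 0.0


# Invented weekly SOV values for one engine and one cluster.
weekly_sov = [0.20, 0.25, 0.15, 0.20]
vol = volatility_index(weekly_sov)
```

For the sample series the index is about 0.067, meaning SOV moved roughly 6.7 percentage points per week on average even though it ended where it started. Tracking it per engine makes it easier to see which surfaces need more frequent sampling.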

Scenario playbooks

- Present in AIO but low clicks

  - Improve on-page extractability (concise answer blocks, schema), add value props near cited sections, and test title/intro variations that attract qualified clicks from AI surfaces.

- Absent from AIO but ranking well in classic results

  - Audit entity clarity (Organization/Person schema, consistent names), expand coverage gaps in subtopics, and add scannable answer sections that LLMs can quote.

- Competitors dominate Perplexity/ChatGPT citations

  - Publish first-party data and updated how-tos; strengthen digital PR in niche publications; ensure author bios show real experience and credentials.

- Agency and portfolio management

  - Standardize prompt sets per client, unify evidence logs, and report “AI SOV” alongside Topic Share. Establish re-test cadences after content releases and PR pushes.

- Multilingual and regional teams

  - Localize prompt sets and content clusters by market; watch for region-specific differences in AI citations and adapt entity signals (names, transliterations) accordingly.
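
Several playbooks above lean on entity signals: Organization/Person schema, consistent names, and transliterations. As a rough sketch, the JSON-LD for those signals can be generated from one source of truth so every page uses the same values. The properties used (`name`, `alternateName`, `url`, `sameAs`, `jobTitle`, `worksFor`) are standard schema.org properties; the company, names, and URLs are placeholders.

```python
import json


def organization_jsonld(name, url, alternate_names, same_as):
    """schema.org Organization markup with alternate names and profile links."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "alternateName": alternate_names,  # e.g. transliterations per market
        "url": url,
        "sameAs": same_as,
    }


def person_jsonld(name, job_title, org_name, same_as):
    """schema.org Person markup for an author bio."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "worksFor": {"@type": "Organization", "name": org_name},
        "sameAs": same_as,
    }


# Placeholder entity data for illustration.
org = organization_jsonld(
    name="Example Co",
    url="https://example.com",
    alternate_names=["Example Company", "エグザンプル"],
    same_as=["https://www.linkedin.com/company/example-co"],
)
author = person_jsonld(
    name="Jane Doe",
    job_title="Head of SEO",
    org_name="Example Co",
    same_as=["https://www.linkedin.com/in/jane-doe-example"],
)
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(org, ensure_ascii=False)
    + "</script>"
)
```

Generating the markup from shared data keeps the organization name in `worksFor` identical to the one in the Organization block, which is the kind of consistency the entity audit checks for.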

Limits, caveats, and how to stay honest

- Google offers no native AI Overview citation reporting/API as of late 2025; use third-party trackers and manual studies.

- Bing Copilot Search: third-party API coverage exists; schema details may change—validate before building automations.

- Perplexity: respect AUP and API Terms; avoid scraping.

- ChatGPT: no brand-mention API; rely on controlled panels and downstream indicators.

- CTR impacts vary by dataset and industry; treat effect sizes as directional and triangulate over time.

Also consider: workflow enablers

- QuickCreator — An AI-powered content platform that helps execute topic clusters and E-E-A-T-aligned content workflows efficiently across languages. Disclosure: QuickCreator is our product.

Action checklist (put this into practice)

- Define topic clusters and build a representative prompt slate per cluster.

- Track AI SOV, citation rate, and prominence weekly by surface; keep a screenshot log.

- Monitor Topic Share, coverage depth, entity clarity, and E-E-A-T quarterly.

- Correlate AI visibility shifts with brand-demand indicators in analytics.

- Re-baseline prompts/clusters each quarter; re-test after major content/PR updates.

- Stay within platform terms; favor official APIs/manual panels over scraping.

References you can trust while building your program

- Google AI features and your website (2025): Google Search Central — AI features

- Industry-level visibility patterns in AI search (2025): Search Engine Land — AI search citations across 11 industries

- Lower clicks with AI summaries (2025): Pew Research — users click less when AI summaries appear

- CTR impact size estimate (2025): Ahrefs — AI Overviews reduce clicks

- Prevalence of AIO (2025): Semrush — 13.14% of queries in March 2025

- Bing SERP API note (2025): DataForSEO — Copilot Search in Bing SERP API

- Perplexity citations design (2024/2025): Perplexity — Getting started with sources and citations