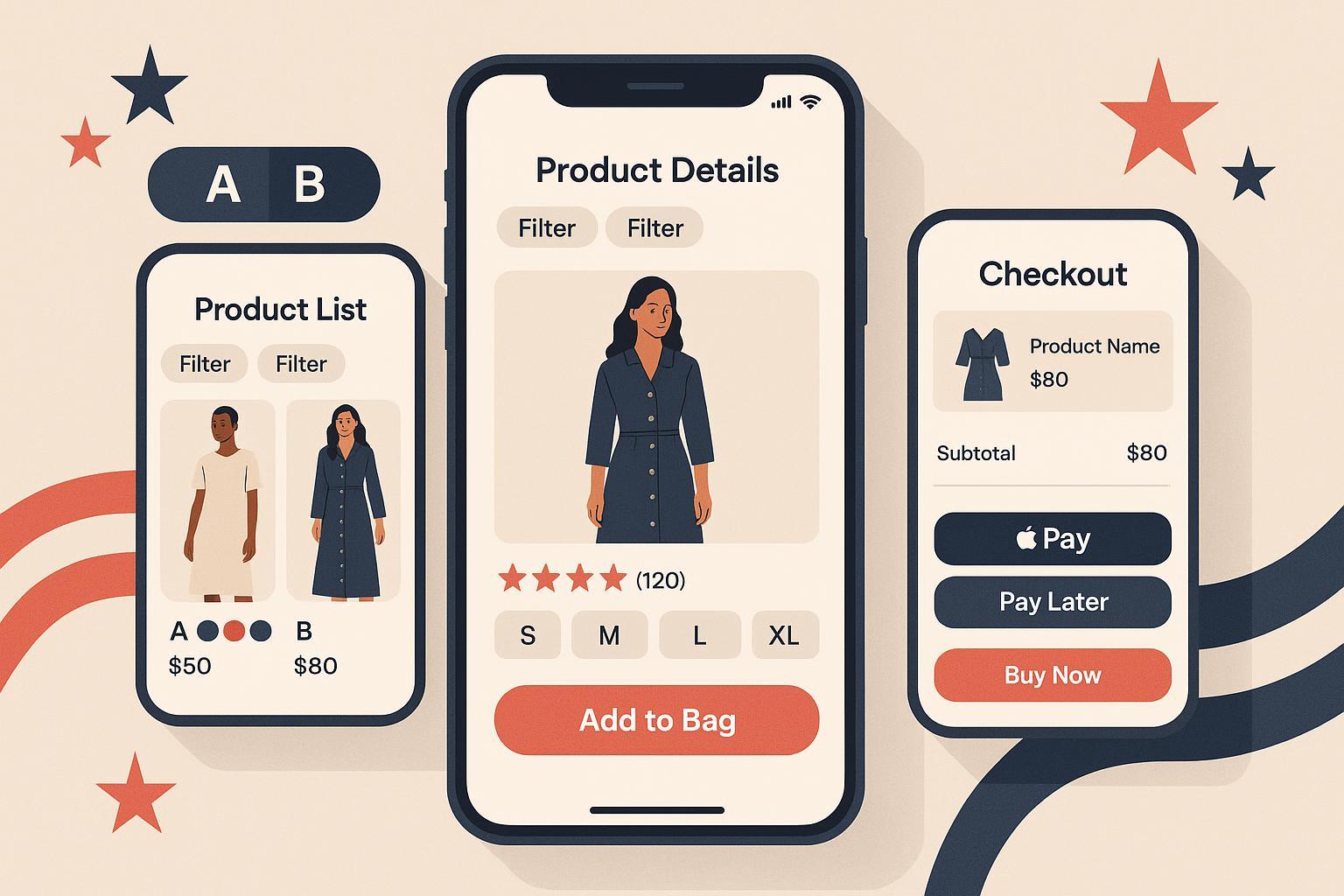

22 A/B Testing Ideas for US Fashion Ecommerce Brands in 2025

US apparel and accessories shoppers are decisive on mobile and unforgiving of friction. If you’re aiming to lift conversion, reduce fit-related returns, and increase AOV without wrecking margins, use this curated list of test ideas tailored to American fashion ecommerce. Each idea includes a clear hypothesis, what to vary, target context, KPIs, guardrails, and a brief evidence note, so your team can move fast while staying methodologically sound.

Note on test hygiene: run a power analysis before launch, include full weekly cycles, avoid peeking, and monitor for sample ratio mismatch. Segment results by device (mobile vs. desktop), traffic source, and category (e.g., women’s denim vs. menswear) to catch heterogeneous effects.
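
The hygiene steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a replacement for a proper statistics package: it assumes a two-sided two-proportion z-test with the usual normal approximation, and the function names (`sample_size_per_arm`, `srm_p_value`) and default values are hypothetical choices for this example.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per arm for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # conversion rate if the lift is real
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def srm_p_value(control_visitors, variant_visitors, expected_control_share=0.5):
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the traffic split."""
    total = control_visitors + variant_visitors
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = ((control_visitors - expected_control) ** 2 / expected_control
            + (variant_visitors - expected_variant) ** 2 / expected_variant)
    # With 1 degree of freedom, the chi-square tail probability is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Illustrative inputs only: 3% baseline conversion, 10% relative lift target.
    print(sample_size_per_arm(0.03, 0.10))
    # Illustrative split: 5,000 vs. 5,300 visitors on an intended 50/50 allocation.
    print(srm_p_value(5000, 5300))
```

With a 3% baseline and a 10% relative lift target, this approximation asks for roughly 53,000 visitors per arm, which is why low-traffic categories often need several full weekly cycles. A very small SRM p-value suggests the split itself is broken; teams often use a strict cutoff such as 0.001, which is a convention rather than a rule.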

Product Discovery (PLP/Search/Navigation; Mobile‑First)

1) Multi‑select filters with applied “chips” on mobile

- Hypothesis: If shoppers can select multiple sizes/colors and see applied filter chips, they’ll navigate with more confidence, increasing PLP→PDP clicks and add‑to‑cart.

- What to vary: Filter control type (checkbox vs. pill), placement (sticky bar vs. drawer), chips behavior (removable vs. “clear all”).

- Who/where: Mobile PLPs, high‑SKU categories (dresses, denim).

- KPIs: PLP→PDP CTR, add‑to‑cart; Guardrails: page speed (LCP/INP), bounce.

- Evidence: Baymard’s 2024–2025 findings, based on large apparel UX benchmarks, recommend multi‑select filters and visible applied chips to improve findability; see the Baymard Institute (2025) product list & filtering entry under Sources.

2) Consolidate variants into one PLP tile with interactive swatches

- Hypothesis: Grouping colorways reduces visual clutter; interactive swatches increase scanning efficiency and relevant clicks.

- What to vary: Consolidated tile vs. separate tiles; swatch hover/tap updating image and price.

- Who/where: PLPs with many color variants (tees, sneakers).

- KPIs: PLP CTR, PDP dwell, add‑to‑cart; Guardrails: variant stock clarity.

- Evidence: Baymard’s apparel guidance favors consolidating variants for lower cognitive load, reflected in their product list research (see the Baymard product list & filtering entry under Sources).

3) Meaningful sort orders and a “View All” option (desktop); infinite scroll vs. pagination (mobile)

- Hypothesis: Aligning sort options with intent (New, Best sellers, Most reviewed) accelerates product finding; “View All” helps scanners; mobile infinite scroll may increase item exposure.

- What to vary: Sort labels, default sort, pagination style; presence of a “Back to filters” link at the top of the list.

- Who/where: PLPs; mobile and desktop separately.

- KPIs: PLP→PDP CTR, conversion; Guardrails: performance and crawl budget.

- Evidence: Baymard’s product finding guidance emphasizes purposeful sort options in 2024–2025 updates (see the Baymard product list & filtering entry under Sources).

4) Surface size/fit filters early and often on mobile

- Hypothesis: Putting size/fit filters near the top reduces dead‑end browsing and improves add‑to‑cart.

- What to vary: Filter order, quick size chips on PLP, copy clarity on size labels.

- Who/where: Mobile PLPs; categories with complex sizing (jeans, dresses).

- KPIs: PDP views, add‑to‑cart; Guardrails: availability sync.

- Evidence: Baymard’s apparel research stresses grouping and labeling size filters for discoverability (supported in their public apparel summaries).

5) Collection page thumbnails that preview on‑model imagery on swatch change

- Hypothesis: If swatch interactions update to on‑model imagery, shoppers judge fit/style faster, boosting clicks to PDP.

- What to vary: Thumbnail image source, zoom level, model vs. flat lay.

- Who/where: PLPs for visually driven categories (outerwear, dresses).

- KPIs: PLP CTR, PDP dwell; Guardrails: image weight and LCP.

- Evidence: Consistent with Baymard’s recommendation for robust visual previews (see the Baymard product list and product page UX entries under Sources).

PDP Persuasion (Imagery, Reviews/UGC, Size/Fit Confidence)

6) Expand image sets: on‑model, multiple angles, robust zoom; add short video

- Hypothesis: Richer visuals reduce uncertainty, increasing add‑to‑cart and lowering fit‑related returns.

- What to vary: Number of images, zoom type, video length/placement.

- Who/where: PDPs; mobile gallery interaction focus.

- KPIs: Add‑to‑cart, conversion, return rate; Guardrails: LCP/INP.

- Evidence: According to the Baymard 2025 product page UX overview, comprehensive imagery and zoom features are core to apparel PDP performance.

7) Prioritize visual UGC (review photos/videos) above the fold

- Hypothesis: Shoppers who engage with visual UGC are more likely to convert; front‑loading UGC thumbnails increases interaction.

- What to vary: Placement of UGC thumbnails, gallery layout, “See customer photos” CTA.

- Who/where: PDPs with enough review volume to populate a gallery; categories where fit varies.

- KPIs: Add‑to‑cart, conversion; Guardrails: moderation workload and authenticity.

- Evidence: PowerReviews reports in its 2024–2025 guidance that conversion can jump when shoppers interact with visual UGC; see the quantified lift in PowerReviews’ Ratings & Reviews guide.

8) Review summary chips with a dedicated “Fit” subscore

- Hypothesis: Summarizing rating count plus a “runs small/true/large” subscore near the size selector reduces hesitation.

- What to vary: Chip design, proximity to size selector, subscore scale.

- Who/where: Apparel PDPs; mobile first.

- KPIs: Size selection completion, add‑to‑cart; Guardrails: avoid misleading aggregates with tiny samples.

- Evidence: Baymard’s apparel patterns advocate clearer fit signals in the review summary (see the Baymard product page UX overview under Sources).
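
One way to honor the tiny‑sample guardrail is to suppress the fit chip until enough shoppers have voted. The sketch below is an assumption‑laden illustration: the vote labels, the `fit_summary` helper, and the 20‑vote minimum are all hypothetical and should be tuned to your own review data.

```python
from collections import Counter

# Hypothetical labels; ties resolve to the earliest entry, so "true" wins a tie.
FIT_LABELS = ("true", "small", "large")
MIN_FIT_VOTES = 20  # hypothetical minimum before showing a fit subscore


def fit_summary(fit_votes, min_votes=MIN_FIT_VOTES):
    """Return the dominant fit label and its share, or None if votes are too few."""
    counts = Counter(v for v in fit_votes if v in FIT_LABELS)
    total = sum(counts.values())
    if total < min_votes:
        return None  # hide the chip instead of showing a misleading aggregate
    label = max(FIT_LABELS, key=lambda k: counts[k])
    return {"label": label, "share": counts[label] / total, "votes": total}


if __name__ == "__main__":
    print(fit_summary(["true"] * 5))                    # too few votes
    print(fit_summary(["small"] * 15 + ["true"] * 10))  # enough votes to display
```

Returning `None` lets the PDP template fall back to the plain rating count, so low‑review products never display a fit claim backed by only a handful of votes.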

9) Interactive size guides or fit recommenders; remember size profile

- Hypothesis: A guided size recommendation increases confidence and reduces bracketing, improving conversion and lowering fit‑related returns.

- What to vary: Quiz vs. one‑click recommender, data inputs, memory/preselection on revisit.

- Who/where: Categories with variable sizing (denim, dresses, footwear).

- KPIs: Add‑to‑cart, conversion, return rate; Guardrails: accuracy calibration, privacy/consent.

- Evidence: Numerous vendor case studies show directional reductions in fit‑related returns; treat claims cautiously and validate with your own tests.

10) Above‑the‑fold attribute highlights (fabric, stretch, care, rise)

- Hypothesis: Concise attribute summaries near the size selector reduce scanning burden and increase add‑to‑cart.

- What to vary: Attribute selection, microcopy, iconography.

- Who/where: PDPs; mobile first.

- KPIs: Add‑to‑cart; Guardrails: clutter vs. clarity.

- Evidence: Baymard’s PDP guidance emphasizes critical attribute clarity for apparel (see the Baymard product page UX overview under Sources).

Cart and Checkout (Trust, Friction, Payments, Delivery/Returns Clarity)

11) Prominent guest checkout; account creation after purchase

- Hypothesis: Removing forced account creation increases checkout starts and completions, especially on mobile.

- What to vary: Guest checkout prominence, copy, post‑purchase account upsell.

- Who/where: Checkout entry step; mobile emphasis.

- KPIs: Checkout start→completion, order conversion; Guardrails: fraud screening for guest orders.

- Evidence: Baymard’s checkout research continues to find forced accounts as a leading abandonment driver; reducing friction here is central (supported across their 2024–2025 checkout summaries).

12) Minimize visible form fields and use progressive disclosure

- Hypothesis: Fewer visible fields and smarter defaults/autofill raise completion rates.

- What to vary: Field grouping, autofill, optional field deferral.

- Who/where: Shipping and payment steps.

- KPIs: Checkout completion; Guardrails: address accuracy, data quality.

- Evidence: The Baymard 2024 checkout flow field analysis shows typical flows with ~11 visible fields; reducing them correlates with better UX and conversion.

13) Upfront estimated delivery dates and transparent fees in cart

- Hypothesis: Clear delivery windows and full cost transparency reduce surprises and abandonment.

- What to vary: Delivery date presentation, shipping copy, returns policy teaser.

- Who/where: Cart and early checkout; mobile first.

- KPIs: Step drop‑off, conversion; Guardrails: SLA accuracy.

- Evidence: US shoppers favor clear delivery expectations, and McKinsey’s 2025 discussion of e‑commerce deliveries highlights how those expectations are evolving; see McKinsey’s US e‑commerce deliveries outlook.

14) Express wallets placement (Apple Pay/Google Pay/PayPal) at cart vs. payment step

- Hypothesis: Earlier placement of express wallets increases conversion on eligible devices.

- What to vary: Cart-level express buttons vs. payment‑step only; copy and logo treatment.

- Who/where: Checkout; iOS and Android segments.

- KPIs: Checkout completion, revenue per visitor; Guardrails: wallet‑specific error handling.

- Evidence: Stripe’s 2024–2025 experiments found offering Apple Pay can raise conversion on eligible checkouts by around 22% on average—see Stripe’s payment methods testing study.

15) BNPL messaging: PDP vs. cart vs. payment step

- Hypothesis: Contextual BNPL messaging increases AOV and conversion for price‑sensitive segments without spiking fraud.

- What to vary: Placement, copy clarity on terms/fees, PDP price breakdown vs. checkout messaging.

- Who/where: Higher‑ticket categories; new vs. returning segments.

- KPIs: AOV, checkout completion; Guardrails: chargebacks and fraud rate.

- Evidence: US adoption continues to grow; see the 2024 eMarketer BNPL industry guide for market context, and pair with layered risk controls per the Merchant Risk Council’s 2025 Fraud & Payments report (PDF).

16) Trust indicators and recognizable payment logos near CTAs

- Hypothesis: Familiar payment/wallet logos and concise trust cues reduce anxiety and increase payment‑step completion.

- What to vary: Logo set, placement proximity to CTAs, copy (“Secure checkout”).

- Who/where: Payment step; mobile first.

- KPIs: Payment step completion; Guardrails: avoid deceptive seals.

- Evidence: Baymard’s checkout usability summaries support prominent, recognizable trust cues—balanced to avoid clutter.

17) Cart abandonment recovery modals vs. email/SMS sequencing

- Hypothesis: Timing and channel mix for exit‑intent modals and follow‑up can recover more carts without hurting brand perception.

- What to vary: Modal copy/value, delay timing, email vs. SMS cadence.

- Who/where: Cart page; new vs. returning segments.

- KPIs: Recovery rate, conversion; Guardrails: compliance (TCPA), unsubscribes.

- Evidence: Global cart abandonment averages hover near 70%, highlighting the room to recover; see the benchmark context in Statista’s global cart abandonment rate.

Pricing/AOV Levers (Thresholds, Bundles, Progressive Discounts, Cross‑Sells)

18) Free shipping threshold set ~20–30% above current AOV

- Hypothesis: A calibrated threshold nudges basket expansion and preserves margins.

- What to vary: Threshold level, progress bars, qualifying item cues.

- Who/where: Cart, mini‑cart, PDP.

- KPIs: AOV, conversion; Guardrails: contribution margin, return‑adjusted profit.

- Evidence: Common CRO practice supported by enterprise retail guidance; test the economics against your margin model.
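
The threshold arithmetic is simple enough to sketch. The example below assumes an illustrative AOV of $82 and rounds each candidate up to a $5 price point; the helper names and the rounding rule are hypothetical, not a recommended standard.

```python
import math


def threshold_candidates(current_aov, uplifts=(0.20, 0.25, 0.30), round_to=5):
    """Candidate free-shipping thresholds, each rounded up to a clean price point."""
    return [math.ceil(current_aov * (1 + u) / round_to) * round_to for u in uplifts]


def remaining_to_free_shipping(cart_subtotal, threshold):
    """Amount the shopper still needs to add; drives the progress-bar copy."""
    return max(0, threshold - cart_subtotal)


if __name__ == "__main__":
    print(threshold_candidates(82))               # test arms for an $82 AOV
    print(remaining_to_free_shipping(73.5, 100))  # "Add $26.50 for free shipping"
```

Each candidate becomes one test arm. Judge the arms on contribution margin after absorbed shipping costs, not on AOV alone.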

19) Outfit bundles (“complete the look”) with size‑aware availability

- Hypothesis: Curated outfits increase units per order without overwhelming shoppers.

- What to vary: Bundle composition, discount vs. no discount, PDP placement.

- Who/where: Apparel categories with complementary items.

- KPIs: AOV, attach rate; Guardrails: inventory complexity.

- Evidence: Merchandising best practices support curation; run local validation.

20) Progressive discount tiers (e.g., $100 → 10%, $150 → 15%)

- Hypothesis: Tiered incentives push basket expansion while preserving contribution margin.

- What to vary: Tiers, messaging, eligibility.

- Who/where: Cart and checkout; promo‑sensitive shoppers.

- KPIs: AOV, margin, return rate; Guardrails: discount abuse and erosion.

- Evidence: Widely used in US retail; simulate basket economics before testing.
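
A basket simulation can start as small as the sketch below. It uses the tier values from the example in the heading; the `cogs_rate` parameter (cost of goods as a share of list price) and the function names are hypothetical, and a real model would also include shipping, payment fees, and returns.

```python
# Tiers from the example above: $100 -> 10% off, $150 -> 15% off.
TIERS = [(100.0, 0.10), (150.0, 0.15)]


def discount_rate(subtotal, tiers=TIERS):
    """Return the discount rate of the highest tier the basket qualifies for."""
    for min_spend, rate in sorted(tiers, reverse=True):
        if subtotal >= min_spend:
            return rate
    return 0.0


def contribution_after_discount(subtotal, cogs_rate, tiers=TIERS):
    """Contribution = subtotal - discount - cost of goods (cogs_rate is an assumption)."""
    discount = subtotal * discount_rate(subtotal, tiers)
    return subtotal - discount - subtotal * cogs_rate


if __name__ == "__main__":
    # Compare baskets just below and at the first tier, assuming 50% cost of goods.
    for basket in (99.0, 100.0, 149.0, 150.0):
        print(basket, round(contribution_after_discount(basket, 0.5), 2))
```

Running this shows the cliff at each tier boundary: under these assumptions, a basket that just qualifies for a tier contributes less than one just below it. The tiers only pay off if enough shoppers stretch well past the boundary, which is what the test should measure.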

21) Size/color‑aware cross‑sells (in‑stock complements)

- Hypothesis: Recommending complements available in the shopper’s selected size/color increases attach rate without frustration.

- What to vary: Recommendation logic, placement (PDP vs. cart), visual density.

- Who/where: PDP and cart; categories with complementary accessories.

- KPIs: Attach rate, AOV; Guardrails: site speed, cognitive load.

- Evidence: Personalization literature and numerous vendor cases suggest relevance is the key driver; validate locally.
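
As a rough sketch of the recommendation logic being varied, the filter below keeps only complements that are in stock in the shopper’s selected size. The `Complement` record, the `"OS"` one‑size marker, and the four‑item limit are hypothetical stand‑ins for whatever your catalog and inventory feeds provide.

```python
from dataclasses import dataclass

ONE_SIZE = "OS"  # hypothetical marker for accessories sold in a single size


@dataclass(frozen=True)
class Complement:
    sku: str
    sizes_in_stock: frozenset


def in_stock_complements(candidates, selected_size, limit=4):
    """Keep complements available in the selected size (or one-size items), in rank order."""
    eligible = [
        c for c in candidates
        if selected_size in c.sizes_in_stock or ONE_SIZE in c.sizes_in_stock
    ]
    return eligible[:limit]


if __name__ == "__main__":
    ranked = [
        Complement("belt-01", frozenset({"OS"})),
        Complement("tee-02", frozenset({"S", "L"})),
        Complement("jacket-03", frozenset({"M", "L"})),
    ]
    print([c.sku for c in in_stock_complements(ranked, "M")])
```

The filter preserves the incoming rank order, so it can sit behind any recommendation model; the test arms then differ only in whether the availability filter is applied.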

Retention / Post‑Purchase (Exchanges‑First, Size Memory, UGC Prompts)

22) Exchange‑first returns flow to preserve revenue

- Hypothesis: Offering easy size/color exchanges and store credit defaults retains more revenue than direct refunds.

- What to vary: Default option (exchange/credit vs. refund), flow steps, incentives (free size exchange).

- Who/where: Returns portal and post‑purchase emails; categories with high fit variability.

- KPIs: Exchange rate, retained revenue, CSAT; Guardrails: abuse/fraud controls.

- Evidence: US apparel returns are high; industry reports show exchange‑first platforms retain more revenue, though lifts vary—validate with your own RMA data.

How to prioritize and analyze your tests (quick blueprint)

- Impact vs. effort: Start with mobile discovery and checkout friction fixes (Items 1–4, 11–15). These typically deliver larger conversion lift with moderate effort.

- Guardrail metrics: Track return rate and size‑related RMA reasons for PDP fit tests; monitor fraud/chargebacks for BNPL/wallet placement; watch Core Web Vitals (LCP under 2.5s, INP under 200ms) during media‑heavy tests.

- Segmentation: Expect different effects by category (e.g., women’s dresses vs. men’s tees), device, and traffic source. Plan analyses to capture these.

- Economics first: Define minimum detectable effect sizes based on margin/CAC. A “win” that harms contribution margin or spikes returns is not a win.
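
To make the “economics first” point concrete, the sketch below compares two arms on return‑adjusted contribution per visitor instead of raw conversion. Every number is invented for illustration, and the model is deliberately crude: it assumes returned orders are refunded in full and that each return carries a flat handling cost.

```python
def contribution_per_visitor(visitors, orders, aov, gross_margin_rate,
                             return_rate, cost_per_return):
    """Return-adjusted contribution per visitor (all inputs are assumptions)."""
    kept_revenue = orders * aov * (1 - return_rate)    # revenue after full refunds
    return_costs = orders * return_rate * cost_per_return
    return (kept_revenue * gross_margin_rate - return_costs) / visitors


if __name__ == "__main__":
    # Hypothetical arms: the variant converts 10% better but is returned more often.
    control = contribution_per_visitor(10_000, 300, 80.0, 0.5, 0.20, 8.0)
    variant = contribution_per_visitor(10_000, 330, 80.0, 0.5, 0.30, 8.0)
    print(round(control, 4), round(variant, 4))
```

In this made‑up scenario the variant wins on conversion yet loses on contribution per visitor, which is the kind of outcome the guardrail metrics are meant to catch.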

Sources and benchmarks referenced in this guide

This list draws on recognized authorities and recent US‑relevant publications. Where vendor case studies are mentioned, treat them as directional and verify with your own experiments.

- Baymard Institute (2025): Product list & filtering patterns

- Baymard Institute (2025): Product page UX overview

- Baymard Institute (2024): Checkout flow average form fields

- PowerReviews (2024–2025): Ratings & Reviews guide (visual UGC impacts)

- McKinsey (2025): US e‑commerce deliveries expectations

- Stripe (2024–2025): Testing payment methods including Apple Pay

- eMarketer (2024): Buy Now, Pay Later industry guide

- Merchant Risk Council (2025): Global Fraud & Payments Report (PDF)

- Statista (ongoing): Global online shopping cart abandonment rate

If you need a deeper blueprint (sample size calculators, SRM checks, and device/category segmentation plans), we can extend this into an experiment playbook tailored to your catalog and margins.