Reverse ETL for Marketing: A 2025 Best‑Practice Playbook

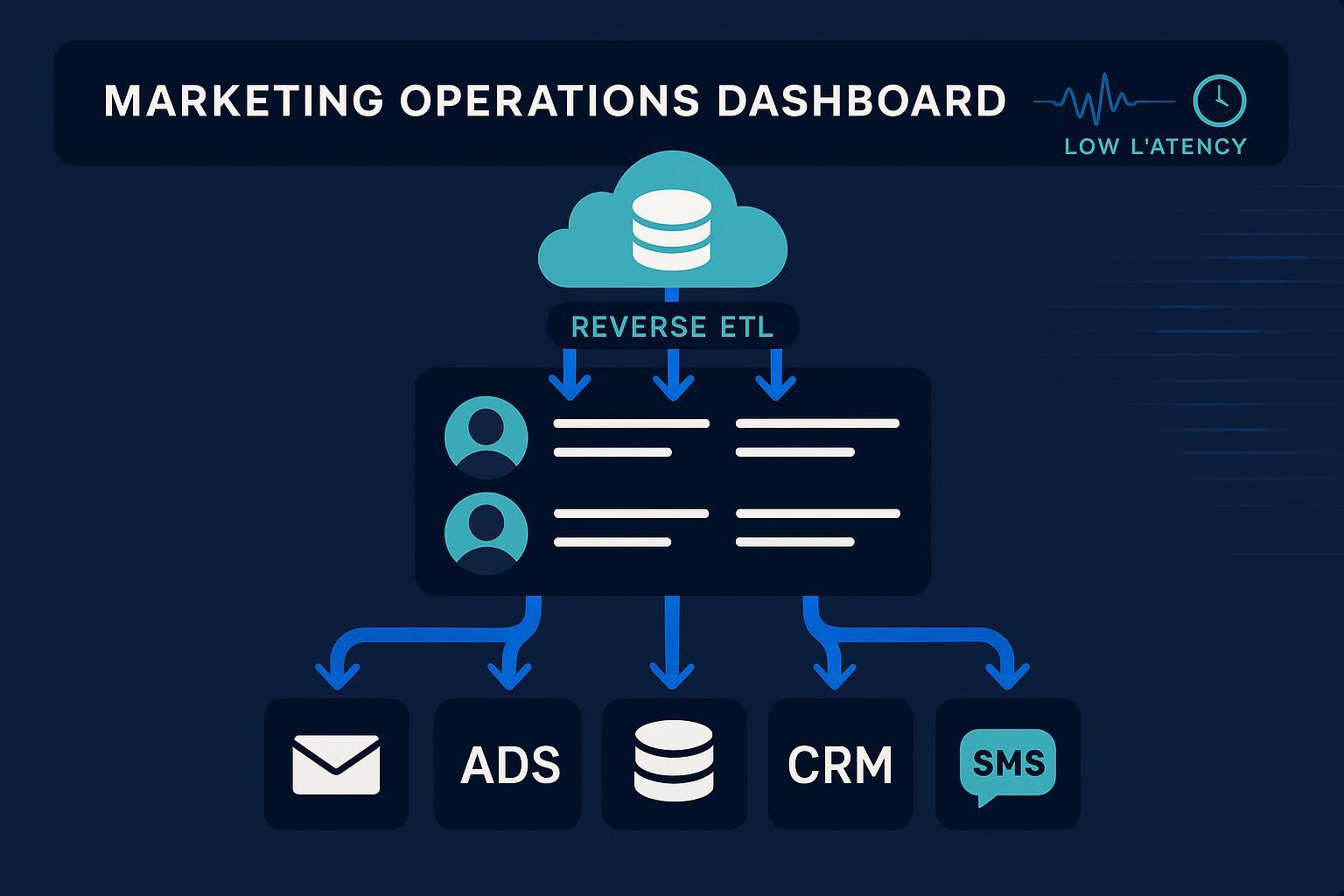

If your data warehouse is where truth lives, Reverse ETL is how that truth shows up in your marketing tools at the exact moment it matters. Over the past two years, the shift toward warehouse‑native activation and composable CDPs has made Reverse ETL a staple in marketing ops. Practitioners can now push enriched profiles, segments, and conversion events into ad platforms, MAPs, CRMs, and SMS in minutes—not weeks. That said, success depends on concrete implementation choices, guardrails, and observability.

This playbook distills what consistently works in the field—where Reverse ETL shines, where it doesn’t, and how to operate it safely at scale.

Why this matters in 2025

- Warehouse‑native activation is mainstream: Vendors have introduced streaming or near‑real‑time syncs; for instance, sub‑minute “live syncs” on Snowflake and streaming Reverse ETL have been publicized by leading providers since 2023–2024, enabling timely triggers for marketing journeys, as outlined by the announcements from Census on Snowflake live syncs and Hightouch’s streaming Reverse ETL.

- Composable CDP is the prevailing architecture: Analysts and practitioners highlight a shift from monolithic CDPs to warehouse‑centric stacks that activate governed data, a trend covered in Martech.org’s composability analysis (2024–2025) and the 2025 ChiefMartec landscape commentary.

- Data‑activated marketing continues to capture outsized value: McKinsey’s 2024–2025 research attributes a significant share of gen‑AI impact to sales/marketing functions, underscoring the ROI potential when data is operationalized end‑to‑end, as discussed in the McKinsey analysis of AI’s value in the workplace (2024).

Caveat: Public, independently verified ROI studies isolating Reverse ETL as the single causal factor remain scarce. Treat Reverse ETL as an activation enabler; measure its impact within your broader lifecycle program.

When Reverse ETL fits—and when it doesn’t

Use Reverse ETL when:

- You have a cloud warehouse with reliable identity keys and marketing‑ready models (e.g., customer 360, LTV scores, product affinity).

- Freshness SLAs are in the tens of seconds to minutes for triggers, or hourly/daily for audience syncs.

- You want to avoid creating yet another customer data store and prefer governed, warehouse‑native activation described by RudderStack’s warehouse‑native CDP perspective.

Avoid or complement Reverse ETL when:

- You require in‑session, sub‑second personalization. Consider a customer engagement platform or direct event streaming; several vendors note that pure Reverse ETL may not meet ultra‑low‑latency needs in such cases, as discussed in Simon Data’s Snowflake personalization discussion.

- You need heavy identity resolution beyond your warehouse capabilities; evaluate dedicated identity/CDP solutions per CDP vs. data warehouse role primers.

- Data minimization is paramount and duplicating attributes into tools is risky or costly—investigate zero‑copy/zero‑ETL patterns summarized by CDP.com’s industry statistics and discussion.

Foundational practices that prevent 80% of issues

- Define use cases and freshness SLAs up front

- Cart abandonment and browse abandonment: <5 minutes end‑to‑end.

- Lead scoring to CRM routing: 30–60 seconds.

- Audience refresh for paid media: 1–6 hours depending on spend/volatility.

- Reactivation cohorts: daily.

- Lock down identity keys and mapping

- Adopt a canonical set of identifiers: email (lowercased, trimmed), phone (E.164), device/platform IDs, and platform‑specific IDs.

- Maintain an identity map/model in the warehouse; destination mappings should reference this model to prevent drift.

- Establish data contracts between warehouse models and destinations

- Declare schemas, types, and allowed null rates; fail fast on violations. Data‑contracted activation reduces runtime surprises and API rejections. This approach aligns with streaming Reverse ETL guardrails noted in Hightouch’s 2023–2024 streaming overview.

- Build consent and privacy into the pipeline

- Hash PII (SHA‑256) where required by ad platforms; propagate opt‑outs and deletions to all destinations.

- Follow well‑architected governance advice for analytics pipelines such as the AWS Analytics Lens guidance (2024 edition).

- Design for observability and recovery

- Track freshness, lag, error rates, and diff counts per sync.

- Emit alerts for schema drift, null spikes, API 4xx/5xx, and throttling.

- Keep idempotent upserts and replay capabilities using CDC logs and run history, a pattern echoed in RudderStack’s data pipeline architecture notes (2024).

Implementation steps (battle‑tested)

Step 1 — Model your marketing entities

- Customers, accounts, subscription status, product catalog, events (orders, sessions), and computed features (RFM, LTV, churn score) in dbt or SQL. Align model outputs with destination field constraints.

Step 2 — Choose sync modes per use case

- Streaming/near‑real‑time for triggers (abandonment, price‑drop alerts) leveraging providers with low‑latency claims such as Census live syncs on Snowflake or Hightouch streaming.

- Scheduled batch for audience refreshes (paid media, email nurtures).

Step 3 — Map, validate, and test

- Field‑level mapping with type checks, default values, and unit tests. Validate required keys per destination.

- Run a dry‑run sync to a sandbox destination; compare record counts and rejected rows.

Step 4 — Platform‑specific guardrails

- Meta (Facebook) Conversions API: Deduplicate using event_id/external_id; hash emails/phones; follow the 2024 troubleshooting guidance in Meta’s CAPI Gateway troubleshooting guide and the GTM server‑side CAPI guide.

- Google Ads Enhanced Conversions/Customer Match: Ensure SHA‑256 hashing, correct conversion actions, and respect quotas, per Google’s Enhanced Conversions support (updated 2024) and the Google Ads API conversions overview.

- HubSpot/Salesforce: Validate field mappings after schema changes; plan for API rate limits and use batch upserts with retries. See HubSpot’s guide to syncing Google Ads conversion events (2024) and consult official Salesforce API limit docs.

- Braze/Iterable: Use stable identifiers (external_id); pre‑validate profile attributes; respect rate limits; refer to their developer portals for endpoint quotas and error handling.

Step 5 — Promote to production with SLAs and alerts

- Define on‑call rotations for high‑impact syncs. Create dashboards for freshness, retries, and failure categories.

High‑impact marketing use cases (with activation recipes)

- Cart and browse abandonment triggers

- Data needed: user ID/email, last product viewed, cart contents, timestamp.

- Flow: Detect abandonment → compute trigger cohort → stream to MAP/CEP within <5 minutes → send personalized message with product details.

- Keys to success: Event deduplication, frequency capping, QA in sandbox.

- Paid media audience sync and suppression

- Data needed: lifecycle stage, high‑value segments, purchase recency.

- Flow: Nightly/hourly sync cohorts to Google, Meta, TikTok; suppress recent purchasers; refresh lookalikes.

- Keys: Hashing PII, consent enforcement, ad platform match‑rate monitoring.

- Churn risk and win‑back

- Data needed: churn score, product usage velocity, last engagement.

- Flow: Sync scores to CRM/MAP; trigger outreach sequences or discount offers.

- Keys: Score recalculation cadence, guardrails for incentives.

- Cross‑sell/upsell recommendations

- Data needed: product affinity, next‑best action.

- Flow: Sync attributes to email/onsite personalization; push lists to paid channels for targeted promotions.

- Keys: Catalog freshness, feature drift checks, content availability.

- Offline conversions back to ads platforms

- Data needed: order ID, value, timestamp, user identifiers.

- Flow: Batch upload or CAPI/API to attribute downstream revenue to ads for better bidding.

- Keys: Identity resolution, time‑window alignment, dedup, per Meta CAPI troubleshooting and Google Ads conversions docs.

Monitoring, validation, and governance

- Freshness and latency SLOs: Alert if end‑to‑end exceeds thresholds; correlate warehouse job times with destination receipt timestamps.

- Data quality checks: Completeness, uniqueness, referential integrity, and business rules at both model and sync stages. Enforce via data contracts and dbt tests.

- Schema drift detection: Auto‑pause syncs on breaking changes; notify owners.

- Audit and lineage: Keep immutable logs and run histories; support replay. These patterns align with RudderStack’s pipeline architecture guidance (2024).

- Privacy compliance: Right‑to‑erasure end‑to‑end tests; masking; purpose‑based access controls guided by the AWS Analytics Lens (2024).

Tool selection criteria that actually matter

- Latency capabilities and SLAs (streaming vs. batch) documented by the vendor—see examples such as Hightouch streaming and Census live syncs.

- Observability depth: native dashboards, alerting hooks, failure categorization.

- Governance and security: masking, encryption, audit logs, RBAC—capabilities frequently emphasized in enterprise data tooling roundups like Airbyte’s security/replication overview and Hevo’s automation guide.

- Transformation integration: dbt compatibility, pre‑sync validation, Python transforms.

- Connector coverage for marketing destinations: ads (Google, Meta), MAPs (Braze, Iterable), CRM (Salesforce, HubSpot).

- Identity handling and offline conversion support.

- Total cost of ownership: Warehouse compute, egress, API costs; ops overhead.

Troubleshooting playbooks (marketer‑friendly)

-

“My segment isn’t updating in Facebook/Meta.”

- Check event_id/external_id dedup, data hashing, and the destination’s ingestion status. Use the 2024 Meta CAPI troubleshooting guide.

-

“Google Ads shows low match rates.”

- Normalize identifiers (lowercase emails; E.164 phone), ensure SHA‑256 hashing, and verify consent. Cross‑check Google’s Enhanced Conversions guide.

-

“We hit API limits on Salesforce/HubSpot/Braze/Iterable.”

- Implement batching, exponential backoff, and schedule windows. Prioritize critical fields; defer low‑value updates. Consult official developer docs for current quotas.

-

“Freshness SLA breaches after peak traffic.”

- Scale warehouse compute during peak windows; use incremental models; stagger destinations; validate no downstream throttling.

-

“Schema changes broke our sync.”

- Enforce data contracts; auto‑pause on drift; notify data owners; provide a mapping diff and a rollback plan.

Measuring ROI without over‑claiming

Because public benchmarks isolating Reverse ETL are limited, treat measurement as a first‑class discipline:

- Establish pre/post baselines: time‑to‑launch (TTL) for campaigns, segment refresh time, match rate, conversion rate, ROAS, and LTV.

- Use holdouts/A‑B where feasible for triggered programs.

- Attribute impact at the workflow level (e.g., improved abandonment trigger timeliness) and connect to outcomes. McKinsey’s 2024–2025 work suggests sales/marketing capture a large share of AI‑driven value, reinforcing the importance of operationalizing data end‑to‑end per the McKinsey analysis on AI value in sales/marketing.

2025 trends and how to future‑proof

- Composable and warehouse‑native CDP: Keep activation close to governed data; avoid redundant stores. See Martech.org’s composability overview (2024–2025).

- Streaming activation: Adopt streaming for time‑sensitive triggers; evaluate sub‑minute pipelines as highlighted by Census live syncs and Hightouch streaming Reverse ETL.

- Privacy‑first orchestration: Bake right‑to‑erasure tests and consent propagation into CI/CD; align with the AWS Analytics Lens governance patterns.

- Zero‑copy data access: Reduce duplication when possible; consider direct query/clean‑room patterns per CDP.com’s industry summaries.

Future‑proofing checklist

- Document data contracts for every destination mapping and enforce in CI.

- Maintain a single identity map and run nightly integrity checks.

- Define per‑use‑case latency SLOs; add synthetic canaries that verify end‑to‑end timeliness.

- Keep platform‑specific runbooks updated to the latest vendor docs.

- Review costs quarterly; right‑size compute and adjust sync cadences.

A pragmatic maturity roadmap

- Phase 1: Prove value on one trigger and one audience sync. Target <5‑minute abandonment trigger and a weekly reactivation cohort.

- Phase 2: Expand to paid media suppression and offline conversions; add alerting and data contracts.

- Phase 3: Introduce streaming for critical triggers; formalize consent propagation and right‑to‑erasure tests.

- Phase 4: Optimize cost and latency; roll out cross‑channel orchestration with ML‑powered features.

Summary: What to do next

- Start with a narrowly scoped, high‑value use case and define explicit freshness SLAs.

- Stabilize identity and contracts before scaling destinations.

- Instrument observability from day one with actionable alerts.

- Adopt streaming only where latency materially affects outcomes; keep the rest on batch to control costs.

- Treat ROI measurement as an experiment discipline, not an afterthought.

Implement these practices and you’ll take Reverse ETL from “we synced some fields” to a reliable, governed activation layer that moves the needle on real marketing KPIs.