OpenAI’s Sora 2 Brings Synchronized Audio-Visual AI Video — What Marketers Should Pilot Now (2025)

Updated on 2025-10-02: Initial analysis published. Anchored to OpenAI posts and early trade coverage. Fast‑moving facts include access geographies, API timing/pricing, duration/resolution caps, and third‑party benchmarks. We’ll refresh this piece on Day 5 and Day 10.

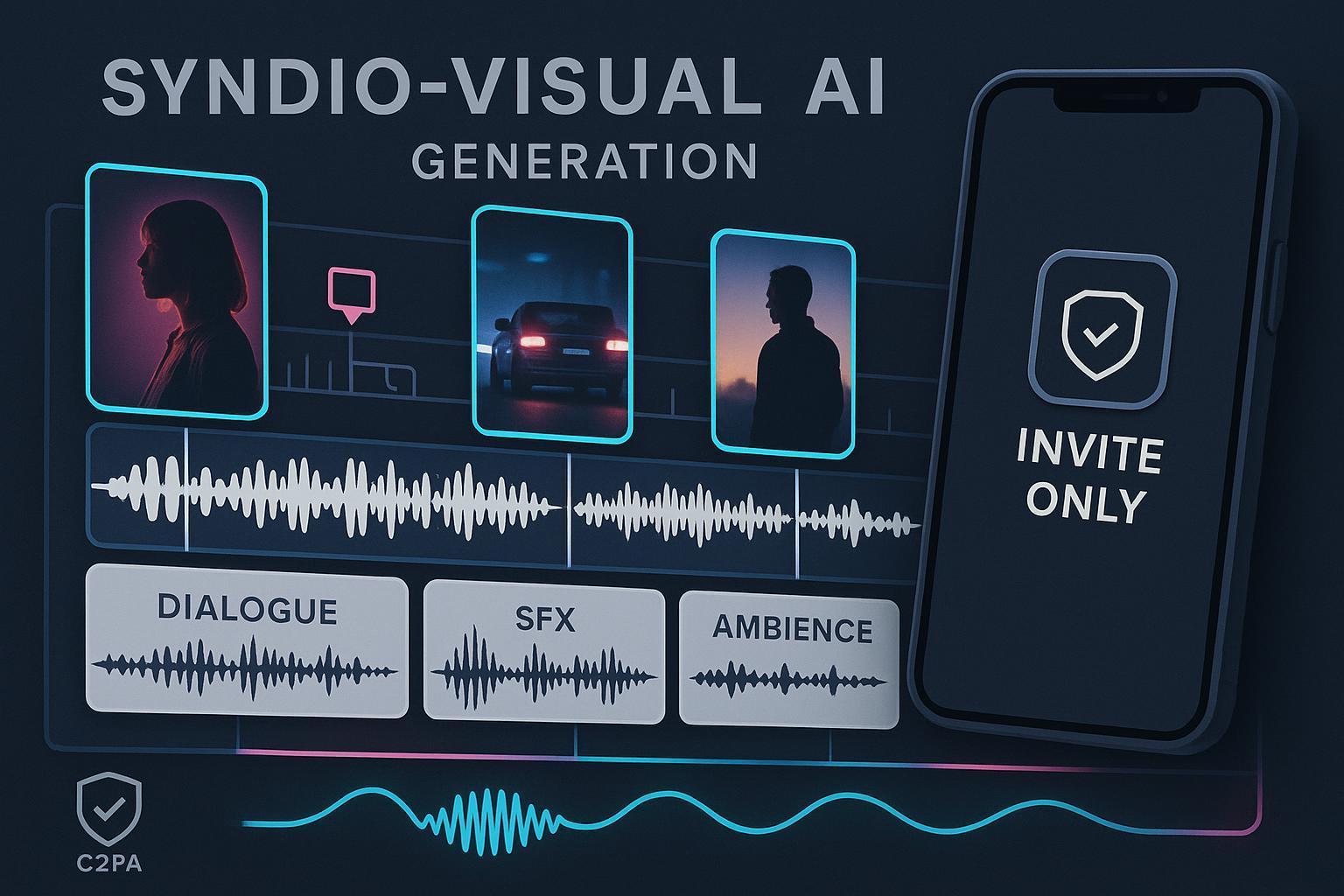

OpenAI’s Sora 2 shifts text‑to‑video from silent storyboard drafts to synchronized audio‑visual outputs. For content teams, that means faster ideation: dialogue, ambience, and sound effects can be generated in alignment with visuals, cutting temp audio and reducing turnaround on short‑form clips. The headline: Sora 2 is a strong ideation engine for marketers and creators; finishing still belongs to your NLE for frame‑accurate timing and polish.

Because this launch is breaking, some specs are evolving. Below, we separate confirmed details from early impressions, and lay out a pragmatic, governance‑ready workflow you can pilot this week.

What’s confirmed, and what’s still evolving

- Synchronized audio-visual generation is in Sora 2. OpenAI’s launch page states the model “features synchronized dialogue and sound effects,” and emphasizes improved realism and control. See OpenAI — “Sora 2 is here” (2025).

- Safety and world modeling are documented: OpenAI describes an iterative, risk‑focused approach, with safeguards, evaluations, and limitations outlined in OpenAI's “Sora 2 System Card” (2025).

- Watermarks and provenance are embedded at launch: visible moving watermark on downloads plus C2PA metadata for all Sora videos, with internal reverse‑image/audio search tools to trace origins. This is explicitly described in OpenAI — “Launching Sora responsibly” (2025).

- Access model: an invite‑only iOS rollout began September 30, 2025, with guidance in the Help Center; geographies are initially limited and expected to expand. See OpenAI Help Center — “Getting started with the Sora app” (updated 2025-10-02). OpenAI also notes an experimental higher‑quality “Sora 2 Pro” tier accessible to ChatGPT Pro users.

- Improved physical realism and continuity are a stated aim. Early trade coverage offers anecdotal context—for instance, TechCrunch describes more believable physics (e.g., rebounds off a backboard) compared with prior models. See TechCrunch — Sora app + Sora 2 launch coverage (2025).

What’s evolving: duration caps (e.g., exact seconds), resolution limits (e.g., up to 1080p), pricing, and API timing are not fully codified on official pages at publication. Treat media‑reported figures as provisional until OpenAI’s docs confirm them.

Why this matters for marketing workflows

Sora 2 reduces the gap between prompt and publish for short‑form content. Generating synced dialogue and sound effects alongside visuals is a leap for ideation, UGC‑style explainers, and pre‑viz/animatics. Yet the hard truth remains: frame‑accurate lip‑sync, nuanced pacing, and continuity across multi‑shot sequences still benefit from finishing in a non‑linear editor (NLE) where you can fine‑tune timing, stems, color, and captions.

For SMBs and agencies, the near‑term gains are: faster concept testing for 15–30 second spots; lower dependency on stock footage for draft iterations; and an easier path to A/B testing creative in social or paid placements. Governance is equally central: preserve provenance (C2PA + watermarks) and disclose AI assistance where platforms require it.

A 7‑day pilot plan you can run next week

Day 1–2: Prepare prompts with explicit audio intent

- Specify camera moves, environments, and sound design cues: “close‑up of product with whispered VO,” “city street ambience with passing cars,” “basketball court with squeaks and a missed rebound.”

- Write dialogue styles (calm, upbeat, authoritative) and pacing notes. Plan a parallel NLE track for final timing.
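One way to keep audio intent explicit across a team is to assemble every prompt from the same named fields. The sketch below is only an illustration: `ClipPrompt` and its field names are hypothetical, and the rendered text is an assumed plain‑language layout, not a format OpenAI has documented for Sora 2.

```python
from dataclasses import dataclass, field


@dataclass
class ClipPrompt:
    """Hypothetical container for one draft clip's prompt ingredients."""
    subject: str
    camera: str
    environment: str
    sound_cues: list[str] = field(default_factory=list)
    dialogue_style: str = "calm"  # e.g. calm, upbeat, authoritative
    pacing_note: str = ""

    def render(self) -> str:
        """Join the fields into one plain-text prompt with audio stated explicitly."""
        parts = [f"{self.camera} of {self.subject}", f"Setting: {self.environment}"]
        if self.sound_cues:
            parts.append("Audio: " + ", ".join(self.sound_cues))
        parts.append(f"Dialogue style: {self.dialogue_style}")
        if self.pacing_note:
            parts.append(f"Pacing: {self.pacing_note}")
        return ". ".join(parts) + "."


prompt = ClipPrompt(
    subject="product",
    camera="Close-up",
    environment="city street",
    sound_cues=["whispered VO", "city street ambience with passing cars"],
    dialogue_style="upbeat",
    pacing_note="slow open, quick close",
)
print(prompt.render())
```

Storing prompts as structured records rather than free text also makes it easier to trace a published clip back to the wording that produced it.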

Day 3: Generate 3–5 draft clips (15–30s)

- Iterate for realism (physics, lighting, motion) and intelligible dialogue. Note where audio sync feels solid and where it drifts by a few frames.

Day 4: Review against criteria

- Check physical realism, continuity across cuts, brand safety (no impersonation, no content involving minors), and watermark/provenance presence.

Day 5: NLE finishing

- Perform micro‑timing fixes for lip‑sync and pacing. Add captions, color grade, and mix the music and dialogue stems. Confirm that the C2PA metadata and watermark persist through export where your tools support it.

Day 6: Channel prep

- Export per platform specs (vertical/landscape, bitrate, captions). Draft copy variants for social A/B tests.

Day 7: Publish and measure

- Track watch time, retention, CTR, and comments. Flag prompts that consistently perform and backlog them into your content calendar.
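The "flag prompts that consistently perform" step can be made concrete with a small script. This is a minimal sketch under stated assumptions: the rows, the field names, and the thresholds (2% CTR, 50% average retention, at least two clips per prompt) are placeholders to replace with your own analytics export and benchmarks.

```python
from collections import defaultdict

# Hypothetical per-clip results; in practice, export these from your analytics tool.
results = [
    {"prompt_id": "whisper-vo", "impressions": 4000, "clicks": 120, "avg_watch_s": 12.0, "length_s": 20.0},
    {"prompt_id": "whisper-vo", "impressions": 5000, "clicks": 150, "avg_watch_s": 11.0, "length_s": 20.0},
    {"prompt_id": "street-ambience", "impressions": 3000, "clicks": 30, "avg_watch_s": 9.0, "length_s": 20.0},
    {"prompt_id": "street-ambience", "impressions": 3500, "clicks": 105, "avg_watch_s": 14.0, "length_s": 20.0},
    {"prompt_id": "court-squeaks", "impressions": 2000, "clicks": 80, "avg_watch_s": 15.0, "length_s": 20.0},
]


def clip_metrics(row):
    """Return (CTR, average retention) for one clip, guarding against zero division."""
    ctr = row["clicks"] / row["impressions"] if row["impressions"] else 0.0
    retention = row["avg_watch_s"] / row["length_s"] if row["length_s"] else 0.0
    return ctr, retention


def consistent_performers(rows, min_ctr=0.02, min_retention=0.5, min_clips=2):
    """List prompt IDs whose clips ALL clear both thresholds, given enough clips."""
    by_prompt = defaultdict(list)
    for row in rows:
        by_prompt[row["prompt_id"]].append(clip_metrics(row))
    winners = []
    for prompt_id, metrics in by_prompt.items():
        if len(metrics) < min_clips:
            continue  # one clip is not enough evidence of consistency
        if all(ctr >= min_ctr and ret >= min_retention for ctr, ret in metrics):
            winners.append(prompt_id)
    return sorted(winners)


print(consistent_performers(results))
```

Requiring every clip from a prompt to clear the bar, rather than averaging, is a deliberately conservative choice: it keeps a single viral outlier from promoting a prompt into the content calendar.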

Workflow and SEO distribution: embed, optimize, measure

If you’re publishing Sora‑generated clips on blogs or landing pages, a content platform can streamline planning, embedding, and SEO metadata.

- Use QuickCreator to plan the post, embed the video, and optimize titles, descriptions, and schema for search and social. Disclosure: QuickCreator is our product.

- For a practical walkthrough of planning and SEO for posts that embed AI video, see the Step‑by‑Step Guide to Using QuickCreator for AI Content.

- When you embed videos inside articles and want to optimize metadata and publishing workflow, explore the AI Blog Writer.

- If you distribute on YouTube and Shorts, align with platform policies on AI‑assisted content and measurement fundamentals; see AI‑Generated Content on YouTube: Everything You Need to Know.
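For the schema step, the usual markup for an embedded clip is a schema.org `VideoObject` expressed as JSON‑LD. The sketch below builds one with the Python standard library. It is not tied to any particular platform's API, and the helper name, the URLs, and the sample values are illustrative assumptions.

```python
import json


def video_jsonld(name, description, thumbnail_url, upload_date, content_url, duration_s):
    """Build a schema.org VideoObject as a plain dict (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date
        "contentUrl": content_url,
        "duration": f"PT{int(duration_s)}S",  # ISO 8601 duration, e.g. PT20S
    }


markup = video_jsonld(
    name="Product teaser (AI-assisted)",
    description="20-second teaser drafted with AI video tools and finished in an NLE.",
    thumbnail_url="https://example.com/teaser-thumb.jpg",  # placeholder URL
    upload_date="2025-10-02",
    content_url="https://example.com/teaser.mp4",  # placeholder URL
    duration_s=20,
)

# Paste the printed JSON inside a <script type="application/ld+json"> tag on the page.
print(json.dumps(markup, indent=2))
```

Mentioning AI assistance in the description field is one simple way to carry the disclosure into the page metadata.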

Tip: Pair your post with 2–3 social snippets and a lightweight performance dashboard. Republish successful clips into an evergreen resources page.

Governance checklist for brand safety and compliance

- Preserve provenance: keep visible watermarks on downloads and retain C2PA metadata across edits and exports whenever your pipeline supports it. OpenAI documents both the watermark and C2PA embedding at launch.

- Disclose AI assistance: follow platform norms in descriptions and captions; avoid misleading impersonations and content involving minors.

- Cameo/consent: manage likeness permissions if you insert real identities; respect revocations and age‑appropriate safeguards.

- Editorial review: institute a pre‑publish check for physics plausibility and ethical context, especially in sensitive topics.

Competitive context and near‑term predictions

Most major video AI vendors emphasize high‑fidelity visuals, camera controls, and motion tools; few have officially announced end‑to‑end synchronized audio generation that aligns dialogue/SFX to visuals. Expect rapid follow‑ups from competitors on audio sync and multi‑shot continuity.

Short‑term: marketers and creators will test cinematic and UGC styles for social/ads; agencies will use Sora 2 for animatics/pre‑viz. Medium‑term: look for API triggers and integrations into CMS/DAM, templated motion kits, and brand‑safe prompts baked into content pipelines.

What we’ll update next

We will monitor and add:

- Access geographies, duration/resolution caps, pricing, and API documentation.

- Third‑party creator/editor benchmarks on lip‑sync precision, physics edge cases, and continuity.

- Comparative tables against other video models once vendor docs confirm audio sync and continuity features.

Refresh cadence: daily checks this week, then twice weekly for a month.

Citations and sources referenced above:

- OpenAI capability overview — “Sora 2 is here” (2025): synchronized dialogue and sound effects, improved realism/control. Linked once above.

- OpenAI safety & modeling — “Sora 2 System Card” (2025): safeguards, evaluation, limitations. Linked once above.

- OpenAI governance — “Launching Sora responsibly” (2025): watermarks and C2PA metadata policy. Linked once above.

- OpenAI access — Help Center “Getting started with the Sora app” (updated 2025-10-02): invite‑only rollout. Linked once above.

- TechCrunch coverage (2025): early realism anecdote; app + model context. Linked once above.