What is Google’s Gemini Robotics-ER 1.5 (Embodied Reasoning) — and why it matters for marketing

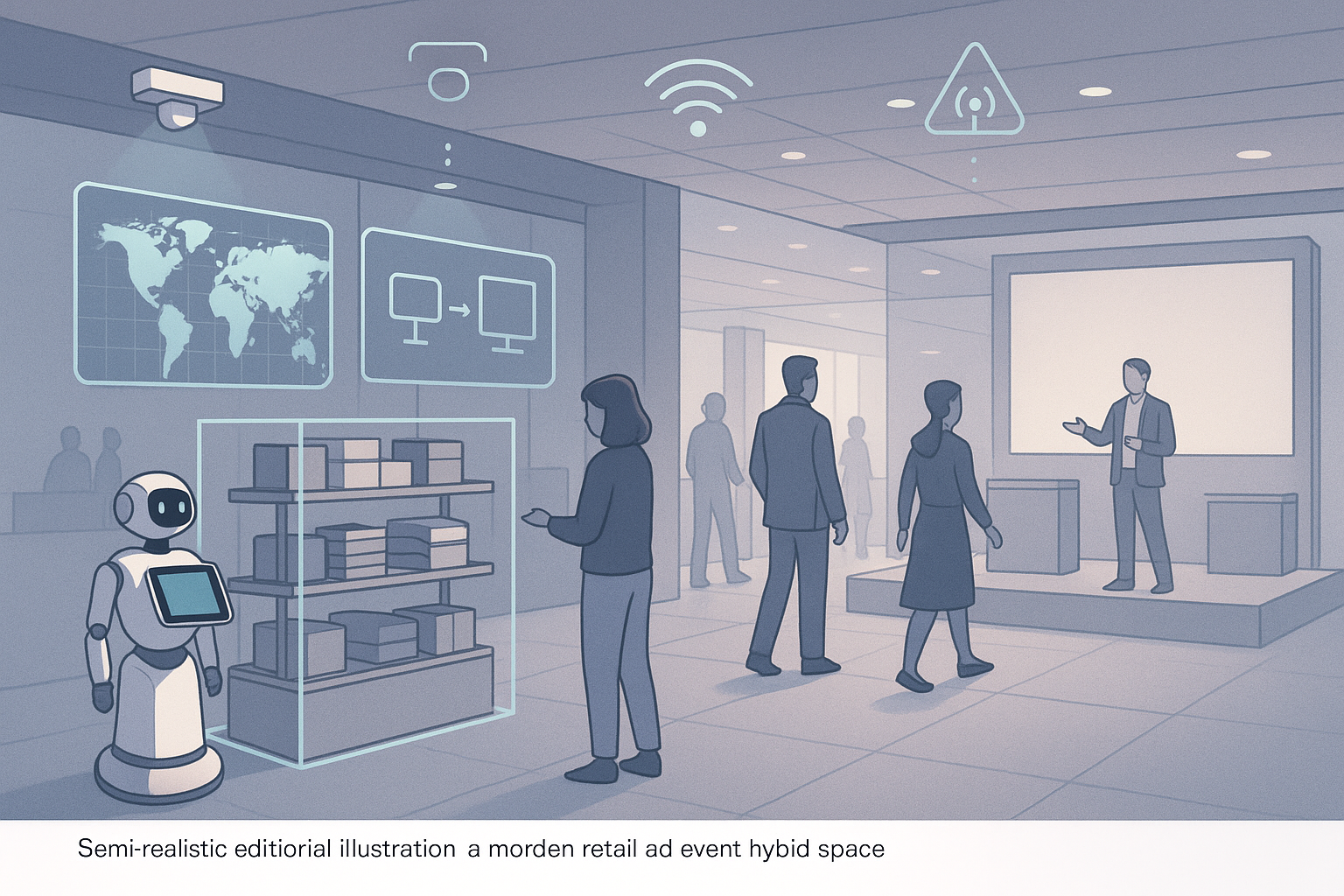

Marketing has lived mostly online for a decade. Gemini Robotics-ER 1.5 signals a shift: intelligent agents that understand physical spaces, plan multi-step actions, and coordinate tools and robots. For visionary marketing leaders, this points to “phygital” strategies—campaigns that blend digital content with on-site context from sensors, kiosks, and, selectively, robots.

A plain-English definition

Gemini Robotics-ER 1.5 is Google’s robotics-focused vision‑language model built for embodied reasoning. In simple terms, it builds a grounded “world model” of a real environment from cameras and other sensors, uses that understanding to plan multi‑step tasks from natural‑language instructions, and can call tools or instruct robot controllers to carry those plans out. ER 1.5 acts like the strategist on a team: it reasons about the space and the steps, while lower‑level controllers do the fine motor work. Google provides an official overview of these capabilities in the Robotics-ER 1.5 documentation (2025), and DeepMind frames ER 1.5 as “the first thinking model optimized for embodied reasoning” in its launch blog (2025).

Two highlights marketers should care about:

Grounded world modeling: ER 1.5 can output spatial structures (like bounding boxes and 2D points) that align with a physical scene, providing context awareness beyond text alone, per Google’s 2025 documentation.

Multi-step planning and tool use: It decomposes a goal into steps and orchestrates API calls to tools or robot stacks—a pattern analogous to orchestrating marketing activations across channels, as described in the Google Developers announcement (Sept 2025).
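
To make the spatial outputs concrete, here is a minimal Python sketch of how a downstream application might turn model-returned 2D points into pixel coordinates on a camera frame. It assumes the response is a JSON list of objects with a `label` and a `point` in `[y, x]` order normalized to a 0-1000 range, which is how Google's documentation describes pointing output at the time of writing; check the current docs before relying on it. The sample response and the `to_pixels` helper are hypothetical and no API call is made.

```python
import json

# Hypothetical model response. Assumed format: "point" is [y, x],
# each value normalized to the range 0-1000.
SAMPLE_RESPONSE = """
[
  {"point": [500, 250], "label": "empty shelf slot"},
  {"point": [100, 900], "label": "promo display"}
]
"""


def to_pixels(response_text, image_width, image_height):
    """Convert normalized [y, x] points into x/y pixel coordinates."""
    results = []
    for item in json.loads(response_text):
        y_norm, x_norm = item["point"]
        results.append({
            "label": item["label"],
            "x": round(x_norm / 1000 * image_width),
            "y": round(y_norm / 1000 * image_height),
        })
    return results


pixels = to_pixels(SAMPLE_RESPONSE, image_width=1920, image_height=1080)
for p in pixels:
    print(p)
```

A screen or kiosk integration could use coordinates like these to decide which display is nearest to a detected object, without the marketing stack needing to understand the model itself.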

How ER 1.5 differs from standard LLMs and non‑ER Gemini Robotics

Not just another LLM: Standard language models reason in text and images but lack native interfaces for spatial outputs and robot/tool sequencing. ER 1.5 is purpose‑built to reason about physical spaces and coordinate actions.

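ER vs. Robotics 1.5 (non‑ER): ER 1.5 emphasizes high‑level planning, grounded spatial reasoning, and tool orchestration, then hands its plans to lower‑level controllers for execution. Gemini Robotics 1.5, by contrast, is the vision‑language‑action (VLA) model that turns visual input and instructions into motor commands. DeepMind’s Gemini Robotics model page (2025) contrasts the roles within the stack.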

Think of ER 1.5 as the “project manager” for physical tasks, while perception‑to‑action controllers are the “drivers.”

Why this matters to marketing

Most marketing today assumes a static, online-only context. ER-style agents can:

Reduce mismatch between content and reality by using world models grounded in the physical scene (e.g., what’s actually on the shelf or happening at a booth).

Plan cross‑touchpoint activations: trigger content on screens, apps, or kiosks in the right sequence based on in‑person behavior.

Improve measurement: structure event streams from physical signals (dwell time, interactions) and tie them to CRM outcomes for closed‑loop optimization.

As of September 26, 2025, Google’s documentation highlights built‑in safety and developer controls that support reliable orchestration in physical settings.
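
The measurement idea above, structuring physical signals such as dwell time into records a CRM can use, might look roughly like the following Python sketch. Everything in it is an assumption for illustration: the event tuple layout, the zone names, the 60-second "long dwell" threshold, and the `dwell_times` and `crm_records` helpers. Visitor IDs are assumed to be pseudonymous and collected with consent.

```python
from collections import defaultdict

# Hypothetical sensor events: (visitor_id, zone, event_type, timestamp_seconds)
EVENTS = [
    ("v1", "demo-A", "enter", 0),
    ("v2", "demo-A", "enter", 5),
    ("v2", "demo-A", "exit", 20),
    ("v1", "demo-A", "exit", 95),
    ("v1", "demo-B", "enter", 100),
    ("v1", "demo-B", "exit", 130),
]


def dwell_times(events):
    """Sum the seconds between each enter/exit pair per (visitor, zone)."""
    open_visits = {}
    totals = defaultdict(int)
    for visitor, zone, kind, ts in sorted(events, key=lambda e: e[3]):
        key = (visitor, zone)
        if kind == "enter":
            open_visits[key] = ts
        elif kind == "exit" and key in open_visits:
            totals[key] += ts - open_visits.pop(key)
    return dict(totals)


def crm_records(totals, long_dwell_threshold=60):
    """Shape dwell totals into flat records that a CRM import could accept."""
    return [
        {
            "visitor_id": visitor,
            "zone": zone,
            "dwell_seconds": seconds,
            "long_dwell": seconds >= long_dwell_threshold,
        }
        for (visitor, zone), seconds in sorted(totals.items())
    ]


records = crm_records(dwell_times(EVENTS))
for record in records:
    print(record)
```

Unmatched exit events are ignored rather than guessed at, which keeps the totals conservative when sensor data is incomplete.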

If you’ve been exploring agentic AI in content operations, this is the physical-world extension. For conceptual grounding on agentic orchestration in marketing, see LLMO in Marketing — what it means and why it matters.

Practical, industry‑agnostic scenarios

Retail: In‑store cameras or shelf sensors detect a stock‑out or long dwell time. An agent pushes localized content to the nearest screen or app, offers help, and logs the event to CRM for attribution and post‑visit personalization.

Events/experiential: Vision‑enabled kiosks guide booth journeys. Post‑visit sequences adapt based on in‑person interactions (which demos were used, which topics were favored) to increase relevance.

Logistics/service: Field devices surface hyper‑local how‑tos when a technician scans a part. Feedback loops improve documentation and SEO content with context‑specific insights.

Sim‑to‑real testing: Use digital twins or synthetic environments to pre‑test message flows and agent logic before limited on‑site pilots, reducing risk and cost.

These aren’t science fiction—they’re software‑first patterns where embodied agents coordinate tools, with robots added selectively for value.

Debunking common myths

“This is only for factories.” While robotics demos often show industrial tasks, embodied agents also orchestrate software, kiosks, and screens—highly relevant to retail, events, and service.

“Irrelevant to marketers.” Marketing is increasingly context‑aware. ER‑style planning connects physical signals to content decisions.

“Just an LLM rename.” ER 1.5 includes grounded spatial reasoning and tool/robot orchestration not typical of generic LLMs; see Google and DeepMind’s 2025 materials.

“Requires expensive robots on day one.” Start with cameras/IoT and software agents. Add limited hardware later where it clearly pays off.

A readiness roadmap for marketing leaders

Literacy: Build a foundational understanding of embodied reasoning, world models, and agentic planning. Review Google’s and DeepMind’s 2025 overviews to align teams on definitions.

Data and consent: Inventory physical touchpoints—POS, Wi‑Fi, sensors, kiosks—and update privacy notices, signage, and opt‑in frameworks. Minimize data capture; set retention limits.

Content ops: Create modular, metadata‑rich content (components, variants, context tags) that agents can assemble dynamically. For trust and quality scaffolding, explore AI EEAT Checker — how it works and why it matters.

Measurement: Map physical signals to CRM and content outcomes. Define KPIs: localized engagement rate, in‑store → online conversion lift, response time to context signals, and SEO visibility on context‑rich queries. For distribution strategy, consider SEO optimization for Google Discover — step‑by‑step.

Pilots: Start software‑only (agentic orchestration, simulations, tool use), then add limited hardware for specific sites or events. Maintain human‑in‑the‑loop review for high‑impact actions.

Ethics, safety, and brand governance

Privacy and consent: Physical spaces require clear notices and opt‑ins. Align with local regulation (e.g., GDPR/CCPA) and practice data minimization.

Accessibility and inclusivity: Ensure kiosks and physical interfaces meet accessibility standards; provide alternative interaction modes.

Brand safety: Keep approvals for high‑impact autonomous actions; monitor for drift or unexpected behaviors.

Safety engineering: Follow standard robotics safeguards (collision avoidance, force limits, e‑stops) alongside model‑level controls described in Google’s Robotics-ER 1.5 overview (2025) and the DeepMind launch blog (2025).

A practical workflow example (software‑first, content‑led)

Imagine you’re preparing adaptive content modules for a major event. You want an agent to assemble the right variant based on booth interactions and dwell time.

Plan content structure: Define components (hero lines, benefits, case snippets, CTAs) with context tags like “demo‑A,” “long‑dwell,” “first‑time visitor.”

Create and optimize: Use QuickCreator AI Blog Writer to research, outline, draft, and SEO‑optimize content variants with E‑E‑A‑T scaffolding and clean metadata. Disclosure: QuickCreator is our product.

Orchestrate: In a pilot, an embodied agent (software‑only) reads interaction signals from the kiosk, selects the matching content component, and pushes it to the screen/app. A human reviews key decisions during the trial.

Measure and learn: Log events to CRM, map to outcomes, and refine your component library.
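
The "plan content structure" and "orchestrate" steps above can be sketched in a few lines of Python. This is an illustrative sketch, not a description of any product's implementation: the `Component` class, the component IDs, and the rule "pick the most specific component whose tags all appear in the observed context" are assumptions. The tags reuse the examples from the steps above, written with plain hyphens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    component_id: str
    kind: str          # e.g. "hero" or "cta"
    tags: frozenset    # context tags that must all be present


# Hypothetical component library.
LIBRARY = [
    Component("hero-generic", "hero", frozenset()),
    Component("hero-demo-a", "hero", frozenset({"demo-A"})),
    Component("hero-demo-a-long", "hero", frozenset({"demo-A", "long-dwell"})),
    Component("cta-first-time", "cta", frozenset({"first-time visitor"})),
]


def select_component(kind, context_tags, library=LIBRARY):
    """Return the most specific component of `kind` whose tags are all
    present in the observed context, or None if nothing qualifies."""
    context = set(context_tags)
    candidates = [c for c in library if c.kind == kind and c.tags <= context]
    if not candidates:
        return None
    return max(candidates, key=lambda c: len(c.tags))


choice = select_component("hero", {"demo-A", "long-dwell"})
print(choice.component_id)
```

An untagged fallback such as `hero-generic` means the screen always has something safe to show, and returning `None` when no component qualifies gives the human reviewer an explicit case to handle during the pilot.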

This delivers immediate value without touching robot hardware, while building the operational muscle for future phygital integrations.

KPIs to watch

Localized engagement rate (screen or app interactions proximate to a physical trigger)

In‑store → online conversion lift (post‑visit actions linked to in‑person signals)

Response time to context signals (how quickly content adapts after an event)

SEO visibility on context‑rich queries (e.g., “near me” + product/category with event tags)

Agent decision accuracy (match between selected content and observed context)
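
Two of these KPIs reduce to simple arithmetic, shown here as a Python sketch. The definitions are assumptions chosen for illustration: conversion lift is computed as relative lift of an exposed group over a control group, and decision accuracy as the share of agent selections that a human reviewer marked as matching the observed context. The sample counts are made up.

```python
def conversion_rate(conversions, visitors):
    """Conversions divided by visitors; 0.0 when there were no visitors."""
    return conversions / visitors if visitors else 0.0


def relative_lift(exposed_rate, control_rate):
    """Relative lift of exposed over control; None if control rate is 0."""
    if control_rate == 0:
        return None
    return (exposed_rate - control_rate) / control_rate


def decision_accuracy(decisions):
    """Share of agent selections a reviewer marked as matching the context."""
    if not decisions:
        return 0.0
    return sum(1 for d in decisions if d["matched"]) / len(decisions)


# Made-up pilot numbers: visitors who saw adaptive content vs. those who did not.
exposed = conversion_rate(conversions=30, visitors=400)
control = conversion_rate(conversions=20, visitors=400)
lift = relative_lift(exposed, control)

# Made-up review log from the human-in-the-loop trial.
reviews = [{"matched": True}, {"matched": True}, {"matched": False}, {"matched": True}]
accuracy = decision_accuracy(reviews)

print(f"lift: {lift:.1%}, accuracy: {accuracy:.1%}")
```

With small pilot samples, figures like these are directional at best, so they are better used to decide whether to extend a pilot than to report a result.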

Where this is headed

As of late 2025, Google positions ER 1.5 in preview for developers, with layered safety and a configurable “thinking” budget that trades response latency against reasoning depth. These points are referenced across the Developers announcement (Sept 2025) and the technical perspective in “Gemini Robotics: Bringing AI into the Physical World” (arXiv, 2025). For marketers, the near‑term path is software‑first agentic orchestration and simulations; physical integrations come selectively where they demonstrably improve customer experience and measurement.

Next steps

Primary: Add this topic to your team’s trends tracker and follow official updates from Google and DeepMind so you can time pilots to the technology’s maturity.

Secondary: If you want to operationalize agentic planning for content today, explore QuickCreator AI Blog Writer for research → outline → draft → optimize → publish workflows.

By building literacy, modular content, and measurement architecture now, you’ll be ready to capitalize as embodied agents move from demos to dependable phygital activations.