Communicating AI Usage to Your Audience Transparently

Transparency is crucial when communicating AI usage. People want to know when artificial intelligence is being used, especially in customer care.

More than 90% of businesses and customers agree that communicating AI usage is important.

85% of people want companies to explain how they keep AI safe and protected.

74% are concerned about fake political ads created by AI.

Experts say that clearly communicating AI usage helps everyone understand how AI makes decisions. When you share this information, you build trust and reduce confusion.

Key Takeaways

Tell people when and how you use AI. This helps build trust and shows you care about them.

Use simple labels and notes to say if AI helped make something. This helps people know and trust your work.

Let humans check what AI does. They can find mistakes and make sure AI is fair and safe.

Keep data private by telling people how you use and protect it. This helps customers feel safe and respected.

Update your AI rules often and follow new laws. This keeps your AI fair, trusted, and ready for changes.

Why Transparency Matters

Trust and Credibility

When you tell people how you use AI, you help them trust you. People want to know what happens with their data and how decisions are made. If you hide things, people start to worry. They might even stop using your service.

“Transparency builds trust and credibility by openly sharing operations and financials.”

Many companies have learned this the hard way. For example, a financial company once shared that its AI system had a problem with bias. The company explained what went wrong and how they fixed it. People appreciated the honesty, and trust in the company grew. On the other hand, a healthcare group tried to hide a similar issue. When the truth came out, people lost trust and the company’s reputation suffered.

You can see how much people care about transparency in surveys:

What People Value | Percentage |
|---|---|
Data safety and transparency | |
Willingness to share data if they trust the company | 66% |

When you are open, you show that you care about your customers. You also stand out from other brands. Trusted brands often see more loyal customers and faster adoption of new products.

Ethics and Compliance

Being transparent is not just about trust. It is also about doing the right thing. Many rules and laws now require you to explain how your AI works. For example, in the European Union, the GDPR gives people the right to be told about automated decisions that significantly affect them, and the new AI Act says you must tell people when they are interacting with an AI system such as a chatbot. These laws aim to make sure AI is fair and does not harm anyone.

Ethical guidelines also say you should explain how your AI makes decisions. This helps people understand and feel safe. When you follow these rules, you protect your users and your business. You also show that you respect everyone’s rights and privacy.

Remember, transparency is not just a good idea. In many places it is the law, and everywhere it is the right thing to do.

Communicating AI Usage

When you talk about AI usage, you help people know what is real. People want to know if they are talking to AI or a person. You can build trust by being open about how you use AI in your work.

Clear Disclosure

You should always tell people when AI helps make content or choices. This is not just about rules. It shows you care about your audience and want a good relationship.

Here are some of the best ways to share this:

Use easy words to say when and how you use AI.

Put notes where people can see them, like at the top of a page or near AI content.

Do not make AI look like a person. Only list real people as authors if they checked or changed the content.

Tell how much of your content comes from AI. For example, you can say, “About 30% of this article was made with AI tools.”

Use both visible and hidden signs. Visible signs are labels, warnings, or watermarks. Hidden signs are things like metadata or digital signatures that experts can check.

Make rules for when to tell people about AI. If AI only made small changes, you may not need a big note. If AI wrote most of it, you should say so.
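To make the "how much came from AI" rule concrete, here is a minimal sketch that counts the share of words drafted by AI and picks a note to match. The `Segment` type, the function names, and the 5% and 50% thresholds are hypothetical choices for illustration, not an industry standard; the "AI-generated" and "AI-influenced" wording comes from the terminology suggested in this article.

```python
from dataclasses import dataclass
from typing import List, Optional

# Illustrative thresholds only (assumptions, not a standard):
# under 5% AI words -> no prominent note; 50% or more -> "AI-generated".
MINOR_THRESHOLD = 0.05
MAJOR_THRESHOLD = 0.5


@dataclass
class Segment:
    text: str
    ai_generated: bool  # True if an AI tool drafted this part


def ai_share(segments: List[Segment]) -> float:
    """Fraction of words that came from AI-drafted segments."""
    total = sum(len(s.text.split()) for s in segments)
    if total == 0:
        return 0.0
    ai_words = sum(len(s.text.split()) for s in segments if s.ai_generated)
    return ai_words / total


def disclosure_label(share: float) -> Optional[str]:
    """Choose a disclosure note based on how much of the content AI wrote."""
    if share < MINOR_THRESHOLD:
        return None  # small edits only: a big note may not be needed
    percent = round(share * 100)
    if share >= MAJOR_THRESHOLD:
        return f"AI-generated: about {percent}% of this article was written with AI tools."
    return f"AI-influenced: about {percent}% of this article was made with AI tools."


if __name__ == "__main__":
    article = [
        Segment("This intro was written by our editor.", ai_generated=False),
        Segment("This summary paragraph was drafted by an AI tool.", ai_generated=True),
    ]
    print(disclosure_label(ai_share(article)))
```

Counting words is only one possible measure. Some teams may prefer to count paragraphs or sections, but whatever you choose, apply it the same way every time so your notes stay comparable.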

Tip: Being honest about AI helps people feel safe and know what is going on. This can make them trust your brand more.

You can also use numbers to show you are being open. For example, a study found that 33.9% of a research paper’s words came from AI, and the writers showed which parts. This kind of detail helps people see what AI really did.

Here is a table that shows how researchers check and share AI use:

Aspect | Description |
|---|---|
When AI makes a big part of the content | |
Terminology | Use words like "AI-generated" or "AI-influenced" |
Human oversight | Make sure a person checks the AI’s work |
Feedback | Ask people what they think about your notes |

Clear notes are not just about saying you used AI. They give people enough information to understand how and why you used it.

Labeling AI Content

Labels are a big part of talking about AI usage. Labels help people spot AI content fast. This is important for stopping fake news and keeping your brand honest.

There are many ways to label AI content:

Visible labels: Add a tag like “AI-generated” or “Created with AI” at the top or bottom of the content.

Watermarks: Put a small mark or logo on pictures or videos made by AI.

Metadata: Add hidden tags that show where the content came from. This helps experts and websites track AI content.

Digital signatures: Use cryptographic signatures to prove where the content came from. This is good for news, ads, and important documents.
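The metadata and signature ideas above can be sketched in a few lines. This example attaches a hidden label record to a piece of content and lets anyone holding the key check that neither the content nor the label has changed. Real provenance standards such as C2PA use public-key signatures. This sketch swaps in a shared-secret HMAC from Python's standard library so it runs without extra packages, which means anyone who can verify a label could also forge one. The key, field names, and function names are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical key for illustration only. A real system would load it from a
# key-management service and would normally use a public-key signature instead.
SECRET_KEY = b"replace-with-a-real-secret"


def _mac(fields: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the label fields."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def make_label(content: str, tool: str, reviewed_by: Optional[str]) -> dict:
    """Build a metadata record describing how the content was produced."""
    record = {
        "label": "AI-generated",
        "tool": tool,
        "reviewed_by": reviewed_by,
        "sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }
    record["mac"] = _mac(record)
    return record


def verify_label(content: str, record: dict) -> bool:
    """Check the content hash, then the MAC over the metadata fields."""
    fields = {k: v for k, v in record.items() if k != "mac"}
    if fields.get("sha256") != hashlib.sha256(content.encode("utf-8")).hexdigest():
        return False  # the content changed after it was labeled
    return hmac.compare_digest(_mac(fields), record.get("mac", ""))
```

The visible "AI-generated" tag is still what most readers see. The record above is the hidden layer that websites and experts can check.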

Aspect | Description | Effectiveness and Challenges |
|---|---|---|
Clear objectives | Labels show how content was made and if it could trick people | Helps people trust the content |
Detection techniques | Use self-disclosure, machine learning, and crowdsourcing | Each way has good and bad points |
Digital signatures | Add safe info about where content started | Hard to fake, but needs many groups to agree |
Label design | Mix “AI origin” and “misleading risk” in labels | Works best for stopping fake news |
Indirect effects | Labels can change how people trust media | Must balance honesty and trust |

Note: Labels work best when they are easy to see and use simple words. If you hide labels or use hard words, people may not trust your content.

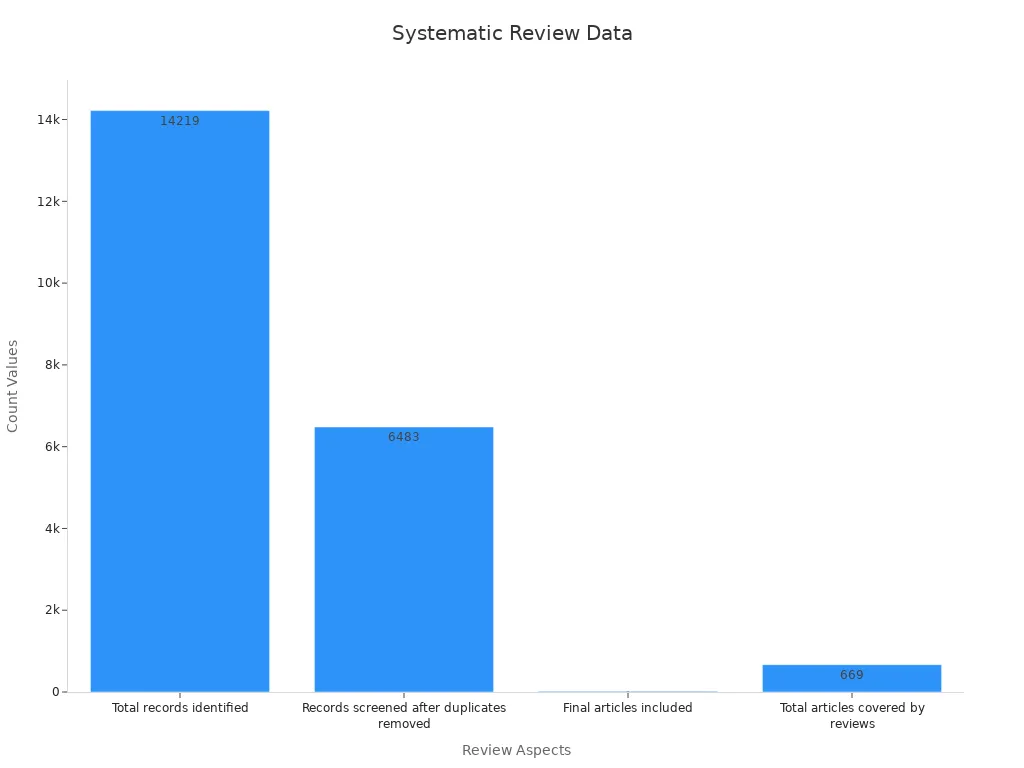

Big studies show that using both process labels (how content was made) and impact labels (if it could trick people) helps people make better choices. Here is a chart that shows how researchers check and study AI content:

When you label AI content, you help your team be careful. You remind everyone to check for mistakes, bias, or copying. This keeps your brand strong and your audience happy.

Remember: Talking about AI usage is not something you do just once. You need to keep updating your labels and notes as AI tools and rules change.

Human Oversight

Human-in-the-Loop

You might wonder how you can make sure AI works the way you want. The answer is simple: keep people involved. When you use a human-in-the-loop (HITL) approach, you let people check and guide what AI does. This helps you catch mistakes, spot bias, and make sure the AI acts fairly.

In many industries, experts say that human oversight is key. For example, in healthcare, doctors work with AI to read scans. The AI finds patterns fast, but the doctor makes the final call. This teamwork means fewer errors and better care for patients. You see the same thing in emergency rooms. AI helps sort patients quickly, but people use their judgment and empathy to decide what happens next.

Let’s look at some numbers that show why HITL matters:

Evidence Aspect | Description |
|---|---|
Training Data Efficiency | HITL needs less than half the training data to reach good accuracy. |
Model Uncertainty Understanding | HITL helps you see where the AI is unsure or lacks knowledge. |
Correlation with Human Ratings (Low Uncertainty) | When AI is confident, it matches human ratings 90% of the time. |
Correlation with Human Ratings (High Uncertainty) | When AI is unsure, HITL can flag these cases for review. |
Safeguards Against Bias and Errors | HITL reduces bias and mistakes, making AI more reliable. |

You can use HITL for quality checks, bias reviews, and to make sure your AI stays respectful and fair. This keeps your AI helpful and safe.
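One common way to put HITL into practice is to route by confidence: the AI handles cases where it is sure, and anything below a cutoff goes to a person, whose decision always wins. The sketch below shows that pattern. The 0.9 cutoff, the `Prediction` fields, and the function names are hypothetical, and real systems would tune the cutoff against their own review data.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical cutoff: predictions below it are sent to a person.
REVIEW_THRESHOLD = 0.9


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for its chosen label (0.0 to 1.0)
    reviewed_label: Optional[str] = None  # filled in by a human reviewer


def route(
    preds: List[Prediction], threshold: float = REVIEW_THRESHOLD
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into auto-accepted ones and a human review queue."""
    accepted = [p for p in preds if p.confidence >= threshold]
    review_queue = [p for p in preds if p.confidence < threshold]
    return accepted, review_queue


def record_review(pred: Prediction, human_label: str) -> bool:
    """Store the reviewer's decision; return True if it corrected the AI."""
    pred.reviewed_label = human_label
    return human_label != pred.label


def final_label(pred: Prediction) -> str:
    """A human decision, when present, always overrides the model."""
    return pred.reviewed_label if pred.reviewed_label is not None else pred.label
```

Keeping track of which reviews corrected the AI gives you a record of where it goes wrong, which you can use for bias reviews or future training.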

Clarifying Authorship

When you use AI to create content, you need to be clear about who did what. People trust content more when they know if a human or AI made it. If you show who wrote or edited something, you help your audience understand the message and trust your brand.

Adding clear authorship signals, like digital tags or metadata, lets people see who created the content and with what intent. Signed metadata also makes it easier to detect changes you did not approve, and it gives your audience a way to check whether the content is authentic.

Simple signals, like “Written by AI” or “Reviewed by [Name],” help most people make quick trust decisions.

Detailed trails, like a list of edits or digital signatures, help experts check the content’s history.
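A detailed edit trail can be as simple as an append-only log where each entry includes the hash of the one before it, so changing any past entry breaks the chain. Here is a minimal sketch of that idea. The `EditTrail` class and its fields are hypothetical, and a chain like this only proves tampering if you also store the latest hash somewhere others trust, for example in signed metadata.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class EditTrail:
    """Append-only log where each entry commits to the previous entry's hash."""

    entries: List[dict] = field(default_factory=list)

    def add(self, author: str, role: str, summary: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"author": author, "role": role, "summary": summary, "prev": prev_hash}
        entry["hash"] = _digest(entry)  # hash covers every field above
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the links are unbroken."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = EditTrail()
    trail.add("AI drafting tool", "generated", "First draft")
    trail.add("Jane Doe", "editor", "Rewrote the introduction")
    print(trail.verify())
```

The "role" field is where the simple signals meet the detailed trail: it records whether each step was generated by AI or written or reviewed by a person.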

When you clarify authorship, you support both your team and your audience. You show respect for creators and help everyone feel confident about what they read or watch. This is especially important as AI becomes a bigger part of how we share information.

Data and Accountability

Data Use and Privacy

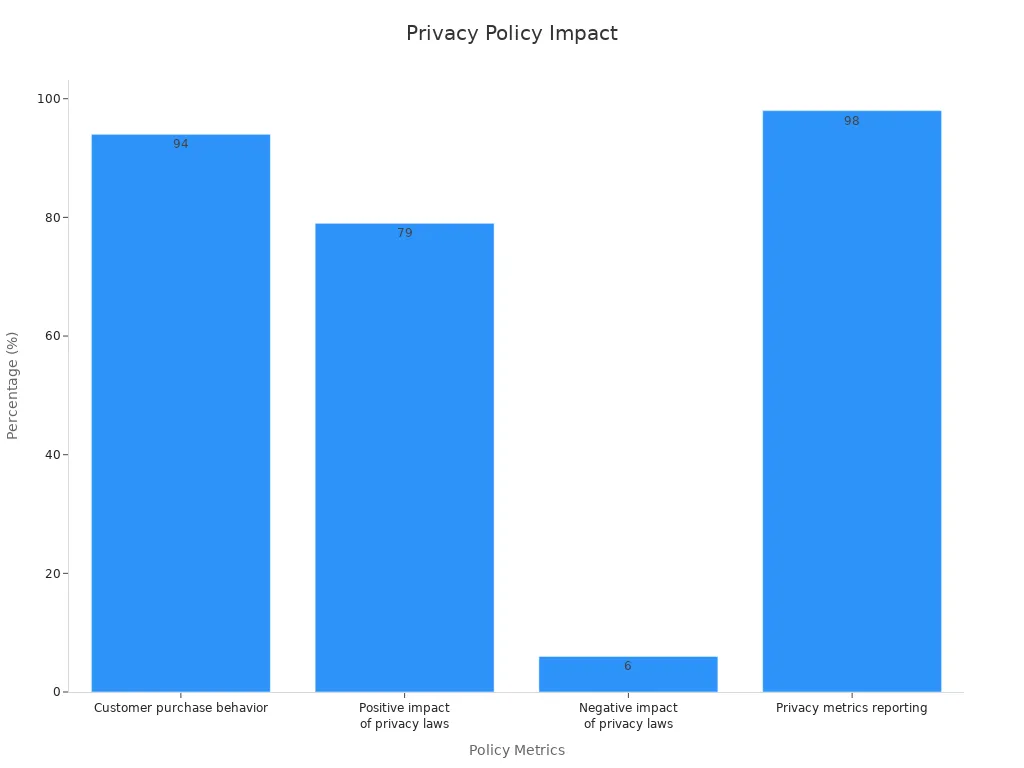

You want your data to be safe. When you use a service, you trust the company to protect your information. Good privacy rules do more than follow laws: they help people feel safe and respected. Caring about privacy also pays off for companies. Over 70% of surveyed professionals say their privacy efforts bring significant benefits to their business, and 94% say customers will not buy if their data is not properly protected.

Here is how privacy helps trust and business:

Business Impact Metric | Statistic / Finding |
|---|---|
Benefits from privacy efforts | Over 70% see significant benefits |
Customer purchase behavior | 94% say customers won’t buy without proper data protection |
Positive impact of privacy laws | 79% report positive effects |
Negative impact of privacy laws | Only 6% see negative effects |
Privacy metrics reporting | 98% report privacy metrics to their board |
Privacy program budget | $2.5 million+ average annual budget by 2024 (Gartner) |

Privacy is not just about following rules. It changes how people see your brand. In fact, 76% of people will not buy from a company they do not trust with their data. If you show clear privacy rules and explain why you collect data, people worry less. They trust you more and feel better about your service.

Tip: Use easy words and pictures in your privacy rules. This helps people understand and trust you.

Governance and Explainability

Good AI needs strong rules and clear roles. You want to know who is in charge and how decisions are made. Data governance gives you this plan: it sets up teams, rules, and checks to keep AI fair and safe. For example, many companies use a “Three Lines of Defense” model. The teams that build and run AI own its risks, separate risk and compliance teams oversee them, and internal audit independently checks both, so nothing gets missed.

Here are some ways groups make AI more open and easy to understand:

Ethics boards check high-risk AI projects.

Data science teams watch every AI system and its data.

Contracts with outside vendors require clear testing and reporting.

Centers of excellence share good ideas with all teams.

New laws also ask for more openness. In Europe, the AI Act requires companies to document and share details about their AI models, such as what data they were trained on and how well they perform. In the U.S., some federal guidance and state laws ask for impact assessments of AI used in high-stakes areas such as hiring, lending, and healthcare.

When you set clear rules and explain how your AI works, people trust your technology more. You can also fix problems fast and keep your AI safe for everyone.

Continuous Improvement

Feedback and Adaptation

You can’t just set up your AI and forget about it. If you want your AI to stay fair and clear, you need to keep improving it. Continuous improvement means you always look for ways to make your AI better. You watch how it works, listen to what people say, and make changes when needed. This helps your AI stay honest and useful.

Many big companies use feedback to make their AI smarter and more transparent.

Nike analyzes customer feedback from different channels and saw a 15% jump in how much people liked its new products.

Netflix uses real-time feedback from millions of viewers. About 80% of shows people watch come from AI suggestions, which keeps users happy.

Starbucks uses AI to read customer reviews and feelings. They improved customer satisfaction by 30% in just six months.

You can use these feedback tools too:

Short and regular feedback cycles with different people

Surveys that are easy to fill out on your phone

Mixing numbers and stories in your feedback

Making sure feedback is part of every customer chat

Tip: When you act on feedback, you show people you care about their opinions. This builds trust and helps your AI stay on track.

Continuous improvement is not a one-time thing. You need to keep checking, learning, and updating your AI. This way, your AI stays fair, open, and ready for new challenges.

Evolving Standards

AI rules and standards change all the time. You need to keep up if you want to stay trusted. Laws like the GDPR and the EU AI Act, along with voluntary frameworks such as the OECD AI Principles, all call for openness about how your AI works. These rules help everyone know what to expect and keep AI safe for everyone.

Here are some key standards you should know:

OECD AI Principles push for trustworthy and explainable AI.

The U.S. GAO’s AI Accountability Framework describes practices that help federal agencies and other organizations stay accountable for their AI.

The EU AI Act sets rules for how AI gets built and used in Europe.

Note: High-quality data and clear rules help your AI stay reliable and fair. You need to balance being open with keeping your AI creative and useful.

By following these changing standards, you show your audience that you care about doing the right thing. You also make sure your AI stays safe, fair, and trusted as the world changes.

When you communicate clearly about your AI usage, people trust you more and see your brand as honest. Good communication can also help your business do better: you might see more revenue, safer ads, and customers who stick around.

Useful KPIs to track include customer lifetime value, conversion rate, and viewable impressions.

Outcome-based KPIs help show whether being open is helping your business grow.

Rules and standards change often, so pay attention and keep improving how you share information about your AI. Take some time to review what you do now, and look for ways to make your AI communication even stronger.

FAQ

How do I tell my audience when I use AI?

You can add a clear note or label. Place it where people will see it, like at the top of your page. Simple words work best.

Why should I label AI-generated content?

Labels help people know what is real. They build trust and stop confusion. Your audience feels safer when you are honest.

What if I only use AI for small edits?

If AI only fixes spelling or grammar, a big label is not needed. For bigger changes, you should tell your readers. Use your best judgment.

Can I use both visible and hidden labels?

Yes! You can use a tag like “AI-generated” for everyone to see. You can also add metadata or digital signatures for experts to check.
