Avoiding Bias in AI-Written Content

Avoiding bias in AI-written content means making sure that content is fair and accurate. You can reduce bias by writing careful prompts, checking the results, and using many types of data. Recent studies show that AI tools often write fewer words about women and can show racial bias. This can harm both businesses and individuals.

You should test prompts, fix them, and compare AI answers to trusted sources. Experts found that good prompt design and checking often help lower bias and make AI content better.

Key Takeaways

Give clear and specific prompts so the AI gives fair answers.

Use many kinds of data to help the AI treat everyone the same.

Always check and edit AI content to find and fix bias before you share it.

Use specialized tools and human reviewers to find and reduce bias in AI writing.

Keep checking AI regularly to catch new bias and make it fairer over time.

Understanding Bias

What Is Bias

Bias in AI means that the system does not treat all people or ideas fairly. You can see bias when an AI gives better results for one group and worse for another. Bias can come from many places, such as the data used to train the AI or the way the AI is built.

Selection bias happens when the training data does not include enough different types of people or situations. For example, some facial analysis tools are less accurate for darker-skinned women because their training data included too few images of them.

Group attribution bias appears when the AI makes general rules about a whole group based on a few examples. For instance, a hiring tool might favor people from certain schools, even if others are just as qualified.

Implicit bias comes from hidden ideas or cultural habits. An AI might link women to housekeeper roles, even if you do not want it to.

Note: Bias in AI means the system does not match a fair standard. Sometimes, bias helps find patterns, but it can also lead to unfair results. Bias is not always the same as discrimination, but it can cause it.

You can also find bias in three main areas:

Modeling: Bias enters when you design the algorithm.

Training: Bias comes from the data you use.

Usage: Bias shows up when the AI is used in the wrong way or in a context it was not designed for.

Why It Matters

Bias in AI affects fairness and accuracy. When you use biased data, the AI can make wrong choices. For example, in predictive policing, arrest records often reflect who gets policed more, not who commits more crimes. A model trained on these records can repeat that pattern, which makes its fairness hard to judge.

Studies show that when you explain how AI makes decisions, people feel the system is fairer. If people think the AI is unfair, they trust it less and may even take legal action. Unfair AI can hurt a company’s reputation and lower how much people accept its decisions.

You need to care about bias because it shapes how people see and use AI. Fair AI builds trust and helps everyone get better results.

Sources of Bias

Data Issues

You often see bias in AI because of problems with the data. Data bias happens when the information used to train AI does not show the real world. This can lead to unfair results. Many types of data problems can cause bias:

| Data Quality Issue | Description | Impact on AI Bias |
|---|---|---|
| Biased Data | Data may not represent true groups or may reflect old human biases. | AI gives unfair results because it learns from bad examples. |
| Unbalanced Data | Some groups have more data than others. | AI favors the bigger group and ignores the smaller one. |
| Data Silos | Data is kept separate in different places. | AI cannot learn from all the data, so bias risk goes up. |
| Inconsistent Data | Records do not match or have errors. | AI makes more mistakes and becomes less fair. |
| Data Sparsity | Not enough data points for some groups. | AI cannot learn well, so it gives poor answers for those groups. |
| Data Labeling Issues | Labels are wrong or not the same everywhere. | AI learns the wrong things and makes more errors. |

You can see these problems in real life. For example, some healthcare AI tools do not work well for women because the data did not include enough female patients. This shows how data issues can lead to unfair AI decisions.
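A quick first check for unbalanced or sparse data is to measure how much of your dataset each group makes up. The sketch below assumes your records are Python dictionaries with a group field; the field name `sex`, the sample data, and the 30% threshold are illustrative assumptions, not a standard.

```python
from collections import Counter


def group_shares(records, key):
    """Return each group's share of the dataset for the attribute `key`."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, min_share):
    """List groups whose share is below `min_share` (a threshold you choose)."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical patient records: 8 male and 2 female patients.
patients = [{"sex": "male"}] * 8 + [{"sex": "female"}] * 2
shares = group_shares(patients, "sex")
print(shares)                                   # {'male': 0.8, 'female': 0.2}
print(underrepresented(shares, min_share=0.3))  # ['female']
```

A low share does not prove a model will be biased, but it shows you where to look first.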

Model Limitations

AI models have limits that can cause bias. You need to check how well the model works for different groups. Some models use fairness metrics like demographic parity or equalized odds to measure bias. Others use human reviewers to spot hidden problems. Here is a quick look:

| Evidence Type | Description | Example/Application |
|---|---|---|
| Quantitative Metrics | Use numbers to check fairness for different groups. | Demographic parity, equalized odds, disparate impact in finance. |
| Qualitative Methods | Use people to review and test for bias. | Reviewers find gender or racial bias in AI content. |
| Continuous Monitoring | Track fairness over time to catch new bias. | Streaming analytics spot bias changes in live AI systems. |
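The quantitative metrics in the table can be computed from a model's yes/no decisions. This is a minimal sketch in plain Python, assuming binary labels (1 = positive outcome) and two groups; the group names and data are made up. Demographic parity compares selection rates, disparate impact is the ratio of those rates, and equalized odds compares true positive and false positive rates.

```python
def rate(values):
    """Fraction of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def group_rates(y_true, y_pred, groups, group):
    """Selection rate, true positive rate, and false positive rate for one group."""
    rows = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
    return {
        "selection": rate([p for _, p in rows]),
        "tpr": rate([p for t, p in rows if t == 1]),
        "fpr": rate([p for t, p in rows if t == 0]),
    }


def fairness_report(y_true, y_pred, groups, reference, protected):
    """Compare a protected group with a reference group (protected minus reference)."""
    ref = group_rates(y_true, y_pred, groups, reference)
    prot = group_rates(y_true, y_pred, groups, protected)
    sel_ref = ref["selection"]
    return {
        "demographic_parity_diff": prot["selection"] - sel_ref,
        "disparate_impact": prot["selection"] / sel_ref if sel_ref else float("nan"),
        "tpr_diff": prot["tpr"] - ref["tpr"],  # equalized odds, part 1
        "fpr_diff": prot["fpr"] - ref["fpr"],  # equalized odds, part 2
    }


# Made-up decisions for two groups of four people each.
groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
report = fairness_report(y_true, y_pred, groups, reference="a", protected="b")
print({name: round(value, 2) for name, value in report.items()})
# {'demographic_parity_diff': -0.5, 'disparate_impact': 0.33, 'tpr_diff': -0.5, 'fpr_diff': -0.5}
```

Differences near 0 and a disparate impact near 1 suggest parity. A common rule of thumb from US employment guidance, the four-fifths rule, treats a disparate impact ratio below 0.8 as a warning sign. The sign convention (protected minus reference) is a choice made for this sketch.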

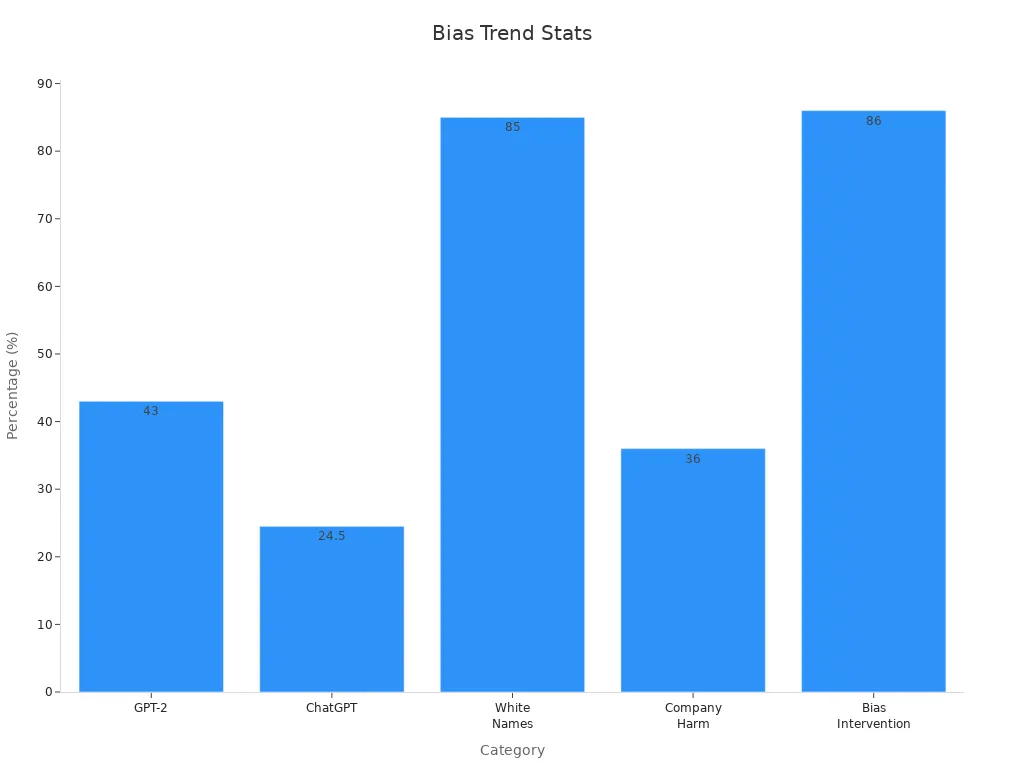

One study found that some AI models, such as ChatGPT, showed less bias and sometimes refused to answer biased prompts. Other models still showed gender and racial bias, especially in generated news articles and in hiring tools. This means you must watch for bias in both the data and the model.

Prompt Design

The way you write prompts can also introduce or reduce bias. Research shows that clear, careful prompts lead to higher-quality results, so learning how to write better prompts helps the AI avoid bias. Your prompt design skills therefore play a big role in making AI content fair for everyone.

Avoiding Bias: Practical Steps

Prompt Design

You can help AI give fair answers by writing better prompts. Clear, specific prompts steer the AI toward fairer output. For example, you can ask the AI to answer as an expert or to avoid stereotypes. If your prompt includes examples, give examples from both sides (for instance, both positive and negative cases) so the AI does not lean toward one side. Shuffling the order of the examples also helps. These steps make the AI less likely to copy bias from its training data.
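If your prompt includes labeled examples, you can balance and shuffle them with a few lines of code before sending the prompt. This is a sketch only: the `Text:`/`Label:` layout, the sentiment labels, and the function name `build_few_shot_prompt` are illustrative assumptions, and no specific AI service is called.

```python
import random


def build_few_shot_prompt(examples, query, seed=None):
    """Keep the same number of examples per label, shuffle them, then add the query."""
    by_label = {}
    for text, label in examples:
        by_label.setdefault(label, []).append((text, label))
    # Trim every label to the size of the smallest one so no side dominates.
    per_label = min(len(items) for items in by_label.values())
    balanced = [item for items in by_label.values() for item in items[:per_label]]
    # Shuffle so the model does not pick up a pattern from example order.
    random.Random(seed).shuffle(balanced)
    blocks = [f"Text: {text}\nLabel: {label}" for text, label in balanced]
    blocks.append(f"Text: {query}\nLabel:")
    return "\n\n".join(blocks)


# Hypothetical labeled examples: three positive, two negative.
examples = [
    ("The nurse explained the treatment clearly.", "positive"),
    ("The engineer fixed the bug quickly.", "positive"),
    ("The manager gave helpful feedback.", "positive"),
    ("The support agent ignored my question.", "negative"),
    ("The driver arrived an hour late.", "negative"),
]
print(build_few_shot_prompt(examples, "The teacher stayed late to help students.", seed=7))
```

Trimming to the smallest label keeps the prompt balanced at the cost of dropping some examples; if you need every example, write more for the smaller side instead.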

Researchers report that prompt debiasing works well: if you tell the AI not to rely on biased reasoning, it gives more balanced answers. Adding context such as time or place to your prompts also helps the AI stay fair. Using these prompt design ideas can make AI-written content better and fairer.
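A debiasing instruction and optional time or place context can be added with a simple template. The instruction wording and the function name `debiased_prompt` below are hypothetical examples, not a tested or official phrasing; adjust the wording and test it against your own AI tool.

```python
# Hypothetical instruction text; refine it by testing with your own AI tool.
DEBIAS_INSTRUCTION = (
    "Answer as a subject-matter expert. Do not rely on stereotypes about "
    "gender, race, age, or other group identity. If the question cannot be "
    "answered without such assumptions, say so."
)


def debiased_prompt(question, time_context=None, place_context=None):
    """Put the debiasing instruction first, then any context, then the question."""
    parts = [DEBIAS_INSTRUCTION]
    if time_context:
        parts.append(f"Time period: {time_context}.")
    if place_context:
        parts.append(f"Location: {place_context}.")
    parts.append(f"Question: {question}")
    return "\n".join(parts)


print(debiased_prompt("Describe a typical software engineer.", place_context="Canada"))
```

Placing the instruction before the question is one reasonable layout; whether it works better than other placements depends on the model, so compare versions.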

Tip: Always check your prompts before you use them. Small changes can really help avoid bias.

Review & Edit

You should always check and edit AI-written content. Blind testing is a good way to find bias: you hide who the people or groups are and see whether the AI treats everyone the same. Comparing the content with trusted sources helps you find mistakes or unfair passages. Checklists that cover fairness, accuracy, and inclusivity can guide your review.
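A closely related check, often called counterfactual testing, keeps a prompt identical except for the group term and compares the answers. The sketch below assumes you can wrap your AI tool in a function that takes a prompt and returns text; `stub_generate` is a made-up, deliberately biased stand-in so the example runs, and word count is just one simple metric (it echoes the finding that AI often writes fewer words about women).

```python
def counterfactual_prompts(template, terms):
    """Fill the same template with each group term so only the group changes."""
    return {term: template.format(person=term) for term in terms}


def compare_outputs(generate, template, terms, metric):
    """Send every variant to `generate` and score each answer with `metric`."""
    prompts = counterfactual_prompts(template, terms)
    return {term: metric(generate(prompt)) for term, prompt in prompts.items()}


def word_count(text):
    return len(text.split())


# Stand-in for a real AI call, deliberately biased for demonstration.
def stub_generate(prompt):
    if "woman" in prompt:
        return "She is a capable engineer."
    return "He is a capable engineer who leads complex projects."


scores = compare_outputs(
    stub_generate,
    "Write a short reference for a {person} who works as an engineer.",
    ["man", "woman"],
    word_count,
)
print(scores)  # {'man': 9, 'woman': 5}
gap = max(scores.values()) - min(scores.values())
print(gap)  # 4
```

A real test would use many templates and several runs per variant, since AI output varies from run to run.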

A well-known healthcare case showed that reviewing and correcting an AI model can make its results much fairer. Researchers estimated that fixing the model would raise the share of Black patients selected for extra care from 17.7% to 46.5%. This shows that careful checking and editing can substantially reduce bias.

Note: Regular checks and edits help you find new problems as AI and data change.

Diverse Data

Using diverse data is very important to avoid bias. If your data comes from only one group, the AI will not treat everyone fairly. You need to collect data from many people and situations. This helps the AI learn about the real world, not just a small part.

Studies show that high-quality, diverse datasets are key to fairness. When you use data from many backgrounds, you lower the risk of bias. Some programs, like the NIH All of Us Research Program, aim to collect data from one million or more people to make AI fairer and more accurate. You can also use synthetic data or rebalance your datasets to fill in gaps.
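One simple way to rebalance a dataset is random oversampling, which copies records from smaller groups until each group is the same size. This is a basic stand-in for the synthetic-data methods mentioned above: it adds no new information, so collecting more real data is still better. The record layout and the field name `sex` are assumptions for illustration.

```python
import random
from collections import Counter, defaultdict


def oversample(records, key, seed=0):
    """Duplicate random records from smaller groups until every group matches the largest."""
    by_group = defaultdict(list)
    for record in records:
        by_group[record[key]].append(record)
    target = max(len(items) for items in by_group.values())
    rng = random.Random(seed)
    balanced = []
    for items in by_group.values():
        balanced.extend(items)
        # Draw (with replacement) just enough extra records to reach the target size.
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced


patients = [{"sex": "male"}] * 8 + [{"sex": "female"}] * 2
balanced = oversample(patients, "sex")
print(dict(Counter(record["sex"] for record in balanced)))  # {'male': 8, 'female': 8}
```

Because oversampling repeats the same few records, a model can overfit to them; check results on held-out data from the smaller group.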

A review of AI research shows that diversity in data, people, and process gives better results. When you focus on diversity and inclusion, you build trust and make your AI systems stronger.

Bias Detection Tools

You can use specialized tools to find and fix bias in AI-written content. Tools like Textio and OpenAI's API help you check for unfair words or patterns. Frameworks and toolkits such as AI Fairness 360, TEHAI, and DECIDE-AI also help you avoid bias. These tools combine quantitative metrics with human review to find problems.
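At their simplest, word-level checkers scan text against a list of terms and suggest alternatives. The sketch below is not how Textio or any other product works internally; the word list `FLAGGED_TERMS` is a tiny, hypothetical example, and real tools rely on much larger, tested lexicons plus context.

```python
import re

# Hypothetical, illustrative word list mapping flagged terms to suggestions.
FLAGGED_TERMS = {
    "chairman": "chairperson",
    "manpower": "workforce",
    "salesman": "salesperson",
    "rockstar": "skilled professional",
}


def scan_text(text, terms=FLAGGED_TERMS):
    """Return (position, word as written, suggestion) for each flagged word."""
    findings = []
    for match in re.finditer(r"[A-Za-z]+", text):
        word = match.group().lower()  # compare case-insensitively
        if word in terms:
            findings.append((match.start(), match.group(), terms[word]))
    return findings


print(scan_text("We need more manpower and a new Chairman."))
# [(13, 'manpower', 'workforce'), (32, 'Chairman', 'chairperson')]
```

A word list only catches obvious terms; it cannot see bias in tone, length, or which facts are included, which is why human review is still needed.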

It is important to keep checking for bias. Over time, data can change and new bias can show up. By using bias detection tools often, you keep your AI content fair and current. Many experts say you should keep checking and work with others to share data and make models better.

Reminder: No tool is perfect. Always use tools and human judgment together for the best results.

Avoiding Bias in AI-written content takes work at every step. You need to make good prompts, check and fix content, use diverse data, and use bias detection tools. By following these steps, you help make AI content fair, correct, and inclusive.

Best Practices

Guidelines

You need clear guidelines to make AI-written content fair and transparent. These rules help you build trust and avoid mistakes. Many experts and laws agree on some key principles:

Transparency: You should explain how your AI works and how it makes decisions. This helps people understand and trust your content.

Fairness: You must treat all groups equally. Use fairness checks and audits to find and fix bias.

Accountability: You should take responsibility for your AI’s actions. If something goes wrong, you need to fix it.

Privacy: You must protect people’s data and follow privacy laws.

Many jurisdictions, such as the European Union, have laws like the GDPR and the AI Act that set transparency and fairness requirements for AI systems. Research shows that when you are open about your AI, people trust it more. Fairness audits and bias checks are now common in places like Canada and the EU. You can use tools like AI Fairness 360 to help with these tasks.

Tip: Write down your rules for fairness and transparency. Share them with your team and update them as needed.

Ongoing Monitoring

You cannot stop at setting rules. You need to keep checking your AI for bias and fairness. Bias can show up over time, even if you start with good data. Regular audits help you catch new problems early.

Many companies use statistical tests, like hypothesis testing, to find bias in their AI. For example, some hiring tools have favored men because of biased data. Loan and healthcare algorithms have also shown unfair results for minorities. These cases show why you must keep watching your AI.
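As an example of such a test, a two-proportion z-test checks whether two groups' selection rates differ by more than chance would explain. This is a minimal sketch using only the standard library; the hiring numbers are hypothetical, and the normal approximation assumes reasonably large groups (for small groups, an exact test such as Fisher's is more suitable).

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided z-test for a difference between two selection rates."""
    p_a = successes_a / total_a
    p_b = successes_b / total_b
    # Pooled rate under the assumption that both groups share one true rate.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical hiring tool: 60 of 100 men and 40 of 100 women advanced.
z, p = two_proportion_z_test(60, 100, 40, 100)
print(round(z, 2), round(p, 4))  # 2.83 0.0047
```

A small p-value says the gap is unlikely to be random noise; it does not explain why the gap exists, so follow up by reviewing the data and features.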

A good plan includes:

Run regular bias audits.

Collect feedback from users.

Monitor your AI’s impact.

Be ready to change your AI if you find problems.

Repeat these steps often.
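The audit step can be automated by computing the same fairness measure for each time period and flagging periods that fall below a threshold. This sketch assumes you log, per period, how many people in a reference group and a protected group received a positive outcome; the 0.8 threshold follows the common four-fifths rule of thumb from US employment guidance, and the `Batch` records and numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class Batch:
    label: str           # the period, e.g. "week 1"
    selected_ref: int    # positive outcomes in the reference group
    total_ref: int
    selected_prot: int   # positive outcomes in the protected group
    total_prot: int


def impact_ratio(batch):
    """Protected group's selection rate divided by the reference group's."""
    ref_rate = batch.selected_ref / batch.total_ref
    prot_rate = batch.selected_prot / batch.total_prot
    return prot_rate / ref_rate if ref_rate else float("nan")


def audit(batches, threshold=0.8):
    """Labels of periods whose impact ratio falls below the threshold.

    Periods with no reference-group selections give NaN and are not flagged,
    so review them separately.
    """
    return [b.label for b in batches if impact_ratio(b) < threshold]


history = [
    Batch("week 1", 50, 100, 45, 100),
    Batch("week 2", 50, 100, 35, 100),
]
print(audit(history))  # ['week 2']
```

Running this on a schedule and sending flagged periods to a human reviewer covers the "run audits" and "be ready to change" steps of the plan.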

A review of AI systems found that data gaps, a lack of diversity, and hidden patterns can cause bias. Ongoing checks and updates help you fix these issues and keep your AI fair.

Remember: Bias can return as data and society change. Stay alert and keep improving your AI systems.

You can take concrete steps to make AI content fair. Start by using clear prompts and checking your results. Choose data from many groups. Use tools to spot bias. Keep watching for new problems. Avoiding bias means staying alert, sharing your process, and including everyone. Stay informed about AI ethics, and put these best practices into action to help build trust in AI.

FAQ

What is the easiest way to spot bias in AI content?

You can look for words or ideas that favor one group over another. Compare the AI’s answers to trusted sources. If you see unfair patterns, you have likely found bias.

How often should you check AI-written content for bias?

You should review AI content every time you use it. Regular checks help you catch new problems. Bias can appear as data changes.

Can you remove all bias from AI content?

You cannot remove all bias, but you can lower it. Use diverse data, clear prompts, and regular reviews. These steps help you make AI content as fair as possible.

What tools help you find bias in AI writing?

You can use tools like Textio, OpenAI’s API, or AI Fairness 360. These tools scan your content for unfair words or patterns. Always combine tools with your own careful review.
