Auditing AI Content for Brand Safety

Brands face major challenges because AI content spreads rapidly online. Without proper auditing of AI content, false information can circulate, biases can worsen, and reputations can be damaged.

AI deepfakes and fabricated conversations can manipulate people's emotions and beliefs, posing an even greater threat to vulnerable groups.

When systems rely on biased data, they amplify unfairness in employment, healthcare, and financial sectors.

Lack of transparency in AI decisions makes it difficult to assign responsibility, increasing overall risk.

Effective auditing of AI content requires a clear strategy and works best when humans and machines collaborate.

Key Takeaways

Use both AI tools and human experts to check AI content for brand safety. AI works fast, but people catch hidden problems.

Set clear rules and train teams regularly to spot risks and keep content honest and fair.

Check AI content often to find and fix mistakes before they harm the brand or customers.

Choose trustworthy AI partners who protect data and follow safety rules.

Stay ready for new challenges like deepfakes by updating tools, rules, and training to keep trust strong.

Brand Safety and AI

Defining Brand Safety

Brand safety is about keeping a brand’s reputation safe. Brands do this by making sure their content does not show up next to bad or harmful things. With AI, brand safety is more than just blocking certain words. It means looking at the whole message and what it really means. Some platforms use smart tools to check not only the words but also the feeling and reason behind them. This helps brands not block good content, like news about climate change or health.

Note: Human moderators are very important. They look at content that AI is not sure about. They help find cultural and local problems that machines might not see.

The table below explains how experts talk about and check brand safety in AI:

| Aspect | Description | Examples / Metrics |
|---|---|---|
| Definition of Brand Safety | Rules for what content is okay, what it means, and how it sounds | Rules for hate speech, fake news, and legal things |
| Metrics to Measure Safety | How well AI finds problems, how fast it works, and if users are happy | Watching for mistakes, quick action on risks |
| Contextual Understanding | AI learns to spot sarcasm, irony, and sneaky harmful messages | Fewer errors when blocking content |
| Human-in-the-Loop Moderation | Experts check flagged content, keep records, and use AI tools | Better handling of hard or sensitive cases |
| Example Platform | AI tools for checking text, pictures, and videos, and watching in real time | Brand safety on many platforms at once |

AI’s Impact on Brand Safety

AI changes how brands keep things safe online. AI can look at lots of content very fast. It can find dangers that people might not see. Studies show that when people trust AI and think it is accurate, they also trust the brand more. This trust helps people feel safe and loyal to the brand.

Generation Z uses digital tools all the time. They care a lot about how brands use AI. Brands that use AI in a good way can earn trust from this group. When brands use both AI’s speed and human thinking, they can protect their name and keep people safe.

Key Risks of AI Content

Misinformation

AI-made content can spread wrong information very fast. Studies say fake pictures and videos on social media got over 1.5 billion views in less than a year. Many of these were used in political ads or deepfakes, so people could not tell what was real. AI makes new content faster than people can check it, so mistakes and lies grow quickly. Fact-checkers found about 80% of false claims use pictures or videos, and AI-made images are now a big part of these. As AI gets better, fake things are harder to find, and this can make people stop trusting brands.

Bias and Negative Narratives

AI systems learn from data that can have hidden bias. When brands use these systems, they might share unfair or bad messages. Some people use AI to copy brands and make fake posts or videos that hurt the brand’s name. AI can also make mixed messages that confuse customers. Companies should teach workers to spot these problems and use AI tools to find off-brand words or harmful stories. Giving someone the job to check content helps lower these risks.

Data Privacy

AI tools use lots of data, which can include private company or customer info. Studies warn that AI marketing can cause data leaks, people getting into data they should not, and using private info in the wrong way. These problems can lead to fines, lawsuits, and people not trusting the company. The table below shows new trends and numbers about data privacy risks:

| Category | Key Findings / Numbers |
|---|---|
| AI Incident Increase | |
| Public Trust Decline | Trust in AI companies fell from 50% in 2023 to 47% in 2024 |
| Regulatory Activity | 59 new AI rules made in the U.S. in 2024 |
| Types of AI Risks Identified | Privacy problems, bias, wrong information |
| Consequences of Risks | Fines, lawsuits, and damage to reputation |

Scale and Complexity

AI can look at huge amounts of data and do jobs fast, so audits are quicker and more accurate. But the size and difficulty of AI content bring new problems. Experts need to look at what AI finds, fix data problems, and handle bias in the system. Security and privacy worries make things even harder. Watching in real time and using smart tools helps, but AI cannot take the place of human thinking. Brands need to use both machines and experts to handle these risks well.

Auditing AI Content

Auditing AI content needs a clear plan to keep brands safe. This helps companies follow rules and protect their name. The process combines clear rules, review by both AI and people, detection tools, and strong data security. Each part helps make sure content is safe and people can trust it.

Policy and Guidelines

Every company should make simple rules for auditing AI content. These rules show what is okay and what is not. Experts suggest these steps:

Find out which AI tools workers use and see if they use private data.

Check AI-made content often to find rule or ethics problems.

Make a risk chart to look at risky AI work before sharing it.

Set up checks inside the company to make sure AI content is good.

Teach workers with real examples, like making ads or money reports.

Tell workers how AI uses data and what can go wrong with big language models.

Let workers help check AI work and talk about how to lower risks.

Use lessons that teach about real AI risks, and remind staff that private modes do not always keep data safe.

Tip: Big banks and hospitals use these steps to stop big mistakes and keep private info safe.

Good rules help everyone know what to do when auditing AI content and help stop mistakes.
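
The risk chart step above can be sketched in a few lines of Python. This is only a rough example: the 1-to-3 scales, the extra point for private data, the score cutoffs, and the names `AIUseCase`, `risk_score`, and `review_level` are all made-up assumptions, not a standard. A real policy would set its own levels.

```python
from dataclasses import dataclass

# Hypothetical 1-3 scales; a real policy would define its own levels.
LIKELIHOOD = {"low": 1, "medium": 2, "high": 3}
IMPACT = {"low": 1, "medium": 2, "high": 3}


@dataclass
class AIUseCase:
    name: str
    likelihood: str          # chance the AI output contains a problem
    impact: str              # damage if the problem reaches the public
    uses_private_data: bool  # True if the tool sees private data


def risk_score(case: AIUseCase) -> int:
    """Likelihood times impact, plus one point when private data is involved."""
    score = LIKELIHOOD[case.likelihood] * IMPACT[case.impact]
    return score + (1 if case.uses_private_data else 0)


def review_level(case: AIUseCase) -> str:
    """Map a score to an illustrative review requirement (assumed cutoffs)."""
    score = risk_score(case)
    if score >= 6:
        return "expert review before release"
    if score >= 3:
        return "editor review before release"
    return "spot check"


if __name__ == "__main__":
    cases = [
        AIUseCase("social media ad copy", "medium", "medium", False),
        AIUseCase("financial report summary", "medium", "high", True),
    ]
    for case in cases:
        print(f"{case.name}: score {risk_score(case)}, {review_level(case)}")
```

Scoring each AI use case the same way makes it easier to decide which work needs an expert before it is shared.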

AI and Human Review

AI can look at lots of content fast, but people are still needed. The best way is to use both AI and people for auditing AI content. AI can find easy problems, but people check for truth, style, and meaning.

AI-made content can lack natural wording, logical order, and a sense of what readers like.

People fix mistakes, add details, and make sure the content sounds like the brand.

Studies say AI is fast for first drafts, but people make the content special and honest.

For example, a big store uses AI to find risky content, but editors check each one before posting. This helps catch things AI misses, like jokes or culture hints.

Note: The best way is to use AI’s speed and people’s skills together. This makes sure audits of AI content are both correct and fair.

Detection Tools

Detection tools are important for auditing AI content. These tools help find fake, unfair, or AI-made content. The table below lists some top detection tools and what they do well:

| Detection Tool | Validated Metrics | Key Findings |
|---|---|---|
| GLTR | | Good at finding AI code; tested with numbers. |
| GPTZero | Recall, Precision, F1, Accuracy, AUC | Not as good with code; sometimes not correct. |
| Sapling AI Detector | Recall, Precision, F1, Accuracy, AUC | Works well with normal words; not as good with code. |
| GPT-2 Detector | Recall, Precision, F1, Accuracy, AUC | Not great with many models or code. |
| DetectGPT | Recall, Precision, F1, Accuracy, AUC | Uses a special way to check; has trouble with code. |

Some companies use their own AI tools to find fake facts or wrong sources in school papers. These tools get tested many times to make sure they work for different topics. But people still need to check the results and find small problems.

Tip: Use more than one tool and have people check too for the best results when auditing AI content.
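
The metrics named in the table (recall, precision, F1, and accuracy) can be worked out from a small sample that people have already labeled. The sketch below shows the arithmetic. The function name `detector_metrics` and the sample data are made up for illustration, and it does not call any of the tools listed above.

```python
def detector_metrics(predicted: list[bool], actual: list[bool]) -> dict[str, float]:
    """Compare detector flags with human labels (True means AI-made)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")

    pairs = list(zip(predicted, actual))
    tp = sum(p and a for p, a in pairs)          # flagged, and really AI-made
    fp = sum(p and not a for p, a in pairs)      # flagged, but human-written
    fn = sum(a and not p for p, a in pairs)      # missed AI-made content
    tn = sum(not p and not a for p, a in pairs)  # correctly left alone

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


if __name__ == "__main__":
    # Made-up sample: what a detector said vs. what human reviewers decided.
    flags = [True, True, False, False, True]
    labels = [True, False, False, True, True]
    for name, value in detector_metrics(flags, labels).items():
        print(f"{name}: {value:.2f}")
```

Low precision means the tool flags too much human writing. Low recall means it misses AI-made content. Running the same check for each tool on the same sample gives a fair way to compare them.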

Data Security

Data safety is very important for auditing AI content. Companies must keep private data safe from leaks, bad use, and hackers. Good ways to do this are:

Mask, encrypt, or tokenize data so AI does not see private info.

Keep safe records of all AI data use.

Give people only the data they need for their job.

Check how private data is as it moves and change safety steps if needed.

Find and stop data changes using smart checks and math.

Connect AI safety with other systems like SIEM and ID checks.

Pick safety tools that work with many AI companies to avoid getting stuck with one.

Reports say 75% of security professionals saw more attacks last year, and 85% say AI is part of the reason. Almost all experts think AI is now needed to fight these attacks.

Companies should also lock data when it is stored or sent, use safe places to keep it, and watch AI models for bad use. Checking often and using strong rules helps stop problems and keeps trust high.
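
One simple way to hide private info before text goes to an AI tool is to mask it. The sketch below uses Python's standard `re` module to replace email addresses and phone numbers with placeholders. The two patterns and the function name `mask_private_data` are simplified assumptions for illustration. They will miss many formats, so a real setup would need a tested data-protection tool and human checks.

```python
import re

# Simplified patterns (assumptions): they cover common formats only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_private_data(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com or +1 555-123-4567 today."
    print(mask_private_data(prompt))
```

Masking happens before the text leaves the company, so the AI tool never receives the original values.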

Hybrid Oversight

AI Automation

AI automation lets brands check lots of content very fast. Automated systems look at big groups of text, pictures, and videos for risks. These systems find possible problems like harmful words or fake facts before posting. AI automation makes fewer mistakes and helps reviews go faster. It also helps brands follow rules and keep clients happy.

A semi-attended AI method works best for brands. In this way, AI gives ideas, but people check them before sharing. This keeps people in charge and lowers mistakes from AI surprises. AI automation also makes work easier, so experts can focus on big choices instead of small checks.

A study of 106 tests showed human–AI teams do better than just humans, with a medium to large effect.

If humans do better than AI, mixing both helps more. If AI does better, the team may not beat AI alone.

Sometimes, mixing humans and AI makes decisions worse, but teamwork helps with creative jobs.

Tip: Brands should use AI automation for speed and reach, but always have people check the work.
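
The semi-attended method can be pictured as a small routing step: AI scores each item, and the score decides how closely a person looks at it. Nothing is shared until a person approves it. The sketch below is only an example. The `Suggestion` class, the risk score between 0 and 1, the cutoffs, and the queue names are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    content_id: str
    risk: float                   # hypothetical AI risk score from 0 to 1
    human_approved: bool = False  # set to True only by a human reviewer


def review_queue(item: Suggestion,
                 escalate_at: float = 0.8,
                 standard_at: float = 0.3) -> str:
    """Pick a human review queue from the AI risk score (assumed cutoffs)."""
    if not 0.0 <= item.risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    if item.risk >= escalate_at:
        return "escalate"         # senior expert looks first
    if item.risk >= standard_at:
        return "standard_review"  # editor checks before sharing
    return "quick_signoff"        # fast human check, still required


def can_publish(item: Suggestion) -> bool:
    """AI never publishes on its own: a human must approve every item."""
    return item.human_approved


if __name__ == "__main__":
    for item in [Suggestion("post-1", 0.92), Suggestion("post-2", 0.10)]:
        print(item.content_id, review_queue(item), can_publish(item))
```

Keeping the approval flag separate from the score means a low score only speeds up the human check. It does not replace it.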

Human Judgment

Human judgment brings understanding that AI cannot give. People can see sarcasm, culture hints, and small risks that machines miss. Human editors make sure content fits the brand’s style and values.

Studies say human help is most useful in editing and fixing tasks. For example, in one study, people did about 93% of editing work, but only 16% in creative writing. This means people are more important when fixing AI content.

New teachers correctly spotted AI-made texts 45% of the time.

Experienced teachers correctly spotted them 38% of the time.

People could tell GPT-2 from human writing 58% of the time, but only 50% for GPT-3.

In a Turing test, people picked human writing 67% of the time, but often thought AI text was human.

These results show people alone have trouble finding AI content. But when humans and AI work together, they get better results. Human judgment also depends on knowing where advice comes from and having good past experiences. People trust AI more when they know it is AI and have used it before.

Note: Brands should teach teams to check AI content and use their own judgment to keep the brand safe.

Ongoing Best Practices

Regular Audits

Brands keep things safe by checking AI content often. How often they check depends on what the business does. Stores usually check every three months to get ready for busy times. Restaurants like to check every month to watch their ads. Banks check twice a year to make sure customers are happy. Franchises check before they grow or sign new deals. These checks help brands find problems, fix how they work, and make sure everyone does their job.

Most brands check every month, three months, or twice a year.

Checks help find dangers and make sure rules are followed.

Regular checks help brands get better all the time.

Tip: Brands should make a clear plan for checks and change it if needed.
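
A check plan like this can be kept as a small table of intervals. The sketch below works out the next check date from the last one, using the monthly, three-month, and twice-a-year rhythms described above. The sector names, the `AUDIT_INTERVAL_MONTHS` table, and the function names are assumptions for illustration, and each brand would adjust them.

```python
import calendar
from datetime import date

# Months between checks, taken from the rhythms described above (assumed keys).
AUDIT_INTERVAL_MONTHS = {"restaurant": 1, "retail": 3, "bank": 6}


def add_months(start: date, months: int) -> date:
    """Move a date forward by whole months, clamping to the month's last day."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def next_audit(sector: str, last_audit: date) -> date:
    """Return the date of the next scheduled check for a sector."""
    return add_months(last_audit, AUDIT_INTERVAL_MONTHS[sector])


if __name__ == "__main__":
    print(next_audit("retail", date(2024, 11, 30)))
    print(next_audit("bank", date(2024, 3, 15)))
```

Changing the plan then only means changing one number in the table.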

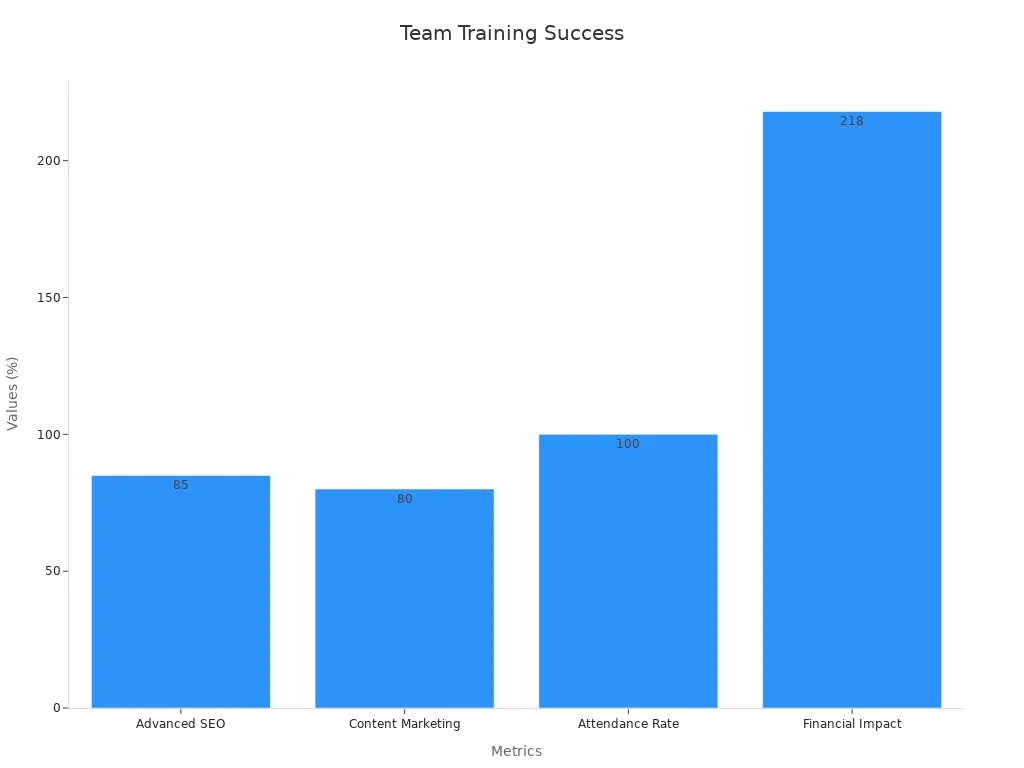

Team Training

Good training helps teams check content and keep brands safe. Training makes workers better and more sure of themselves. After training, teams do better in SEO and marketing. More people are happy, and everyone comes to training. One report says money made per worker goes up a lot after training.

| Metric | Before Training | After Training / Result |
|---|---|---|
| Advanced SEO | 60% | 85% |
| Content Marketing | 55% | 80% |
| Participant Satisfaction | N/A | 85%-90% |
| Attendance Rate | N/A | 100% |
| Financial Impact | N/A | 218% increase |

Training classes, online lessons, and must-attend workshops help teams learn and do well.

Policy Updates

Brands need to change their rules to match new AI risks and laws. When AI changes, old rules might not work anymore. Experts say brands should look at their rules often and make changes. This helps brands follow new laws and handle privacy, safety, and fairness. Changing rules often keeps brands safe and trusted.

Choosing AI Partners

Picking the right AI partners helps keep brands safe. Brands should check if partners are good, helpful, and have done well before. Good partners tell brands how their AI works and show real examples. Brands should pick partners with strong safety, clear talk, and good results. They should also look for smart tools, ways to check risks, and new ideas.

Check if partners know a lot and have done this before.

Make sure they keep data safe and private.

Look at real stories and results.

Pick partners who can grow and change with you.

Brands that check partners well build trust and keep their AI content safe.

Future Challenges

Deepfakes and Synthetic Media

Deepfakes and synthetic media bring new problems for brands. Researchers try to find out where fake content comes from by looking for special marks made by generative models. They also use passive authentication, which checks if media is real by looking at how it looks and sounds. Active authentication puts digital watermarks on media when it is made. It is still hard to spot deepfakes in real life, especially in blurry or changed videos.

Projects like FF4ALL are making strong tools that use both passive and active ways to find deepfakes. Brands will need tools that work for lots of content, work quickly, and can keep up with new deepfake tricks. There are also worries about privacy and people using these tools in the wrong way. Experts say brands should use ethical rules and explainable AI to help people trust them and use these tools the right way.

Deepfake tools must get faster and use less energy.

Tools should work even if the media is not clear.

Brands need to think about privacy and ethics when using these tools.

Transparency and Disclosure

Transparency rules for AI are getting tougher. Laws like the EU AI Act make companies share how their AI works, like what logic, algorithms, and training data they use. The EU Data Act says companies must let users see data from smart products. In Colorado, companies have to show risk plans and reports to the government.

To keep secrets safe, companies should mark trade secrets, use agreements to keep things private, and use tech like encryption. Companies also need to update papers, check for risks, and make sure AI partners follow the rules. Training and clear talk help teams know about AI risks and follow new laws.

Companies that are open and update their info build trust and lower the chance of tricking customers.

Adapting to Trends

Brand safety plans must change as AI content changes. At first, brands used simple lists of words, but now they use AI tools that understand feelings and meaning. These tools work on sites like YouTube, Meta, and TikTok, where old ways do not work well.

AI moderation helps brands because it is fast, saves money, and makes users happier. But there are still problems, like mistakes and different cultures, so people still need to check content. The best way is to have clear rules, use both AI and people, and watch how well things work.

Brands should use AI to check content quickly and the same way each time.

People should review hard or sensitive cases.

Training and being open help keep moderation working well.

Using new AI tools and changing plans helps brands stay safe and ready for new risks.

Checking AI content often helps brands avoid hidden dangers and keeps trust high. Experts say using both people and AI is best because AI alone is not always safe. The main ideas are to keep checking, have people watch over things, and make clear rules. Brands need to watch out because online dangers keep changing. They can do these things:

Have both AI and people check all content.

Make sure to check often and have simple rules.

Teach teams and change rules when needed.

Pick partners who are good at keeping things safe.

Brands that do these things now will be safer and more trusted.

FAQ

What is brand safety in AI content?

Brand safety in AI content means keeping a company’s good name safe. Teams make sure AI-made content does not have harmful, fake, or rude things. This helps brands stay out of trouble and keep people’s trust.

How often should companies audit AI content?

Most experts say companies should check content often. Many brands look at their content every month or every three months. Some, like banks, check two times a year. Checking often helps companies find problems early and keep their work good.

Can AI tools detect all harmful content?

AI tools can spot many problems fast. But they can miss jokes, culture problems, or hidden bias. Human reviewers help by finding things that AI does not catch.

Why do brands need both AI and human oversight?

AI checks lots of content quickly. Human experts use their own knowledge and understand culture. When both work together, brands are safer and stronger.
