AI Compliance Tips for Regulated Industries

Organizations in regulated industries face tough compliance problems when they adopt artificial intelligence. Many leaders now see clear benefits from applying AI compliance practices in their daily work.

| Metric | Statistic | Impact |
|---|---|---|
| Organizations using AI extensively in compliance | Extensive use in operations | |
| Data breach cost reduction with AI and automation | Up to $2.2 million less | Big cost savings compared to not using AI for prevention |
| Faster breach containment with AI | Nearly 100 days faster | Quicker detection and response to breaches |
| Organizations employing at least some AI security automation | 67% | Most organizations use it for security and compliance |

AI helps find risks, makes compliance tasks easier, and keeps records clear. About two-thirds (67%) of organizations already use at least some AI security automation, a sign that they are moving past manual methods.

Key Takeaways

Map every AI use case to the regulations that apply to it. This helps avoid hidden risks and keeps compliance strong.

Keep clear records and one central dashboard to track AI projects, data, and risks. This makes audits and updates easier.

Take a risk-based approach and monitor AI systems continuously. This helps catch problems early and keeps outcomes fair.

Train teams regularly and build cross-functional groups. This strengthens compliance culture and teamwork.

Use AI automation tools to save time and reduce mistakes. They also help you keep up with changing rules.

AI Compliance Tips

Map Use Cases to Regulations

Organizations in regulated industries need to match each AI use case with the rules that apply to it. This shows teams which laws and guidelines cover each AI project. For example, healthcare companies must follow HIPAA, while banks and other financial institutions must follow rules like the Gramm-Leach-Bliley Act. Mapping use cases to rules helps surface hidden risks and keeps compliance strong.

Leaders in these fields have seen real gains from this method. Large drug companies such as Novartis, GSK, Pfizer, and Merck have made clear progress by linking AI projects to regulatory requirements. Novartis uses AI to monitor production and spot quality problems early, which improves compliance and reduces failed batches. GSK cut the number of good products wrongly rejected by more than half, which raised output and kept it in good standing with regulators. Pfizer improved how it handles drug safety monitoring across many products, which made FDA reviews easier and faster.

Tip: Make a table or checklist that connects each AI use case to the rules it must follow. Update this list often as new rules come out.
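The checklist in the tip above can start as a simple mapping from use case to regulations. The Python sketch below assumes hypothetical use-case names and shows two checks that make such a map useful: finding use cases with no regulation mapped yet, and listing every use case affected when one regulation changes.

```python
# Hypothetical use-case-to-regulation map; the entries are illustrative only.
USE_CASE_REGULATIONS: dict[str, list[str]] = {
    "clinical_note_summarization": ["HIPAA"],
    "loan_underwriting_model": ["Gramm-Leach-Bliley Act"],
    "marketing_chatbot": [],  # not mapped yet, so it should be flagged for review
}


def unmapped_use_cases(mapping: dict[str, list[str]]) -> list[str]:
    """Return use cases that have no regulation mapped to them yet."""
    return sorted(name for name, regs in mapping.items() if not regs)


def use_cases_for(regulation: str, mapping: dict[str, list[str]]) -> list[str]:
    """Return every use case that needs review when a given regulation changes."""
    return sorted(name for name, regs in mapping.items() if regulation in regs)


print(unmapped_use_cases(USE_CASE_REGULATIONS))
print(use_cases_for("HIPAA", USE_CASE_REGULATIONS))
```

Keeping the map as data rather than a document means the "update this list often" step can be checked automatically, for example by failing a review when `unmapped_use_cases` is not empty.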

Experts also recommend mapping how data flows through AI systems, assessing risks from outside partners, and monitoring internal controls. Written AI policies should state who is responsible for what, and they should be updated regularly. Training helps employees keep up with compliance. Together, these steps improve transparency, keep people accountable, and help manage risk.

Align with Data Protection Laws

Data protection laws are a key part of AI compliance in these fields. Organizations must make sure every AI system follows privacy rules such as GDPR and HIPAA, as well as new AI-specific laws. This means tracking where data comes from, how it is used, and who can access it. Encryption and access controls keep private data away from people who should not see it.
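The access-control part of this can be illustrated with a small role-based check that also records every decision, so the organization can later show who accessed what. This is a minimal Python sketch: the roles, data categories, and `ROLE_PERMISSIONS` table are hypothetical, and it covers access control and logging only, not encryption.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: which roles may read which data categories.
ROLE_PERMISSIONS = {
    "clinician": {"phi", "deidentified"},
    "data_scientist": {"deidentified"},
    "auditor": {"audit_log"},
}


@dataclass
class AccessLog:
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, category: str, allowed: bool) -> None:
        # Record every attempt, allowed or denied, with a UTC timestamp.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "category": category,
            "allowed": allowed,
        })


def can_read(user: str, role: str, category: str, log: AccessLog) -> bool:
    """Check the role policy and log the decision so it can be audited later."""
    allowed = category in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, category, allowed)
    return allowed


log = AccessLog()
print(can_read("alice", "clinician", "phi", log))
print(can_read("bob", "data_scientist", "phi", log))
```

Denied attempts are logged too, because repeated denials are often the first sign of misuse or of a role definition that needs review.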

A recent study found that 85% of healthcare organizations use AI, but only 30% have ways to explain how their AI works. This gap can lead to lawsuits and fines. For example, University of Chicago Medicine was sued in 2019 over sharing patient records with Google for AI research. Microsoft’s facial recognition system was also criticized as biased. These cases show why it is important to follow both established and new data protection rules.

Rules keep changing. The US FTC now issues guidance on AI transparency. The EU AI Act and GDPR set strict requirements for AI systems and personal data. In Asia, Japan’s METI and Singapore’s IMDA focus on fairness and explainability. Organizations must keep up with these changes to avoid serious problems.

Regular checks and risk reviews help find compliance gaps.

Clear records of AI decisions build trust with regulators and customers.

Explainable AI tools, like IBM Watson OpenScale, help make AI fair and accountable.

Note: Use AI tools to watch for rule changes and check compliance. This cuts down on mistakes and keeps records up to date.

By applying these AI compliance tips, organizations can lower risk, be more transparent, and work better with regulators. These steps also help teams respond quickly to new laws and rules.

Governance and Documentation

Centralized Records

Centralized records are very important for strong AI governance. Top organizations keep all their AI information in one place. They track data sources, model versions, and risk checks. This helps teams watch for risks and follow rules. It also lets them answer audits fast.

AI governance frameworks recommend forming teams with people from different functions. These teams set rules and help keep AI safe.

A main list tracks every AI or machine learning system. It shows what each system does, who owns it, and its life cycle. This makes it easier to watch for risks.

Standards such as ISO/IEC 42001 and the NIST AI Risk Management Framework call for organized, centralized records.

Financial services companies use shared terminology and risk taxonomies, and centralized records support this.

Watching all AI systems in one place helps organizations adjust their rules as AI use grows. This keeps things clear and helps fix problems fast.

Good records also make outside risks easier to handle, especially when working with vendors or third-party models.

Tip: Use a main dashboard to keep track of all AI projects. Include their data, owners, and risk levels. This makes audits and updates much easier.
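A central inventory can start as a small data structure. The sketch below is a minimal Python example, assuming a hypothetical `AISystem` record with the fields the text lists (purpose, owner, data sources, model version, risk level, lifecycle stage); a real dashboard would read these records from a database or governance platform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AISystem:
    # Fields mirror what the text says a central inventory should track.
    name: str
    purpose: str
    owner: str
    data_sources: list[str]
    model_version: str
    risk_level: str        # hypothetical scale: "low", "medium", "high"
    lifecycle_stage: str   # e.g. "development", "production", "retired"


def dashboard_summary(inventory: list[AISystem]) -> dict:
    """Summarize the inventory the way a simple compliance dashboard might."""
    return {
        "total_systems": len(inventory),
        "by_risk": dict(Counter(s.risk_level for s in inventory)),
        "missing_owner": [s.name for s in inventory if not s.owner],
        "high_risk_in_production": [
            s.name for s in inventory
            if s.risk_level == "high" and s.lifecycle_stage == "production"
        ],
    }


inventory = [
    AISystem("triage-model", "Prioritize patient messages", "clinical-ai-team",
             ["ehr_messages"], "2.1.0", "high", "production"),
    AISystem("doc-classifier", "Route scanned forms", "",
             ["scanned_forms"], "0.9.3", "low", "development"),
]
print(dashboard_summary(inventory))
```

Flagging systems with no owner and high-risk systems already in production gives auditors the two lists they usually ask for first.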

Clear Documentation

Clear documentation helps people trust your company. It also helps you follow rules. Regulators want to know how AI systems work. They want to know what data is used and how choices are made. Good records help teams follow every step in the AI process.

Data protection laws say you must tell people how you use their data. People should have control over their own information.

The GDPR gives people a right to meaningful information about the logic behind automated decisions that significantly affect them (often called the “right to explanation”).

Anti-discrimination laws say you must show that AI is not unfair.

The California Consumer Privacy Act says you must disclose the categories of personal data you collect and why you collect them.

Good records also help you track changes in AI and data. Reports about updates and risks keep everyone informed. One financial company had bias in its AI credit scoring. They told people about the problem and how they fixed it. This helped people trust them again. This shows that clear records and honesty help with compliance and public trust.

Note: Keep good records of how you make, test, and change models. Use tools to track changes and show you care about fairness and doing the right thing.
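One way to track model changes so the history cannot be quietly rewritten is an append-only log in which each entry stores a hash of the previous one. The hash chain is a technique added here for illustration, not one the text prescribes; the Python sketch below assumes hypothetical model names and approvers.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Hash a canonical JSON form so the same content always gives the same hash.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class ModelChangeLog:
    """Append-only log; each entry stores the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, model: str, version: str, change: str, approved_by: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"model": model, "version": version, "change": change,
                "approved_by": approved_by, "prev_hash": prev_hash}
        body["hash"] = _digest(body)
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; return False if any entry was edited afterward."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


changes = ModelChangeLog()
changes.append("credit-score", "1.0.0", "Initial release", "model-risk-committee")
changes.append("credit-score", "1.1.0", "Retrained after bias review", "model-risk-committee")
print(changes.verify())
```

Editing any earlier entry (for example, changing its `change` text) makes `verify()` return `False`, which is the property an auditor cares about.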

Risk Management

Risk-Based Approach

A risk-based approach helps organizations focus on the most serious AI compliance problems. Many companies now sort AI projects by risk. For example, Castor uses three levels. Low-risk projects do not use personal health data. Medium-risk projects use confidential business data. High-risk projects use sensitive patient health information and need the strictest controls. This mirrors laws like the EU AI Act, which sorts AI systems into unacceptable, high, limited, and minimal risk.
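A three-tier scheme like the one described for Castor can be expressed as a small classifier that assigns the tier of the most sensitive data a project uses. In this Python sketch the data category labels and the controls listed per tier are hypothetical; it does not implement the EU AI Act's categories, which depend on the use case and not only on the data.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"        # no personal health data
    MEDIUM = "medium"  # confidential business data
    HIGH = "high"      # sensitive patient health information


# Hypothetical controls per tier; real controls come from your own policy.
REQUIRED_CONTROLS = {
    RiskTier.LOW: ["inventory entry"],
    RiskTier.MEDIUM: ["inventory entry", "access review"],
    RiskTier.HIGH: ["inventory entry", "access review", "human oversight",
                    "pre-deployment validation", "ongoing monitoring"],
}


def classify(data_categories: set[str]) -> RiskTier:
    """Assign the tier of the most sensitive data category the project uses."""
    if "patient_health" in data_categories:
        return RiskTier.HIGH
    if "confidential_business" in data_categories:
        return RiskTier.MEDIUM
    return RiskTier.LOW


tier = classify({"confidential_business", "public_web"})
print(tier, REQUIRED_CONTROLS[tier])
```

Checking the most sensitive category first means a project that mixes data types always lands in the strictest applicable tier.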

AI-driven RegTech cuts down manual document review by 94%. This saves 87 workdays every six months.

Companies using RegTech AI save up to 30% on compliance costs.

The EU AI Act requires ongoing risk management for high-risk AI systems, which include credit scoring and risk assessment and pricing for life and health insurance.

Human oversight is still needed to check AI results and make fair choices.

Groups like EY use risk levels to sort AI use cases. They set controls based on risk. This helps them handle high-risk AI in clinical trials or finance. It also keeps them ready for audits.

Ongoing Monitoring

Ongoing monitoring makes sure AI systems keep following rules over time. Automated tools can quickly flag risks such as bias or drops in performance. Teams test and approve AI before deployment to make sure it meets ethical and legal requirements. Platforms like Collibra and FairNow help track AI systems, automate checks, and keep good records.

Continuous risk checks find problems early.

Regular model tests keep things fair and clear.

Automated tools cut down manual work and help with audits.
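The bias and performance checks described above can be sketched as two simple tests run on each batch of decisions. This Python example uses the disparate impact ratio (lowest group approval rate divided by the highest) with a 0.8 threshold, borrowed from the common "four-fifths" heuristic, plus an accuracy drop limit of 0.05; both thresholds and the group labels are assumptions to adjust for your own policy.

```python
def selection_rate(outcomes: list[int]) -> float:
    """Share of positive (1) outcomes, e.g. approvals."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def disparate_impact_ratio(group_outcomes: dict[str, list[int]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    rates = [selection_rate(o) for o in group_outcomes.values()]
    if not rates or max(rates) == 0:
        return 1.0
    return min(rates) / max(rates)


def monitoring_alerts(group_outcomes: dict[str, list[int]],
                      baseline_accuracy: float, current_accuracy: float,
                      min_ratio: float = 0.8, max_accuracy_drop: float = 0.05) -> list[str]:
    """Return human-readable alerts for possible bias or performance drift."""
    alerts = []
    ratio = disparate_impact_ratio(group_outcomes)
    if ratio < min_ratio:
        alerts.append(f"possible bias: disparate impact ratio {ratio:.2f} < {min_ratio}")
    if baseline_accuracy - current_accuracy > max_accuracy_drop:
        alerts.append(f"performance drop: accuracy {current_accuracy:.2f} "
                      f"vs baseline {baseline_accuracy:.2f}")
    return alerts


outcomes = {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]}
for alert in monitoring_alerts(outcomes, baseline_accuracy=0.91, current_accuracy=0.84):
    print(alert)
```

An alert is a prompt for human review, not a verdict; a low ratio can have legitimate explanations that the compliance team must document.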

Most organizations still have gaps. Only 47% have a risk management system, and about 70% lack ongoing controls. Cross-functional teams with clear roles help close these gaps.

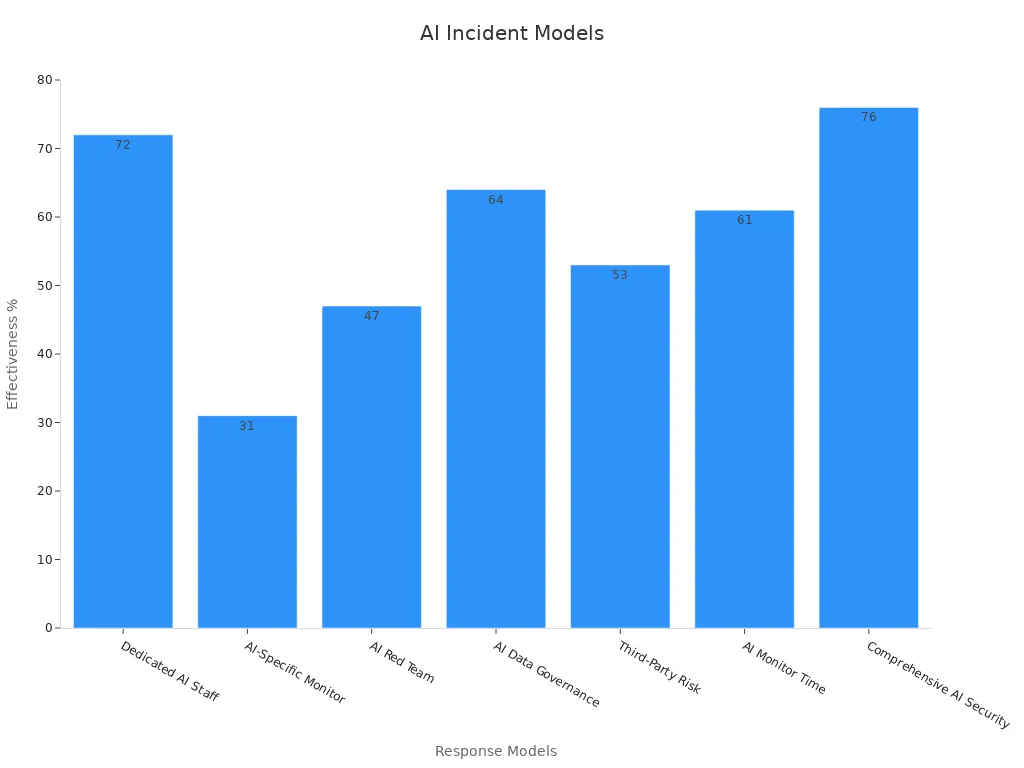

Incident Response

A strong incident response plan helps organizations prepare for AI compliance failures. Teams should write and regularly update these plans. Plans need clear roles, communication steps, and recovery procedures. Real-time monitoring and alerts help find problems fast. Regular audits and security tests keep systems safe.

| Incident Response Model | Effectiveness Metric | Statistical Result / Impact |
|---|---|---|
| Dedicated AI Security Personnel | Faster breach detection | |
| AI-Specific Security Monitoring | Reduced breach costs | 31% reduction in breach costs |
| Regular AI Red Team Exercises | Fewer successful attacks | 47% fewer successful attacks |
| AI Data Governance Frameworks | Lower regulatory penalties | 64% lower penalties |
| Third-Party AI Risk Management | Fewer supply chain-related incidents | 53% fewer incidents |
| AI-Specific Security Monitoring (detection time) | Reduced detection time | 61% reduction in detection time |
| Comprehensive AI Security Programs | Fewer AI-related breaches by 2026 | 76% fewer AI-related breaches |

Tip: Give clear jobs for incident response and review each incident to make future plans better. Human oversight and regular training help spot problems early and lower risks.
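Clear roles and a post-incident review can be built into the incident record itself. In this Python sketch the role names, severity levels, and the rule that an incident cannot close until at least one lesson is recorded are all hypothetical choices meant to show the idea.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional

# Hypothetical role assignments; adapt to your own incident response plan.
ROLES = {
    "incident_lead": "compliance-lead",
    "technical_owner": "ml-platform-oncall",
    "communications": "legal-and-comms",
}


def who_to_notify(severity: str) -> list[str]:
    """High-severity incidents notify every role; others go to the lead and technical owner."""
    if severity == "high":
        return list(ROLES.values())
    return [ROLES["incident_lead"], ROLES["technical_owner"]]


@dataclass
class Incident:
    title: str
    severity: str  # hypothetical scale: "low", "medium", "high"
    detected_at: datetime
    timeline: list = field(default_factory=list)
    contained_at: Optional[datetime] = None
    lessons_learned: list = field(default_factory=list)

    def log(self, when: datetime, event: str) -> None:
        self.timeline.append((when, event))

    def contain(self, when: datetime) -> None:
        self.contained_at = when
        self.log(when, "incident contained")

    def time_to_contain_hours(self) -> Optional[float]:
        if self.contained_at is None:
            return None
        return (self.contained_at - self.detected_at).total_seconds() / 3600

    def ready_to_close(self) -> bool:
        """Require containment and at least one lesson from the post-incident review."""
        return self.contained_at is not None and bool(self.lessons_learned)


incident = Incident("Chatbot returned another patient's data", "high",
                    datetime(2024, 5, 1, 9, 0))
incident.log(datetime(2024, 5, 1, 9, 15), "endpoint disabled by technical owner")
incident.contain(datetime(2024, 5, 1, 13, 0))
print(who_to_notify(incident.severity), incident.time_to_contain_hours())
incident.lessons_learned.append("Add output filter for patient identifiers")
print(incident.ready_to_close())
```

Recording time to containment for every incident also gives you the detection and containment figures that tables like the one above are built from.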

Compliance Culture

Training and Awareness

Regular training is very important for a strong compliance culture. When organizations use AI to handle repetitive compliance tasks, work goes faster and people make fewer mistakes. This lets compliance staff spend more time on risk management and new ideas, and the culture becomes more proactive and adaptable.

AI-powered tools like Lingio and WorkRamp support compliance training with engaging courses, leadership lessons, and support for many languages.

Barclays uses an AI assistant called Aida. Aida gives real-time advice and short training. Workers get answers fast, so they make decisions quicker and make fewer mistakes.

| AI Tool | Key Features Supporting Compliance Culture Improvement |
|---|---|
| Lingio | Custom, engaging compliance training; supports leaders and many languages. |
| WorkRamp | Specialized modules, eSignature for proof of completion, mobile access, and all learning in one place. |

Training works better when it is tailored to each person. Gamification and regular quizzes also help. Leaders who take part, along with a habit of continuous learning, sustain these results. Ongoing role-specific training reduces compliance problems and keeps workers alert.

Tip: Use workflow automation to send reminders and check if training is done. This helps everyone stay current.
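The reminder step in this tip can be sketched as a completion check that flags anyone who never finished a module or whose completion has expired. The Python example below assumes a hypothetical 365-day validity period and made-up names; a real workflow tool would send the messages instead of printing them.

```python
from datetime import date, timedelta
from typing import Optional


def overdue_training(completions: dict[str, Optional[date]], today: date,
                     valid_days: int = 365) -> list[str]:
    """Return people who never finished the module or whose completion has expired."""
    overdue = []
    for person, completed_on in completions.items():
        if completed_on is None or today - completed_on > timedelta(days=valid_days):
            overdue.append(person)
    return sorted(overdue)


def reminder_messages(people: list[str], module: str) -> list[str]:
    """Build one reminder message per person."""
    return [f"Reminder for {p}: please complete '{module}'." for p in people]


completions = {"ana": date(2024, 1, 10), "ben": None, "chen": date(2023, 1, 5)}
overdue = overdue_training(completions, today=date(2024, 6, 1))
for message in reminder_messages(overdue, "AI data handling basics"):
    print(message)
```

Running this on a schedule, and keeping its output, also produces the evidence auditors ask for when they check that training is current.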

Cross-Functional Teams

Cross-functional teams are very important for compliance. When legal, compliance, and technical teams work together early and often, they avoid working in silos and reduce rework. These teams share responsibility for AI governance, build checklists together, and set goals as a group.

Regular compliance checks during AI projects help with audits.

Using shared terminology and keeping records in one place helps everyone communicate clearly.

Making decisions together prevents bottlenecks and helps address risks faster.

Legal and compliance experts help from start to finish, not just at the end.

This way of working makes things go faster, keeps things open, and helps manage risks before they grow.
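A shared checklist with sign-offs from each function can act as a simple deployment gate. In this Python sketch the required functions, checklist items, and owners are hypothetical; the point is that a project is blocked until every function has signed off and every item is done.

```python
# Hypothetical functions whose sign-off is required before deployment.
REQUIRED_SIGNOFFS = {"legal", "compliance", "technical"}

# Hypothetical shared checklist; each item has an owning function.
CHECKLIST = [
    {"item": "Map use case to regulations", "owner": "legal", "done": True},
    {"item": "Complete privacy review", "owner": "compliance", "done": False},
    {"item": "Run bias and performance tests", "owner": "technical", "done": True},
]


def deployment_blockers(checklist: list[dict], signoffs: set[str]) -> list[str]:
    """List everything that still blocks deployment."""
    blockers = [f"missing sign-off from {fn}" for fn in sorted(REQUIRED_SIGNOFFS - signoffs)]
    blockers += [f"open item ({c['owner']}): {c['item']}" for c in checklist if not c["done"]]
    return blockers


for blocker in deployment_blockers(CHECKLIST, {"legal", "technical"}):
    print(blocker)
```

Because every function sees the same list, nobody has to wait until the end of a project to discover that legal or compliance was never consulted.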

Appoint Compliance Leads

Dedicated compliance leads make sure someone is accountable. These leads run training, help teams work together, and communicate with regulators. They keep the compliance culture strong by setting clear rules and continually looking for ways to improve.

A strong compliance lead keeps everyone working together and ready for new problems.

Automation and Tools

Compliance Automation

AI-driven compliance automation tools help organizations keep up with new rules. These tools use machine learning and robotic process automation to track regulatory changes and update compliance processes. In healthcare, AI systems help enforce HIPAA by controlling who can access data and continuously monitoring sensitive data. In financial services, AI supports SOX and PCI-DSS compliance through continuous checks and instant audit reports.
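The "track new rules and update compliance steps" loop can be illustrated without any machine learning: route each incoming regulatory update to the internal controls that implement the affected regulation, and flag updates that match nothing. This Python sketch uses a hypothetical `CONTROLS_BY_REGULATION` table and tags each update by regulation by hand; commercial tools automate the tagging step.

```python
from dataclasses import dataclass


@dataclass
class RegulatoryUpdate:
    source: str       # hypothetical feed name
    regulation: str   # which rule the update affects
    summary: str


# Hypothetical map from regulation to the internal controls that implement it.
CONTROLS_BY_REGULATION = {
    "HIPAA": ["PHI access review", "audit log retention"],
    "PCI-DSS": ["cardholder data encryption", "quarterly vulnerability scan"],
    "SOX": ["financial report change approval"],
}

UNMAPPED = "UNMAPPED: needs compliance review"


def triage(updates: list[RegulatoryUpdate]) -> dict[str, list[str]]:
    """Group incoming updates by the internal control that needs review."""
    tasks: dict[str, list[str]] = {}
    for update in updates:
        controls = CONTROLS_BY_REGULATION.get(update.regulation)
        if not controls:
            tasks.setdefault(UNMAPPED, []).append(update.summary)
            continue
        for control in controls:
            tasks.setdefault(control, []).append(update.summary)
    return tasks


updates = [
    RegulatoryUpdate("agency-feed", "HIPAA", "Updated guidance on audit controls"),
    RegulatoryUpdate("agency-feed", "State AI law", "New disclosure requirement"),
]
for control, items in triage(updates).items():
    print(control, items)
```

The unmapped bucket matters most: it catches new rules that no existing control covers yet.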

| Platform | Key AI Features | Industry Focus | Impact |
|---|---|---|---|
| Signify | Real-time regulatory tracking, risk assessments | Manufacturing, Pharma, Food | Turns complex rules into clear steps, saves time |
| SGS Digicomply | Monitors food safety, scans for risks, automates compliance | Food Industry | Manages regulatory changes from many data sources |
| Avatier | Identity management, real-time access monitoring | Healthcare, Financial Services | Keeps compliance up to date, ready for audits |

AI automation can cut manual work by up to 90%. It gathers evidence, updates files, and finds risks before they grow. These tools scale with the business, making it easier to add new rules or handle more data. Automation also saves money and frees teams for more important work.

Tip: Use AI dashboards to check compliance fast and get alerts about new risks.

Reporting Solutions

Automated reporting tools make audits faster and reduce mistakes. Platforms like DataSnipper and ACL Analytics extract and analyze data quickly and find errors people might miss. Workflow tools like Workiva and HighBond keep documents organized, track compliance, and produce reports with less effort.

Auditor productivity went up by 35% with AI audit tools.

Automation cut project time in half and lowered mistakes.

Companies saved about $1.88 million in data breach costs with security automation.

Real-time checks and alerts help teams find problems early. For example, Mayo Clinic uses AI to watch who looks at patient records. This helps catch problems fast and keeps HIPAA compliance strong. Big companies that use AI for compliance reporting make fewer mistakes and fix problems much faster.
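Watching who looks at patient records can start with simple rules over the access log. This Python sketch is not Mayo Clinic's method, which the text does not describe; it flags two hypothetical patterns: viewing a patient outside the user's care team, and viewing more distinct records in one day than a chosen threshold. The threshold, user names, and data layout are assumptions, and a production system might add a learned baseline per role.

```python
from collections import defaultdict


def flag_access(events: list[tuple[str, str, str]],
                assignments: dict[str, set[str]],
                max_records_per_day: int = 50) -> list[tuple[str, str]]:
    """
    events: (user, patient_id, day) tuples from an access log.
    assignments: user -> patient_ids on that user's care team.
    Returns sorted (user, reason) flags for human review.
    """
    flags = set()
    per_day = defaultdict(set)
    for user, patient, day in events:
        per_day[(user, day)].add(patient)
        if patient not in assignments.get(user, set()):
            flags.add((user, f"viewed unassigned patient {patient}"))
    for (user, day), patients in per_day.items():
        if len(patients) > max_records_per_day:
            flags.add((user, f"{len(patients)} records on {day}"))
    return sorted(flags)


events = [
    ("dr_lee", "p1", "2024-05-01"),
    ("dr_lee", "p9", "2024-05-01"),
    ("nurse_kim", "p2", "2024-05-01"),
]
assignments = {"dr_lee": {"p1"}, "nurse_kim": {"p2"}}
print(flag_access(events, assignments))
```

A flag is a starting point for review, since clinicians sometimes have valid reasons to open an unassigned record, for example when covering a colleague's shift.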

Keeping records up to date and using automated tools makes compliance easier and helps the business.

Regulator Engagement

Proactive Communication

Groups in regulated industries need good relationships with regulators. Talking early and often helps companies keep up with new rules. It also helps them avoid surprises. Many top companies have a plan for talking to regulators. They do not wait for problems. They plan meetings and use the same steps each time.

Teams use special tools to watch for rule changes.

Compliance teams meet often to talk about updates.

Companies buy regtech to help with compliance work.

Staff get training and practice for different situations.

Teams check how new rules might affect them.

Using the same steps makes following rules easier.

Open talks with regulators and groups help everyone understand.

Internal checks help find and fix problems.

Compliance is part of risk management.

Leaders model good habits and reward people who plan ahead.

Companies that do these things can react fast when rules change. They also earn trust from regulators and lower their chance of getting fined.

Banks use a careful plan for meetings with regulators. They plan each meeting and share calendars for checks. They pick people to lead each meeting. All papers go into one system. This makes it easy to find what they need for audits.

Industry Forums

Industry forums are important for AI compliance. These groups bring together companies, experts, and regulators. People share news, discuss new rules, and learn from each other. Taking part in forums helps organizations stay current and help shape new rules.

Being active in AI policy helps match new ideas with what people need.

The Biden Administration asked the public for ideas about AI rules.

Co-governance lets many people help make rules.

Forums stop one group from having too much power.

Many voices, like companies, teachers, and the public, help guide AI rules.

Joining industry forums often helps groups keep up with new rules. It also lets them help make fair and good AI rules.

When organizations apply these AI compliance tips, they get real results: they test compliance 75% faster and avoid $1.4 million in fines. Teams work better, learn more, and improve quality and training. Organizations that combine governance, automation, and teamwork adapt to new rules faster, and they keep up by checking often and asking for feedback. Leaders should join industry forums and keep investing in training.

| Benefit Category | Impact Example |
|---|---|
| Employees have more time for big tasks | |
| Quality Assurance | |
| Error Reduction | Accuracy gets better overall |

FAQ

What is the first step for AI compliance in regulated industries?

Teams need to find all their AI use cases. They should match each one to the right regulation. This helps make sure nothing is missed. It also lowers the chance of breaking rules.

How often should organizations update AI compliance documentation?

Experts say to check and update records every three months. Doing this often keeps information correct. It also makes sure new rules or AI changes are included.

Why does ongoing monitoring matter for AI compliance?

Ongoing monitoring finds risks early. Automated tools can catch bias, mistakes, or drops in how well AI works. This lets teams fix problems before they get worse.

Who should lead AI compliance efforts?

A dedicated compliance lead or officer should be in charge. This person runs training, keeps records, and communicates with regulators.

What tools help automate AI compliance tasks?

AI-driven platforms like Signify, SGS Digicomply, and Avatier help with rule tracking, risk checks, and reports. These tools save time, cut down on mistakes, and help teams get ready for audits.
