Synthetic A/B (Simulated): A Practitioner’s Guide to Predicting Experiment Outcomes Safely

What is “Synthetic A/B” in plain English?

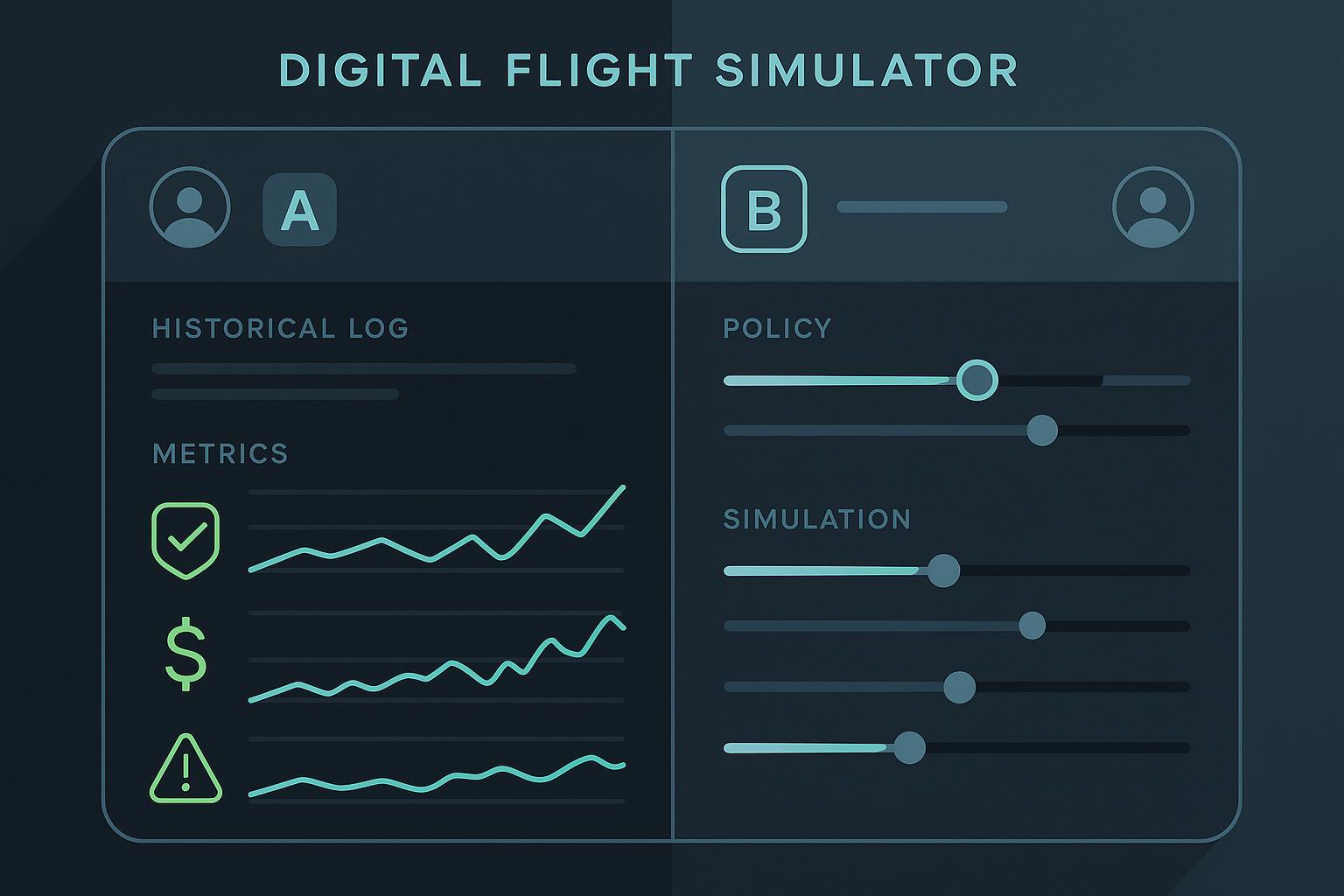

Synthetic A/B (also called simulated A/B testing) is a way to estimate what would happen in an A/B test without exposing real users. Instead of running a live experiment, you use historical logs, causal models, or simulators to predict how a new variant or policy might perform.

Think of it as a flight simulator for experimentation: you “fly” the change in a safe environment, learn where it may fail, and decide whether (and how) to run a real test.

What it is—and what it is not

- What it is: A family of techniques for estimating counterfactual outcomes and policy performance offline. Common approaches include log replay/bootstrapping, uplift (individual treatment effect) modeling, off-policy evaluation from bandits/RL, and agent-based or Monte Carlo simulation.

- What it is not: A guarantee of real-world lift, a substitute for well-run randomized online experiments, or the same thing as synthetic monitoring (scripted uptime checks) or synthetic data (fabricated records). It’s a complement that trades some external validity for speed, safety, and cost-efficiency.

Why it matters now

- Lower risk: You can screen out unsafe variants (e.g., pricing, ranking, or UX changes with guardrail risks) before any user sees them.

- Faster iteration with scarce traffic: You can triage more ideas offline, then reserve live traffic for the best candidates.

- Modern tooling: Causal and bandit methods are becoming mainstream in 2024–2025 through open libraries and platform practices, making synthetic workflows more accessible to growth and marketing teams.

Established techniques matter here too. Variance reduction with CUPED, which uses pre-experiment covariates to improve sensitivity, is well established in industry experimentation. Microsoft researchers introduced it in 2013 and revisited it in a 2023 update on generalized augmentation (Deng et al., 2013 ACM; Deng et al., 2023 arXiv). Guardrails and trustworthy experimentation processes are synthesized in a textbook by industry veterans (Kohavi, Tang, and Xu, 2020, Cambridge University Press).

The methods—explained without heavy math

Log replay and bootstrapping

- Idea: “Rewind the tape” of historical sessions and resample users to estimate what a new variant would have produced. In ranking/recommendation contexts, teams often compute offline replay metrics that correlate with online results. LinkedIn, for instance, reports using offline replay to triage feed ranking candidates prior to live tests (LinkedIn LiRank, 2024 preprint).

- When to use: Quick pruning of many UX/model variants; early-stage products with small logs; sanity checks on guardrail metrics.
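Below is a minimal Python sketch of log replay with a user-level bootstrap. It assumes a hypothetical log of `(user_id, context, logged_action, reward)` tuples collected while actions were chosen uniformly at random. Under that assumption, averaging rewards over the events where the candidate policy would have picked the logged action gives an unbiased replay estimate. With a non-uniform logging policy you would need propensity-weighted off-policy estimators instead. The bootstrap resamples whole users rather than single events, which keeps each user's correlated sessions together.

```python
import random
import statistics
from collections import defaultdict


def replay_value(events, policy):
    """Mean reward over logged events where policy(context) equals the logged action.

    Unbiased only if logged actions were chosen uniformly at random.
    """
    matched = [reward for _, context, action, reward in events if policy(context) == action]
    return statistics.fmean(matched) if matched else float("nan")


def bootstrap_ci(events, policy, n_boot=500, alpha=0.05, seed=0):
    """Percentile confidence interval from resampling whole users with replacement."""
    by_user = defaultdict(list)
    for event in events:
        by_user[event[0]].append(event)
    users = list(by_user)
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        resampled = [ev for u in rng.choices(users, k=len(users)) for ev in by_user[u]]
        value = replay_value(resampled, policy)
        if value == value:  # NaN != NaN, so this skips resamples with no matched events
            estimates.append(value)
    if not estimates:
        raise ValueError("no bootstrap resample matched the candidate policy")
    estimates.sort()
    last = len(estimates) - 1
    return estimates[int(alpha / 2 * last)], estimates[int((1 - alpha / 2) * last)]


if __name__ == "__main__":
    rng = random.Random(3)
    # Hypothetical log: 3 contexts, 3 actions, uniform-random logging policy.
    events = []
    for user in range(600):
        for _ in range(10):
            context, action = rng.randrange(3), rng.randrange(3)
            p_click = 0.3 if action == context else 0.1
            events.append((user, context, action, float(rng.random() < p_click)))

    def candidate(context):
        return context  # hypothetical candidate policy: match the action to the context

    print("replay estimate:", replay_value(events, candidate))
    print("95% CI:", bootstrap_ci(events, candidate))
```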

Uplift modeling (individual treatment effects)

- Idea: Train a model to predict the incremental impact of a treatment for each user or segment. Meta-learners such as T-/X-/R-/DR-learners are widely used, with evaluation via uplift/Qini curves summarized in a peer-reviewed overview (Gutierrez & Gérardy, 2017 PMLR).

- When to use: Targeting who to treat in marketing/lifecycle campaigns, segmented rollouts, or personalized promotions.
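The following plain-Python sketch illustrates a T-learner and a Qini curve. To stay dependency-free, each arm's "model" is just the mean outcome per user segment. In practice you would plug in a real regressor or use a library such as EconML or CausalML. The segments, rates, and function names are hypothetical. The Qini curve ranks holdout users by predicted uplift and tracks Q(k) = R_t(k) - R_c(k) * N_t(k) / N_c(k), the incremental responders among the top k. The coefficient computed here is a simple, unnormalized variant: the average gap between that curve and the straight line a random ranking would trace.

```python
import random
from collections import defaultdict


def fit_t_learner(rows):
    """T-learner with a per-segment mean as each arm's base model.

    rows: iterable of (segment, treated, outcome). Returns {segment: predicted uplift}.
    """
    totals = defaultdict(lambda: {True: [0.0, 0], False: [0.0, 0]})
    for segment, treated, outcome in rows:
        acc = totals[segment][bool(treated)]
        acc[0] += outcome
        acc[1] += 1
    uplift = {}
    for segment, arms in totals.items():
        (sum_t, n_t), (sum_c, n_c) = arms[True], arms[False]
        if n_t and n_c:  # a segment needs both arms to estimate its effect
            uplift[segment] = sum_t / n_t - sum_c / n_c
    return uplift


def qini_curve(scores, treated, outcomes):
    """Q(k) = R_t(k) - R_c(k) * N_t(k) / N_c(k) over users ranked by descending score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    r_t = r_c = n_t = n_c = 0
    curve = []
    for i in order:
        if treated[i]:
            n_t += 1
            r_t += outcomes[i]
        else:
            n_c += 1
            r_c += outcomes[i]
        curve.append(r_t - r_c * n_t / n_c if n_c else float(r_t))
    return curve


def qini_coefficient(curve):
    """Average gap between the Qini curve and the straight line of a random ranking."""
    n = len(curve)
    return sum(q - curve[-1] * (k + 1) / n for k, q in enumerate(curve)) / n


if __name__ == "__main__":
    rng = random.Random(5)
    true_uplift = {"A": 0.15, "B": 0.05, "C": 0.0}  # hypothetical segment effects

    def draw(n):
        rows = []
        for _ in range(n):
            segment, treated = rng.choice("ABC"), rng.random() < 0.5
            p = 0.1 + (true_uplift[segment] if treated else 0.0)
            rows.append((segment, treated, int(rng.random() < p)))
        return rows

    train, holdout = draw(15000), draw(15000)
    model = fit_t_learner(train)
    scores = [model[segment] for segment, _, _ in holdout]
    curve = qini_curve(scores, [t for _, t, _ in holdout], [y for _, _, y in holdout])
    print("predicted uplift by segment:", model)
    print("Qini coefficient (> 0 beats a random ranking):", qini_coefficient(curve))
```

The model is fit on one sample and evaluated on a separate holdout, so the Qini curve measures how well the predicted ranking generalizes rather than how well it fits the training data.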

Off-Policy Evaluation (OPE) from bandits/RL

- Idea: Estimate the expected performance of a new policy using data logged under a different policy. Core estimators include importance sampling and the doubly robust estimator, which combines outcome modeling with propensity weighting to improve bias–variance trade-offs. Dudík, Langford, and Li adapted it to contextual-bandit policy evaluation in 2011 (Dudík, Langford, and Li, 2011 ICML). Open datasets and pipelines, such as the 2020 Open Bandit Pipeline, have accelerated practical OPE experimentation (Saito et al., 2020 arXiv).

- When to use: Evaluating recommenders, ranking, or personalization policies where running many online tests would be costly or risky.
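Here is a small Python sketch of the two estimators named above for a deterministic target policy pi. It assumes hypothetical logs of `(context, action, reward, logging_probability)` tuples.

- IPS averages 1[pi(x) = a] * r / p.
- The doubly robust estimate adds the reward model's prediction `q_hat(x, pi(x))` to an IPS-weighted correction of the residual `r - q_hat(x, a)`. This follows the form given by Dudík, Langford, and Li (2011).

The tabular reward model is a deliberately simple placeholder. The function names and the simulated logging policy are illustrative.

```python
import random
import statistics
from collections import defaultdict


def ips_value(logs, target):
    """Inverse propensity scoring: mean of 1[target(x) == a] * r / p over the logs."""
    return statistics.fmean(r / p if target(x) == a else 0.0 for x, a, r, p in logs)


def fit_reward_model(logs):
    """Tabular q_hat(x, a): mean logged reward per (context, action); 0.0 if unseen."""
    sums, counts = defaultdict(float), defaultdict(int)
    for x, a, r, _ in logs:
        sums[(x, a)] += r
        counts[(x, a)] += 1

    def q_hat(x, a):
        n = counts.get((x, a), 0)
        return sums[(x, a)] / n if n else 0.0

    return q_hat


def dr_value(logs, target, q_hat):
    """Doubly robust: q_hat(x, target(x)) plus an IPS-weighted correction of the residual."""
    total = 0.0
    for x, a, r, p in logs:
        chosen = target(x)
        correction = (r - q_hat(x, a)) / p if chosen == a else 0.0
        total += q_hat(x, chosen) + correction
    return total / len(logs)


if __name__ == "__main__":
    rng = random.Random(11)
    logging_probs = [0.6, 0.3, 0.1]  # hypothetical logging policy, same for every context
    logs = []
    for _ in range(20000):
        x = rng.randrange(2)
        a = rng.choices(range(3), weights=logging_probs)[0]
        p_reward = 0.5 if a == (x + 1) % 3 else 0.1
        logs.append((x, a, float(rng.random() < p_reward), logging_probs[a]))

    def target(x):
        return (x + 1) % 3  # hypothetical new policy; its true value in this simulation is 0.5

    print("IPS estimate:", ips_value(logs, target))
    print("DR estimate: ", dr_value(logs, target, fit_reward_model(logs)))
```

Fitting the tabular model on the same logs makes the correction term cancel within each (context, action) cell, so DR collapses to the model's prediction here. Cross-fitting the reward model on separate folds avoids that and is closer to how DR is used in practice.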

Agent-based and Monte Carlo simulation

- Idea: Build a sandbox with user dynamics and platform rules, then simulate many rollouts to examine likely ranges, edge cases, and long-term effects. Google’s RecSim and its successor RecSim NG are canonical starting points for recommender simulations (Ie et al., 2019 arXiv; Google Research, 2021).

- When to use: High-stakes changes with complex feedback loops (marketplaces, long-term engagement) or when you want to stress-test adaptive strategies before real exposure.
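To show the Monte Carlo idea without any simulator library, here is a toy sandbox in Python. It is not RecSim or RecSim NG. The user model and every number in it are hypothetical:

- each active user has a daily click probability;
- each click under the variant erodes a satisfaction score;
- a user churns once satisfaction goes negative.

Running many rollouts while drawing the uncertain satisfaction cost from a range yields a distribution of outcomes rather than a single point.

```python
import random
import statistics


def simulate_rollout(click_boost, satisfaction_cost, n_users=200, days=30, seed=0):
    """One rollout of a toy engagement model; returns total clicks over the horizon.

    Each active user clicks daily with probability 0.3 + click_boost. Every click
    lowers that user's satisfaction by satisfaction_cost, and a user churns
    (stops clicking) once satisfaction drops below zero.
    """
    rng = random.Random(seed)
    satisfaction = [rng.uniform(0.5, 1.0) for _ in range(n_users)]
    active = [True] * n_users
    clicks = 0
    for _ in range(days):
        for u in range(n_users):
            if not active[u]:
                continue
            if rng.random() < 0.3 + click_boost:
                clicks += 1
                satisfaction[u] -= satisfaction_cost
                if satisfaction[u] < 0:
                    active[u] = False
    return clicks


def percentile_summary(samples):
    """(5th percentile, median, 95th percentile) of the simulated outcomes."""
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0], statistics.median(samples), cuts[-1]


if __name__ == "__main__":
    rng = random.Random(42)
    deltas = []
    for run in range(100):
        cost = rng.uniform(0.02, 0.15)  # hypothetical: uncertain long-term cost of the variant
        variant = simulate_rollout(click_boost=0.1, satisfaction_cost=cost, seed=run)
        baseline = simulate_rollout(click_boost=0.0, satisfaction_cost=0.0, seed=run)
        deltas.append(variant - baseline)
    print("click delta, variant minus baseline (5th pct, median, 95th pct):",
          percentile_summary(deltas))
```

In this toy model, a variant that boosts short-term clicks can still lose on total clicks when its satisfaction cost is high enough. That is the kind of feedback-loop effect a short live test can miss.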

When to use synthetic A/B—and when not to

Use it when:

- You need to shortlist many variants before a single live run.

- Traffic is limited or seasonality makes live tests slow/expensive.

- The change is high risk and you want guardrail confidence first.

- You’re tuning an adaptive policy (bandit/personalizer) and want offline safety checks.

Prefer a live randomized experiment when:

- Stakes are high, traffic is sufficient, and assumptions for simulation or OPE are shaky.

- You suspect strong interference (network effects) that your simulator or model does not capture.

- You lack the logs, covariates, or measurement discipline to support credible offline estimates.

A starter workflow you can adopt

Define metrics and guardrails

- Clarify your primary KPI and guardrail metrics (e.g., conversion, revenue, retention, latency). The industry-standard framing of an Overall Evaluation Criterion (OEC) and guardrails is covered in the 2020 textbook on trustworthy experimentation (Kohavi et al., Cambridge UP).

Data readiness checks

- Ensure reliable identifiers, exposure definitions, timestamps, and outcome windows. Detect and fix data-quality issues that would invalidate offline estimates.

- Diagnose allocation issues like sample-ratio mismatch before trusting any analysis; Microsoft provides a practical overview of SRM detection and remediation (Microsoft Research blog, 2020).
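A sample-ratio mismatch check is a chi-square goodness-of-fit test on assignment counts. The Python sketch below handles the two-arm case. There, the chi-square tail with one degree of freedom has the closed form erfc(sqrt(x / 2)), so no statistics library is needed. The function names and the 0.001 threshold are assumptions. A strict cutoff keeps false alarms rare when the check runs on every experiment.

```python
import math


def srm_p_value(n_control, n_treatment, expected_treatment_share=0.5):
    """Chi-square goodness-of-fit p-value for a two-arm split (1 degree of freedom)."""
    total = n_control + n_treatment
    expected = (total * (1 - expected_treatment_share), total * expected_treatment_share)
    observed = (n_control, n_treatment)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Survival function of chi-square with 1 df: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def has_srm(n_control, n_treatment, expected_treatment_share=0.5, threshold=0.001):
    """Flag a sample-ratio mismatch when the p-value falls below a strict threshold."""
    return srm_p_value(n_control, n_treatment, expected_treatment_share) < threshold


if __name__ == "__main__":
    print(has_srm(50_000, 50_100))  # False: within chance for a 50/50 split
    print(has_srm(50_000, 51_500))  # True: about 1.5% more treatment users than expected
```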

Choose the method to match the decision

- Variant pruning: log replay/bootstrapping.

- Targeting: uplift modeling with Qini/uplift evaluation (Gutierrez & Gérardy, 2017).

- Policy evaluation: OPE with doubly robust estimators (Dudík et al., 2011 ICML); consider pipelines like OBP (Saito et al., 2020).

- Complex dynamics: agent-based simulation with RecSim/RecSim NG (Ie et al., 2019; Google Research, 2021).

Apply variance reduction and calibration

- Where appropriate, use CUPED to increase sensitivity (Deng et al., 2013 ACM; Deng et al., 2023 arXiv). If modeling uplift, calibrate predicted vs. realized lift using holdouts and uplift curves.
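One simple way to check uplift calibration on a holdout is sketched below in Python:

1. Sort users by predicted uplift.
2. Split them into equal-size bins.
3. Compare each bin's mean prediction with its realized treated-minus-control lift.

The binning scheme, function name, and simulated holdout are illustrative assumptions. For a well-calibrated model, realized lift should track the predictions and rise across bins.

```python
import random
import statistics


def uplift_calibration(predicted, treated, outcome, n_bins=5):
    """Per bin of predicted uplift: (mean prediction, realized treated-minus-control lift)."""
    order = sorted(range(len(predicted)), key=lambda i: predicted[i])
    size = len(order) // n_bins
    table = []
    for b in range(n_bins):
        idx = order[b * size:] if b == n_bins - 1 else order[b * size:(b + 1) * size]
        t = [outcome[i] for i in idx if treated[i]]
        c = [outcome[i] for i in idx if not treated[i]]
        if t and c:  # skip bins that lack one of the arms
            mean_pred = statistics.fmean(predicted[i] for i in idx)
            table.append((mean_pred, statistics.fmean(t) - statistics.fmean(c)))
    return table


if __name__ == "__main__":
    rng = random.Random(9)
    # Hypothetical holdout where the model's predictions equal the true uplift.
    predicted = [rng.uniform(0.0, 0.3) for _ in range(20000)]
    treated = [rng.random() < 0.5 for _ in predicted]
    outcome = [int(rng.random() < 0.2 + (u if t else 0.0)) for u, t in zip(predicted, treated)]
    for mean_pred, realized in uplift_calibration(predicted, treated, outcome):
        print(f"predicted {mean_pred:.3f}  realized {realized:.3f}")
```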

Validate before trusting

- Backtest against prior launches: Did the offline method predict the correct sign and ordering? LinkedIn reports replay–online alignment when triaging feed models (LiRank, 2024). Spotify has shown offline-to-online alignment in playlist sequencing work using simulation and OPE (Spotify Research 2023).
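The backtest question can be scored with two small metrics, sketched here in Python:

- sign agreement: the share of past launches where the offline and online lifts have the same sign;
- ordering: Kendall's tau, using the tau-a variant in which tied pairs count as neither concordant nor discordant.

The five launches and their lifts in the example are made-up numbers for illustration.

```python
from itertools import combinations


def sign_agreement(offline, online):
    """Share of launches where offline and online lifts share a sign (0 counts as negative)."""
    return sum((a > 0) == (b > 0) for a, b in zip(offline, online)) / len(offline)


def kendall_tau(offline, online):
    """Kendall's tau-a: (concordant - discordant) / total pairs; tied pairs count as neither."""
    concordant = discordant = 0
    for i, j in combinations(range(len(offline)), 2):
        product = (offline[i] - offline[j]) * (online[i] - online[j])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    n_pairs = len(offline) * (len(offline) - 1) // 2
    return (concordant - discordant) / n_pairs


if __name__ == "__main__":
    # Made-up lifts (%) for five past launches: offline prediction vs. measured online result.
    offline = [0.8, 0.3, -0.2, 1.1, 0.1]
    online = [0.5, 0.4, -0.1, 0.9, -0.05]
    print(sign_agreement(offline, online))  # 0.8: the last launch flipped sign online
    print(kendall_tau(offline, online))  # 1.0: the ordering matched exactly
```

The example shows why both numbers matter. The method ranked these launches perfectly, yet it would have greenlit one change whose online effect turned out negative.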

Decide the live plan

- If synthetic A/B looks promising, run a smaller-scope online pilot with strict guardrails. If results diverge, investigate assumptions and data issues before full rollout.

Practical examples

- Email and lifecycle marketing: Use uplift modeling to identify segments that are most likely to respond to a new subject line or offer, validated via uplift curves and holdouts (Gutierrez & Gérardy, 2017 PMLR).

- Ranking/recommendation: Use log replay with offline metrics to rank candidate models, escalating the top ones to live A/B only after passing guardrails (LiRank, 2024 preprint).

- Personalization policies: Evaluate changes to a bandit’s exploration or reward shaping offline with doubly robust OPE before risking production traffic (Dudík et al., 2011 ICML; OBP 2020).

Tools you’ll likely use (2024–2025)

- Causal and uplift libraries: DoWhy (PyWhy) documentation and releases, EconML by Microsoft, and CausalML by Uber.

- Off-policy evaluation: Open Bandit Pipeline and Dataset (2020).

- Simulation: RecSim (2019) and RecSim NG (2021).

Risks, assumptions, and how to manage them

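- Overlap/positivity: The logging policy must have given nonzero probability to every action the target policy would take in the same context. Where it did not, OPE has no data to reweight and extrapolates dangerously. Doubly robust estimators can reduce this risk but not eliminate it, because in unsupported regions they rely entirely on their outcome model (Dudík et al., 2011 ICML).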

- Model misspecification: Outcome or propensity models that miss key confounders can bias estimates. Use sensitivity analyses and refutation tests (supported in libraries like DoWhy) to gauge robustness (DoWhy releases and docs).

- Dataset and behavior shift: If user behavior or traffic mix changes, past logs may mislead. Periodically revalidate with fresh data and small online pilots. CUPED can reduce variance but cannot correct drift (Deng et al., 2013 ACM).

- Interference and feedback loops: Simulators often simplify multi-agent effects and network externalities. RecSim/RecSim NG help prototype long-term dynamics but still require live confirmation (Google Research on RecSim NG, 2021).
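The overlap assumption can be checked before trusting any OPE number. This Python sketch reports two common diagnostics for a deterministic target policy:

- the share of contexts where the logging policy gives the target's action zero probability;
- the largest importance weight, together with the Kish effective sample size (sum w)^2 / sum w^2 of the IPS weights.

The `logging_prob` function, the log format `(context, action, logging_probability)`, and the example numbers are hypothetical.

```python
def unsupported_share(contexts, logging_prob, target):
    """Share of contexts where the logging policy never plays the target policy's action."""
    return sum(logging_prob(x, target(x)) == 0 for x in contexts) / len(contexts)


def weight_diagnostics(logs, target):
    """Max IPS weight and Kish effective sample size (sum w)^2 / sum w^2.

    logs: iterable of (context, logged_action, logging_probability).
    """
    weights = [1.0 / p if target(x) == a else 0.0 for x, a, p in logs]
    total, total_sq = sum(weights), sum(w * w for w in weights)
    ess = total ** 2 / total_sq if total_sq else 0.0
    return max(weights), ess


if __name__ == "__main__":
    def logging_prob(x, a):
        # Hypothetical logging policy: action 2 is never shown in context 2.
        return {0: 0.5, 1: 0.5, 2: 0.0}[a] if x == 2 else 1 / 3

    def target(x):
        return x  # hypothetical target: always play the action matching the context

    print("unsupported share:", unsupported_share([0, 1, 2], logging_prob, target))
    logs = [(0, 0, 1 / 3), (0, 1, 1 / 3), (1, 1, 1 / 3), (2, 0, 0.5), (2, 1, 0.5)]
    print("max weight, ESS:", weight_diagnostics(logs, target))
```

A nonzero unsupported share means no reweighting can recover that part of the policy's value. An effective sample size far below the number of logged events signals high-variance estimates.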

Ready-to-run checklist

- Problem and KPI are crisply defined, with guardrails agreed.

- Logs contain exposures, contexts/covariates, outcomes, and stable IDs; windows are defined.

- SRM and data-quality checks pass (Microsoft SRM guidance, 2020).

- Method matches the decision (replay vs. uplift vs. OPE vs. simulation).

- Assumptions register created: overlap, stationarity, model classes, leakage rules.

- Variance reduction and calibration steps planned (e.g., CUPED; uplift curves).

- Backtests done; uncertainty quantified with confidence intervals.

- Live pilot plan in place if synthetic results are green.

FAQ

- If synthetic says “win” but the online A/B says “no effect,” what happened?

  - Common culprits: overlap violations, leakage (using post-treatment features), model misspecification, or dataset shift. Re-check assumptions, rerun sensitivity analyses, and verify your measurement windows and guardrails. Use a smaller online pilot to recalibrate.

- Can I skip all live tests if my simulators are excellent?

  - No. Even sophisticated OPE estimators and simulators produce estimates, not measurements. Treat synthetic A/B as a strong filter and a rehearsal, not a replacement. Textbook guidance on trustworthy experimentation emphasizes staged rollouts and guardrails (Kohavi et al., 2020).

- What’s the minimum data I need?

  - At a minimum: reliable exposure logs, user/context covariates, and outcome events with timestamps. Without these, you’re better off running a small, well-instrumented online test first.

- Where do I start with tools?

  - For uplift/causal: DoWhy, EconML, CausalML. For OPE: Open Bandit Pipeline. For simulation: RecSim/RecSim NG. Each has tutorials and examples to shorten your ramp-up (OBP 2020; RecSim 2019).

Key takeaways

- Synthetic A/B is a practical, safety-first complement to live experiments.

- Pick the method to match the decision: replay for pruning, uplift for targeting, OPE for policy evaluation, simulation for complex dynamics.

- Make assumptions explicit, validate with backtests, and—when stakes are high—confirm with a small live pilot before scaling.