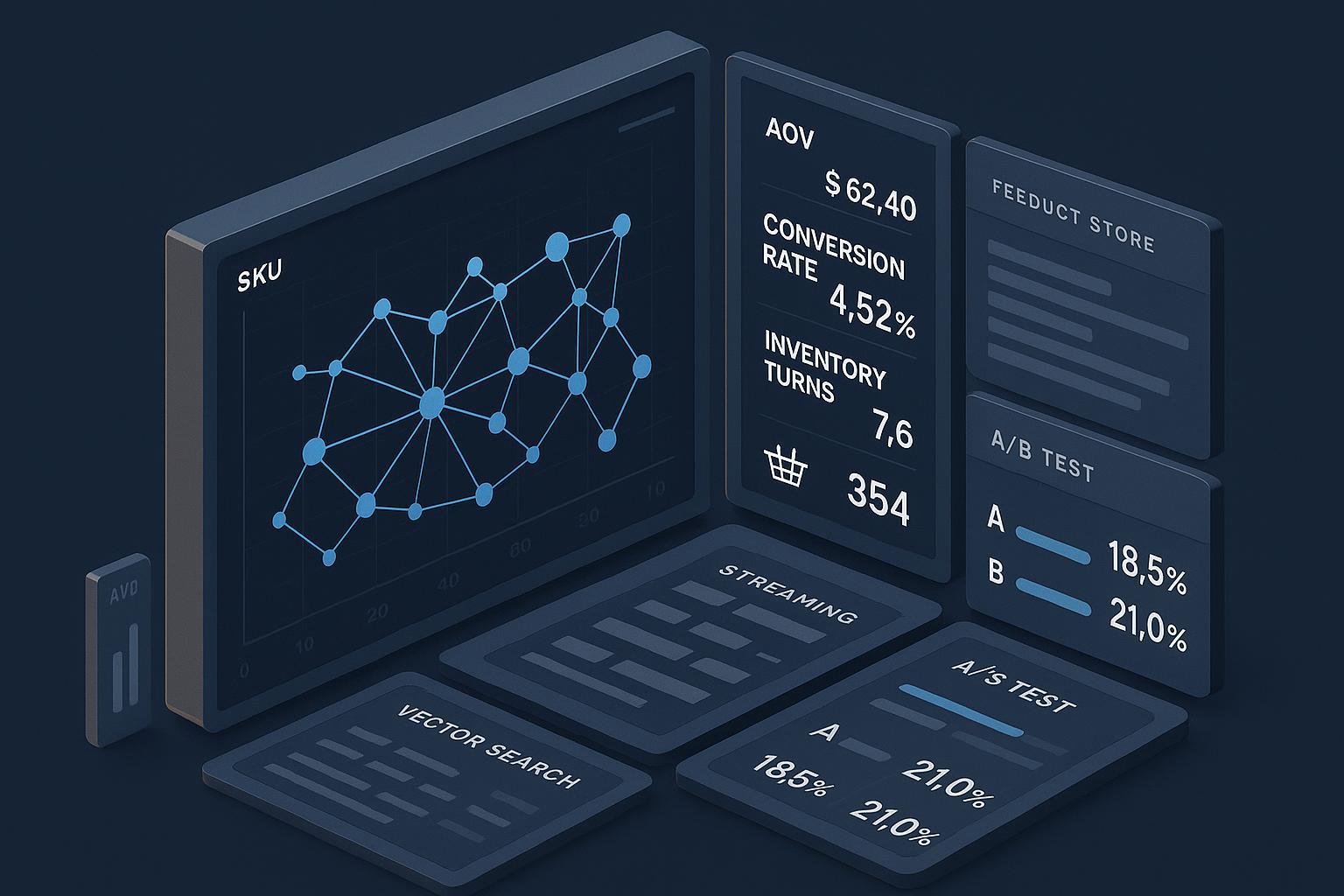

SKU Affinity Modeling: A 2025 Production-Grade Playbook for Retail and Ecommerce

If you still equate “affinity” with static market basket rules, you’re leaving money on the table. In 2025, SKU affinity modeling is multi-basket, time-aware, omni-channel, and operational by design. The goal isn’t only to surface “frequently bought together,” but to predict complementary and sequential purchases, feed personalized search and recommendations, inform assortment and inventory, and do it all with governance guardrails.

This playbook distills what consistently works in practice—where to start, how to architect, how to measure, and how to avoid the failure modes I’ve seen derail otherwise promising initiatives.

1) What SKU Affinity Modeling Means in 2025 (and what it’s not)

Traditional market basket analysis (MBA) centers on single-transaction patterns scored by support, confidence, and lift. It’s useful, but static and short-sighted: it only sees what landed in the same basket. Affinity modeling in 2025 tracks cross-session, cross-basket relationships and changing contexts (seasonality, promotions, geographies), enabling predictive, dynamic decisions beyond one-time co-purchase rules. Veryfi’s 2025 explainer on cross-basket analytics captures this distinction well, highlighting the temporal and behavioral breadth of cross-basket approaches (Veryfi 2025 cross-basket vs. MBA). In ecommerce personalization practice, modern stacks use affinity signals across channels to tailor whole experiences, not just checkout add-ons, as framed in Netcore’s 2025 expert guide on personalization (Netcore 2025 personalization guide).
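To make support, confidence, and lift concrete, here is a minimal pure-Python sketch that scores every ordered SKU pair over a toy set of baskets. The `pair_rules` helper and the basket data are hypothetical, and enumerating all pairs is only viable for small catalogs; at scale you would reach for FP-Growth or a distributed implementation. Lift divides confidence by the consequent’s baseline purchase rate, so values above 1 indicate positive affinity.

```python
from collections import Counter
from itertools import combinations


def pair_rules(baskets):
    """Support, confidence, and lift for every ordered SKU pair (A -> B) seen together."""
    n = len(baskets)
    item_counts, pair_counts = Counter(), Counter()
    for basket in baskets:
        items = sorted(set(basket))  # count each SKU once per basket
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    rules = {}
    for (a, b), together in pair_counts.items():
        for x, y in ((a, b), (b, a)):
            confidence = together / item_counts[x]    # P(y in basket | x in basket)
            lift = confidence / (item_counts[y] / n)  # confidence relative to y's base rate
            rules[(x, y)] = {"support": together / n, "confidence": confidence, "lift": lift}
    return rules


# Hypothetical baskets for illustration only.
baskets = [
    ["pasta", "sauce", "parmesan"],
    ["pasta", "sauce"],
    ["pasta", "wine"],
    ["sauce", "bread"],
]
rules = pair_rules(baskets)
print(rules[("parmesan", "pasta")]["lift"])  # 1.333..., parmesan buyers always buy pasta
print(rules[("pasta", "sauce")]["lift"])     # 0.888..., below 1 despite 50% support
```

The pasta/sauce pair co-occurs in half of all baskets yet scores a lift below 1, which is why support alone is a weak affinity signal.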

Where it applies today:

- Personalized recommendations and search/ranking

- Assortment and category management

- Inventory forecasting and stockout substitution

- Price and promotion optimization

- Store/warehouse placement and omni-fulfillment routing

2) What “Good” Looks Like: Outcomes You Should Expect

When executed with discipline, SKU affinity programs deliver measurable commercial impact:

- Personalized offers and product experiences can lift sales and margins. For example, McKinsey reported in 2025 that targeted promotions produced a 1–2% sales lift and a 1–3% margin improvement, with one program achieving a 3% annualized margin boost in three months (McKinsey 2025 personalized marketing impact).

- Product discovery improvements translate to higher add-to-cart rates and revenue per visit. In 2024, The Vitamin Shoppe saw a +11% lift in category add-to-cart rate and notable gains in search KPIs within two weeks of launching an AI-driven product discovery program (Bloomreach 2024 case results).

- AI-powered experiences can materially increase engagement during peak traffic. Adobe’s 2025 Digital Trends reported a dramatic year-over-year surge in retail site traffic from chatbot interactions on 2024 Cyber Monday, underscoring demand for AI-infused journeys (Adobe Digital Trends 2025).

Treat these as directional benchmarks. Your exact gains depend on baseline maturity, catalog complexity, traffic levels, and the rigor of experimentation.

3) Data Foundations: Identity, Taxonomy, Freshness, Consent

In practice, most affinity initiatives stumble on data plumbing before they ever hit modeling. Get these right:

- Product identity at scale: Use Global Trade Item Numbers (GTINs) as the canonical key wherever possible. GS1 places the responsibility for numbering trade items with the brand owner, enabling unambiguous product identity across channels and partners, which is critical for clean joins and cross-channel analytics (GS1: responsibility for GTIN assignment). If you operate brick-and-mortar stores, plan for 2D barcode adoption and correct parsing to maintain identity fidelity through POS and beyond (GS1 2D in retail guidelines).

- Taxonomy alignment: Normalize products to a unified taxonomy (e.g., Google Product Taxonomy, UNSPSC) and maintain hierarchical mappings. Feed model performance signals (coverage, novelty, error analyses) back into the taxonomy to improve its quality over time.

- Event streaming and feature store: Ingest clickstream and transactions in real time (Kafka/Kinesis/Pub/Sub), compute session and short-term behavior features in stream processing (Flink/Spark/Kafka Streams), and serve them through an online feature store with versioning and lineage. Sub-minute freshness for user-behavioral features is now common practice in high-traffic personalization systems; architect for seconds-to-minutes SLAs and hourly/daily batch refresh for slower features. For concrete production patterns, see deep recommender system designs integrating vector retrieval and streaming pipelines in Databricks’ practitioner series (Databricks deep recommender systems).

- Consent and privacy gating: Merge consent signals into the feature store and serving path so that non-consented data cannot leak into training or inference. Profile-level flags should gate which features are computed, stored, and used online. Nailing this early avoids later rework and compliance risk.
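Because every GTIN ends in a GS1 mod-10 check digit, a cheap validation gate at ingestion catches typos and truncated codes before they corrupt joins. A minimal sketch, assuming codes arrive as digit strings; the helper names are hypothetical, and a valid check digit confirms only the format, not that the brand owner actually assigned the number. Left-padding to 14 digits is a common way to store GTIN-8/12/13/14 under a single key.

```python
def gtin_check_digit(body: str) -> int:
    """GS1 mod-10 check digit for a GTIN body (every digit except the last)."""
    total = 0
    # Weights alternate 3, 1, 3, ... starting from the rightmost body digit.
    for i, ch in enumerate(reversed(body)):
        total += int(ch) * (3 if i % 2 == 0 else 1)
    return (10 - total % 10) % 10


def is_valid_gtin(code: str) -> bool:
    """Accept GTIN-8/12/13/14 strings whose final digit matches the computed check digit."""
    if len(code) not in (8, 12, 13, 14) or not (code.isascii() and code.isdigit()):
        return False
    return gtin_check_digit(code[:-1]) == int(code[-1])


def to_gtin14(code: str) -> str:
    """Left-pad a valid GTIN with zeros so every format shares one 14-digit key."""
    if not is_valid_gtin(code):
        raise ValueError(f"invalid GTIN: {code!r}")
    return code.zfill(14)


print(is_valid_gtin("4006381333931"))  # True (EAN-13 with a correct check digit)
print(to_gtin14("036000291452"))       # 00036000291452 (UPC-A padded to GTIN-14)
```

Padding with leading zeros does not change the check digit, because the weights are applied from the right.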

4) Modeling Approaches: How to Choose for Affinity Use Cases

No single model serves every scenario. Here’s how I select in 2025:

- Association rule mining (Apriori/FP-Growth): Use when you need interpretable, low-latency co-purchase rules (e.g., quick “frequently bought together” widgets) and your data density supports it. Limitations include lack of sequential awareness and sensitivity to sparsity.

- Matrix factorization and Neural Collaborative Filtering (NCF): Strong baselines for global user–item relationships; neural variants capture richer nonlinearities. They underperform when session context or sequences dominate behavior.

- Graph-based recommenders (including GNNs): Ideal when relationships extend beyond pairwise co-occurrence, such as complementarity, multi-hop paths, and long-tail coverage. Expect higher infra complexity and compute cost. For a current technical overview, see the 2024 ACM survey on GNN-based recommendation (ACM 2024 GNN recommender survey).

- Sequential/session-based Transformers: Best for next-item prediction in session commerce, where recency and order matter (drops, promotions, fast fashion). Requires robust streaming data and careful regularization.

- Hybrid stacks with reranking: In production, I combine retrieval (e.g., Two-Tower embeddings with vector search) with a learned ranker (NCF/DLRM/Transformer) and a business-rule/risk reranker that respects margin, inventory, and diversity constraints. For architecture patterns and vector retrieval integration, see Databricks’ guidance on deep recommenders and vector stores (Databricks vector retrieval patterns) and AWS’s practical recipes for managed recommenders (AWS Personalize patterns and recipes).
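As a reference point for the MF baseline, here is a minimal matrix factorization trained with stochastic gradient descent on (user, item, label) triples. It is a sketch under simplifying assumptions: the toy data, hyperparameters, and function names are hypothetical, and production implicit-feedback data (views, carts, purchases) is more commonly fit with weighted ALS or a pairwise loss such as BPR, which also learn from unobserved items.

```python
import numpy as np


def train_mf(interactions, n_users, n_items, k=8, lr=0.05, reg=0.01, epochs=200, seed=0):
    """Fit user factors P and item factors Q so that P[u] @ Q[i] approximates each label."""
    rng = np.random.default_rng(seed)
    P = rng.normal(scale=0.1, size=(n_users, k))
    Q = rng.normal(scale=0.1, size=(n_items, k))
    for _ in range(epochs):
        for idx in rng.permutation(len(interactions)):  # reshuffle every epoch
            u, i, r = interactions[idx]
            err = r - P[u] @ Q[i]
            p_old = P[u].copy()  # the item update must use the pre-update user vector
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_old - reg * Q[i])
    return P, Q


def rmse(interactions, P, Q):
    errors = [r - P[u] @ Q[i] for u, i, r in interactions]
    return float(np.sqrt(np.mean(np.square(errors))))


# Hypothetical data: (user, item, label), 1.0 = purchased, 0.0 = viewed without buying.
data = [(0, 0, 1.0), (0, 1, 1.0), (1, 0, 1.0), (1, 2, 0.0),
        (2, 1, 1.0), (2, 2, 1.0), (3, 0, 0.0), (3, 2, 1.0)]
P, Q = train_mf(data, n_users=4, n_items=3)
scores = P @ Q.T  # affinity estimate for every (user, item) pair, including unseen ones
print(round(rmse(data, P, Q), 3))
```

Ranking each user’s unseen items by `scores` gives the baseline list that richer models must beat.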

Selection rule of thumb:

- Low data maturity or need for a fast win → association rules + MF baseline

- Rich behavioral data, need for personalization at scale → Two-Tower retrieval + neural ranker

- Complex complementarity, long-tail catalog → add graph-based signals/reranker

- Session-driven business (flash sales, grocery, QSR) → add sequential Transformers

5) Validation That Predicts Reality: Offline, Online, and Causal

I’ve stopped launching any model that only looks great offline. Build a validation ladder:

- Offline with time-based splits: Use temporal splits to mimic production and prevent leakage from future data. Track Precision@K, Recall@K, NDCG@K, and business-aligned beyond-accuracy metrics (coverage, diversity, novelty). For current perspectives on diversity/novelty metrics, see the 2024 “beyond-accuracy” survey (Beyond-accuracy metrics survey, 2024) and ACM’s work on diversity/serendipity objectives (ACM diversity/serendipity overview). For broader tutorials and evaluation pitfalls, the RecSys 2024 tutorial index is a useful waypoint (RecSys 2024 tutorials index).

- Online A/B with guardrails: Start with low-traffic canaries. Measure conversion rate, revenue per session, AOV, add-to-cart rate, and recommendation CTR, and include guardrails for latency, error rate, and inventory health. Expect some offline–online divergence; tune feature freshness, exploration, and reranking constraints accordingly.

- Causal rigor for incrementality: Recommendations shape what users are exposed to, so naive attribution credits them with purchases that would have happened anyway. Where that exposure bias matters, use holdout-based incrementality tests or causal inference. The IAB-MRC Retail Media Measurement explainer (2024) summarizes standards for incrementality testing, data quality, and attribution practice that you can adapt for onsite personalization (IAB-MRC retail media measurement explainer).
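The offline rung of the ladder fits in a few functions: a time-based split plus binary-relevance Precision@K, Recall@K, NDCG@K, and catalog coverage. The function names and the (user, item, timestamp) event shape are assumptions for illustration; libraries differ in details such as how NDCG treats users with fewer than K relevant items (here the ideal DCG is capped at min(|relevant|, K)).

```python
import math


def time_split(events, cutoff):
    """Split (user, item, timestamp) events at `cutoff` so training never sees the future."""
    train = [e for e in events if e[2] < cutoff]
    test = [e for e in events if e[2] >= cutoff]
    return train, test


def precision_recall_at_k(ranked, relevant, k):
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / k, (hits / len(relevant) if relevant else 0.0)


def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG@K: DCG of the ranked list over the best achievable DCG."""
    dcg = sum(1.0 / math.log2(pos + 2) for pos, item in enumerate(ranked[:k]) if item in relevant)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(min(len(relevant), k)))
    return dcg / idcg if idcg > 0 else 0.0


def catalog_coverage(rec_lists, catalog_size):
    """Share of the catalog that appears in at least one user's recommendation list."""
    return len({item for recs in rec_lists for item in recs}) / catalog_size


ranked = ["sku-a", "sku-b", "sku-c", "sku-d"]
relevant = {"sku-b", "sku-d"}  # items the user bought after the cutoff
print(precision_recall_at_k(ranked, relevant, k=4))  # (0.5, 1.0)
print(round(ndcg_at_k(ranked, relevant, k=4), 3))    # 0.651
```

Relevant items must come only from the test window; computing them from the full history reintroduces the leakage the time split is meant to prevent.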

6) Serving and MLOps: From Lab to Low-Latency Reality

The architecture I rely on in 2025 has three layers:

- Candidate retrieval: A Two-Tower model produces user and item embeddings, and nearest neighbors are retrieved via vector search (FAISS/ScaNN/managed vector store). This narrows tens of thousands of SKUs to a few hundred candidates within milliseconds.

- Learned ranking: A neural ranker (NCF/DLRM/Transformer) scores candidates using real-time features (recent interactions, price, margin, availability) and slow features (category, brand, content embeddings). Batch features refresh hourly/daily; streaming features update in seconds.

- Business/risk reranking: Constraints and policies (inventory availability, margin thresholds, category diversity, personalization caps). This layer ensures the list is not just accurate but commercially viable.
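The three layers compose as a simple pipeline. This NumPy sketch is hypothetical end to end: exact inner-product search stands in for FAISS/ScaNN, a margin-blended dot product stands in for the learned ranker, and the reranker applies two example business rules (drop out-of-stock SKUs, cap items per category). Random vectors replace trained Two-Tower embeddings.

```python
import numpy as np


def retrieve(user_vec, item_emb, n_candidates):
    """Stage 1: exact inner-product top-N (a stand-in for an ANN index)."""
    scores = item_emb @ user_vec
    top = np.argpartition(-scores, n_candidates - 1)[:n_candidates]
    return top[np.argsort(-scores[top])]  # candidate ids, best first


def rank(candidates, user_vec, item_emb, margin, w_margin=0.2):
    """Stage 2: hypothetical scorer blending affinity with item margin."""
    affinity = item_emb[candidates] @ user_vec
    blended = affinity + w_margin * margin[candidates]
    return candidates[np.argsort(-blended)]


def rerank(ranked, in_stock, category, max_per_category, n):
    """Stage 3: drop out-of-stock SKUs and cap how many items each category contributes."""
    picked, per_cat = [], {}
    for item in ranked:
        cat = int(category[item])
        if not in_stock[item] or per_cat.get(cat, 0) >= max_per_category:
            continue
        per_cat[cat] = per_cat.get(cat, 0) + 1
        picked.append(int(item))
        if len(picked) == n:
            break
    return picked


# Hypothetical catalog: random vectors in place of trained Two-Tower embeddings.
rng = np.random.default_rng(7)
items = rng.normal(size=(1_000, 16))
user = rng.normal(size=16)
margin = rng.uniform(0.0, 1.0, size=1_000)
in_stock = rng.uniform(size=1_000) > 0.1
category = rng.integers(0, 20, size=1_000)

cands = retrieve(user, items, n_candidates=200)
recs = rerank(rank(cands, user, items, margin), in_stock, category, max_per_category=2, n=10)
```

Keeping business rules in a separate final stage lets merchandisers change caps or constraints without retraining either model.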

Operational patterns and references:

- Deep recommender pipelines and vector retrieval integration are outlined in Databricks’ practitioner blog series (Databricks deep recommender systems).

- Managed stacks show end-to-end MLOps examples. One case: LotteON used Amazon SageMaker and MLOps automation to build a personalized recommendation system, illustrating robust training, deployment, and inference operations in retail-scale contexts (AWS LotteON recommender case).

- Plan elasticity and resource scaling for peak events (e.g., Cyber Week). Google Cloud’s performance framework covers elasticity tactics and resource planning patterns applicable to ML serving clusters (Google Cloud elasticity framework).

Key SLOs I use:

- P50/P95 latency: <50 ms / <150 ms per request for retrieval + ranking under nominal load

- Online feature freshness: sub-minute for behavior-driven features; alert if freshness > 1–2 minutes

- Serving availability: ≥99.9% with graceful fallbacks (popular items, last-good model)

- Data staleness and drift monitors: PSI/KL thresholds with automatic retrain triggers
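For the drift monitors, the Population Stability Index (PSI) compares a feature’s live distribution with a reference window. A minimal sketch, assuming a numeric feature and decile bins taken from the reference sample; the 0.25 alert level is a widely used rule of thumb rather than a standard, and `should_retrain` is a hypothetical trigger that should be tuned per feature before it drives automatic retraining.

```python
import numpy as np

PSI_ALERT = 0.25  # common rule-of-thumb threshold; tune per feature


def psi(reference, live, n_bins=10, eps=1e-6):
    """Population Stability Index of `live` against `reference` using reference-quantile bins."""
    reference, live = np.asarray(reference, float), np.asarray(live, float)
    # Interior cut points at reference quantiles give n_bins roughly equal-mass bins.
    cuts = np.quantile(reference, np.linspace(0, 1, n_bins + 1)[1:-1])
    ref_frac = np.bincount(np.searchsorted(cuts, reference, side="right"), minlength=n_bins) / len(reference)
    live_frac = np.bincount(np.searchsorted(cuts, live, side="right"), minlength=n_bins) / len(live)
    # Clip empty bins so the log term stays finite.
    ref_frac, live_frac = np.clip(ref_frac, eps, None), np.clip(live_frac, eps, None)
    return float(np.sum((live_frac - ref_frac) * np.log(live_frac / ref_frac)))


def should_retrain(reference, live, threshold=PSI_ALERT):
    """Hypothetical retrain trigger: fire when drift exceeds the threshold."""
    return psi(reference, live) > threshold


rng = np.random.default_rng(0)
reference = rng.normal(size=20_000)
print(should_retrain(reference, rng.normal(size=20_000)))           # False (same distribution)
print(should_retrain(reference, rng.normal(loc=1.0, size=20_000)))  # True (mean shifted by 1 sd)
```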

7) Privacy-by-Design: Compliance You Operationalize, Not Bolt On

Affinity modeling qualifies as profiling in many jurisdictions. Build compliance into the pipeline:

- Lawful basis and transparency: Ensure clear notices and consent/opt-out pathways aligned to your region. The European Data Protection Board’s 2025 updates emphasize clarity and coordinated enforcement under GDPR; use them to inform your notices and DPIA triggers (EDPB 2025 GDPR updates).

- US state regulations: Treat CCPA/CPRA requirements (disclosure, access, deletion, and opt-out of the sale or sharing of personal information) as table stakes (California AG CCPA overview).

- Risk management framework: Adopt control families and documentation aligned to the NIST AI Risk Management Framework (governance, transparency, fairness, privacy) so model changes and new features follow a repeatable, auditable path (NIST AI RMF portal).

- Operational controls: Consent-gated features; data minimization and pseudonymization; retention limits; model cards; human-in-the-loop escalation for significant decisions. Bake these controls into CI/CD and the feature store so they’re enforced automatically.
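Consent-gated features can be enforced with a deny-by-default filter in the serving path: a feature reaches the model only if it is registered with a processing purpose and the profile has consented to that purpose. The registry, purpose labels, and feature names below are hypothetical; a real system would read consent state from its consent management platform and apply the same filter at feature computation and storage time, not only at serving.

```python
# Hypothetical registry: each online feature is tagged with the consent purpose it needs.
FEATURE_PURPOSES = {
    "recent_views": "personalization",
    "cart_affinity": "personalization",
    "cross_site_segment": "ad_sharing",
}


def gate_features(consents, features, purposes=FEATURE_PURPOSES):
    """Deny by default: keep a feature only if it is registered and its purpose is consented."""
    return {
        name: value
        for name, value in features.items()
        if purposes.get(name) in consents  # unregistered features map to None and are dropped
    }


features = {
    "recent_views": ["sku-1", "sku-2"],
    "cross_site_segment": "deal_seekers",
    "unregistered": 1,
}
print(gate_features({"personalization"}, features))  # {'recent_views': ['sku-1', 'sku-2']}
```

Dropping unregistered features by default means a newly added feature cannot reach production until someone assigns it a purpose, which doubles as a review checkpoint.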

8) Common Failure Modes (and how to avoid them)

- Popularity traps: Models over-recommend bestsellers, starving the long tail and cannibalizing discovery. Mitigation: include coverage/diversity objectives and exploration; add graph-based signals to surface complementary long-tail items; implement diversity-aware reranking.

- Data leakage: Using future or post-exposure signals in training inflates offline metrics but fails online. Mitigation: time-based splits; strict feature lineage and “no peeking” checks in CI.

- Cold start ignored: New SKUs and new users receive poor recommendations. Mitigation: content-based embeddings from titles/descriptions/images; seller/brand priors; hierarchical smoothing; campaign-level bootstrapping.

- Inventory blind spots: Recommending out-of-stock or slow-moving SKUs harms trust and P&L. Mitigation: real-time availability features; reranker constraints; margin- and inventory-aware objective blending.

- Over-optimizing offline: Great NDCG doesn’t guarantee revenue lift. Mitigation: tight offline–online metric mapping; small-scope online trials; causal lift tests.

- Feature freshness debt: Stale user/session features ruin relevance. Mitigation: streaming features with freshness SLOs; alerting and fallbacks.

- Privacy retrofits: Trying to add compliance late forces rework. Mitigation: consent gating and DPIAs from day one; model cards in the release process.
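For the diversity-aware reranking mitigation, Maximal Marginal Relevance (MMR) is one well-known technique: it greedily picks the item that best balances relevance against similarity to items already selected. The sketch assumes candidate relevance scores and item embeddings are already available; `lam` (1.0 means pure relevance) is a tuning knob, and other diversity methods such as determinantal point processes are equally valid choices.

```python
import numpy as np


def mmr_rerank(relevance, embeddings, k, lam=0.7):
    """Maximal Marginal Relevance: trade relevance against similarity to already-picked items."""
    norm = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = norm @ norm.T  # cosine similarity between candidates
    selected, remaining = [], list(range(len(relevance)))

    def mmr_score(i):
        redundancy = max(sim[i, j] for j in selected) if selected else 0.0
        return lam * relevance[i] - (1 - lam) * redundancy

    while remaining and len(selected) < k:
        best = max(remaining, key=mmr_score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical candidates: items 0 and 1 are near-duplicates, item 2 is a different product.
relevance = np.array([1.0, 0.95, 0.8])
embeddings = np.array([[1.0, 0.0], [1.0, 0.01], [0.0, 1.0]])
print(mmr_rerank(relevance, embeddings, k=2, lam=1.0))  # [0, 1] (pure relevance)
print(mmr_rerank(relevance, embeddings, k=2, lam=0.7))  # [0, 2] (near-duplicate penalized)
```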

9) Roles and Cadence: How High-Performing Teams Execute

Successful programs run on clear ownership and a weekly rhythm:

- Data Science: Own problem framing, feature design, model selection, and offline evaluation. Write experiment briefs linking offline metrics to business KPIs; define guardrails and counterfactual plans.

- ML Engineering / Platform: Build the feature store (online/offline), training pipelines, model registry, deployment automation, observability, drift detection, and retraining jobs.

- Software Engineering: Integrate APIs/widgets/search hooks; guarantee latency and reliability; implement fallbacks and client-side A/B exposure logging with schema governance.

- Product & Merchandising: Set objectives and constraints (margin, inventory, category exposure); approve curated business rules; provide qualitative feedback loops.

- Privacy/Legal/Security: Conduct DPIAs; validate notices and consent; audit access controls and retention; sign off on sensitive feature usage.

Weekly sprint template that has worked for me:

- Monday: Review last week’s online KPIs, experiment readouts, drift/freshness dashboards

- Midweek: Ship one incremental improvement (feature tweak, reranker rule, candidate filter)

- Friday: Plan next week’s A/Bs and deployment; update model card and documentation

10) A 90-Day Rollout Plan You Can Execute

Days 1–30: Foundations and Baselines

- Scope: Choose 1–2 surfaces (e.g., PDP recommendations and search results page) and 1–2 categories with sufficient traffic.

- Data: Implement GTIN-based product identity and taxonomy mapping; stand up streaming event collection; define online feature store schemas and freshness SLOs.

- Modeling: Train association rule and MF/NCF baselines; instrument time-based offline evaluation with NDCG@K, coverage, diversity.

- Governance: Draft DPIA, publish notices, wire consent gating to the feature store.

- Success criteria: Offline metrics beat existing baseline by agreed deltas; pipeline SLOs met in staging.

Days 31–60: First Production Launch and Learning

- Serving: Deploy Two-Tower retrieval + neural ranker; integrate business/risk reranker (inventory and margin constraints).

- Experiments: Run A/B on 10–20% traffic with guardrails; monitor conversion/AOV/add-to-cart and latency/availability.

- Observability: Stand up dashboards for drift, freshness, and error budgets; configure alerts and automated retraining triggers.

- Governance: Finalize the model card; validate that CCPA/GDPR data-subject requests (access, deletion, opt-out) flow through end to end.

- Success criteria: Stat-sig lift on at least one business KPI; no SLO breaches; privacy controls verified.
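For the stat-sig criterion on a conversion KPI, a two-proportion z-test is the simplest check. This is a sketch with hypothetical counts; it assumes a fixed, pre-registered sample size and independent sessions. Ratio metrics such as AOV or revenue per session need different treatment (for example the delta method or a bootstrap), and testing several KPIs at once calls for a multiple-comparison correction.

```python
import math


def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates, using a pooled standard error."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical readout: control converts 500/10,000 sessions, treatment 600/10,000.
z, p = two_proportion_ztest(500, 10_000, 600, 10_000)
print(f"z={z:.2f}, p={p:.4f}")
```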

Days 61–90: Scale and Sophistication

- Modeling: Add graph-based signals or sequence model for session-heavy surfaces; introduce diversity-aware reranking.

- Surfaces: Expand to additional placements (homepage, cart, email); integrate with personalized search.

- Commercial: Add promotion/price optimization hooks; coordinate with merchandising to align category goals.

- Success criteria: Sustained KPI lift at broader exposure; enhanced catalog coverage; stable latency at peak.

11) Tooling and Reading List (curated)

- Modeling and architecture patterns: See Databricks’ hands-on series for deep recommenders and vector retrieval integration (Databricks recommender systems overview).

- Managed recommender workflows and MLOps: Review the LotteON personalization system on SageMaker for a reference deployment pattern (AWS LotteON recommender case).

- GNN-based recommenders: Technical landscape via the 2024 ACM survey (ACM 2024 GNN recommender survey).

- Evaluation: Beyond-accuracy metrics survey (2024) and RecSys 2024 tutorial index for practical guidance (Beyond-accuracy metrics survey, 2024; RecSys 2024 tutorials index).

- Privacy & governance: EDPB 2025 GDPR updates, CCPA AG overview, and NIST AI RMF to structure your controls (EDPB 2025 GDPR updates; California AG CCPA overview; NIST AI RMF portal).

- Personalization outcomes and business cases: McKinsey 2025, Bloomreach 2024 cases, Adobe Digital Trends 2025 (McKinsey 2025 impact; Bloomreach 2024 case results; Adobe Digital Trends 2025).

- Elasticity planning: Google Cloud’s guidance on elasticity and resource allocation for peak events (Google Cloud elasticity framework).

Closing advice

Don’t chase the fanciest model out of the gate. Most of the ROI comes from getting identity, freshness, evaluation, and guardrails right—and from tight weekly iteration with clear roles. Once your baseline is solid and your validation predicts online reality, layering in graph or sequence models becomes a force multiplier rather than technical debt.