How to Build a Scalable Content Engine

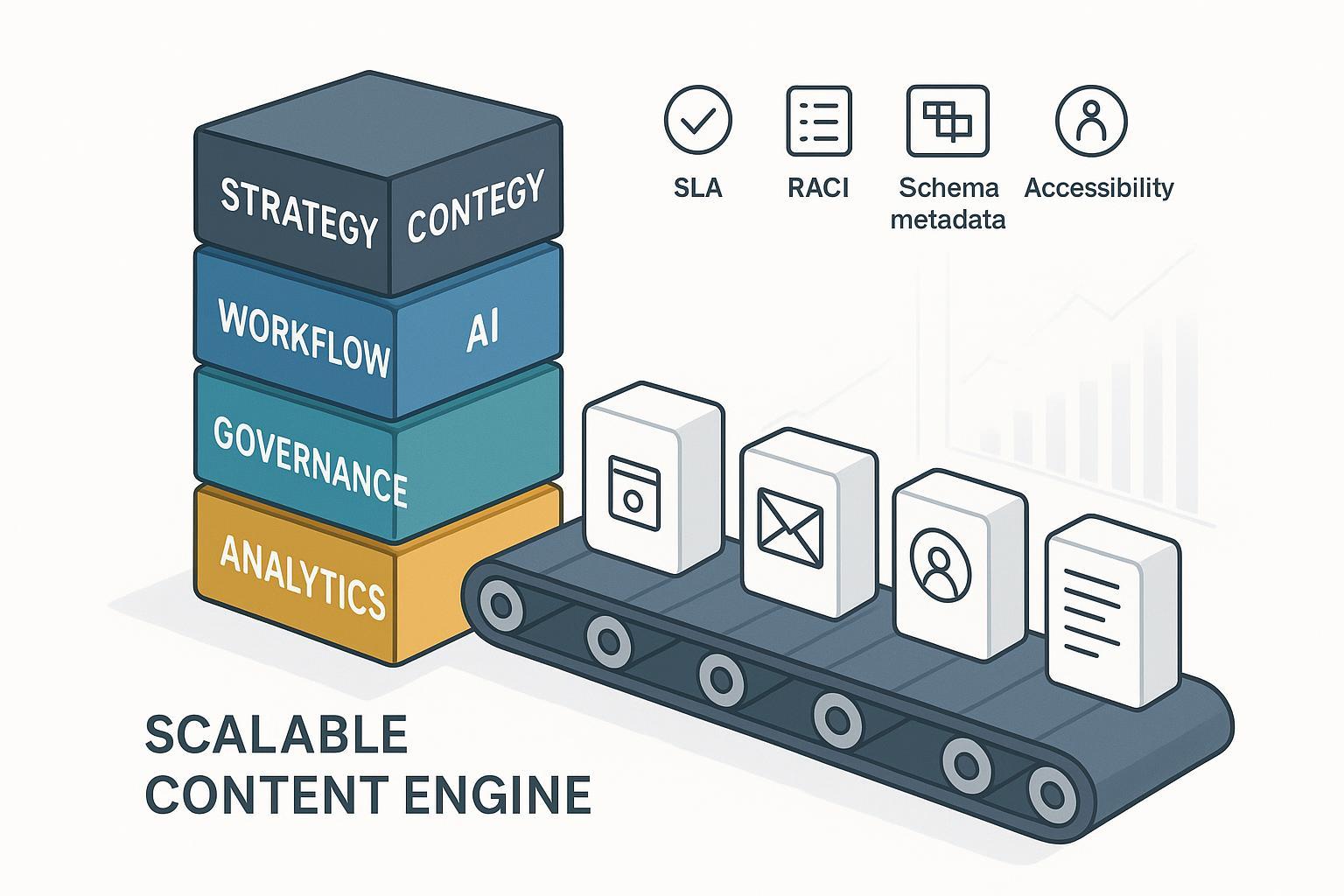

A scalable content engine is the system that turns strategy into a steady flow of high‑quality, multi‑channel assets—on time, on brand, and tied to outcomes. Done right, you get predictable velocity, sharper quality, and measurable impact without burning out the team. This ultimate guide shows how to design the operating model, workflows, governance, and measurement loops that make that possible.

1) Strategy First: What Your Engine Produces and Why

Start with a content mission tied to business outcomes. Define the jobs your content solves for your audience (evaluation, onboarding, expansion). Translate that into 4–6 editorial pillars and a topic taxonomy that becomes your single source of truth. Your calendar should align to revenue moments—launches, seasonal demand, sales plays—so inputs are prioritized by impact, not opinions.

Bake search quality and trust into your strategy. Google’s official guidance emphasizes people‑first content, original insight, and clear sourcing; abusing automation to manipulate rankings violates spam policies. For an authoritative baseline on what “helpful” means and how E‑E‑A‑T signals are judged, see Google’s note on AI‑generated content and Search (2023) and the helpful content documentation in Search Central: both clarify that quality expectations apply regardless of authorship and that unhelpful pages should be improved or removed. Anchor your brief template to those expectations: audience/job, search intent, unique angle, sources to cite, experts to quote, and a distribution plan. References: Google’s guidance on AI‑generated content in Search and Helpful content documentation.

Performance and UX standards belong in strategy, too. In March 2024, Interaction to Next Paint (INP) replaced First Input Delay as the responsiveness Core Web Vital; a “good” INP is 200 ms or less, assessed at the 75th percentile of page loads. Set Core Web Vitals budgets (LCP/CLS/INP) as pre‑publish gates. For the transition and thresholds, see the web.dev announcement: INP becomes a Core Web Vital.
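A pre‑publish gate on those budgets can be a very small check. The sketch below is illustrative only: the metric names (`lcp_ms`, `cls`, `inp_ms`) and the `check_vitals` helper are assumptions, and the LCP (2.5 s) and CLS (0.1) limits are the "good" thresholds published on web.dev rather than values stated in this guide.

```python
# Assumed budgets: web.dev "good" thresholds; tune them to your own targets.
BUDGETS = {"lcp_ms": 2500, "cls": 0.1, "inp_ms": 200}


def check_vitals(metrics, budgets=BUDGETS):
    """Return a list of budget violations; an empty list means the gate passes."""
    failures = []
    for name, limit in budgets.items():
        value = metrics.get(name)
        if value is None:
            # A missing measurement fails the gate rather than passing silently.
            failures.append(f"{name}: missing measurement")
        elif value > limit:
            failures.append(f"{name}: {value} exceeds budget {limit}")
    return failures


# Hypothetical lab or field numbers for a draft page.
draft_metrics = {"lcp_ms": 2100, "cls": 0.05, "inp_ms": 250}
print(check_vitals(draft_metrics))
```

Where the numbers come from (Lighthouse runs, CrUX field data, or a RUM tool) is left open here; the point is that the gate is a comparison against agreed limits, not a judgment call.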

2) Pick an Operating Model You Can Run

Two models dominate at scale: a centralized Center of Excellence (CoE) and a hub‑and‑spoke structure. Choose by governance maturity, business complexity, and contributor distribution.

| Model | Strengths | Risks | Use When |
|---|---|---|---|
| Center of Excellence (CoE) | Unified standards, tooling, QA, and analytics; economies of scale | Can bottleneck requests; distance from BU nuance | Early scale, heavy compliance, or when you need to fix quality fast |
| Hub‑and‑Spoke | Embedded marketers own execution; central team sets standards and shared services | Inconsistent quality without strong governance; duplicated tools | Diverse products/regions; heavy domain nuance needing proximity |

Whichever model you choose, make ownership explicit with a simple RACI per stage and SLAs for response times, reviews, and corrections. Responsibility matrices are recognized in project management practice, and service‑level targets borrow from service management norms (ITIL 4’s Service Level Management purpose is to set business‑based targets and measure delivery). See PeopleCert/Axelos’s overviews for definitions and scope.
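One way to keep a RACI honest is to store it as data and lint it. The following is a minimal sketch, assuming the common convention of exactly one Accountable role and at least one Responsible role per stage; the stage names, role names, and `validate_raci` helper are hypothetical.

```python
VALID_CODES = {"R", "A", "C", "I"}


def validate_raci(matrix):
    """Check each stage for exactly one 'A' and at least one 'R'.

    `matrix` maps stage name -> {role name: RACI code}.
    Returns a list of human-readable problems (empty when the matrix is clean).
    """
    problems = []
    for stage, assignments in matrix.items():
        codes = list(assignments.values())
        unknown = sorted({c for c in codes if c not in VALID_CODES})
        if unknown:
            problems.append(f"{stage}: unknown codes {unknown}")
        if codes.count("A") != 1:
            problems.append(
                f"{stage}: expected exactly one Accountable, found {codes.count('A')}"
            )
        if "R" not in codes:
            problems.append(f"{stage}: no Responsible role")
    return problems


# Hypothetical two-stage matrix; the "edit" stage is missing an Accountable owner.
raci = {
    "brief": {"strategist": "A", "writer": "R", "seo_analyst": "C"},
    "edit": {"managing_editor": "R", "writer": "C"},
}
for problem in validate_raci(raci):
    print(problem)
```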

3) Standardize the Workflow (End‑to‑End)

Codify one visible, end‑to‑end path for every asset type: ideation → brief → draft → edit → SME/legal → publish → distribute → monitor → refresh/retire. Then set service levels and handoff rules. For example, a brief is accepted within two business days or returned with clarifications; editorial review completes within three business days (faster for minor updates); SME/legal windows vary by risk tier with human‑in‑the‑loop signoff for sensitive claims.
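The service levels above can be turned into due dates that a work‑management tool or script can enforce. This is a rough sketch under stated assumptions: weekends are the only non‑working days (holidays are ignored), and the `SLA_BUSINESS_DAYS` table and function names are illustrative rather than part of any particular tool.

```python
from datetime import date, timedelta

# Example SLAs from the workflow above, in business days per stage.
SLA_BUSINESS_DAYS = {"brief": 2, "edit": 3}


def add_business_days(start, days):
    """Return the date `days` business days after `start` (Mon-Fri only)."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday through Friday
            remaining -= 1
    return current


def sla_due(stage, submitted):
    """Due date for a stage, counted from the submission date."""
    return add_business_days(submitted, SLA_BUSINESS_DAYS[stage])


def is_breached(stage, submitted, today):
    """True once `today` is past the stage's due date."""
    return today > sla_due(stage, submitted)


# A brief submitted on Friday 3 January 2025 is due the following Tuesday.
print(sla_due("brief", date(2025, 1, 3)))
```

Risk‑tiered SME/legal windows would fit the same shape: add one entry per tier to the table instead of hard‑coding review times in people's heads.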

Quality gates should be explicit before “publish.” Use an editorial scorecard (originality, sourcing, voice), a performance budget (LCP/CLS/INP), and accessibility checks bound to WCAG 2.2. The latest success criteria strengthen focus visibility and interaction guidance—build them into templates and checklists. See the W3C Recommendation: WCAG 2.2.

4) Resource and Capacity Planning

Your core unit is a cross‑functional pod: strategist, managing editor, writer(s), designer, SEO/analyst, and a project manager or ops lead. Add a shared pool of SMEs and regional reviewers if you operate globally. Keep strategy, governance, and voice‑critical editing in‑house; vendorize production sprints, localization, and design variants with documented SLAs.

Capacity math is pragmatic: map hours per stage, set utilization targets (often 70–80% productive, 20–30% overhead), then size your pipeline. In mature teams, long‑form net‑new often lands at one to two pieces per writer per month when balanced with updates and repurposing. Treat those as starting assumptions and baseline your own cycle times—reliable open benchmarks are sparse, so measure locally and adjust.
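To make the capacity math concrete, here is a small worked sketch. Every number in it is an illustrative assumption (160 available hours per month, 75% utilization, 60% of productive time reserved for net‑new work, 44 writer hours per long‑form piece); substitute your own measured cycle times.

```python
def monthly_capacity(hours_available, utilization, net_new_share, hours_per_piece):
    """Estimate net-new pieces one writer can ship per month.

    hours_available: total working hours in the month
    utilization:     fraction of hours that are productive (e.g. 0.75)
    net_new_share:   fraction of productive time spent on net-new pieces,
                     with the rest going to updates and repurposing
    hours_per_piece: writer hours per piece, summed across stages
    """
    productive_hours = hours_available * utilization
    net_new_hours = productive_hours * net_new_share
    return net_new_hours / hours_per_piece


# Assumed stage hours for one long-form piece: research 12, draft 24, revisions 8.
hours_per_piece = 12 + 24 + 8
pieces = monthly_capacity(160, 0.75, 0.6, hours_per_piece)
print(f"{pieces:.2f} net-new pieces per writer per month")
```

With these inputs the estimate lands between one and two pieces per month, in line with the starting assumption above; the value of the exercise is seeing which input moves the result most when you change it.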

5) Tooling and Integrations

A scalable stack spans CMS (structured content and templates), DAM (with embedded rights and metadata), work management (to enforce SLAs and surface cycle times), analytics (Search Console, web analytics, CrUX field data), QA/accessibility (linters, link checkers, axe/Lighthouse), and an AI copilot layer governed by policy. Your metadata pipeline should auto‑generate and validate schema and preserve rights info through asset workflows. For structured data, consult Google’s introduction and Schema.org’s types; for general metadata, Dublin Core terms remain a lightweight, cross‑system vocabulary; for images, the IPTC Photo Metadata Standard covers captions, credits, and rights.

- Structured data overview and types: Google’s structured data intro and Schema.org Article

- Metadata standards: Dublin Core terms and IPTC’s Photo Metadata (spec and user guide)
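As a sketch of what "auto‑generate and validate schema" might look like, the snippet below builds Schema.org Article JSON‑LD from CMS fields and refuses to emit it when key fields are empty. The `REQUIRED` list is a house rule assumed for this example, not a Google requirement, and the input field names are hypothetical.

```python
import json

# Assumed house rule: these fields must be present before JSON-LD is emitted.
REQUIRED = ("headline", "author", "datePublished")


def build_article_jsonld(meta):
    """Return a JSON-LD string for a Schema.org Article built from CMS metadata."""
    missing = [key for key in REQUIRED if not meta.get(key)]
    if missing:
        raise ValueError(f"cannot generate JSON-LD, missing fields: {missing}")
    doc = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": meta["headline"],
        "author": {"@type": "Person", "name": meta["author"]},
        "datePublished": meta["datePublished"],
    }
    if meta.get("image"):
        doc["image"] = meta["image"]  # optional, included only when provided
    return json.dumps(doc, indent=2)


print(build_article_jsonld({
    "headline": "How to Build a Scalable Content Engine",
    "author": "Example Author",  # placeholder name
    "datePublished": "2025-01-15",  # placeholder date
}))
```

Generated markup should still be run through a structured data testing tool before publish; this check only catches empty fields, not semantic errors.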

6) AI‑Assisted, Human‑Governed

Think of AI as a copilot under strict rules. Use it where it’s low risk and high leverage—topic clustering, draft briefs, entity and metadata suggestions, headline variants, repurposing outlines. Keep humans firmly in charge of original research, nuanced claims, and anything regulated.

Anchor your program to recognized frameworks. The NIST AI Risk Management Framework outlines lifecycle functions—Govern, Map, Measure, Manage—that translate into marketing policies: defined accountability, risk tolerances, prompt/output logs, and documented human‑in‑the‑loop review for sensitive topics. See the official framework: NIST AI RMF 1.0. For enterprise assurance, ISO/IEC 42001:2023 establishes an AI management system with operational controls, transparency, and continuous improvement; consult the catalog entries for scope. Stay compliant on marketing claims: the U.S. FTC finalized a rule banning fake reviews/testimonials in 2024 and continues to warn against deceptive AI claims. Reference: FTC’s 2024 fake reviews rule.

7) Modular Content, Metadata, and i18n

To scale without reinventing the wheel, design for reuse. Think of your content like Lego bricks: modules that can be assembled, versioned, and localized. The OASIS DITA specification formalizes topic‑based authoring with maps for assembly, key references for variables, and conditional processing for variants—principles you can borrow even if you don’t adopt DITA tooling. See: OASIS DITA 2.0.

For internationalization, W3C’s guidance recommends designing for global use from the outset: declare the page language with a BCP 47 tag in the lang attribute, set text direction with dir for right‑to‑left scripts, use UTF‑8, separate translatable strings, and prefer logical CSS properties. Following these basics accelerates localization and reduces errors. Reference: W3C i18n HTML authoring techniques.
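A few of these basics can be linted automatically at the template level. The following is a minimal sketch using only Python's standard library; the `i18n_problems` helper and its checks are assumptions for illustration and cover only the language, direction, and encoding declarations.

```python
from html.parser import HTMLParser


class I18nCheck(HTMLParser):
    """Collect the lang/dir attributes on <html> and the declared charset."""

    def __init__(self):
        super().__init__()
        self.lang = None
        self.dir = None
        self.charset = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "html":
            self.lang = attributes.get("lang")
            self.dir = attributes.get("dir")
        elif tag == "meta" and "charset" in attributes:
            self.charset = attributes["charset"]


def i18n_problems(html, rtl=False):
    """Return a list of missing i18n declarations for one rendered page."""
    checker = I18nCheck()
    checker.feed(html)
    problems = []
    if not checker.lang:
        problems.append("missing lang attribute on <html>")
    if (checker.charset or "").lower() != "utf-8":
        problems.append("charset is not declared as UTF-8")
    if rtl and checker.dir != "rtl":
        problems.append('right-to-left page should set dir="rtl"')
    return problems


page = '<html lang="ar"><head><meta charset="utf-8"></head><body></body></html>'
print(i18n_problems(page, rtl=True))
```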

Combine structured data (Schema.org Article) with consistent editorial metadata (Dublin Core), and preserve embedded captions, credits, and rights information on images per IPTC, to improve search understanding and interoperability end‑to‑end.

8) Ship Fast, Ship Right: Quality and Performance Gates

Build a production checklist that catches defects before they ship: editorial quality (originality, clarity, sourcing, voice), performance budgets (LCP/CLS/INP, with INP at or below 200 ms), accessibility conformance (WCAG 2.2 automated checks plus periodic manual reviews), and structured data/metadata validation (JSON‑LD, alt text, captions, credits, rights persistence).

9) Measure What Matters

Ladder your KPIs from production to business outcomes so you can diagnose bottlenecks and defend investment. Track production throughput and on‑time rate; quality via scorecards, SME signoff, defect rate and correction SLAs; performance via organic sessions, qualified traffic share, conversion by content type, assisted pipeline, and a reuse multiplier; and SEO/UX via entity coverage, internal link health, indexation, Core Web Vitals, and accessibility conformance. Set review rituals: weekly ops standups, monthly performance reviews with refresh/retire decisions, and quarterly strategy reviews. For channel norms, lean on primary hubs like the Content Marketing Institute’s B2B Benchmarks and compare social engagement ranges with the Rival IQ 2025 benchmark report.
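The production tier of that ladder is easy to compute from work‑management exports. Below is a minimal sketch, assuming each asset record carries `started`, `due`, and `published` dates; the field names and the `production_kpis` helper are hypothetical.

```python
from datetime import date
from statistics import median


def production_kpis(assets):
    """Compute throughput, on-time rate, and median cycle time for shipped assets."""
    shipped = [a for a in assets if a.get("published")]
    if not shipped:
        return {"throughput": 0, "on_time_rate": None, "median_cycle_days": None}
    on_time = sum(1 for a in shipped if a["published"] <= a["due"])
    cycle_days = [(a["published"] - a["started"]).days for a in shipped]
    return {
        "throughput": len(shipped),
        "on_time_rate": on_time / len(shipped),
        "median_cycle_days": median(cycle_days),
    }


# Hypothetical month: two shipped assets (one late) and one still in progress.
assets = [
    {"started": date(2025, 1, 6), "due": date(2025, 1, 17), "published": date(2025, 1, 15)},
    {"started": date(2025, 1, 6), "due": date(2025, 1, 10), "published": date(2025, 1, 14)},
    {"started": date(2025, 1, 8), "due": date(2025, 1, 20), "published": None},
]
print(production_kpis(assets))
```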

10) Scale Plays That Actually Work

Modular reuse sprints. Once a quarter, repurpose top‑performing pieces into formats your buyers use: executive summary, email sequence, social carousel, sales one‑pager, short video. Maintain a reuse multiplier metric to quantify output.
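The guide does not fix a formula for the reuse multiplier, so the sketch below assumes one plausible definition: total published assets (source pieces plus derivatives) divided by source pieces. Treat the definition and the function name as assumptions to adapt.

```python
def reuse_multiplier(source_pieces, derived_assets):
    """Total assets shipped per net-new source piece (assumed definition)."""
    if source_pieces <= 0:
        raise ValueError("source_pieces must be positive")
    return (source_pieces + derived_assets) / source_pieces


# Four top performers, each repurposed into the five formats listed above.
print(reuse_multiplier(source_pieces=4, derived_assets=4 * 5))
```

Whatever definition you adopt, keep it stable across quarters so the trend is comparable.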

Systematic refresh. Monitor decay (traffic, rankings, product changes) and put candidates into a refresh queue with light/medium/heavy tiers. Tie refresh SLAs to impact.
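Decay triggers can be expressed as simple rules that route pages into the tiered queue. In this sketch the thresholds (10%, 25%, and 50% traffic loss) and the rule that a product change forces a heavy refresh are illustrative assumptions, not recommendations from a benchmark.

```python
def refresh_tier(baseline_sessions, current_sessions, product_changed=False):
    """Classify a page into a refresh tier from its traffic decay.

    Returns "heavy", "medium", "light", or "none".
    """
    if product_changed:
        # Assumed rule: outdated product facts always warrant a heavy refresh.
        return "heavy"
    if baseline_sessions <= 0:
        return "none"  # no baseline to compare against
    decay = (baseline_sessions - current_sessions) / baseline_sessions
    if decay >= 0.50:
        return "heavy"
    if decay >= 0.25:
        return "medium"
    if decay >= 0.10:
        return "light"
    return "none"


# Hypothetical pages: (baseline monthly sessions, current monthly sessions).
for baseline, current in [(1000, 450), (1000, 700), (1000, 880), (1000, 950)]:
    print(baseline, current, refresh_tier(baseline, current))
```

Rankings and conversion decay could be added as further inputs; the useful property is that tier assignment is rule‑based and auditable rather than decided ad hoc.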

Enablement outside marketing. Train SMEs, sales, and support to contribute safely: provide brief templates, a prompt library for idea generation (with governance), and a short QA checklist.

Localization at scale. Treat language variants as structured outputs, not one‑off projects. Use a terminology base, variables, and region‑specific proof points.

11) Change Management

Scaling is less about tools and more about habits. Publish playbooks (how we brief, how we review, how we distribute), run role‑based training, and set adoption metrics: percent of assets with complete briefs, SLA adherence, and prompt‑library usage for AI‑assisted stages. Add an escalation path for quality or compliance risks and celebrate defect‑prevention wins, not just production highs.

12) Your First 90 Days: A Pragmatic Rollout

Phase 1 (Weeks 1–3): Baseline and blueprint. Inventory content, map your current workflow, and define the target operating model (CoE or hub‑and‑spoke). Draft your brief template, editorial scorecard, and initial SLAs. Select a minimum viable tooling stack and agree on Core Web Vitals and WCAG gates.

Phase 2 (Weeks 4–6): Pilot the workflow. Run two content types through the full process with audits. Capture cycle times, defects, and handoff pain points. Introduce low‑risk AI assistance (briefs, metadata suggestions) under documented guardrails (HITL where needed).

Phase 3 (Weeks 7–9): Instrument and iterate. Build a lightweight dashboard for production, quality, and performance. Tighten SLAs, tune templates, and standardize schema/metadata generation. Start a small reuse sprint to prove the multiplier effect.

Phase 4 (Weeks 10–12): Scale with governance. Formalize RACI, publish playbooks, train contributors, and expand AI‑assisted stages with audit trails. Schedule monthly performance reviews and a quarterly refresh ceremony.

13) Pitfalls and Fixes

If you lack a single source of truth, establish a clear taxonomy, a centralized brief archive, and DAM metadata standards so teams stop guessing. If tool sprawl slows you down, consolidate around a CMS/DAM spine and ensure metadata persists across systems. If AI shows up without policy, implement NIST‑aligned guardrails, maintain logs, and define human‑review zones; ISO/IEC 42001 offers structure for an AI management system. If quality checks only happen at the end, shift QA left with template gates (brief completeness, schema, accessibility) and fast editor reviews. And if refresh debt piles up, set decay triggers and a monthly refresh queue with time‑boxed tiers.

14) Quick Checklist

- Finalize content mission, pillars, and taxonomy as your single source of truth.

- Choose CoE or hub‑and‑spoke and publish a one‑page RACI with SLAs.

- Standardize the workflow and add QA gates for editorial, performance (INP/LCP/CLS), accessibility (WCAG 2.2), and structured data.

- Stand up a minimal stack: CMS + DAM + work management + analytics + QA; wire metadata preservation.

- Launch AI‑assisted stages where low risk (briefs, metadata, variants) with NIST‑aligned guardrails and HITL for sensitive topics.

- Implement modular content patterns and variables; plan a quarterly reuse sprint.

- Ship a dashboard with production, quality, performance, and business outcome metrics.

- Schedule monthly performance reviews and a quarterly refresh ceremony.