The Role of Humans in Reviewing AI Output

Human review is crucial in ensuring AI output is accurate and trustworthy. In one reported case, human review improved AI results by up to 41% in challenging situations. Without it, AI can make errors or act unfairly. Studies found that 78% of incorrect AI information occurred when no human checked the results. Many business leaders are concerned about unfairness and lack of accountability, with 81% advocating for stronger AI regulations. Human oversight is essential to make sure AI is fair, accurate, and follows proper guidelines.

Key Takeaways

Humans have an important job in checking AI results. They look for mistakes and help make AI more correct and fair. Human review helps find unfair patterns in AI. It also helps make the data better. People trust AI more when they know humans check its work. People also trust it more when humans fix mistakes. When humans and AI work together, they do better in healthcare, money, school, and other areas. Reviewers need clear steps and good training. This keeps AI safe, fair, and something people can count on.

Why Review Matters

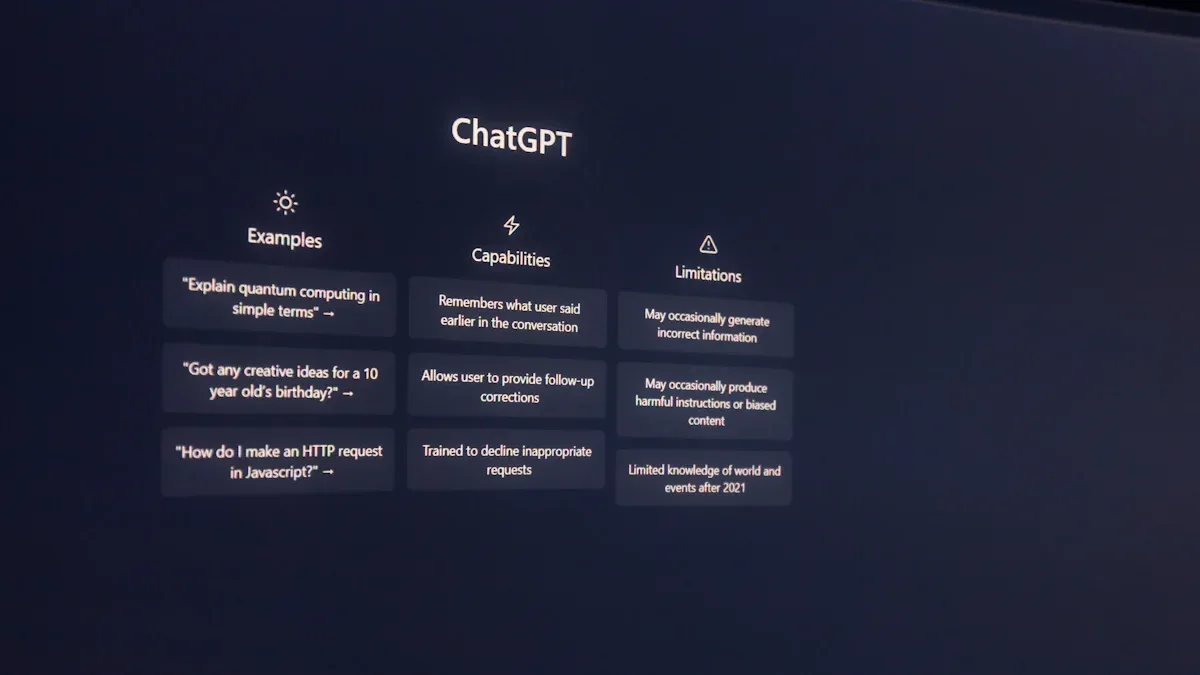

AI Limitations

AI can look at lots of data very fast. But it has trouble with tasks that need reasoning or context. Sometimes, generative AI makes up things that are not true. These mistakes are called "hallucinations." AI makes these errors because it learns from patterns in its training data, not from real-life experience. For example, if AI trains mostly on data from men, it may miss heart attacks in women. Sleep scoring tools also perform poorly on patient groups they were not trained on.

AI can skip important details if its data is limited or biased.

Some AI tools cannot say why they made a choice, so people may not trust them.

AI does not get human feelings or reasons.

In law enforcement, AI can find patterns but cannot know intent or context.

Human reviewers are important for finding these mistakes. They use their skills to check AI work, find errors, and give feedback. This feedback helps guide AI and makes its results better. In healthcare and law enforcement, people’s judgment is needed for fairness and accuracy.

Building Trust

People trust AI more when they know humans check its work. Studies show trust goes up when people hear that humans help make decisions. In Japan, trust in nursing care rose by almost 9% when people learned humans reviewed AI care plans.

When people know how AI works and how humans watch over it, they feel safer using AI.

When humans fix AI mistakes, they help people trust technology again. Telling people about human checks and decisions builds trust. So, groups that use both AI and human review have better public relationships and less worry about automation.

Role of Humans in AI Review

Error Detection

AI can look at lots of data quickly, but it still makes mistakes. Sometimes, only humans can find these errors. Humans are very important for checking AI work before it causes problems. For example, doctors have fixed many AI mistakes in medical tests. They corrected 8 out of 10 wrong results. This shows how much humans help. People also give feedback to AI, which helps it get better over time. In language translation, users helped the AI improve its BLEU score from 0.65 to 0.72. This means the translations got better. The table below shows how human review helps AI do a better job:

| Metric | Description | Example |
|---|---|---|
| Error Reduction Rate | Shows how much AI errors drop after humans help | In medical tests, humans fixed 80% of mistakes (8 out of 10) |
| Feedback Impact | Tells how much AI gets better after feedback | Language translation AI BLEU score went up from 0.65 to 0.72 after users gave feedback |
| Confidence | Shows how much people trust AI advice | Financial AI guessed stock trends right 90% of the time when confidence was above 90% |
| Adaptability Score | Shows how well AI changes after feedback | This score shows how much AI improves with human review |
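
The first two rows of the table come down to simple arithmetic. The sketch below is only an illustration: the function names `error_reduction_rate` and `relative_improvement` are hypothetical, and it assumes the error reduction rate means the share of AI errors that reviewers corrected.

```python
def error_reduction_rate(errors_found: int, errors_fixed: int) -> float:
    """Share of AI errors that human reviewers corrected (assumed definition)."""
    if errors_found <= 0:
        raise ValueError("errors_found must be positive")
    return errors_fixed / errors_found


def relative_improvement(before: float, after: float) -> float:
    """Relative change of a score, such as BLEU before and after feedback."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before


# Figures taken from the table: 8 of 10 errors fixed, BLEU 0.65 -> 0.72.
medical_rate = error_reduction_rate(errors_found=10, errors_fixed=8)
bleu_gain = relative_improvement(before=0.65, after=0.72)

print(f"Error reduction rate: {medical_rate:.0%}")       # 80%
print(f"Relative BLEU improvement: {bleu_gain:.1%}")     # 10.8%
```

A BLEU gain of 0.07 points sounds small, but measured against the starting score it is a relative improvement of roughly 10.8%.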

Humans use their skills to check AI work, find mistakes, and give advice. This makes AI more correct and dependable. Humans help stop AI from making big mistakes in important areas like health, money, and school.

Bias Mitigation

AI can become unfair if it learns from bad or unbalanced data. Humans help find and fix these problems. They look at what AI does and check if it treats everyone the same. Humans use their judgment to spot patterns that could hurt some groups. For example, if AI only learns from adults, it might not work for kids. Humans can change the data or rules so the AI is fair for all.

Teams that use annotation tools like Label Studio find that human feedback is needed to keep AI honest and useful. Custom labeling templates and teamwork help make data labels better and more consistent. This is hard because people can be biased too, but human feedback is still very important. Reinforcement learning from human feedback (RLHF) helps AI learn what people care about. Experts say human labelers are needed to find mistakes, give detailed answers, and make sure AI is fair. When humans and AI work together, AI learns from real-life knowledge and can change to fit different needs. Human help also makes AI less biased and helps build fair and clear systems.

Ethical Oversight

Humans play a big part in making sure AI follows the rules and is fair. They check if AI is making good choices and treating people right. Humans help companies stay responsible for their AI. They follow rules to keep people’s information safe and stop unfair treatment.

Humans use rules, checks, and laws to make sure AI is fair and open.

Ethical frameworks like the IEEE Global Initiative give rules for fairness and safety. Humans use these to make sure AI matches what society wants.

People do audits and checks to find and fix unfairness, making AI more open and fair.

Laws and rules, like privacy agreements, help humans keep AI fair and protect secrets.

Groups with experts watch over AI and make sure it is doing the right thing, not just leaving it to machines.

When experts and the public work together, they help include everyone and address power imbalances in AI.

If someone is hurt by AI, humans help them get help and fix the problem.

Tools like explainable AI help humans understand why AI made a choice and check its actions.

Studies show that most AI problems come from bad data labeling. When humans work with AI, the data gets better and more correct. This helps stop bias and lets humans handle hard jobs that need thinking. For example, having many people label the same data can help with big projects and offset one person's bias. Labeling in stages and using active learning has helped in areas like medical imaging and self-driving cars. Humans make sure AI stays safe, fair, and trustworthy.
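
One simple way to use several labelers to offset one person's bias is a majority vote. The sketch below is an assumption, not a method described by any particular tool: the function name `aggregate_labels`, the `min_agreement` threshold of 0.6, and the example labels are all hypothetical.

```python
from collections import Counter
from typing import List, Optional, Tuple


def aggregate_labels(
    votes: List[str], min_agreement: float = 0.6
) -> Tuple[Optional[str], float]:
    """Pick the most common label; return None when labelers disagree too much."""
    if not votes:
        raise ValueError("votes must not be empty")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    if agreement < min_agreement:
        # Too little agreement: leave the item for an expert to decide.
        return None, agreement
    return label, agreement


# Two of three labelers agree, so the majority label is accepted.
accepted = aggregate_labels(["tumor", "tumor", "normal"])

# Every labeler disagrees, so the item is escalated instead of guessed.
escalated = aggregate_labels(["tumor", "normal", "unclear"])

print(accepted)
print(escalated)
```

Items that come back with no label are the ones worth a second, more careful look, which keeps expert time focused on the hard cases.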

Human-AI Synergy

Collaboration Benefits

When humans and AI work together, they can do more. This teamwork helps in many areas and brings real improvements.

In healthcare, AI helps doctors find diseases sooner. Doctors feel more sure about their choices. This means patients get better care and tests are more correct.

In finance, AI looks at lots of data to find patterns. Human experts use their own judgment to make smart choices.

In education, AI watches how students do in class. It suggests ways to help them learn. Teachers give support and explain things, so lessons work better.

In factories, teams use AI to spot broken products fast. This saves money and makes things better.

AI does boring jobs, so people can do creative work. This helps people get more done and enjoy their jobs.

Numbers show these changes are real. Tests are more correct, mistakes go down, and jobs finish faster. People can get 20% more done, and costs can drop by 15%. New ideas, like AI helping workers learn, help people learn quicker and like their jobs more. Studies say new workers learn 30% faster and are 25% happier at work. These facts show humans are needed to help and guide AI.

Teams that use both human skills and AI often get more trust, new ideas, and better results.

Challenges

Working with AI is not always easy. Companies have to learn new things when they start using AI. Workers need more training, and their jobs might change. Mixing AI speed with human thinking can make work harder and more stressful.

One study in healthcare found that AI sometimes rejects images that are not clear. This gives staff more work and makes patients wait longer. These problems show that looking only at AI's test scores is not enough. Real work needs better ways to see how humans and AI work together.

The ways we check AI now do not show everything. They look at one tool, not the whole team. Experts say we need new tools to check both humans and AI at the same time. This helps teams see how to make their work with AI better.

Best Practices

Effective Workflows

Teams that check AI work need clear steps to do well. Good workflows help people find mistakes and fix them. They also help keep AI fair for everyone. Experts say teams should use a plan that covers every part of the review. The table below lists the main parts of a strong workflow:

| Component | Key Implementation Elements |
|---|---|
| Documentation | Write down every time a person changes something. Include the time, reason, and what happened. This helps everyone stay responsible. |
| Version Tracking | Keep records of which AI model was used. Track any changes to settings or training data. |
| Oversight Roles | Make sure everyone knows their job. Some people check first, others check again, and some give final approval. |
| Performance Metrics | Watch for how many mistakes happen and how correct the results are. Also, check how fast reviews are done, how much they cost, and if rules are followed. |
| Team Feedback | Get team opinions in meetings, forms, or anonymous ways. Use these ideas along with numbers to see how things are going. |
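
The Documentation, Version Tracking, and Oversight Roles rows can be captured in a single log entry per human change. The sketch below shows one possible shape for such an entry. The class name `OverrideRecord`, its fields, and the example values are all hypothetical, and a real team would adapt them to its own tooling.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    """Current time as an ISO 8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class OverrideRecord:
    """One human change to an AI result (hypothetical schema)."""
    reviewer: str
    role: str            # e.g. "first check", "second check", "final approval"
    model_version: str   # which AI model produced the output
    ai_output: str       # what the AI said
    human_output: str    # what the reviewer changed it to
    reason: str          # why the change was made
    timestamp: str = field(default_factory=_utc_now)


# Example values are invented for illustration only.
record = OverrideRecord(
    reviewer="reviewer-17",
    role="first check",
    model_version="triage-model-2.3",
    ai_output="low risk",
    human_output="needs follow-up",
    reason="Image quality too poor to rule out a problem",
)

# Serialize so the entry can be appended to an audit log.
log_line = json.dumps(asdict(record))
print(log_line)
```

Making the record immutable (`frozen=True`) is a deliberate choice here: an audit entry should be appended, never edited after the fact.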

Teams should use both numbers and team opinions to see if the workflow is good. They can check:

- How quick and correct the reviews are
- How many times people need to help
- How well people and AI work together
- How happy and skilled the team is

A good workflow has clear steps, keeps track of changes, and listens to the team. This helps everyone get better and keeps things fair.

Training Reviewers

Reviewers need special lessons to check AI work well. Training starts by teaching experts about AI and how it is used in their jobs. Trainers show reviewers how to give feedback that is clear and useful for real problems.

Important steps for training are:

- Teach experts about AI and how it helps their work.
- Show how to give feedback that helps AI do better.
- Use real-life examples so reviewers see how AI works.
- Check AI results with trusted sources to make sure they are right.
- Help experts, developers, and data scientists work together.
- Remind reviewers to be fair and keep secrets safe.
- Give enough time for careful checking.

Reviewers who learn these skills help AI improve and keep results safe and fair. Ongoing training and feedback help the team handle new problems.

Challenges and Limits

Scalability

Making human checks work for big AI systems is hard. When AI does more jobs, we need more people to check its work. Reviewers can make mistakes if they get tired or bored. People sometimes trust AI too much, even when it is wrong. This is called "automation bias." Other times, people like human choices more than AI, even if AI is right. This is called "algorithm aversion."

Reviewers might miss mistakes if they trust AI too much.

Some people do not have the training or energy to check hard AI systems.

Being tired, bored, or stressed can make checks worse.

There are no clear ways to measure how well human checks work for big AI systems.

Studies show that teams of humans and AI do not always do better than a person or an AI working alone. Sometimes, the team does worse. If people cannot tell when to trust AI, the results get worse. For example, in detecting fake hotel reviews, when people are less accurate than the AI, the combined team does not do well. These findings show that as the workload grows, reviewers may have trouble keeping up. This can lower the quality of AI results.

Subjectivity

Human judgment makes AI review more complicated. People use their own skills, feelings, and past when checking AI work. This means different people can give different answers for the same AI result. The table below shows how these personal things change how people judge AI:

| Aspect | Evidence Summary |
|---|---|
| Human evaluators' accuracy | People are only a little better than guessing when telling AI from human writing, even if they feel sure. |
| Individual differences | Skills like being smart, careful, or kind change how well people judge AI work. |
| Algorithm aversion | People often like things made by humans more, which changes how they rate and share AI results. |
| Linguistic qualities | AI writing sounds more logical and happy, which can change what people think. |
| Impact on misinformation | It is hard to tell AI from human writing, so wrong information can spread. |
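
One way to put a number on how much two reviewers differ is an agreement statistic. The sketch below uses Cohen's kappa, a standard measure that is brought in here for illustration and is not named in the studies summarized above. The function name `cohens_kappa` and the example ratings are hypothetical.

```python
from collections import Counter
from typing import List


def cohens_kappa(ratings_a: List[str], ratings_b: List[str]) -> float:
    """Agreement between two reviewers, corrected for chance agreement."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both reviewers must rate the same non-empty set")
    n = len(ratings_a)
    # Fraction of items where the two reviewers gave the same rating.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected if each reviewer rated at random
    # with their own label frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Invented ratings of six AI outputs by two reviewers.
reviewer_1 = ["ok", "ok", "error", "ok", "error", "ok"]
reviewer_2 = ["ok", "error", "error", "ok", "ok", "ok"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")  # kappa = 0.25
```

Here the reviewers agree on 4 of 6 items, but much of that could happen by chance, so kappa is only 0.25. A value near 1 would mean strong agreement, and a value near 0 would mean agreement no better than chance.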

People tend to see AI as smart but lacking feelings. These beliefs affect whether they accept or reject AI choices. How much people trust AI also changes with the situation and their own traits. Because of this, even with human checks, AI results might not be fair or right for everyone.

The role of humans is very important for good AI. People help make sure AI is honest, correct, and follows rules. Human checks help find mistakes, stop unfairness, and guide right choices. Many jobs now use both people and AI to get better results.

A new survey says 85% of people want to know more about how AI works before it is used. As technology gets better, groups should help people and AI work together. This will help make safer and smarter systems for everyone.

| Public Opinion/Regulation Statistic | Description |
|---|---|
| AI Benefits Perception | 78% think generative AI is more helpful than risky. |
| AI Safety Confidence | 39% feel current AI is safe and secure. |
| Support for AI Regulation | 85% want the country to make AI safe and secure. |
| Demand for Transparency | 85% want companies to explain how they check AI before selling it. |
| AI Assurance Spending | 81% think companies should spend more to make sure AI is safe. |
| AI's Primary Purpose | 64% say AI should help and support people. |
| Generational Differences | Younger people are more open to using AI every day than older people. |

FAQ

What does a human reviewer do when checking AI output?

A human reviewer checks AI results for mistakes, bias, or unclear answers. They use their own knowledge to spot problems. Reviewers give feedback to help improve the AI.

Why is human review important for AI in healthcare?

Human review helps catch errors that AI might miss. Doctors use their training to make sure AI results are safe for patients. This keeps care accurate and fair.

Can humans remove all bias from AI?

Humans can reduce bias, but they cannot remove it completely. They check data and results for fairness. Regular review helps, but some bias may still remain.

How does human feedback help AI learn?

Human feedback shows AI what is right or wrong. Reviewers correct mistakes and explain why. This helps the AI improve future answers.

Who should review AI output?

Experts in the field should review AI output. For example, doctors check medical AI, and teachers check educational AI. This ensures the results are correct and useful.
