Real-Time AI Campaign Optimization and Audience Segmentation: How They Transform Content Marketing ROI (2025)

Most teams don’t fail because their ideas are bad; they fail because signals arrive faster than their workflows can react. In 2025, the winning content marketing programs are built on two capabilities: real-time AI optimization and dynamic audience segmentation. When those are wired into your stack, ROI compounds through better targeting, smarter creative, and continuous budget reallocation.

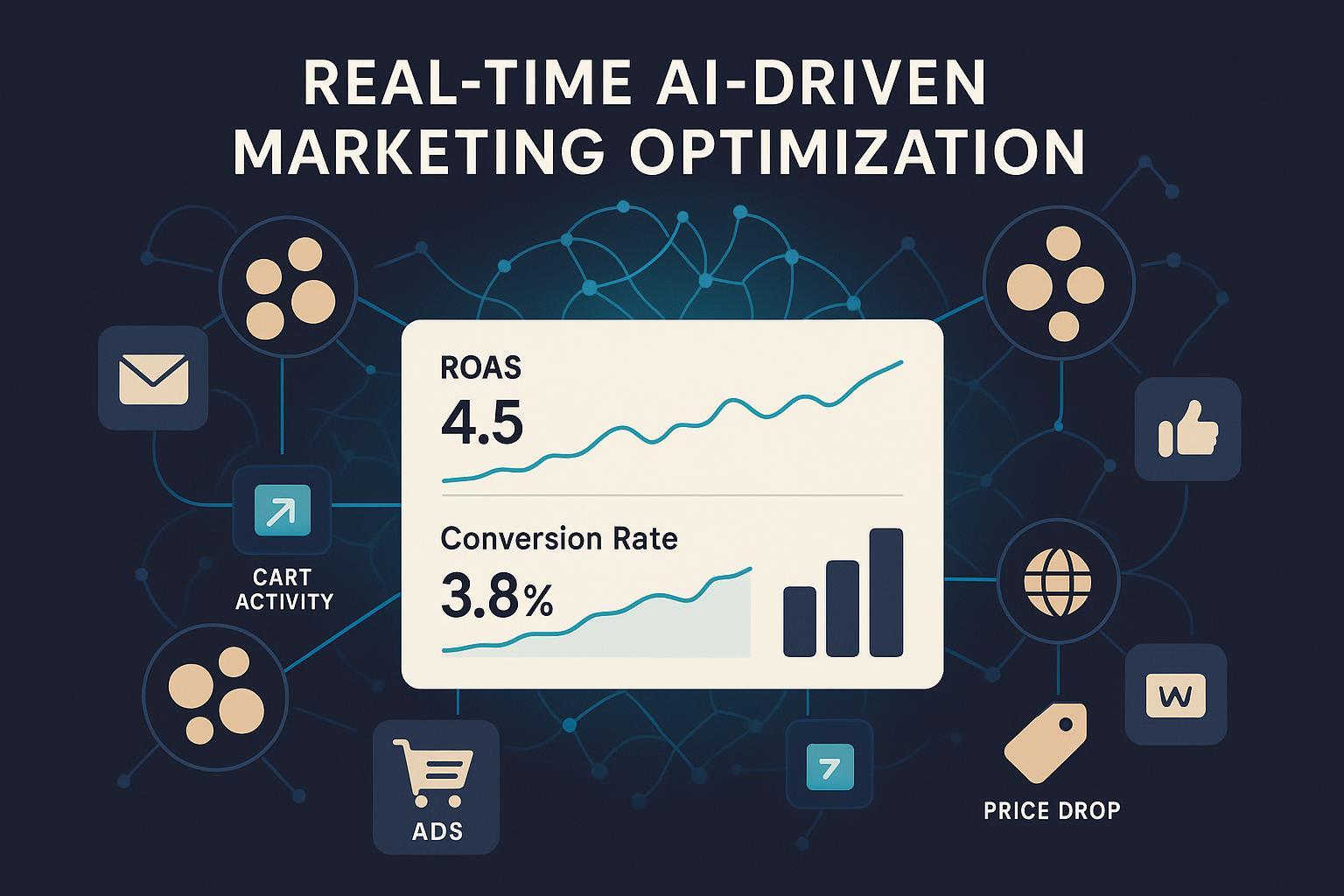

According to Nielsen’s 2025 marketing mix modeling analysis of Google’s AI-powered campaigns, advertisers saw clear efficiency gains when budgets and targeting were orchestrated by AI across surfaces: Performance Max delivered roughly 8% higher ROAS than standalone Search, and Broad Match drove about 15% higher ROAS than other match types (Nielsen 2025 Google MMM case study, “The ROI of AI”). These are paid media examples, but the same principle applies to content-led programs: fresher signals, dynamic audiences, and rapid optimization loops add up to measurable lift.

A practitioner’s workflow for real-time ROI

Below is the end-to-end flow I’ve used with teams to scale outcomes without overcomplicating the stack. It is tool-agnostic; use your CDP/CRM and orchestration layers of choice.

- Set objectives, constraints, and learning agenda

  - Define business-aligned KPIs: conversion rate, CAC/CPA, ROAS, revenue, CLV, and segment-level incremental lift.

  - Establish guardrails: brand safety rules, frequency caps, channel-specific limits, offer rotation policies.

  - Draft a 4–6 week learning agenda: which hypotheses you’ll test, how you’ll measure, and what decisions you’ll make from results.

- Unify real-time data into a single view of the customer

  - Stream web/app events, email interactions, ad platform metrics, and commerce/CRM data into a CDP/warehouse.

  - Resolve identities (anonymous to known) and maintain consent flags at the profile level.

  - Treat data quality as a product: establish schemas, event naming, and validation so that broken or inconsistent events don’t silently degrade your segments and models.

- Dynamic audience segmentation that actually moves metrics

  - Start simple but meaningful: RFM (recency, frequency, monetary value) cohorts for value, lifecycle stages (new, active, lapsing), and key behavioral clusters (content categories, recency of engagement).

  - Layer predictive scores: purchase/churn propensity and content propensity (topic/format likelihoods). Salesforce’s machine learning overview articulates how marketers operationalize these scores for activation (Salesforce ML in marketing).

  - Reserve uplift modeling for budget-critical programs: target persuadables, not sure-things; validate with holdouts.

- Trigger logic and omnichannel orchestration

  - Event-driven triggers: cart abandonment, category re-browse, price drop, back-in-stock, and cooldown exit.

  - Channel routing heuristics: email for low urgency; push/SMS/WhatsApp for time-sensitive or high intent; paid social/search for reacquisition and reach.

  - Cadence discipline: limit interruptive channels; respect consent and frequency caps.

- Creative and offer optimization in near real time

  - Use generative variants within brand prompts; pre-approve modular copy and visual blocks to accelerate iteration.

  - Run short-cycle multivariate tests; focus on learning velocity more than “winning variants.”

  - Enforce human-in-the-loop reviews for regulated claims and sensitive segments.

- Measurement, attribution, and incrementality

  - Plan budgets with marketing mix modeling (MMM); optimize in-flight channels and journeys with multi-touch attribution (MTA) where permitted.

  - Run causal lift tests (geo-split, or PSA tests in which the control group sees a placebo public service ad) to measure the true incremental impact of key segments and triggers; if you’re new to this, see our primer on causal lift (geo/PSA): measuring true marketing impact.

  - Weekly readouts for tactical moves; monthly budget reallocation for strategic shifts.

- Governance and privacy-by-design

  - Activate only on consented data and keep sensitive categories off-limits.

  - Minimize data collection; maintain data lineage; support user deletion.

  - The California Attorney General’s guidance on CPRA clarifies “Do Not Sell or Share” and minors’ opt-in requirements; ensure your CMP and workflows respect these rules (California AG CPRA/CCPA page).
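To make the trigger-routing and governance steps of the workflow concrete, here is a minimal sketch of a channel picker that checks consent and frequency caps before anything fires. The channel names, the weekly caps, and the `Profile` fields are illustrative assumptions, not a reference to any particular CDP or journey builder.

```python
from dataclasses import dataclass, field

# Hypothetical weekly caps per channel; tune these to your own policies.
WEEKLY_CAP = {"email": 3, "push": 2, "sms": 1, "whatsapp": 1}


@dataclass
class Profile:
    user_id: str
    consent: set = field(default_factory=set)            # channels the user opted into
    sent_this_week: dict = field(default_factory=dict)   # channel -> messages already sent


def pick_channel(profile, urgency, intent):
    """Return the first eligible channel for a trigger, or None to stay silent."""
    if urgency == "high" or intent == "high":
        # Time-sensitive or high-intent: try interruptive channels first.
        preferred = ["push", "sms", "whatsapp", "email"]
    else:
        preferred = ["email"]
    for channel in preferred:
        if channel not in profile.consent:
            continue  # never activate without consent
        if profile.sent_this_week.get(channel, 0) >= WEEKLY_CAP[channel]:
            continue  # respect the frequency cap
        return channel
    return None


shopper = Profile("u1", consent={"email", "sms"}, sent_this_week={"sms": 1})
print(pick_channel(shopper, urgency="high", intent="low"))  # falls back to email
```

In this sketch the shopper has consented to SMS but already hit the assumed SMS cap, so the high-urgency message falls back to email rather than being dropped. Returning `None` is a deliberate outcome: when no consented channel has headroom, the safest action is to send nothing.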

Short, real-world product example (workflow execution)

When teams need to turn real-time segmentation into publish-ready content quickly, a lightweight editor with AI and analytics saves cycles. QuickCreator enables marketers to generate and adjust multi-language blog content, align it with live SERP/topic signals, and publish instantly across owned channels while tracking segment-level performance. Disclosure: QuickCreator is our product.

The principle: content velocity plus segment fit beats isolated “big-bang” campaigns.

The segmentation playbook that scales ROI

I’ve found the most durable gains come from three complementary methods. Use them together, not in isolation.

- RFM for value orientation (foundation)

  - What it does: Scores users by Recency, Frequency, and Monetary value, giving you VIPs, loyalists, and at-risk cohorts.

  - How to operationalize: Refresh on transaction and key engagement events; tailor content depth, offers, and cadence per cohort. For example, VIPs get deeper product/solution content and early-access drops; lapsing users get friction-removal content and re-entry offers.

  - Trade-offs: RFM is descriptive, not predictive. Use it as the base layer, then enrich with behavioral and predictive features.

- Propensity scoring for likelihood of action (scaling)

  - What it does: Estimates probability of purchase, churn, or content engagement within a time window.

  - How to operationalize: Translate scores into decile bands; route high-propensity users into higher-cost channels (e.g., paid retargeting) only if margin allows; route low/medium into nurture with informative content.

  - Trade-offs: Correlational. Avoid overfitting and leakage; recalibrate with fresh data and track calibration curves.

- Uplift modeling for incremental impact (precision)

  - What it does: Estimates, for each user, how much more likely they are to act if treated than if left alone, cutting waste on sure-things and non-responders.

  - How to operationalize: Design with randomized holdouts or robust causal methods; dedicate budget to persuadables and exclude do-not-disturb segments.

  - Trade-offs: Higher data and design complexity; needs continuous validation. McKinsey’s guidance on advanced personalization underscores this precision targeting approach in enterprise programs (McKinsey personalization frontier).
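As a starting point for the RFM base layer described above, the following sketch scores each customer from 1 to 3 on recency, frequency, and monetary value using simple rank-based thirds. The field names (`last_purchase`, `orders`, `revenue`) and the cohort thresholds are assumptions for illustration; most teams would use quintiles and their own cut-offs.

```python
from datetime import date


def tertile_scores(values, higher_is_better=True):
    """Score each value 1-3 by rank (3 = best third). Ties are broken by input order."""
    order = sorted(range(len(values)), key=lambda i: values[i],
                   reverse=not higher_is_better)
    scores = [0] * len(values)
    for rank, i in enumerate(order):
        scores[i] = 1 + (3 * rank) // len(values)
    return scores


def rfm(customers, today):
    """Return {customer_id: (R, F, M)} with each component scored 1-3."""
    recency_days = [(today - c["last_purchase"]).days for c in customers]
    r = tertile_scores(recency_days, higher_is_better=False)  # fewer days is better
    f = tertile_scores([c["orders"] for c in customers])
    m = tertile_scores([c["revenue"] for c in customers])
    return {c["id"]: (r[i], f[i], m[i]) for i, c in enumerate(customers)}


def cohort(scores):
    """Hypothetical cohort labels; adjust thresholds to your business."""
    r, f, m = scores
    if r + f + m >= 8:
        return "vip"
    if r == 1:
        return "at_risk"
    return "core"


customers = [
    {"id": "a", "last_purchase": date(2025, 6, 28), "orders": 12, "revenue": 900.0},
    {"id": "b", "last_purchase": date(2025, 5, 1), "orders": 4, "revenue": 300.0},
    {"id": "c", "last_purchase": date(2025, 1, 10), "orders": 1, "revenue": 40.0},
]
for cid, s in rfm(customers, today=date(2025, 7, 1)).items():
    print(cid, s, cohort(s))
```

Because the scores are rank-based, they describe where a customer sits relative to the current base, which is why RFM works as a descriptive foundation and needs predictive features layered on top.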

Real-time triggers that consistently produce lift

From repeated tests across B2C and B2B, these triggers tend to deliver reliable uplift when content and timing match intent:

- Price drop or back-in-stock: Pair utility content (e.g., comparison guides, FAQs) with the alert.

- Category re-browse: Serve educational content addressing objections and alternatives, not just product pushes.

- Onboarding streaks and stalls: Reward streaks with advanced tips; rescue stalls with short, single-ask content.

- New feature/content releases: Segment by content propensity (topic/format) so readers get what they actually consume—video vs. long-form vs. checklist.

An omnichannel case from 2025 shows the power of orchestration: Insider reports that Vogacloset achieved a 30x ROI with unified behavioral data triggering WhatsApp price-drop alerts and personalized recommendations (Insider omnichannel examples, 2025). Don’t copy the channel blindly; copy the principle: one profile, clear intent, timely trigger, right channel.
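The "clear intent, timely trigger" part of that principle can be sketched as a price-drop detector plus a per-user cooldown so the same alert does not fire repeatedly. The 5% minimum drop, the 24-hour cooldown, and the event field names are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta

COOLDOWN = timedelta(hours=24)  # hypothetical suppression window per user and trigger


class TriggerEngine:
    """Tracks when each (user, trigger) pair last fired and enforces a cooldown."""

    def __init__(self):
        self._last_fired = {}

    def should_fire(self, user_id, trigger, now):
        last = self._last_fired.get((user_id, trigger))
        if last is not None and now - last < COOLDOWN:
            return False  # still cooling down
        self._last_fired[(user_id, trigger)] = now
        return True


def is_price_drop(event, last_seen_prices, min_drop=0.05):
    """True when the new price is at least min_drop below the price the user last saw."""
    seen = last_seen_prices.get((event["user_id"], event["sku"]))
    return seen is not None and event["price"] <= seen * (1 - min_drop)


last_seen = {("u1", "sku-1"): 100.0}
engine = TriggerEngine()
event = {"user_id": "u1", "sku": "sku-1", "price": 90.0}
now = datetime(2025, 7, 1, 9, 0)

if is_price_drop(event, last_seen) and engine.should_fire("u1", "price_drop", now):
    print("send price-drop alert with a comparison guide")
```

Keeping detection and suppression separate makes it easier to reuse the same cooldown logic for back-in-stock or re-browse triggers.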

Creative and offer optimization without the chaos

To avoid creative sprawl while maintaining speed:

- Pre-approve modular content blocks: headlines, intros, CTAs, benefit bullets, and image templates. This keeps generative variants on-brand.

- Set a learning cadence: 7–10 day cycles for copy/creative, with a focused hypothesis per cycle.

- Guardrails: prohibited phrases, claim substantiation requirements, brand tone prompts, and compliance checks.

- Use short-run multivariate tests to discover signal, then lock in winners and expand tests to new segments.

Think with Google’s modern measurement and profitable growth playbooks (2024–2025) reinforce the need for disciplined test-and-learn loops tied to clear business outcomes, not vanity metrics (Think with Google Modern Measurement playbook).
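One way to keep generative variants inside the guardrails is to assemble them only from pre-approved blocks and screen every combination against a prohibited-phrase list before it reaches a test. The phrases and sample blocks below are hypothetical; a real program would add claim substantiation and human review on top.

```python
import itertools
import re

# Hypothetical prohibited phrases; replace with your legal/brand list.
PROHIBITED = ["guaranteed", "risk-free", "cure"]


def violations(text):
    """Return the prohibited phrases found in text (case-insensitive, whole words)."""
    return [p for p in PROHIBITED
            if re.search(rf"\b{re.escape(p)}\b", text, re.IGNORECASE)]


def assemble_variants(headlines, intros, ctas):
    """Combine modular blocks into variants and split them by guardrail result."""
    approved, rejected = [], []
    for headline, intro, cta in itertools.product(headlines, intros, ctas):
        text = f"{headline}\n{intro}\n{cta}"
        (rejected if violations(text) else approved).append(text)
    return approved, rejected


approved, rejected = assemble_variants(
    headlines=["Cut review time in half", "Guaranteed results in a week"],
    intros=["See how teams ship faster."],
    ctas=["Read the guide", "Start a trial"],
)
print(len(approved), "approved;", len(rejected), "held for review")
```

A phrase filter only catches the obvious cases, so it complements rather than replaces the compliance checks listed above.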

Measurement rigor: how to prove incremental ROI

Most disputes about “what worked” vanish when you standardize measurement.

- MMM for budget allocation: Quarterly or semiannual models inform channel mixes and macro shifts. The 2025 Nielsen MMM analysis with Google illustrates how AI-mixed portfolios can outperform single-channel bets (Nielsen 2025 Google MMM case study).

- MTA for journey insights: Where privacy permits, use path data to optimize sequences and content touchpoints; be explicit about identity rules and consent.

- Causal lift tests as truth serum: Plan geo-split or PSA (placebo ad) designs for your biggest levers (e.g., uplift-modeled segments). If you need a practitioner primer, our guide to causal lift (geo/PSA) walks through design and pitfalls.

- Dashboard discipline: At minimum, track segment-level conversion rate, CPA/CAC, ROAS, revenue, CLV, and incremental lift. Show both overall and per-segment trends.
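The incremental lift metric on that dashboard comes from comparing a treated group with a randomized holdout. The sketch below works through the arithmetic with made-up numbers; the function name, the inputs, and the pooled two-proportion z-score are illustrative choices, not a prescribed methodology.

```python
import math


def incremental_lift(treated_users, treated_conv, control_users, control_conv,
                     revenue_per_conv, spend):
    """Compare a treated group with a randomized holdout."""
    cr_t = treated_conv / treated_users
    cr_c = control_conv / control_users
    incremental_conv = (cr_t - cr_c) * treated_users

    # Pooled two-proportion z-score as a rough significance check.
    pooled = (treated_conv + control_conv) / (treated_users + control_users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / treated_users + 1 / control_users))

    return {
        "relative_lift": (cr_t - cr_c) / cr_c if cr_c > 0 else None,
        "incremental_conversions": incremental_conv,
        "incremental_roas": incremental_conv * revenue_per_conv / spend,
        "z_score": (cr_t - cr_c) / se if se > 0 else None,
    }


# Made-up example: 5% conversion in treatment vs. 4% in the holdout.
result = incremental_lift(
    treated_users=10_000, treated_conv=500,
    control_users=2_000, control_conv=80,
    revenue_per_conv=60.0, spend=2_000.0,
)
for key, value in result.items():
    print(f"{key}: {value:.3f}")
```

With these invented inputs, the relative lift is about 25%, which translates into roughly 100 incremental conversions and an incremental ROAS of about 3.0. The z-score lands just under 2, a reminder that a small holdout can leave even a healthy-looking lift statistically borderline.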

Evidence cadence:

- Weekly: creative/offers, triggers, outlier diagnostics, and quick reallocations up to ±10%.

- Monthly: model refresh, budget reallocation, segment reshaping, and new test queue.

- Quarterly: MMM readout, strategic rebalancing, and roadmap updates.
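The weekly "quick reallocations up to ±10%" can be expressed as a small rule: move budget toward channels with above-average incremental ROAS, cap every channel's change, and keep total spend constant. This is a sketch under assumed inputs (per-channel budgets and incremental ROAS estimates), not a full optimizer.

```python
def reallocate(budgets, iroas, max_shift=0.10):
    """Shift spend toward higher incremental ROAS, capped per channel, total unchanged."""
    total = sum(budgets.values())
    # Spend-weighted average incremental ROAS across channels.
    avg = sum(iroas[c] * budgets[c] for c in budgets) / total
    if avg <= 0:
        return dict(budgets)  # nothing sensible to optimize toward

    deltas = {}
    for channel, budget in budgets.items():
        rel = (iroas[channel] - avg) / avg           # relative over/under-performance
        rel = max(-max_shift, min(max_shift, rel))   # cap the change per channel
        deltas[channel] = budget * rel

    adds = sum(d for d in deltas.values() if d > 0)
    cuts = -sum(d for d in deltas.values() if d < 0)
    moved = min(adds, cuts)  # only move what both sides can absorb

    result = {}
    for channel, budget in budgets.items():
        d = deltas[channel]
        if d > 0:
            d *= moved / adds
        elif d < 0:
            d *= moved / cuts
        result[channel] = round(budget + d, 2)
    return result


print(reallocate(
    budgets={"email": 1000.0, "social": 1000.0, "search": 2000.0},
    iroas={"email": 3.0, "social": 1.0, "search": 2.0},
))
```

Scaling the larger side down to match the smaller one means no channel ever moves by more than the cap, even when winners and losers are unevenly sized.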

Governance and risk management you can live with

Nobody wants to explain a privacy incident to the board. Bake protections into the system:

- Consent-aware activation: Segment and trigger logic must check consent state before firing. Respect CPRA’s “Do Not Sell or Share” and minors’ opt-in. Confirm your CMP and backend flows align with the California Attorney General’s published requirements (California AG CPRA/CCPA page).

- Data minimization: Drop fields you don’t use, and justify each feature in your models.

- Model governance: Monitor drift, fairness, and stability. Keep fallback rules (e.g., pause a segment if lift turns negative or complaint rates spike).

- Transparency: Explain personalization in your privacy policy and preference center. Offer easy opt-outs and frequency controls.
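The fallback rule mentioned under model governance (pause a segment if lift turns negative or complaint rates spike) is easy to automate. In this sketch, the `SegmentHealth` fields and the "twice the baseline" spike threshold are assumptions; pick thresholds that match your own complaint history.

```python
from dataclasses import dataclass


@dataclass
class SegmentHealth:
    name: str
    lift: float                     # measured incremental lift vs. holdout
    complaint_rate: float           # complaints / messages delivered
    baseline_complaint_rate: float  # trailing average for comparison


def pause_reasons(health, spike_factor=2.0):
    """Return the reasons a segment should be paused; an empty list means keep running."""
    reasons = []
    if health.lift < 0:
        reasons.append("negative lift")
    if health.complaint_rate > spike_factor * health.baseline_complaint_rate:
        reasons.append("complaint spike")
    return reasons


segments = [
    SegmentHealth("lapsing_winback", lift=-0.02, complaint_rate=0.001,
                  baseline_complaint_rate=0.001),
    SegmentHealth("vip_early_access", lift=0.08, complaint_rate=0.0005,
                  baseline_complaint_rate=0.0004),
]
for seg in segments:
    reasons = pause_reasons(seg)
    print(seg.name, "PAUSE: " + ", ".join(reasons) if reasons else "ok")
```

Returning reasons instead of a bare boolean gives the weekly governance review something concrete to discuss.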

For cultural alignment, I often quote Scott Morris, CMO of Sprout Social (2025): he argues that embedding AI into daily operations—not as a side project—drives durable efficiency and growth, a theme echoed in Sprout’s marketing priorities discussion (Sprout Social 2025 marketing priorities).

Troubleshooting: what breaks and how to fix it

- Flat segments with noisy features: Prune inputs; prefer fewer, higher-signal features. Rebuild with time-decay weighting.

- Over-personalization fatigue: Cap frequency by segment; rotate value content (education, community, social proof) between offers.

- Attribution whiplash: Triangulate MTA with MMM and causal lift; define decision rules for conflicts.

- Creative drift: Centralize prompts and block libraries; add human QA at segment entry.

- Model decay: Schedule monthly recalibration; alert on AUC/calibration dips; keep a simple baseline (e.g., RFM-only) as a fallback.
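For the time-decay weighting suggested in the first fix, a common approach is exponential decay with a half-life, so recent engagement counts more than old engagement. The 14-day half-life and the event fields below are assumptions for illustration.

```python
from datetime import date


def decayed_engagement(events, today, half_life_days=14):
    """Sum engagement per topic, halving an event's weight every half_life_days."""
    scores = {}
    for event in events:
        age_days = (today - event["date"]).days
        weight = 0.5 ** (age_days / half_life_days)
        scores[event["topic"]] = scores.get(event["topic"], 0.0) + weight
    return scores


events = [
    {"topic": "pricing", "date": date(2025, 7, 1)},   # today: weight 1.0
    {"topic": "pricing", "date": date(2025, 6, 17)},  # 14 days old: weight 0.5
    {"topic": "seo", "date": date(2025, 6, 3)},       # 28 days old: weight 0.25
]
print(decayed_engagement(events, today=date(2025, 7, 1)))
```

A shorter half-life makes segments react faster but also makes them noisier, so it is worth testing a few values against holdout performance.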

If you’re tuning cross-functional workflows, our guide on best practices for content workflows that win with humans and AI (2025) covers collaboration patterns and handoffs.

Example weekly operating rhythm (90 minutes total)

- 15 min: Segment health review (size, recency, lift indicators).

- 25 min: Trigger performance (fire rates, conversions, opt-outs, complaints).

- 25 min: Creative/offer learning (variant performance vs. hypothesis; next tests).

- 15 min: Budget tweaks (±5–10% reallocations based on incremental lift and ROAS).

- 10 min: Risks & governance (privacy flags, deliverability, brand safety incidents).

Tooling notes without vendor lock-in

- Data & identity: CDP/warehouse + CRM that supports real-time profiles and consent fields.

- Orchestration: Journey builders that can read events, evaluate rules, and trigger cross-channel messages.

- Optimization: DCO/generative systems integrated with approval flows; experimentation platform for lift tests.

- Measurement: MMM partner or in-house econometrics; attribution and experimentation tools aligned with privacy constraints.

As adoption ramps, keep in mind that McKinsey’s AI research (2024–2025) reports substantial enterprise uptake and consistent gains when personalization is embedded end-to-end—though specific lift ranges vary by industry and maturity (McKinsey State of AI). Treat external benchmarks as directional; your context determines your ceiling.

Implementation checklist you can start this week

- Define 3 KPIs (business-level) and 3 guardrails (brand/privacy) for the quarter.

- Ship a minimal event schema: page_view, content_view(category, topic), signup, purchase, unsubscribe.

- Stand up 3 foundational segments: RFM tiers, lifecycle stage, and content propensity (topic-level).

- Activate 2 high-intent triggers: category re-browse and price drop/back-in-stock.

- Pre-approve 10 modular content blocks and 5 images for rapid assembly.

- Launch 1 geo/PSA lift test on your most material segment.

- Commit to a weekly 90-minute optimization ritual and a monthly reallocation meeting.
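The minimal event schema from the checklist can be enforced with a few lines of validation at ingestion. The event names and the `category`/`topic` fields come from the checklist; the common fields (`user_id`, `ts`) are an assumption added here for illustration.

```python
# Assumed common fields every event carries; adjust to your tracking plan.
COMMON_FIELDS = {"event", "user_id", "ts"}

# Event names from the checklist, with any extra required fields.
SCHEMA = {
    "page_view": set(),
    "content_view": {"category", "topic"},
    "signup": set(),
    "purchase": set(),
    "unsubscribe": set(),
}


def validate(event):
    """Return a list of problems with an event dict; an empty list means it is valid."""
    name = event.get("event")
    if name not in SCHEMA:
        return [f"unknown event: {name!r}"]
    missing = (COMMON_FIELDS | SCHEMA[name]) - set(event)
    if missing:
        return [f"missing fields: {sorted(missing)}"]
    return []


print(validate({"event": "content_view", "user_id": "u1",
                "ts": "2025-07-01T09:00:00Z", "category": "guides"}))
```

Rejecting or quarantining invalid events at the door is usually cheaper than debugging a segment that quietly shrank because an event was renamed.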

The bottom line

Real-time AI optimization and dynamic segmentation aren’t “nice to have” anymore—they’re the operating system of high-ROI content marketing in 2025. The playbook is repeatable: unify data, segment dynamically, trigger with intent, iterate creative fast, measure incrementality, and protect privacy. Do this consistently, and you’ll find the room to scale budgets with confidence.

If you’re aligning your content with AI search surfaces as part of this journey, consider tightening your approach to answer optimization; our explainer on LLMO in marketing is a practical primer.