Why predictive content performance analytics are replacing traditional A/B testing in modern marketing campaigns (2025)

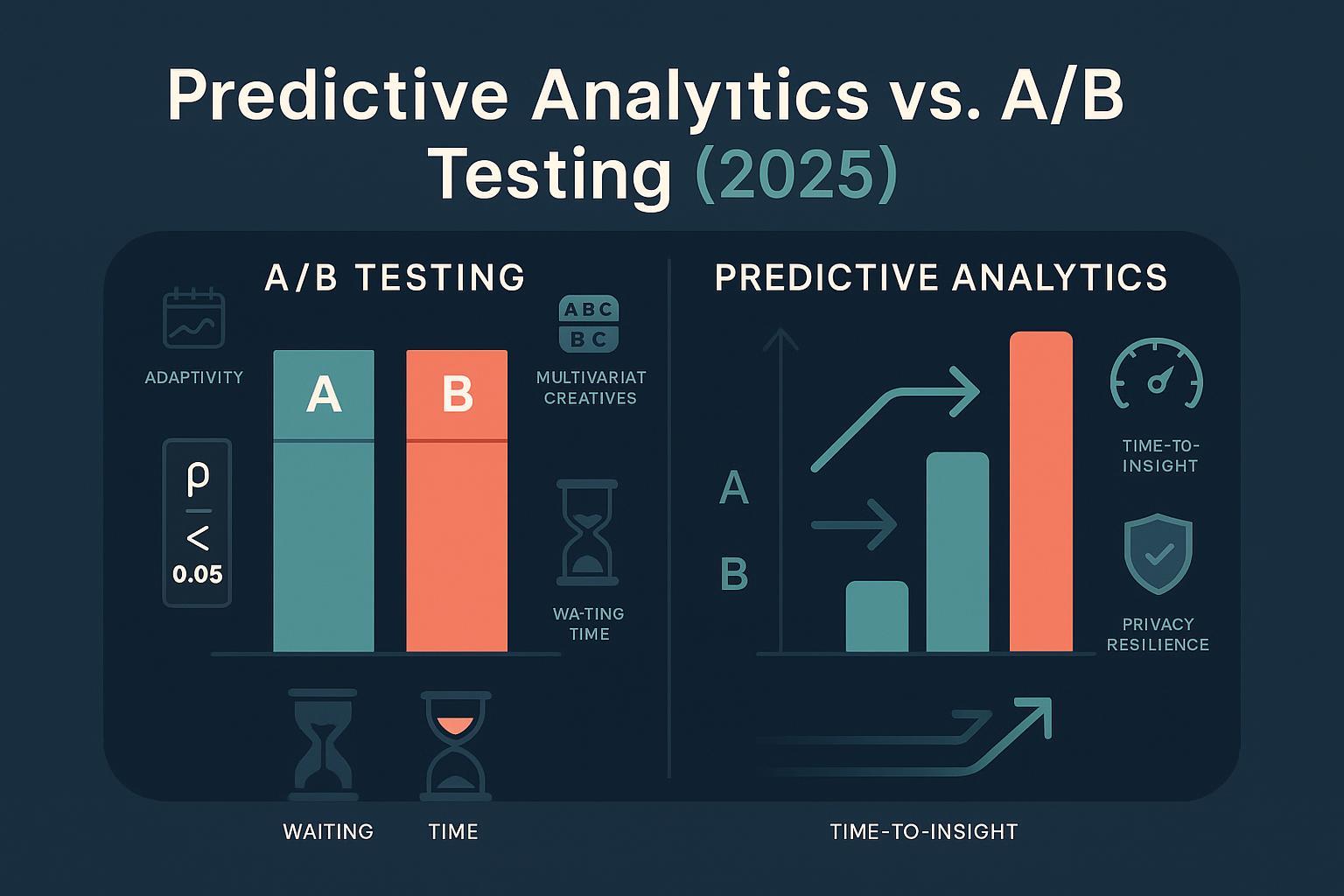

Modern marketing teams are optimizing under tighter timelines, fragmented identifiers, and an explosion of creative variants. In that environment, predictive content performance analytics—often powered by multi-armed bandit algorithms—are displacing traditional fixed-split A/B testing for many campaign decisions. The reason isn’t hype; it’s practical: faster time-to-insight, better use of scarce traffic, greater adaptability to change, and improved resilience to evolving privacy constraints. That said, A/B testing still has a crucial role when you need high-confidence causal estimates. This article explains when and why predictive approaches dominate, where classical A/B remains the right tool, and how hybrid frameworks bring the best of both worlds.

Quick definitions: A/B vs predictive/bandit approaches

- Traditional A/B testing: You split traffic (often 50/50) between two or more variants and wait until the sample is large enough to reach statistical significance. It’s straightforward and yields clean causal estimates, but it can be slow and “wasteful” because underperforming variants keep receiving their full share of traffic until the test ends.

- Predictive content analytics and bandits: Algorithms like Thompson Sampling or UCB balance exploration (learning) with exploitation (serving likely winners), dynamically reallocating traffic toward better-performing variants mid-flight.
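To make the exploration and exploitation trade-off concrete, here is a minimal sketch of a UCB1-style selection rule. The helper name `ucb1_choice` and the click and impression dictionaries are illustrative assumptions, not part of any vendor's API.

```python
from math import log, sqrt

def ucb1_choice(clicks, impressions):
    """Pick the variant with the highest UCB1 score (hypothetical helper)."""
    # Serve every variant at least once before scoring.
    for name, shown in impressions.items():
        if shown == 0:
            return name
    total = sum(impressions.values())

    def score(name):
        observed_rate = clicks[name] / impressions[name]   # exploitation term
        bonus = sqrt(2 * log(total) / impressions[name])   # exploration term
        return observed_rate + bonus

    return max(impressions, key=score)

# Equal exposure: the variant with the higher observed rate is chosen.
print(ucb1_choice({"A": 20, "B": 30}, {"A": 1000, "B": 1000}))
# Uneven exposure: the rarely shown variant earns a large exploration bonus.
print(ucb1_choice({"A": 0, "B": 30}, {"A": 10, "B": 1000}))
```

The first call picks B on observed performance alone. The second picks A, because ten impressions are too few to rule it out.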

If you want a gentle refresher on optimization context, see this overview: A/B testing and optimization within an AI-driven content platform.

Decision speed and time-to-insight

Fixed-split A/B tests require waiting for a predetermined sample size to reach significance, which is a good guard against false positives but slows decisions—especially painful for short-lived promos. In contrast, bandits and predictive allocation make earlier, useful decisions by shifting traffic to promising creatives as evidence accumulates.

- As explained in the Amplitude 2024 overview of bandits vs A/B, dynamic allocation can reach actionable outcomes faster because it continuously updates probabilities rather than waiting for a single end-of-test verdict.

- The VWO 2025 primer on multi-armed bandits similarly outlines why marketers rely on bandits when speed and cumulative conversions matter more than perfectly precise final estimates.
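To see why a predetermined sample size can be slow, the sketch below applies the standard normal-approximation formula for a two-sided, two-proportion test. The 2% baseline rate, the 12% relative lift, and the function name `sample_size_per_variant` are assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(base_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate impressions needed per variant for a fixed-split test."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)            # desired statistical power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Hypothetical inputs: 2% baseline conversion rate, 12% relative lift.
n = sample_size_per_variant(base_rate=0.02, relative_lift=0.12)
print(f"Impressions needed per variant: {n:,}")
```

Under these assumed inputs, the formula asks for more than 50,000 impressions per variant, which a short promo may never accumulate.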

Illustrative example: You’re testing three headlines (A, B, C) for a 10-day promo with ~30,000 impressions. By day 3, B is trending ahead. A bandit shifts more traffic toward B while still sampling A and C enough to keep learning. A classic A/B (or equal-split A/B/C) continues 33/33/33 until significance—useful for inference, but potentially slower to benefit from B’s advantage during the promo.
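The three-headline scenario can be sketched as a small Thompson Sampling simulation. The true click rates below are invented for the simulation (the example above does not specify any), and `thompson_sampling` is a hypothetical helper, not a platform API.

```python
import random

def thompson_sampling(true_rates, impressions, seed=0):
    """Simulate Beta-Bernoulli Thompson Sampling over a fixed number of impressions."""
    rng = random.Random(seed)
    names = list(true_rates)
    successes = {name: 0 for name in names}
    failures = {name: 0 for name in names}
    served = {name: 0 for name in names}
    for _ in range(impressions):
        # Draw a plausible rate per headline from its Beta posterior (Beta(1, 1) prior).
        draws = {name: rng.betavariate(successes[name] + 1, failures[name] + 1)
                 for name in names}
        chosen = max(draws, key=draws.get)
        served[chosen] += 1
        # Simulate whether this impression produced a click.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return served, successes

# Assumed (hypothetical) true click rates for headlines A, B, and C.
true_rates = {"A": 0.020, "B": 0.035, "C": 0.025}
served, clicks = thompson_sampling(true_rates, impressions=30_000)
print("Impressions served:", served)
print("Clicks observed:", clicks)
```

Because each headline is chosen in proportion to how often its sampled rate comes out on top, traffic drifts toward B as evidence accumulates, while A and C still receive occasional impressions.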

Sample efficiency and opportunity cost (regret)

Marketers care about conversions achieved during the experiment, not just after. Static A/B testing “wastes” traffic on weak variants until the test ends. Bandits reduce this regret by throttling weaker variants and steering more traffic toward likely winners, which raises cumulative conversions during the test itself.

- Contentful’s 2025 bandit explainer describes how dynamic allocation focuses spend where it performs, reducing the opportunity cost of waiting.

- The concept is formalized in decision theory, where bandit algorithms aim to minimize cumulative regret: the gap between the reward actually collected and the reward the best variant would have earned. A clear, accessible perspective appears in Stanford GSB’s 2024 feature on bandit-inspired experiments, which highlights how adaptive methods can deliver higher payoffs in practical experimentation settings.

Numeric illustration: Suppose variant B’s click-through rate (CTR) settles at roughly 12% above A’s (a relative lift) after the first 3,000 impressions. A bandit might then send 60–70% of subsequent traffic to B while keeping some exploration. A fixed 50/50 A/B test would keep sending half of the traffic to A until it reaches significance, increasing the cumulative “regret” (missed clicks) during the test window.
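The arithmetic behind that comparison can be worked through in a few lines. The 2% baseline CTR for A and the 27,000 remaining impressions are assumptions added here; only the 12% relative lift and the 60–70% allocation range come from the illustration.

```python
def expected_clicks(impressions, share_b, ctr_a, ctr_b):
    """Expected clicks when a fixed share of traffic goes to variant B."""
    return impressions * ((1 - share_b) * ctr_a + share_b * ctr_b)

def expected_regret(impressions, share_b, ctr_a, ctr_b):
    """Clicks given up relative to sending all traffic to the better variant."""
    best_case = impressions * max(ctr_a, ctr_b)
    return best_case - expected_clicks(impressions, share_b, ctr_a, ctr_b)

ctr_a = 0.02            # assumed baseline CTR for A
ctr_b = ctr_a * 1.12    # B is 12% better in relative terms
remaining = 27_000      # assumed impressions left after the first 3,000

print(round(expected_regret(remaining, 0.50, ctr_a, ctr_b), 2))  # even split
print(round(expected_regret(remaining, 0.65, ctr_a, ctr_b), 2))  # bandit-style split
```

With these inputs, the even split gives up about 32 expected clicks and the 65/35 split about 23. The gap widens as the lift or the remaining traffic grows.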

Adaptivity to shifting behavior and multi-variant sets

User behavior isn’t static—seasonality, news cycles, and cohort composition change. Fixed-split A/B tests are static by design. Bandit frameworks adapt by continuously updating allocation as observed performance moves.

- The Amplitude 2024 guidance notes that bandits are valuable when behavior changes mid-flight, because they blend exploration and exploitation.

- Contentful (2025) points out that bandits are particularly useful with many variants; they avoid starving plausible winners and accelerate toward the best-performing creative.
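One common way to keep a bandit responsive when behavior shifts is to discount old evidence so that recent results weigh more. The sketch below applies that idea to Thompson Sampling. The discounting scheme, the `decay` value, the class and function names, and the two-phase click rates are all assumptions for illustration; the sources cited here do not prescribe a specific mechanism.

```python
import random

class DiscountedArm:
    """Beta posterior whose evidence fades over time (illustrative, not a vendor API)."""

    def __init__(self, decay):
        self.decay = decay
        self.successes = 0.0
        self.failures = 0.0

    def sample(self, rng):
        # Beta(1, 1) prior plus the discounted counts.
        return rng.betavariate(self.successes + 1.0, self.failures + 1.0)

    def fade(self):
        # Shrink old evidence so recent behavior carries more weight.
        self.successes *= self.decay
        self.failures *= self.decay

    def record(self, converted):
        if converted:
            self.successes += 1.0
        else:
            self.failures += 1.0

def simulate(phases, steps_per_phase, decay=0.999, seed=1):
    """Return the traffic served to each variant in each phase."""
    rng = random.Random(seed)
    arms = {name: DiscountedArm(decay) for name in phases[0]}
    served_by_phase = []
    for rates in phases:
        served = {name: 0 for name in arms}
        for _ in range(steps_per_phase):
            draws = {name: arm.sample(rng) for name, arm in arms.items()}
            chosen = max(draws, key=draws.get)
            for arm in arms.values():
                arm.fade()
            arms[chosen].record(rng.random() < rates[chosen])
            served[chosen] += 1
        served_by_phase.append(served)
    return served_by_phase

# Hypothetical scenario: the better variant flips between phase 1 and phase 2.
phases = [{"A": 0.10, "B": 0.05}, {"A": 0.05, "B": 0.10}]
for number, served in enumerate(simulate(phases, steps_per_phase=5_000), start=1):
    print(f"Phase {number}: {served}")
```

Because every arm fades on each step and one observation is added, the total evidence levels off near 1 / (1 - decay) observations, about 1,000 here. A smaller `decay` reacts faster to change but yields noisier estimates.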

Privacy resilience (as of 2025-10)

Privacy rules and platform changes affect how we measure and attribute. Predictive analytics that rely on aggregated first-party signals and modeled outcomes are generally more resilient than approaches that depend heavily on granular third-party identifiers.

- On April 22, 2025, Google outlined its next steps for Privacy Sandbox and tracking protections in Chrome. Third-party cookies continue in Chrome for now, but Sandbox APIs (like Attribution Reporting) are active, and tracking protections remain in focus. This environment favors approaches that can work with aggregated, privacy-preserving signals.

- On iOS, Apple’s App Tracking Transparency (ATT) and SKAdNetwork documentation continue to limit deterministic identifiers; modeled and probabilistic attribution are standard practice in 2025.

In content marketing, leaning into first-party analytics and predictive planning helps. For example, using AI-powered topic suggestions backed by search intent and performance signals can guide creative choices without relying on third-party cookies. Predictive models can aggregate on-site engagement, email metrics, and contextual signals to score content while respecting user privacy norms.

Operational complexity and governance

Predictive allocation is powerful but requires more maturity:

- Explainability: Stakeholders may find classical A/B easier to understand. Document model logic, guardrails, and decision thresholds.

- Monitoring and drift: Behavior changes; models drift. Plan for periodic recalibration and holdouts.

- Skills and processes: You’ll need instrumentation, first-party pipelines, and experimentation ops.

Industry movement supports this trajectory. Optimizely’s recent development cycle brought contextual bandits to web experimentation, illustrating how mainstream platforms are operationalizing adaptive methods; see Optimizely’s 2025 web experimentation release notes for a vendor example and timeline.

When to prefer which method

Prefer predictive/bandit approaches when:

- Short-lived promos (days to weeks) demand quick learning and cumulative gains.

- Limited traffic and many creatives (3+) make sample efficiency critical.

- Environments where user behavior shifts (seasonality, cohort changes) benefit from adaptivity.

- Privacy constraints discourage reliance on device-level identifiers; first-party modeled signals are available.

Prefer classical A/B when:

- High-stakes product or policy changes require precise, causal estimates.

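- You need clean post-test analysis in which every variant has enough data to compare across segments and secondary metrics; bandits deliberately under-sample weaker variants, which makes their estimates noisier.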

- Your organization prioritizes simplicity and auditability over speed and cumulative gains.

For readers exploring exploration vs exploitation and how it impacts SEO visibility measurement, this explainer provides context: how a search visibility score is calculated and why exploration/exploitation matters.

Hybrid experimentation: a practical workflow

Most teams benefit from combining both methods:

- Seed: Run a short, equal-split A/B (or A/B/C) to check baselines and catch defects.

- Exploit: Switch to bandits (e.g., Thompson Sampling) to maximize cumulative conversions while keeping some exploration.

- Holdouts: Maintain a randomized control group to estimate incremental lift and guard against model bias.

- Recalibrate: Periodically rerun A/B or adjust model priors to detect drift or seasonality.
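The holdout step can be sketched as a deterministic, hash-based assignment plus a simple lift calculation. The function names, the salt string, the 10% holdout share, and the conversion counts are hypothetical; a real setup would also need a confidence interval around the lift.

```python
import hashlib

def in_holdout(user_id, holdout_share=0.10, salt="promo-holdout"):
    """Assign a first-party user ID to the holdout group, stable across sessions."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000   # uniform value in [0, 1)
    return bucket < holdout_share

def incremental_lift(treated_conversions, treated_users,
                     holdout_conversions, holdout_users):
    """Relative lift of the bandit-served group over the randomized holdout."""
    treated_rate = treated_conversions / treated_users
    holdout_rate = holdout_conversions / holdout_users
    return (treated_rate - holdout_rate) / holdout_rate

# Hypothetical counts: 270 of 9,000 treated users convert vs 25 of 1,000 held out.
lift = incremental_lift(270, 9_000, 25, 1_000)
print(f"Holdout for user-42: {in_holdout('user-42')}")
print(f"Estimated incremental lift: {lift:.1%}")
```

Hashing the ID keeps each user in the same group without storing an assignment table, and changing the salt reshuffles the groups for the next campaign.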

This approach mirrors patterns described across industry sources, including Optimizely’s ecosystem guidance and the broader adaptive experimentation literature, such as the Amplitude and VWO primers cited above.

Side-by-side comparison (at a glance)

| Dimension | Predictive/Bandit Analytics | Traditional A/B Testing |
|---|---|---|
| Decision speed | Dynamic allocation delivers earlier, actionable insights | Waits for significance; slower decisions in short windows |
| Sample efficiency | Minimizes regret; more traffic goes to likely winners mid-test | Equal split until the end; “wasted” traffic on underperformers |
| Adaptivity | Adjusts to shifts and supports many variants effectively | Static allocation; less responsive to seasonality/behavior changes |
| Privacy resilience (2025-10) | Works well with aggregated first-party and modeled signals; aligns with Sandbox/ATT constraints | Often designed around deterministic identifiers; feasible but less resilient without adaptation |
| Operational complexity | Higher: instrumentation, monitoring, explainability, governance | Lower: simple to explain, audit, and train |
| Best-fit scenarios | Short promos, multi-variant creative sets, limited traffic, dynamic environments | High-confidence causal decisions, product/policy changes, compliance-heavy contexts |

Also consider: tooling to operationalize predictive content workflows

If you are looking for a platform to help operationalize predictive content planning and analytics, consider QuickCreator for AI-assisted content creation, optimization, and first-party analytics workflows. Disclosure: QuickCreator is our product.

Getting started checklist

- Clarify goal: cumulative conversions vs causal certainty.

- Inventory data: identify first-party events, content engagement, and privacy constraints.

- Start small: run a brief A/B to validate variants and instrumentation.

- Switch to adaptive allocation: use bandits for exploitation in short-lived or multi-variant campaigns.

- Maintain governance: holdouts, guardrail metrics, documentation, and periodic recalibration.

- Evolve measurement: integrate privacy-preserving attribution (e.g., Chrome Attribution Reporting; SKAdNetwork on iOS) and modeled outcomes.

Sources and further reading

- Amplitude (2024): Multi-Armed Bandits vs. A/B Testing — foundational comparison and trade-offs.

- VWO (2025): Multi-armed bandit algorithm in experimentation — practical marketer guidance.

- Contentful (2025): Leveraging AI for smarter experimentation: bandits — dynamic allocation context for digital content.

- Stanford GSB (2024): Bandit-inspired experiments reduce inefficiency — adaptive experimentation perspective.

- Google Privacy Sandbox (2025-04-22): Next steps for tracking protections in Chrome — current state and implications.

- Apple Developer Docs: SKAdNetwork overview — privacy-preserving attribution on iOS.

- Optimizely (2025): Web Experimentation release notes: contextual bandits — industry implementation example.