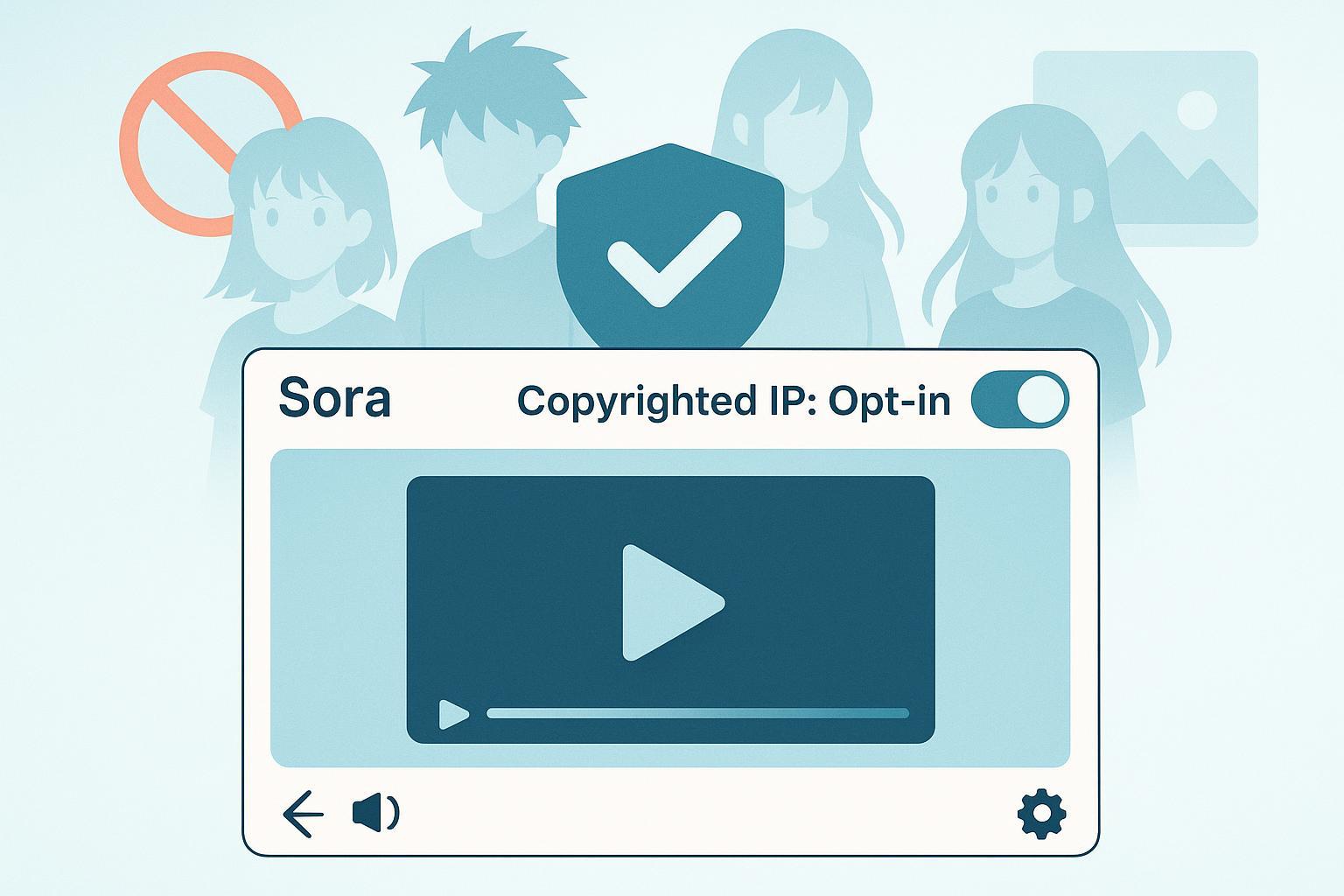

OpenAI’s Sora pivots to opt-in for copyrighted IP after anime backlash (2025)

OpenAI is shifting Sora’s approach to copyrighted characters from “opt-out” to a permission-first, opt-in model, following days of viral videos featuring famous IP and a swift backlash from anime studios and other rights holders. In early October 2025, OpenAI signaled “granular” controls for rights holders and even floated a revenue-sharing idea for those who choose to participate. Policies are still evolving, but the direction is clear: if a studio hasn’t opted in, Sora should prevent generation of its characters.

Below, I unpack what changed, why it matters for marketers and creators, and how to adapt your workflows without tripping legal or platform-policy wires.

What changed—confirmed vs. evolving details

- Confirmed shift and rationale: In his October 2025 post “Sora update #1,” Sam Altman said OpenAI will give rights holders “more granular control” over character generation and move to an opt-in standard for copyrighted characters. He also said OpenAI will try sharing revenue with rights holders who permit usage.

- Press corroboration: Reporting that OpenAI is backing away from an opt-out posture and emphasizing a permission-first model appears across top-tier outlets, including TechCrunch’s Oct 4, 2025 coverage and Business Insider’s Oct 4, 2025 report.

- Evolving specifics: The exact tooling for rights-holder controls (e.g., registry, API, publisher dashboards) and the revenue-share mechanics (percentages, eligibility, audit) remain unspecified. Even OpenAI acknowledges there will be “edge cases” where unauthorized generations slip through, per Altman’s 2025 post.

- Context for the pivot: Viral clips featuring popular anime and other protected characters spread widely and drew criticism from rights holders, as documented in PC Gamer’s Oct 5, 2025 write-up on the backlash wave.

Why this matters for creators, marketers, and publishers

- Legal and brand safety: A permission-first baseline reduces risk but does not eliminate it. Implementation details and enforcement thresholds are still in flux, so plan for both false positives (legitimate content blocked) and misses (unauthorized content getting through). Legal analysts frame the change as a significant backtrack driven by pressure from rights holders, with open questions about execution, per Copyright Lately’s Oct 5, 2025 analysis.

- Distribution risk: Platforms like YouTube and TikTok have their own synthetic media rules; even compliant Sora outputs could face takedowns if platform policies are breached. Align your content disclosures and intent with host-platform rules, not just Sora’s.

- Operational friction vs. clarity: Opt-in adds steps (rights checks, documentation) but also brings clarity for brands and agencies craving predictable, defensible workflows.

A compliance-by-design workflow you can implement today

Use this practical, repeatable process to reduce risk and keep publishing velocity high.

- Pre-prompt IP scan

  - Decide: Are you touching any identifiable IP, such as names, logos, distinctive trade dress, signature phrases, or unmistakable character traits?

  - If yes: Assume opt-in is required unless you have written permission. If permission is not available, redesign the concept toward original, non-infringing assets.

- Rights clearance and guardrails

  - Seek written permission from the rights holder (or confirm they’ve publicly opted in once OpenAI publishes a verified list). Specify scope: which characters, contexts, geographies, and distribution channels.

  - Add prompt guardrails: explicitly avoid trademarked names and protected visual motifs if permission is not secured.

- Documentation and traceability

  - Maintain a shared folder with: prompts/seed files, permission emails/contracts, links to official policy pages, and a live change-log of OpenAI updates impacting your project.

  - At this stage, a neutral content ops tool can help. For example, QuickCreator can be used to record change-log entries alongside your drafts, attach source links for policy updates, and publish compliant updates across languages without touching code. Disclosure: QuickCreator is our product.

- Platform policy mapping

  - Map each asset to the destination platform’s AI/synthetic media policies and disclosure requirements. Ensure your captions, descriptions, and metadata state what’s AI-generated and how likenesses are handled.

- Distribution QA and labeling

  - Before publishing: Verify disclosure labels, check for any unintentional IP references, and confirm permissions match the final cut. Export and store a compliance summary for audit.

- Post-publish monitoring and escalation

  - Monitor comments, DMs, and platform notices within the first 24–72 hours. Prepare a takedown/appeal playbook with contact points and a prewritten response.
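The first two steps of this workflow (the pre-prompt IP scan and the rights-clearance check) can be partly automated. The sketch below is illustrative only: the `WATCHLIST` terms, the `Permission` record shape, and the `clearance` function are hypothetical names invented for this example and are not part of Sora or any OpenAI API. A keyword scan also cannot detect visual lookalikes or trade dress, so treat it as a first filter, not a substitute for human review.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Permission:
    """A written permission on file for one protected term (hypothetical shape)."""
    term: str
    channels: frozenset  # distribution channels the permission covers
    expires: date        # last day the permission is valid


# Hypothetical watchlist: terms your team has flagged as identifiable IP.
WATCHLIST = {"examplehero", "example studio", "catchphrase of doom"}


def scan_prompt(prompt):
    """Return watchlist terms found in the prompt (case-insensitive, whole words)."""
    lowered = prompt.lower()
    return {
        term for term in WATCHLIST
        if re.search(r"\b" + re.escape(term) + r"\b", lowered)
    }


def clearance(prompt, channel, permissions, today):
    """Classify a prompt as 'clear', 'permitted', or 'blocked' for one channel.

    Returns (status, terms_without_valid_permission).
    """
    hits = scan_prompt(prompt)
    if not hits:
        return "clear", hits
    by_term = {p.term: p for p in permissions}
    uncovered = {
        t for t in hits
        if t not in by_term
        or channel not in by_term[t].channels
        or by_term[t].expires < today
    }
    return ("blocked" if uncovered else "permitted"), uncovered
```

A prompt that mentions a watchlist term is blocked unless a permission on file covers that term, that channel, and today's date. Scoping permissions by channel mirrors the advice above to specify distribution channels in the written grant.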

Aligning with platform rules without slowing down

Even if Sora enforces an opt-in registry, your content still lives or dies on host-platform rules.

- YouTube: Expect stricter monetization rules for obviously synthetic or “mass-produced” content and clearer disclosure responsibilities. For a practical overview of creator-facing expectations and labeling workflows, see our guide, AI-Generated Content on YouTube: Everything You Need to Know.

- TikTok: Disclosure of AI-generated content and restrictions around impersonation continue to tighten. Treat likeness usage as high risk without explicit consent, and assume rapid takedowns for violations.

Tip: Maintain a one-page matrix that pairs each video with its platform, required disclosures, and links to the latest official rules. Update it alongside your Sora change-log.
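One way to keep that matrix machine-checkable is to store each row as a small record and flag anything still missing before publishing. This is a minimal sketch under assumptions: the `MatrixRow` fields and the disclosure labels in the usage note are hypothetical placeholders, not official platform terminology.

```python
from dataclasses import dataclass, field


@dataclass
class MatrixRow:
    """One video on one platform (hypothetical record shape)."""
    video_id: str
    platform: str
    required_disclosures: list  # labels the platform's rules call for
    applied_disclosures: list = field(default_factory=list)
    policy_links: list = field(default_factory=list)  # links to official rules
    last_reviewed: str = ""  # ISO date of the last policy check


def missing_disclosures(row):
    """Return required disclosures not yet applied, preserving order."""
    applied = set(row.applied_disclosures)
    return [d for d in row.required_disclosures if d not in applied]


def publish_blockers(matrix):
    """Map (video_id, platform) to whatever is still missing before publishing."""
    blockers = {}
    for row in matrix:
        problems = missing_disclosures(row)
        if not row.policy_links:
            problems.append("no policy link recorded")
        if problems:
            blockers[(row.video_id, row.platform)] = problems
    return blockers
```

For example, a row for video `v1` on TikTok that requires `ai-label` and `likeness-consent` but has only `ai-label` applied and no policy link would be reported with both gaps, while a fully labeled YouTube row would not appear in the result.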

Ethical creativity under opt-in constraints

If you don’t have permission, lean into originality and transparency.

- Build original character bibles: Define visual motifs, backstories, and movement styles that are unmistakably yours. Avoid “lookalikes” of famous IP.

- Avoid trade dress and signature elements: Small changes to a famous outfit, colorway, or silhouette can still infringe.

- Label and explain: Tell audiences what’s AI-generated and why. For a broader framework on transparency and accountability in AIGC workflows, see Ensuring Ethical AI: The Importance of Transparency and Accountability in AIGC Processes.

Monitoring, documentation, and contingency planning

- Change-log hygiene: Track OpenAI policy updates, editor/UX changes inside Sora, and any published lists of opted-in rights holders. Note dates, links, and impact on your current projects.

- Metadata and disclosures: Keep a standard template for video descriptions that summarizes AI use, rights status, and links to relevant policies. For teams that work across multiple AI tools and platforms, a clear read on T&Cs reduces surprises; this primer is helpful: The Ultimate Character AI Terms and Conditions Guide.

- Takedown playbook: Pre-write responses, define who signs off on appeals, and document evidence of permissions. Assume some legitimate videos may still be flagged while enforcement systems mature.
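The change-log and description-template ideas above could be captured in a few lines of code. The following is a sketch, not a prescribed format: the `ChangeLogEntry` fields, the template wording, and the function names are all assumptions made for illustration.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class ChangeLogEntry:
    """One policy or product update (hypothetical record shape)."""
    day: str     # ISO date, e.g. "2025-10-06"
    source: str  # who published the update
    link: str    # where the update is documented
    impact: str  # effect on current projects


# Hypothetical standard template for video descriptions.
DESCRIPTION_TEMPLATE = Template(
    "AI use: $ai_use\n"
    "Rights status: $rights_status\n"
    "Policies: $policy_links"
)


def render_description(ai_use, rights_status, policy_links):
    """Fill the template; refuse to render without at least one policy link."""
    if not policy_links:
        raise ValueError("at least one policy link is required")
    return DESCRIPTION_TEMPLATE.substitute(
        ai_use=ai_use,
        rights_status=rights_status,
        policy_links=", ".join(policy_links),
    )


def entries_since(log, cutoff):
    """Return entries dated on or after cutoff, newest first.

    ISO dates compare correctly as plain strings.
    """
    recent = [e for e in log if e.day >= cutoff]
    return sorted(recent, key=lambda e: e.day, reverse=True)
```

Requiring a policy link at render time is a deliberate choice here: it makes a missing citation fail loudly before publishing instead of surfacing later during a takedown appeal.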

Quick platform-and-policy snapshot (as of early Oct 2025)

- Sora shift: Opt-in required for copyrighted characters; rights-holder “granular” controls planned; revenue share proposed but undefined, per Altman’s 2025 note and corroboration by TechCrunch (Oct 4, 2025) and Business Insider (Oct 4, 2025).

- Backlash context: Viral use of anime and other IP accelerated the policy pivot, as recapped by PC Gamer (Oct 5, 2025) and platform coverage in eWeek’s Sora 2 overview (2025).

- Uncertainties to watch: The rights-holder portal/API, the specific enforcement stack, and revenue-share terms. Legal commentators urge caution until formal OpenAI documentation is published, as noted by Copyright Lately (Oct 5, 2025).

Mini change-log

- Updated on 2025-10-06: Added confirmations from TechCrunch and Business Insider; cited Altman’s Oct 2025 note; clarified that revenue-sharing is proposed and details are pending; emphasized that enforcement “edge cases” are expected during rollout.

Final thought

Opt-in is a necessary correction—and an opportunity. Teams that build compliance-by-design muscles now will ship faster with fewer takedowns as policies stabilize. Treat permission, documentation, and transparent labeling as your base stack. Use originality as your creative advantage, and keep a disciplined change-log so your future self (and your legal team) can see exactly why each decision was made.