How to Use AI to Multiply Content Output

If you want more content without more chaos, don’t start by installing new tools; start by redesigning the workflow. AI multiplies output when it has clear jobs, humans own judgment, and quality gates keep both honest.

1) Start with outcomes, not tools

Pick a narrow slice to win first. Tie business outcomes to content types and cadence—e.g., two SEO articles and one newsletter per week aimed at demo signups or email growth. Prioritize topics by search intent and potential impact, not by which prompts feel cool. Set baseline metrics (current cycle time, error rate, and traffic) so you can prove lift later.

2) The hybrid human–AI pipeline that actually scales

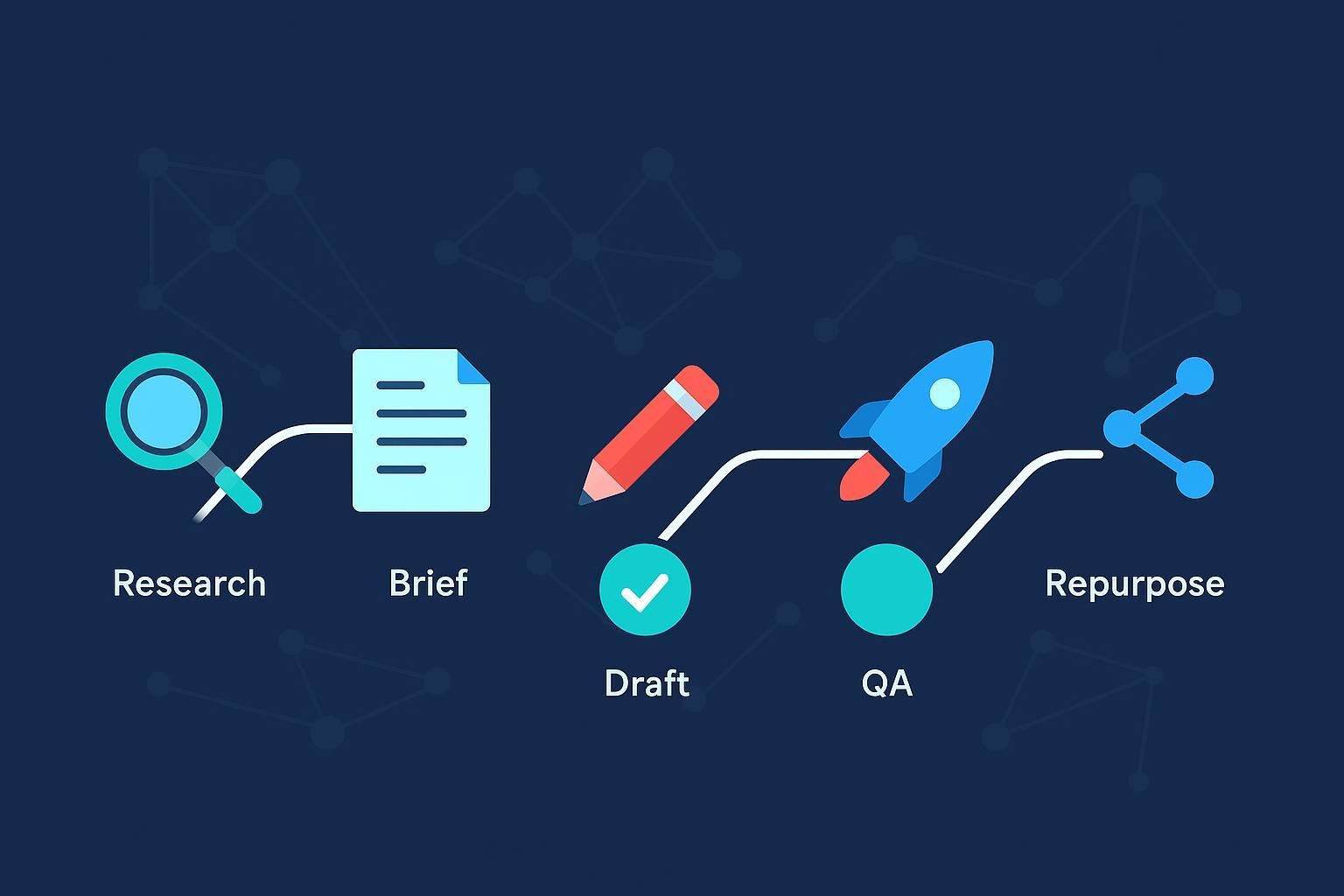

Think of the pipeline like a relay: AI accelerates research and first drafts; humans guide strategy, experience, and quality. Each stage has a single owner and a QA gate.

| Stage | Primary Owner | AI’s Job | Human’s Job | QA Gate |
|---|---|---|---|---|
| A. Research & Ideation | Strategist | Cluster entities, summarize SERP patterns, mine FAQs | Validate intent, business value, and angles | Topic–intent fit approval |
| B. Brief Creation | Editor | Draft outline, collect entities/questions, compile source pack | Finalize scope, add differentiation notes | Brief completeness sign-off |
| C. Drafting (First Pass) | Writer | Produce structured draft from approved brief with source notes | Ensure voice constraints and no unsourced claims | Structure/readability checks |
| D. Expert + Editorial Edit | SME + Editor | Suggest rewrites from checklists | Inject lived experience, examples, and corrections | Plagiarism/fact checks; brand voice pass |
| E. Final QA & Compliance | Managing Editor | Generate meta ideas, link suggestions | Approve metadata, accessibility, schema | Accuracy/originality/compliance checklist |
| F. Publish & Distribute | Publisher | Create snippets for social/email variations | Schedule releases, verify technicals | Publishing checklist (indexability, performance) |
| G. Post-Publication Optimization | SEO Lead | Suggest entity gaps and internal link targets | Prioritize refreshes and enrich content | Improvement tickets created and tracked |
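
One way to make "single owner plus QA gate" concrete is to model the stages as data and refuse any sign-off that skips a gate. The sketch below is illustrative only: `Stage`, `build_pipeline`, and `sign_off` are hypothetical names, and matching the approver by exact owner string is a simplifying assumption rather than a feature of any particular CMS.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    owner: str
    qa_gate: str
    passed: bool = False


def build_pipeline() -> list[Stage]:
    """Stages, owners, and gates copied from the pipeline table."""
    return [
        Stage("Research & Ideation", "Strategist", "Topic-intent fit approval"),
        Stage("Brief Creation", "Editor", "Brief completeness sign-off"),
        Stage("Drafting (First Pass)", "Writer", "Structure/readability checks"),
        Stage("Expert + Editorial Edit", "SME + Editor", "Plagiarism/fact checks; brand voice pass"),
        Stage("Final QA & Compliance", "Managing Editor", "Accuracy/originality/compliance checklist"),
        Stage("Publish & Distribute", "Publisher", "Publishing checklist"),
        Stage("Post-Publication Optimization", "SEO Lead", "Improvement tickets created and tracked"),
    ]


def next_open_gate(stages: list[Stage]) -> Stage | None:
    """Return the first stage whose QA gate has not passed, or None if all passed."""
    for stage in stages:
        if not stage.passed:
            return stage
    return None


def sign_off(stages: list[Stage], name: str, approver: str) -> None:
    """Pass a gate only if it is the next open one and the approver owns it."""
    current = next_open_gate(stages)
    if current is None or current.name != name:
        raise ValueError(f"'{name}' is not the next open gate")
    if approver != current.owner:
        raise PermissionError(f"'{current.name}' must be signed off by {current.owner}")
    current.passed = True
```

Enforcing order in code is a design choice: it keeps a rushed draft from reaching the publish stage before the earlier gates are closed.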

Google is explicit that how content is created isn’t the ranking criterion; helpfulness and policy compliance are. See the Search Central page “Using generative AI content” (2024–2025). For visibility in AI-enhanced experiences, Google also outlines success factors in “Top ways to ensure your content performs well in AI-enhanced Search” (May 2025).

3) Briefs that cut rewrites

A tight brief prevents meandering drafts. Include: goal, audience and stage, search intent, angle, outline with H2/H3s, must-cover entities and questions, source pack with links and quotes to verify, examples to include, internal link targets (when available), schema type, and what to exclude.

Prompt pattern to generate and iterate a brief:

“Using the following research pack and target intent, propose a content brief. Include: H1/H2 outline, target entities/definitions, FAQs to answer, 3–5 authoritative sources to cite with notes on why, on-page requirements (snippet guidance, schema), differentiation ideas, and an exclusion list. Ask three clarifying questions if information is missing. Then provide a final brief after I answer.”

Have an editor approve every brief before drafting. If a brief can’t explain why a reader should pick your page over what’s already ranking, it’s not ready.
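
The brief fields listed above can be turned into a simple completeness check that runs before the editor's sign-off. This is a minimal sketch: the `ContentBrief` class and its field names are hypothetical, and treating only internal link targets as optional follows the "when available" note in the field list.

```python
from __future__ import annotations

from dataclasses import dataclass, field, fields


@dataclass
class ContentBrief:
    goal: str = ""
    audience_and_stage: str = ""
    search_intent: str = ""
    angle: str = ""
    outline: list[str] = field(default_factory=list)              # H2/H3 headings
    entities_and_questions: list[str] = field(default_factory=list)
    source_pack: list[str] = field(default_factory=list)          # links and quotes to verify
    examples: list[str] = field(default_factory=list)
    internal_link_targets: list[str] = field(default_factory=list)
    schema_type: str = ""
    exclusions: list[str] = field(default_factory=list)
    differentiation: str = ""                                     # why pick this page over what ranks


# Assumption: only this field may be left empty.
OPTIONAL_FIELDS = {"internal_link_targets"}


def missing_fields(brief: ContentBrief) -> list[str]:
    """Return the names of required fields that are still empty."""
    return [
        f.name
        for f in fields(brief)
        if f.name not in OPTIONAL_FIELDS and not getattr(brief, f.name)
    ]
```

An empty result does not mean the brief is good, only that nothing is blank. The editor still judges whether the differentiation note is convincing.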

4) Draft fast, then add experience

First passes should be quick and controlled. Require the model to respect the brief, refuse unsourced claims, and insert placeholders where expert input is needed (quotes, proprietary data, screenshots). Then invite the subject-matter expert (SME) to layer in lived experience: “We tested X; here’s what actually happened,” or a short case example. That’s the backbone of your E-E-A-T (experience, expertise, authoritativeness, and trustworthiness).

To minimize hallucinations, ground the model on vetted sources (a lightweight RAG approach) and insist on in-line citations for factual claims the editor will verify. Industry research shows that techniques like retrieval grounding and reasoning prompts reduce unsupported assertions; treat them as guardrails, not silver bullets.
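
A rough pre-edit check can surface sentences that look like factual claims but carry no citation, and list the placeholders still waiting on an expert. Everything about the markers here is an assumption for illustration: citations are written as `[S1]`, `[S2]`, and so on inside the sentence, placeholders as `[[SME: ...]]`, `[[DATA: ...]]`, or `[[SCREENSHOT: ...]]`, and "contains a digit" stands in as a crude signal for a checkable claim.

```python
import re

CITATION = re.compile(r"\[S\d+\]")  # assumed in-line citation marker, e.g. [S1]
PLACEHOLDER = re.compile(r"\[\[(?:SME|DATA|SCREENSHOT):[^\]]*\]\]")  # assumed format
HAS_DIGIT = re.compile(r"\d")


def review_draft(draft: str) -> dict:
    """Flag sentences with numbers but no citation; list open placeholders."""
    placeholders = PLACEHOLDER.findall(draft)
    # Remove placeholders so their text is not mistaken for a claim.
    text = PLACEHOLDER.sub(" ", draft)
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

    unsourced = []
    for sentence in sentences:
        # Strip citation markers first so the digit in "[S1]" does not count.
        bare = CITATION.sub("", sentence)
        if HAS_DIGIT.search(bare) and not CITATION.search(sentence):
            unsourced.append(sentence)
    return {"unsourced": unsourced, "placeholders": placeholders}
```

This only narrows where the editor looks first. Claims without numbers slip through, so it supports the fact-check pass rather than replacing it.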

5) Quality, compliance, and E-E-A-T guardrails

Quality is a system, not a vibe. Anchor your standards to Google’s guidance and your own policies:

- Google’s Search docs stress people-first content and policy compliance; see Using generative AI content and the AI-enhanced Search guidance (May 2025).

- For on-page snippets and meta descriptions, follow Google’s guidance on snippets and meta descriptions to keep snippets unique, descriptive, and reflective of page content.

- For governance—disclosure, provenance, privacy, and bias controls—adopt principles from Google’s Responsible AI Progress Report (Feb 2025) and adapt them to your editorial SOPs.

Final QA sign-off checklist (adapt to your CMS):

- Sources verified; in-line citations added with descriptive anchors.

- Originality scan passed; quotes and third-party visuals cleared.

- Experience added (examples, tests, screenshots) and labeled clearly.

- On-page hygiene: clear headings, unique snippet, alt text, and schema.

- Accessibility and legal checks; no sensitive data in prompts or outputs.

6) On-page SEO and eligibility for AI-enhanced Search

Make the page easy to understand for both readers and systems.

- Snippets: Write unique, descriptive meta descriptions that reflect the page content; avoid repetition or manipulative patterns (see Google’s snippet/meta description guidance).

- Structure: Use clear HTML headings, descriptive anchors, and concise paragraphs. Where appropriate, add HowTo/FAQ schema and validate before publish.

- Distribution cadence: Avoid mass publishing spikes that look like thin-content automation. Stagger releases and ensure unique value per URL.
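
Two of the checks above are easy to automate before publish: spotting repeated meta descriptions and spreading releases out. The sketch below is one possible approach, not a Google requirement. The function names are hypothetical, and skipping weekends and capping releases per day are assumptions you should adapt to your own calendar.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date, timedelta


def duplicate_descriptions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each repeated meta description to the URLs that share it.

    Descriptions are compared after lowercasing and collapsing whitespace.
    """
    seen: dict[str, list[str]] = defaultdict(list)
    for url, description in pages.items():
        seen[" ".join(description.lower().split())].append(url)
    return {desc: urls for desc, urls in seen.items() if len(urls) > 1}


def stagger(urls: list[str], start: date, per_day: int = 1) -> dict[str, date]:
    """Assign publish dates with at most `per_day` releases per weekday."""
    if per_day < 1:
        raise ValueError("per_day must be at least 1")
    schedule: dict[str, date] = {}
    day = start
    for index, url in enumerate(urls):
        if index and index % per_day == 0:
            day += timedelta(days=1)
        while day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
            day += timedelta(days=1)
        schedule[url] = day
    return schedule
```

A smooth schedule does not make a thin page valuable. It only removes the spike, so the unique-value check per URL still applies.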

Want a reality check? In a July 2025 study, Pew Research noted users may click fewer links when an AI summary appears. Use compelling titles/snippets and on-page value to earn the click; see the Pew analysis on AI summaries and clicks (2025) for context.

7) Repurpose quickly without sounding robotic

Repurposing multiplies distribution, not just word count. Keep the hook and promise consistent; tailor format and tone to the channel.

Compact prompt for social/email/video derivatives:

“Given the approved article and these brand voice rules, create: (1) a LinkedIn post (200–300 words, professional tone, 1 question to spark comments), (2) an X post (≤280 characters with one strong hook), (3) an Instagram caption (100–150 words, visual-first hook + CTA), (4) a newsletter blurb (150–250 words, scannable, one CTA), and (5) a 30–45s vertical video script (hook → 3 bullets → CTA). Flag any claims that require inline citations or visuals I should capture.”

For platform-specific best practices and cadence planning, practitioner playbooks are useful; see Sprout Social’s guidance on repurposing content for social channels and adapt to your audience.
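
Models often overshoot the length limits they are given, so it helps to check each derivative against the limits in the prompt before an editor reads it. This is a minimal sketch: the `LIMITS` table mirrors the prompt above, the names are hypothetical, and the plain character count for X is an approximation because the platform weights some characters and links differently. The video script is left out since converting seconds to words would need an assumed speaking rate.

```python
# Limits copied from the repurposing prompt: (unit, minimum, maximum).
LIMITS = {
    "linkedin": ("words", 200, 300),
    "x": ("chars", 0, 280),
    "instagram": ("words", 100, 150),
    "newsletter": ("words", 150, 250),
}


def check_derivative(channel: str, text: str) -> tuple[bool, str]:
    """Return (within_limits, human-readable summary) for one derivative."""
    unit, low, high = LIMITS[channel]
    size = len(text.split()) if unit == "words" else len(text)
    ok = low <= size <= high
    return ok, f"{channel}: {size} {unit} (allowed {low}-{high})"
```

Anything that fails goes back to the model with the summary string attached. Anything that passes still gets the editor's tone check.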

8) Post-publication improvement loop

The work starts at “publish.” Instrument the page, then iterate.

- Entity enrichment: Ensure core entities are defined consistently and linked internally where relevant. Expand missing subtopics and FAQs discovered in search behavior.

- Internal linking: Add contextual links from relevant, high-traffic pages to your new piece and vice versa. Use descriptive anchors, not generic “read more.”

- Refresh cadence: Update stats and examples, extend sections readers linger on, and refine structure based on SERP shifts. Validate schema after major edits.
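
The "descriptive anchors" rule is simple to lint if your content is stored as Markdown. The sketch below assumes Markdown-style `[text](url)` links. Apart from "read more", the list of generic phrases is an illustrative assumption to extend with your own offenders.

```python
import re

# Markdown links, excluding images (which start with "!").
LINK = re.compile(r"(?<!!)\[([^\]]+)\]\(([^)\s]+)\)")

# Assumed starter list; only "read more" comes from the guidance above.
GENERIC_ANCHORS = {"read more", "click here", "here", "learn more", "this", "link"}


def generic_anchors(markdown: str) -> list[tuple[str, str]]:
    """Return (anchor text, url) pairs whose anchor text is generic."""
    return [
        (text, url)
        for text, url in LINK.findall(markdown)
        if text.strip().lower().rstrip(".!") in GENERIC_ANCHORS
    ]
```

Run it across both the new piece and the older pages that link to it, since the internal-linking advice applies in both directions.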

9) Instrumentation: measure speed, quality, and outcomes

You can’t multiply what you don’t measure. Start with a compact KPI set and track it at the stage level.

| KPI | Definition | Formula | Starter Target |
|---|---|---|---|
| Throughput | Pieces published per period | Count / time window | +25–50% vs. baseline after 6–8 weeks |
| Cycle time | Days from assignment to publish | Mean(publish − assign) | −30–40% with stable quality |
| Editor rejection rate | % of AI-assisted drafts needing major rework | Rejected / total AI-assisted drafts | <15% after prompt/brief tuning |
| Factual error rate | % of reviewed pieces with inaccuracies | Error pieces / total reviewed | <5% with source logging |
| Engagement proxy | Avg engaged time or scroll depth | Analytics measure | Upward trend per refresh cycle |
| Outcome proxy | Conversions per piece (e.g., signups) | Conversions / piece | Flat or rising as scale grows |
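
The formulas in the table can be computed from a simple log of pieces. The sketch below is one way to do it under stated assumptions: the `Piece` record and its field names are hypothetical, every logged piece is treated as reviewed, and the engagement proxy is omitted because it comes straight from your analytics tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Piece:
    assigned: date
    published: date
    ai_assisted: bool
    rejected: bool     # needed major rework at the editor gate
    has_error: bool    # a reviewer found at least one inaccuracy
    conversions: int


def kpis(pieces: list[Piece], window_days: int) -> dict[str, float]:
    """Compute the table's KPIs for pieces published within one time window."""
    if not pieces or window_days <= 0:
        raise ValueError("need at least one piece and a positive window")
    total = len(pieces)
    ai_drafts = [p for p in pieces if p.ai_assisted]
    return {
        "throughput_per_week": total * 7 / window_days,
        "cycle_time_days": mean((p.published - p.assigned).days for p in pieces),
        "editor_rejection_rate": (
            sum(p.rejected for p in ai_drafts) / len(ai_drafts) if ai_drafts else 0.0
        ),
        "factual_error_rate": sum(p.has_error for p in pieces) / total,
        "conversions_per_piece": sum(p.conversions for p in pieces) / total,
    }


def pct_change(current: float, baseline: float) -> float:
    """Percent change vs. baseline, for comparing against the starter targets."""
    return (current - baseline) / baseline * 100
```

For example, moving from a baseline of 4 pieces per week to 6 gives `pct_change(6, 4)` of 50.0, the top of the throughput target range.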

At the macro level, McKinsey estimates substantial productivity value from generative AI, especially in marketing and sales. Treat this as directional context—not a promise. See the McKinsey State of AI (2025) for the range and caveats.

10) Pitfalls and quick fixes

- Mass-producing undifferentiated pages: Pause. Tighten briefs with a clear “why us.” Add lived examples before hitting publish.

- Hallucinations and hidden errors: Ground drafts on a source pack; require in-line citations and a fact-check pass.

- Voice drift across derivatives: Encode voice rules in prompts and run an editor pass for tone before scheduling.

- Shipping spikes that look spammy: Smooth the release curve and ensure each URL adds unique value.

- Chasing tools, not outcomes: Re-center on business goals and the KPI table above; prune steps that don’t move the numbers.

Where to start this week

- Pick one content type and ship an AI-assisted piece end to end using the pipeline.

- Log time at each stage, run the QA checklist, and capture editor feedback.

- Repurpose once into two channels.

- Review metrics after two weeks; adjust prompts and briefs; then scale.

Two parting thoughts. First, small, steady wins compound, so don’t flip a giant switch. Second, think of AI as your speed layer; your edge is still your judgment, experience, and taste. Now build the system that proves it.