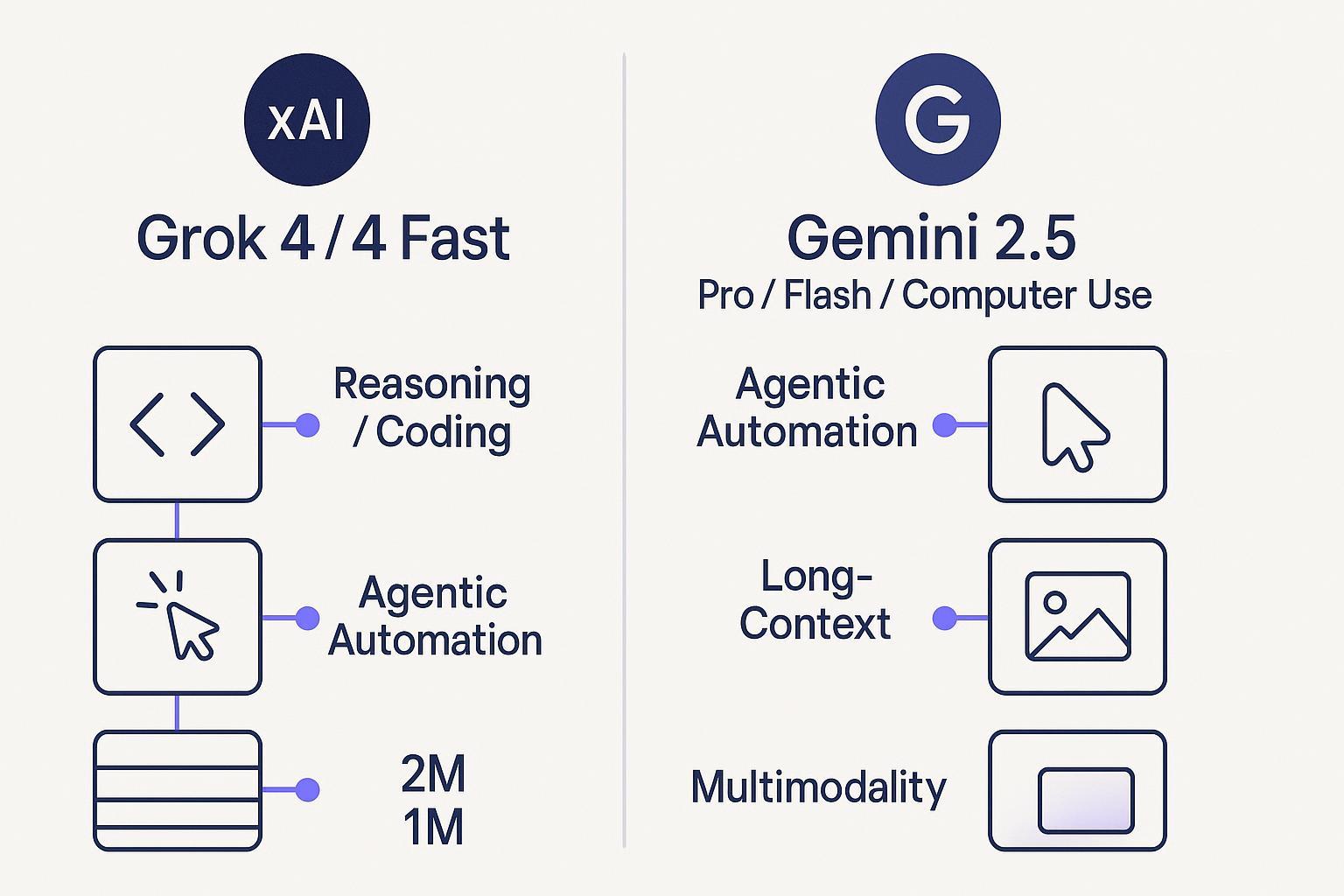

Grok 4 vs Gemini 2.5 (2025): Which model fits coding, agents, long context, and multimodal?

If you’re deciding between xAI’s Grok 4 family and Google’s Gemini 2.5 family for real products—coding copilots, browser agents, long-context RAG, or multimodal extraction—this guide distills what matters. We time-stamp specs and link to first-party docs because these models change fast.

As of October 13, 2025, here’s a current snapshot and the trade-offs that actually move cost, reliability, and delivery timelines for teams.

Quick snapshot (as of Oct 13, 2025)

Dimension | xAI Grok 4 family | Google Gemini 2.5 family |

|---|---|---|

Representative models | Grok 4; Grok 4 Fast (reasoning & non-reasoning) | Gemini 2.5 Pro; Gemini 2.5 Flash; 2.5 Computer Use (preview) |

Context window | Grok 4 commonly cited ~256K (API/marketplace); Grok 4 Fast up to 2M | Up to 1M on 2.5 Pro/Flash variants |

Agentic/browser control | Native tool-use and real-time search integration | First-party UI automation via Computer Use (preview) |

Multimodal | Text and images (per model docs) | Text, images; mature vision features; Computer Use leverages screenshots |

Pricing examples | Grok 4: Input ~$3/M, Output ~$15/M (marketplace snapshot); Grok 4 Fast: Input ~$0.20/M, Output ~$0.50/M for smaller requests (xAI API) | 2.5 Pro: tiered per tokens; 2.5 Flash: lower price per 1M; billed per Gemini pricing page |

Enterprise routes | Direct xAI API; Azure AI Foundry (Grok 4) | Gemini API and AI Studio; Vertex AI (incl. Computer Use preview) |

Status notes | Grok 4 Fast new (Sep 2025); some pricing tiers vary by length | Computer Use is preview; Pro/Flash GA via Gemini API/Vertex |

Sources: xAI Grok 4 announcement (2025-07-09) and API/models, Azure AI Foundry availability for Grok 4, Google’s Gemini pricing and Computer Use blog/docs. Specific citations appear in sections below.

1) Reasoning and coding performance

Both families target high reasoning competency, but their emphases differ.

Grok 4 (and especially Grok 4 Fast reasoning variant) is pitched as cost-efficient reasoning with native tool-use. xAI states Grok 4 “includes native tool use and real-time search integration,” enabling programmatic action loops inside tasks, according to the xAI Grok 4 announcement (2025). Grok 4 Fast adds efficiency and larger context, with model variants for reasoning vs non‑reasoning listed on the xAI API models page (2025).

Gemini 2.5 Pro is Google’s flagship reasoning model delivered via the Gemini API and Vertex AI; pricing tiers (and implicit “thinking tokens”) are documented on the Gemini API pricing page (2025). In practice, developers choose Pro for tough coding/debugging or complex planning, and Flash when they need throughput and lower cost.

What this means in practice

If your coding workload needs consistent multi-step reasoning and tool orchestration against APIs or search, Grok 4 Fast (reasoning) is attractive due to efficiency and action tooling.

If you’re already on Google’s stack or need tight integration with downstream services (Vertex AI, monitoring, guardrails), Gemini 2.5 Pro is the safer “default” for enterprise fit; Flash covers high-volume suggestions and refactors.

2) Tool use and agentic automation (Computer Use vs tool-use RL)

xAI’s approach focuses on native tool-use and search, which you can wire into browsers or external systems via your own controller. The advantage is flexibility and cost; the trade-off is you own more of the orchestration, retries, and safety checks. See model capabilities on the xAI API models page (2025).

Google offers a first-party agent for UI control: Gemini 2.5 Computer Use. It runs observe → decide → act loops to click/type/scroll in a browser or on mobile, with confirmation for sensitive operations. This reduces the amount of glue code you need for RPA‑like web tasks and brings stronger built-in guardrails. Learn more in the Google DeepMind Computer Use introduction (2025-10-07) and the Gemini Computer Use developer docs (2025).

Practical implication

Choose Computer Use when you want a managed path for browser automation with clear safety prompts and auditability. For broader tool ecosystems (custom REST tools, search, code execution) where you control the loop, Grok’s native tool-use is lean and flexible.

Tip: If you’re designing marketing or ops automations, this overview of agentic AI workflows for marketing automation explains how to stage tasks, confirm critical steps, and add governance.

3) Long-context (100K–2M)

Grok 4 Fast advertises a 2,000,000-token context window in xAI’s September 2025 announcement; variants are listed on the xAI API models page (2025). That makes it suitable for huge dossiers, multi-repo codebases, or multi-document legal reviews—provided you manage retrieval and grounding carefully.

Grok 4 is commonly cited around 256K context in API/marketplace entries. Marketplace snapshots vary, so confirm in your channel.

Gemini 2.5 Pro/Flash sit in the up-to-1M context class per Google’s model family documentation and updates; pricing tiers are defined on the Gemini API pricing page (2025).

Operational guidance

Long context alone doesn’t guarantee recall or citation integrity. You’ll still want chunking, embeddings, and retrieval checks. For pipeline design and cost control in long-context content ops, this primer on generative AI content workflows (2025 framework) is a useful start.

4) Multimodality

Gemini 2.5 family has mature multimodal inputs (text, images) and leverages screenshots in Computer Use loops for UI grounding. This is helpful for chart reading, invoice parsing, or visually guided automation.

Grok 4/4 Fast support text and images per xAI’s docs footprint; for image-to-structured extraction, expect to engineer parsing prompts and post-processing either way. If your workload leans heavily on vision, Gemini 2.5 generally offers richer first-party examples in docs and Studio.

5) Latency and throughput

Official P50/P95 latency under load is not generally published by either provider. Plan to measure your own P50/P95 and timeout policies per scenario:

Use short “probe” prompts to detect transient slowdowns and autoscale your concurrency.

Separate latency-sensitive prompts (e.g., code completion) from heavy reasoning/prompts.

Cache prompts when your provider supports it (xAI lists cached input pricing on the xAI API models page (2025)).

6) Cost and token efficiency

Treat prices as of Oct 13, 2025 and confirm before deployment:

Grok 4 pricing on marketplaces is often listed around Input $3.00/M and Output $15.00/M; see the OpenRouter Grok 4 listing (2025). Grok 4 Fast lists Input ~$0.20/M and Output ~$0.50/M for smaller requests, plus discounted cached input on the xAI API models page (2025). Longer prompts may hit higher tiers.

Gemini 2.5 pricing is published on Google’s official page; 2.5 Pro uses tiered pricing by prompt length and includes “thinking” in output costs, while 2.5 Flash is tuned for price-performance. See the Gemini API pricing page (2025).

Effective cost per solved task matters more than per-token price. In practice:

For iterative coding and RAG where you can keep prompts short and cache inputs, Grok 4 Fast can drive down effective costs.

For high-volume assistive tasks or UI automation where Flash’s price-performance suffices and Computer Use reduces glue code, Gemini often wins total cost of ownership.

7) Ecosystem and integration paths

Grok 4 is available directly from xAI’s API and via Azure AI Foundry for enterprise use. Microsoft announced availability in late September; see the Azure AI Foundry blog (2025-09-29). This route helps teams standardize on Azure governance and networking.

Gemini 2.5 is accessible via the Gemini API/AI Studio and Vertex AI. If you’re standardizing on Google Cloud, Vertex gives you billing, logging, guardrails, and enterprise policy in one place.

Gateways and marketplaces

OpenRouter and similar gateways provide additional routing and burst capacity, but pricing and rate limits can change; treat them as integration options, not canonical pricing.

8) Governance, safety, and reliability

Gemini 2.5 Computer Use includes explicit confirmations for sensitive steps and protections against common prompt-injection patterns, per Google’s 2025 updates in the Computer Use introduction and docs. This reduces risk for UI automations that touch accounts and forms.

Grok’s native tool-use lets you enforce your own guardrails—e.g., hard-coded allowlists for domains/actions, and human-in-the-loop for high-risk steps. It’s flexible but shifts responsibility to your team.

9) Data privacy and enterprise controls

Google: Using Vertex AI, you can configure enterprise controls like project isolation and zero-data-retention modes, with billing/observability inherited from Google Cloud services. Pricing for Pro/Flash follows the Gemini API pricing page (2025); Computer Use is a preview capability with quotas and confirmations per docs.

xAI: xAI publishes privacy and enterprise data processing terms; large enterprises may prefer Azure AI Foundry routing for Grok 4 to align with existing network and compliance baselines, as indicated in Microsoft’s Azure AI Foundry blog (2025).

10) SEO/content quality workflows

For content production, both models can be constrained to style guides and on-page structures. The practical differences show up in cost-per-article and fact-grounding:

Large-topic briefs or multi-source dossiers favor Grok 4 Fast’s 2M context if you truly need to park vast source material in-context—but retrieval and citation checks remain essential.

If your stack is on Google, Gemini 2.5 Flash can be a cost-effective engine for scalable drafting and updates, with 2.5 Pro reserved for hard research or synthesis.

For governance and scaling content pipelines, see this guide on best-practice content workflows that blend human and AI.

Decision matrix: When to pick which

Choose Grok 4 / Grok 4 Fast when:

You need cost-efficient reasoning at scale and can structure tool-use yourself.

Your workloads rely on very large contexts (dossiers, multi-repo code) and you can manage recall/citations with retrieval checks.

You want Azure AI Foundry as an enterprise channel (for Grok 4) while keeping optional direct API and marketplace routes.

Choose Gemini 2.5 (Pro/Flash + Computer Use) when:

You want first-party browser/mobile UI control with built-in confirmations and safety.

You’re standardizing on Google’s ecosystem (Gemini API/AI Studio/Vertex AI) and prefer integrated governance and monitoring.

You need a mix of advanced reasoning (Pro) and high-throughput price-performance (Flash) and want mature multimodal supports.

Implementation quick-starts

Below are minimal Python examples that reflect typical authentication and a simple call path. Always check quotas, preview vs GA status, and retry/backoff policies in the official docs.

xAI Grok 4 Fast (Python)

import os

import requests

API_KEY = os.environ["XAI_API_KEY"]

MODEL = "grok-4-fast-reasoning"

payload = {

"model": MODEL,

"input": "Summarize these docs and propose a 3-step action plan.",

"tools": [{"type": "web_search"}],

}

resp = requests.post(

"https://api.x.ai/v1/chat/completions",

headers={"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"},

json=payload,

timeout=60,

)

resp.raise_for_status()

print(resp.json()["choices"][0]["message"]["content"]) # handle tool calls if present

Check model IDs, pricing tiers, and cached input options on the xAI API models page (2025).

Google Gemini 2.5 Pro (Python)

import os

from google import genai

client = genai.Client(api_key=os.environ["GEMINI_API_KEY"]) # via ai.google.dev SDK

model = "gemini-2.5-pro"

resp = client.responses.create(

model=model,

input="Explain the algorithm and provide a Python example for Dijkstra’s shortest path.",

)

print(resp.output_text)

For price tiers and availability (Pro vs Flash, preview notes), see the Gemini API pricing page (2025). For UI automation agents, review the Gemini Computer Use developer docs (2025).

Final verdict

Grok 4 Fast is compelling when you want scalable, cost-conscious reasoning and extremely large contexts, especially if you’re comfortable owning tool orchestration.

Gemini 2.5 is a strong default if you want first-party UI automation (Computer Use), mature multimodal support, and integrated enterprise controls via Vertex AI.

Most teams will trial both: run the same coding, RAG, and browser workflows with identical prompts, log tokens and latency, and tally effective cost per solved task. Revisit pricing and limits monthly; preview→GA transitions often change quotas and cost.

Also consider

If your goal after model selection is to operationalize SEO content workflows, you might layer a platform that handles research, drafting, optimization, and publishing. QuickCreator is one such option. Disclosure: QuickCreator is our product.

Sources and recency notes

xAI: Grok 4 capability claims and availability were published in the xAI Grok 4 announcement (2025-07-09). Grok 4 Fast variants, pricing rows, and 2M context are reflected on the xAI API models page (2025).

Azure: Microsoft announced Grok 4 availability in Foundry on the Azure AI Foundry blog (2025-09-29).

Google: Pricing and model availability for Gemini 2.5 Pro/Flash are maintained on the Gemini API pricing page (2025). Computer Use was introduced in October 2025 on the Google DeepMind Computer Use blog (2025-10-07) with developer details in the Gemini Computer Use docs (2025).

Marketplaces: Grok 4 pricing snapshots can be viewed on the OpenRouter Grok 4 listing (2025). Marketplace numbers change; rely on official docs for billing-critical decisions.