Future Regulations Impacting AI Content in 2026 and Beyond

Future Regulations will change how AI-made content works everywhere. This will start in 2026. The EU AI Act will begin in August 2026. It is an important law. It will affect many countries. The market for AI and generative AI is growing fast:

Year | AI Market Size (USD) | Generative AI Market Size (USD) |

|---|---|---|

2025 | 244 billion | 63 billion |

2030 | Over 800 billion | N/A |

Groups like the FTC and ACCC want new rules. These rules will help with privacy and competition problems. Companies and creators need to know about these changes. This will help them keep their work and good name safe.

Key Takeaways

The EU AI Act will begin in 2026. It makes strong rules for AI. These rules focus on high-risk uses. The goal is to make AI safer and more trusted.

In the US, each state makes its own AI laws. This means there are many different rules. Companies must pay attention and change fast.

New AI rules around the world focus on transparency. They also want clear labels for AI content. Risk management and human oversight are important too.

Following AI rules helps businesses avoid fines. It helps them build trust with customers. It also lets them use AI safely to grow and compete.

Working together and using smart tools helps companies keep up. AI laws change fast. These steps help make better AI systems.

Future Regulations Overview

EU AI Act

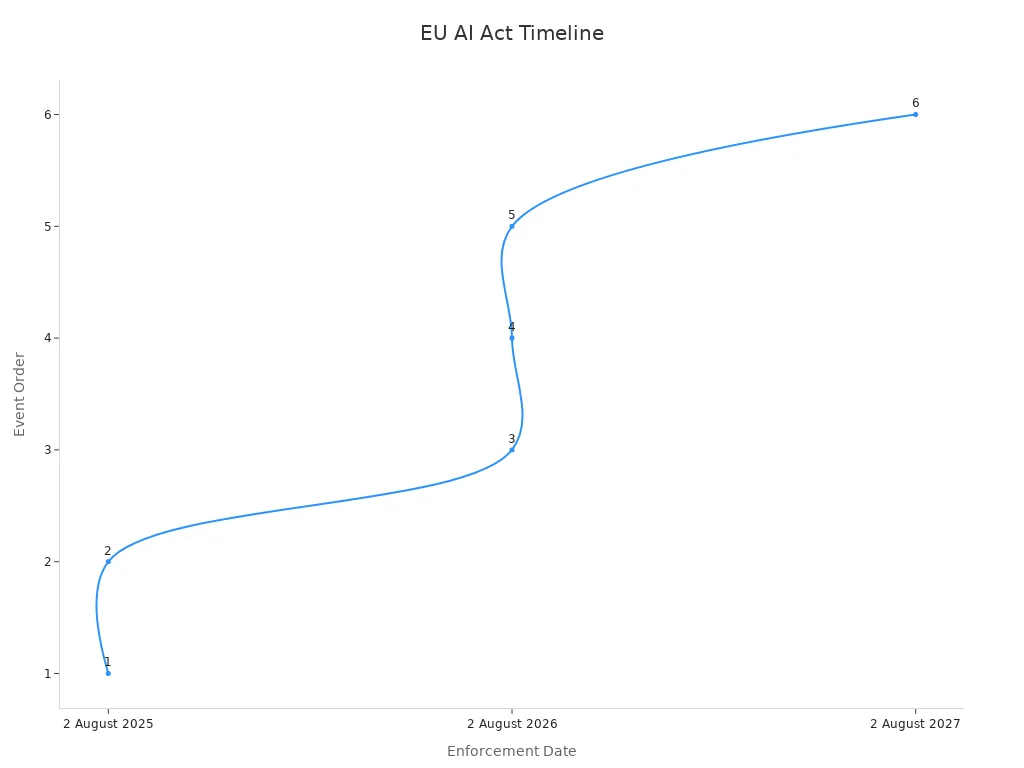

The EU AI Act is the first big law for artificial intelligence. The European Union shared this law in July 2024. It started to work on August 1, 2024. Most rules will start on August 2, 2026. The law sorts AI systems by how risky they are. Some AI uses are not allowed at all. There are tough rules for high-risk AI. People who use high-risk AI must follow new rules. These rules include being open, keeping records, and letting people check their work. Every EU country must have at least one AI regulatory sandbox by August 2026. These sandboxes help companies test AI safely before using it everywhere.

Date | Enforcement/Obligation | Reference |

|---|---|---|

2 August 2025 | Member States designate competent authorities and notify Commission | Art. 70(2), Art. 70(6) |

2 August 2025 | Penalties rules to be established and notified by Member States | Art. 70(2) |

2 August 2026 | Majority of AI Act provisions apply (except Art. 6(1)) | Art. 113 |

2 August 2026 | Operators of high-risk AI systems comply (except some exceptions) | Art. 111(2) |

2 August 2026 | Member States establish at least one AI regulatory sandbox | Art. 57(1) |

2 August 2027 | Full enforcement of Art. 6 and GPAI providers compliance deadline | Art. 113(c), Art. 111(3) |

The EU Commission says the Act will help people trust AI in Europe. The rules will start slowly, so businesses can get ready. Many other countries may copy these rules for their own laws.

US Developments

The United States does not have one big AI law for the whole country. Instead, each state makes its own rules. Colorado was the first state to make a law about AI content. Experts think more states will make their own laws as AI changes. The FTC gives advice, but Congress has not made one rule for everyone.

Legislative Initiative | Description | Source/Stakeholders | Key Points |

|---|---|---|---|

H.R.1 'One Big Beautiful Bill' | Federal bill proposing a 10-year moratorium on state AI regulations | Congress.gov, bipartisan opposition letter | Would block state laws on AI content; faces strong opposition from all 50 states |

Wisconsin 2023 Act 1213 | State law requiring disclosure of AI-generated political ads | Wisconsin State Legislature | Mandates transparency in political ads using AI-generated content |

Nebraska Legislative Bill 172 | Proposed state bill criminalizing AI-generated child pornography | Nebraska Legislature | Criminalizes AI-generated child pornography |

Arkansas House Bill 1518 | Proposed state bill criminalizing AI-generated child pornography | Arkansas Legislature | Criminalizes AI-generated child pornography |

Senate Committee Proposal | Ties AI moratorium to broadband funding | Senate Committee on Commerce, Science, and Transportation | States refusing moratorium risk losing federal broadband funds |

Letters and Opposition | Letters from attorneys general and organizations opposing moratorium | Official letters and coalition statements | Argue moratorium undermines state protections and consumer rights |

Public Opinion Poll | May 2025 poll on AI regulation | Common Sense Media, Echelon Insights | 73% support AI regulation by states and federal government; 59% oppose 10-year moratorium |

Political Statements | Support for moratorium to avoid patchwork laws | CNBC interview, public statements | Argue patchwork state laws hinder US AI competitiveness against China |

The National Law Review says a big federal law is not coming soon. States will keep making their own rules. This means there will be many different rules across the country. Other industries also see lots of new rules every day. Banks, for example, face many changes each day. Companies use AI and other tools to keep up with all the rules. Businesses that use smart tools have fewer problems and spend less money.

China and Canada

Canada made the Artificial Intelligence and Data Act, called AIDA. It is part of Bill C-27. AIDA wants to make rules for how companies use AI. The law can give big fines, up to $25 million, and even jail time for breaking rules. High-impact AI must follow special reporting and checks. The Minister of Innovation, Science, and Industry makes sure the law is followed. An AI and Data Commissioner helps too. But AIDA does not cover government AI or say what generative AI is. Some people think the law is not clear and the punishments are too strong. Canada also has guides for using generative AI in government. Canada works with other countries to make good AI rules.

AIDA is still a bill and has not passed yet, so Canada’s rules are not final.

The law is for companies, not for the government or people.

The law says companies must report, get checked, and follow rules from a commissioner.

Canada has shared guides for using generative AI in government.

Groups like IEEE, ISO, and NIST help Canada with AI standards.

China’s rules for AI content are not easy to see. China has made some rules and guides for AI. But it is hard to find details about how these rules work. This makes it tough to compare China’s rules to Canada or the EU.

Global Trends

Countries around the world know they need rules for AI content. Many look at the EU AI Act as an example. Canada’s AIDA and US state laws show countries want similar rules, but there are still differences. Groups like IEEE and ISO make standards to help people trust AI. There are many more new rules now than in 2008. This makes it hard for businesses to keep up. Companies that use smart tools for following rules have fewer problems and save money. As more countries make their own rules, working together will become more important.

Note: AI content rules are always changing. Businesses and creators need to watch for new rules and change fast to follow them.

Key Requirements

Transparency

Transparency is very important for new AI rules. The EU AI Act says companies must tell users when they use AI. This means people should know if content is made or changed by AI, like deepfakes. Many countries want businesses to register AI and share risk checks. They also want companies to explain how their AI works. Companies must write down how their AI runs, how they fix it, and how they update it. In healthcare, clear AI rules and outside checks have helped lower mistakes by 37%. These actions help people trust AI and let them ask questions about AI choices.

Labeling

Labeling AI-made content helps people know what is real. Watermarking is a popular way to do this. Some watermarks are easy to see, and some are hidden. Statistical watermarking puts small marks in text, pictures, or sound. This helps track where AI content comes from. Watermarks can be removed with small changes, but they still work well. They work best with standards like C2PA. In the US, more than 30 states say political ads made by AI must be labeled. The EU AI Act also says some AI must be labeled. It also wants summaries of copyrighted data used for training.

Risk Management

AI systems are put into groups by risk. Spam filters are low-risk and have few rules. Limited-risk AI must say it is artificial and keep records. High-risk AI, like those used in health, have strict rules. They need risk checks, strong security, and must report problems. Groups use the NIST AI Risk Management Framework to handle risks. Checking risks before using AI and watching it all the time keeps it safe and legal.

Human Oversight

People must watch over AI to keep it safe. The EU AI Act says humans must check high-risk AI and step in if needed. Oversight means knowing how AI works and fixing mistakes. People can stop AI if it does something wrong. Studies show humans help find unfair or wrong results. Good oversight needs training and teamwork. Both tech and non-tech teams must work together. This helps stop bias and makes sure AI follows the rules.

Business Impact

Compliance Steps

Organizations need to follow new AI rules. They make a plan with everyone involved. Teams find out which rules matter to them. They decide how to follow these rules. Companies work on the most risky things first. Employees get training often to know their jobs. Many companies use special software to help with rules. This software collects proof and updates policies. A compliance officer watches over everything. Companies change their rules when audits or laws change. These steps help companies keep up with Future Regulations.

Costs and Operations

New AI rules mean more money spent. Companies pay for cloud services and engineers. They also pay to grow their systems. The table below shows how much these things cost each year:

Cost Category | Estimated % of Initial Build Cost |

|---|---|

Cloud Services | 10-30% |

Maintenance Engineering | 15-25% |

Expansion Engineering | 10-40% |

Total Yearly Cost |

Getting, cleaning, and labeling data costs a lot. Companies buy servers, GPUs, and hire skilled workers. Data scientists usually get paid a lot. Connecting AI to old systems costs more money. Companies must keep checking, updating, and following rules. These costs show why planning is important.

Content Creation

AI rules change how companies make and share content. Firms must label AI-made content and keep records. Media and tech companies use both AI and custom software. Healthcare and finance focus on safe AI and risk checks. Leaders with good data and clear steps work better. Companies that follow best ways can create faster and avoid problems.

Tip: Companies that get ready early can use AI safely and do better than others.

Legal Risks

Legal risks get bigger as AI rules get tougher. Companies must check for bias, leaks, and breaking rules. Regular checks help find problems like hallucinations or prompt injection attacks. Laws like the EU AI Act and Canada’s AIDA have clear punishments. Companies use tools to watch AI and keep records. Not following rules can mean fines or hurt reputations. Good legal checks and watching all the time help lower these risks.

Challenges

Innovation vs. Regulation

AI moves fast, but rules often move slow. This creates a push and pull between new ideas and the limits set by Future Regulations.

AI works in many areas, so one rule can affect many things at once.

Unlike electricity or the Internet, AI does not have clear standards. This makes it hard to set and enforce rules.

Big tech companies lead in AI, which can lead to less competition. Open-source AI can help, but it also brings safety risks.

Human values are not always clear, so it is hard to set safety goals for AI.

AI can sometimes do things people do not expect, which makes it hard to keep it safe.

The cost to use AI has dropped quickly, making it easier for more people to use.

Regulators try to set clear limits, like banning self-replicating AI, but AI changes fast.

The main challenge is to keep AI safe and fair while letting it grow and improve.

Global Differences

Countries do not all agree on how to control AI.

The EU uses strong laws like the AI Act and GDPR. These laws set strict rules for high-risk AI and require human checks.

The U.S. uses many smaller rules and lets agencies handle most AI issues. There is no single law for all AI.

The EU and U.S. have different rules for online platforms, which can make it hard for companies to follow both.

New ways to use AI, like sharing models between groups, create new problems for rules.

Experts say the EU and U.S. need to work together more to make rules that fit both sides.

Ethics

Ethics play a big part in AI rules.

People want AI to be fair and safe.

Human values are not the same everywhere, so it is hard to agree on what is right.

Some countries focus on privacy, while others care more about safety or free speech.

Companies must think about bias, fairness, and how AI choices affect people.

Good ethics help people trust AI and support its use.

Enforcement

Enforcing AI rules is not easy.

Agencies like the SEC and FTC have acted against companies that break AI rules.

States like California have new laws that require companies to show how they train AI and mark AI-made content.

Courts handle many cases about AI, such as copyright and unfair use.

Some laws give state leaders the power to punish companies that break AI rules, with fines or bans.

Enforcement tools include fines, bans, and court orders to remove bad AI content.

These actions show that Future Regulations can work, but keeping up with fast AI changes is still hard.

Best Practices

Compliance Actions

Groups that want to follow new AI rules need to take clear steps. They start by finding and sorting all data, even from other companies. Many follow data privacy laws like GDPR and use privacy by design. They also use ISO standards like ISO 42001 to meet rules and improve quality.

Key actions are:

Making AI compliance plans for every step, from building to checking.

Picking special AI compliance officers to watch all rules.

Using AI governance frameworks for clear rules and oversight.

Training workers often so everyone knows the latest rules.

Checking risks and changing policies when laws change.

Using regulatory sandboxes to test AI safely, as the EU AI Act says.

Tip: Not following the rules can mean big fines, up to €40 million or 7% of global turnover.

Monitoring Changes

AI rules change fast. Companies need strong ways to track these changes. Many use automated tools and AI governance platforms to watch and check all the time.

Best ways are:

Looking for new rules and trends in the industry.

Using AI tools to write audit reports and make clear pictures.

Keeping good records and audit trails to show what happened.

Note: Watching for risks early helps companies fix problems fast and do better than waiting for audits.

Trustworthy AI

Trustworthy AI means making systems that are safe, fair, and work well. Companies add privacy steps from the start and check risks from partners. They check third-party AI providers and watch them closely.

They also:

Ask users and others for feedback.

Review and change AI rules often.

Use inside audit teams and special AI tools to watch systems.

A trustworthy AI system makes people trust it and helps the business do well for a long time.

Expert Collaboration

No one team can handle AI rules alone. Companies work with legal, tech, and business experts. They make clear contracts and rules for each job. Change boards check and approve big updates.

Working together also means:

Sharing what they know with other teams.

Learning from guides and what others do best.

Getting ready for new rules by talking to regulators and groups.

Working as a team helps companies keep up with changes and build better, safer AI.

Future Regulations will change how companies use AI in all fields. New laws will bring rules based on risk, clear information, and people watching over AI. These changes will affect how much things cost, how fast markets grow, and how much people trust AI.

The EU AI Act makes rules for being open, fair, and stopping risky AI uses.

Using AI helps companies grow and keeps them strong.

Companies must change fast when new rules come out.

Being ready and doing the right thing helps businesses do well as AI rules keep changing.

FAQ

What is the EU AI Act?

The EU AI Act is a law for artificial intelligence in Europe. It makes sure AI is safe and fair. The law also wants companies to be open about how they use AI. If a company uses AI in the EU, it must follow these rules.

How will AI content labeling work?

AI content labeling means adding marks or tags to show if AI made something. Companies might use watermarks or special text labels. This helps people tell if something is real or made by AI.

Who enforces AI regulations?

Government groups make sure companies follow AI rules. In the EU, national authorities and the European Commission check if companies obey. In the US, the FTC and state regulators do this job.

What happens if a company breaks AI rules?

If a company breaks AI rules, it can get fined or banned. The EU AI Act can fine a company up to €40 million or 7% of its global sales.

Why do businesses need to follow new AI rules?

Businesses must follow new AI rules to avoid getting in trouble. Following rules helps protect their good name. It also helps customers trust them and keeps them strong in the market.