Ethical Personalization: A Practitioner’s Playbook for Trustworthy, High‑ROI Content Experiences

Executive summary: Personalize boldly, respect privacy rigorously

Ethical personalization is not a compliance tax; it is a trust and performance engine. Teams that design for consent, transparency, and fairness tend to see fewer complaints, more sustainable data access, and better conversion lifts. The stakes are real: in 2022, California’s Attorney General secured a $1.2M settlement with Sephora for failing to disclose data “sales” and honor Global Privacy Control signals (California AG Sephora settlement). The case underscores that opt-outs must work in practice, not just on paper.

On the regulatory front, marketers must respect the right to object to profiling (GDPR Article 21) and the limits on solely automated decisions with legal or similarly significant effects (Article 22), as set out on EUR‑Lex. In the U.S., CPRA/CCPA rules require clear notices, an opt-out for sale/share, and honoring universal signals like GPC, as confirmed by the California AG’s CCPA guidance and CPPA regulations (PDF). The EU AI Act (2024 Official Journal) adds transparency duties for certain AI systems, such as those that interact directly with people or generate synthetic content; confirm whether your recommendation features fall in scope.

Beyond risk reduction, privacy-first approaches correlate with stronger trust and business outcomes. The Cisco 2025 Data Privacy Benchmark Study reports higher consumer empowerment where privacy regimes are understood, and Deloitte notes that well-executed hyper-personalization can generate meaningful sales lift and high ROI when grounded in first‑party data, as discussed in Deloitte’s loyalty and AI-powered growth analyses (2024). Treat these as directional, contingent on execution quality and ethical design.

This playbook distills field-tested steps to personalize responsibly—what to do, when, and how to validate it.

Foundational principles that actually scale

Consent-first for personalization

- Under IAB Europe’s TCF v2.2, consent—not legitimate interest—is required for personalization purposes. Configure your CMP accordingly and document purposes and vendors per IAB TCF v2.2 guidance and FAQ (2023–2024).

Transparency and user control

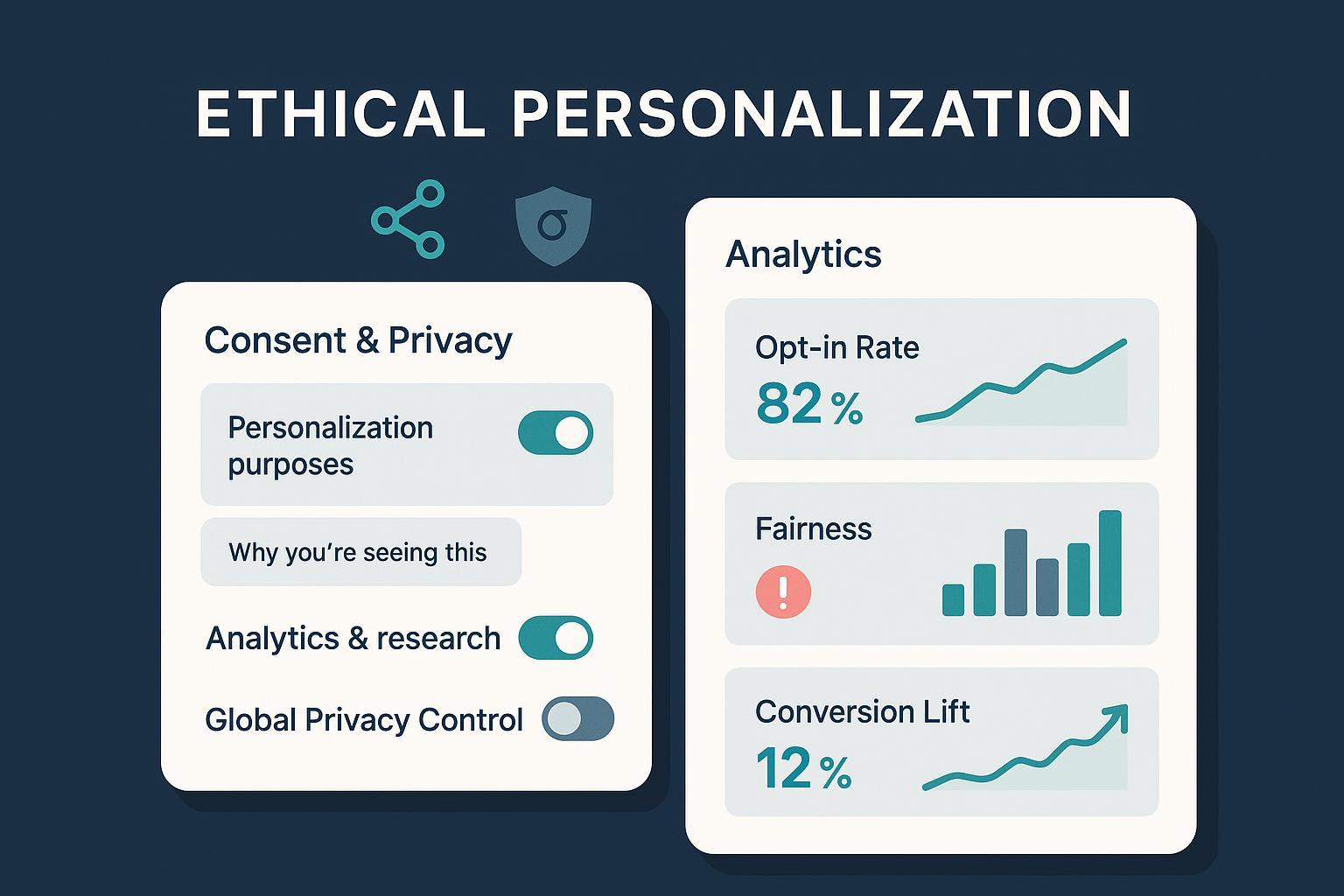

- Offer plain-language explainers (“Why you’re seeing this”), easy toggles, and instant opt‑out. Honor Global Privacy Control as required by California’s CCPA guidance.

Data minimization and purpose limitation

- Collect only what’s necessary for specified personalization goals, aligned with CPRA’s data minimization principles as framed in the CPPA regulations and GDPR’s core tenets.

Privacy by design (and by default)

- Integrate privacy controls into system architecture and UI, taking cues from ISO 31700 (privacy by design) and implementing them via product rituals (gates, reviews) rather than policy PDFs alone.

Fairness and explainability

- Measure and mitigate disparate impacts, and provide meaningful information about personalization logic consistent with GDPR and regulator guidance (see ICO AI guidance hub for explainability and fairness themes).

Applicability and trade-offs: In low-stakes content contexts (e.g., recommended blog posts), lighter-weight governance may be suitable; for sensitive domains (finance, health) or where outcomes materially affect people, elevate reviews and documentation.
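The data minimization and purpose limitation principle above can be enforced in code as well as in policy. The following is a minimal sketch, assuming a hypothetical per-purpose allow-list (`ALLOWED_FIELDS`) and hypothetical field names; it illustrates the idea and is not a reference implementation of any regulation.

```python
from typing import Any

# Hypothetical mapping from a declared purpose to the only fields it may use.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "content_recommendations": {"user_id", "pages_viewed", "declared_topics"},
    "email_digest": {"user_id", "email", "declared_topics"},
}


def minimize(event: dict[str, Any], purpose: str) -> dict[str, Any]:
    """Return a copy of `event` containing only fields allowed for `purpose`."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # Unknown purpose: fail closed rather than store everything.
        raise ValueError(f"no allow-list defined for purpose {purpose!r}")
    return {key: value for key, value in event.items() if key in allowed}


raw_event = {
    "user_id": "u-123",
    "pages_viewed": ["/blog/consent-ux"],
    "declared_topics": ["privacy"],
    "precise_location": "40.7128,-74.0060",  # not needed for this purpose
}
stored = minimize(raw_event, "content_recommendations")
```

Failing closed on an unknown purpose is a deliberate choice here: a new use of the data has to be declared before anything is stored for it.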

The implementation playbook (role- and step-based)

1) Plan and govern (Product + Legal + Data)

- Map obligations and risks using the NIST AI Risk Management Framework (Govern, Map, Measure, Manage).

- Define approval gates: DPIA/AIPD triggers for personalization with significant effects; maintain model cards and data sheets for each algorithmic component.

- Establish an AI ethics committee with clear escalation paths for sensitive attributes or proxies (e.g., ZIP code as a potential proxy for protected classes).

2) Consent UX and transparency (Marketing + UX + Legal)

- CMP configuration

  - Implement purpose- and vendor‑level toggles, multi-language notices, and vendor lists per IAB TCF v2.2. Keep an auditable history of TC Strings and policy versions; avoid unnecessary re‑consent except on material changes per TCF policy amendments (2024).

- Honor universal signals

  - Process Global Privacy Control and provide a frictionless “Do Not Sell or Share” flow, reflecting enforcement seen in the Sephora CCPA settlement (2022).

- Transparency microcopy

  - Place “Why you’re seeing this” near personalized modules; disclose key factors and how to opt out. Avoid dark patterns flagged in the FTC’s 2024 Privacy and Data Security Update.
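The universal-signal handling in this step can be sketched as a small server-side decision function. Browsers that support Global Privacy Control send the `Sec-GPC: 1` request header. Everything else here is an assumption for illustration: the `Decision` type, the `decide` function, and the conservative policy of treating GPC as switching off both sale/share and personalization. Confirm the right scope with counsel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    personalize: bool
    sell_or_share: bool
    reason: str  # keep this in server-side logs for audits


def decide(
    headers: dict[str, str],
    consented: bool,
    opted_out_of_sale: bool = False,
) -> Decision:
    """Decide what a request may receive, given GPC and stored choices."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    if normalized.get("sec-gpc") == "1":
        # Assumed policy: GPC disables sale/share and personalization.
        return Decision(False, False, "gpc_opt_out")
    if not consented:
        return Decision(False, not opted_out_of_sale, "no_consent")
    return Decision(True, not opted_out_of_sale, "consented")


example = decide({"Sec-GPC": "1"}, consented=True)
```

Logging the `reason` with each decision gives you the evidence trail needed to show that opt-outs were honored.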

3) Data practices and privacy‑enhancing technologies (Data + Engineering)

- Prefer on-device or federated approaches where feasible; if you must aggregate telemetry, add differential privacy to reduce re‑identification risk. Production deployments by Google demonstrate federated learning with formal DP guarantees for on‑device language models as described in Google Research on FL+DP (ongoing program). Apple details local DP budgets and transmission safeguards in Apple’s Differential Privacy Overview (PDF).

- Implement least privilege access, encryption at rest and in transit, and retention tied to purpose.
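To make the differential privacy suggestion in this step concrete, here is a minimal sketch of the Laplace mechanism applied to an aggregate count. It assumes each user can change the count by at most 1 (sensitivity 1). The function name `dp_count` and the epsilon value are illustrative, and this is not a description of how Google or Apple implement their systems. Plain floating-point noise has known weaknesses, so a production system should use a vetted DP library.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise, assuming sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon  # sensitivity / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace distribution centered on zero.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(42)  # seeded only to make the example repeatable
noisy_clicks = dp_count(true_count=1200, epsilon=0.5, rng=rng)
```

A smaller epsilon adds more noise and gives stronger protection, so the privacy budget is a product decision as much as an engineering one.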

4) Bias auditing and explainability (Data Science + Risk)

- Establish baselines using open toolkits like AIF360 and Fairlearn to measure disparities (e.g., exposure or conversion) across relevant cohorts.

- Mitigate with re‑weighting, re‑sampling, adversarial debiasing, or post‑processing thresholds; document trade-offs.

- Provide user-facing explanations proportional to impact; align with the ICO AI explainability guidance and GDPR expectations for “meaningful information about the logic.”
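A disparity baseline for this step can be sketched in plain Python before adopting a full toolkit. The example below computes, per cohort, the share of users who were shown a personalized module, then the gap and ratio between the best- and worst-served cohorts. Fairlearn reports comparable quantities as demographic parity difference and ratio. The cohort labels and counts are made up for illustration.

```python
from collections import defaultdict
from typing import Iterable


def selection_rates(records: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Map each cohort to the share of its users who were shown the module."""
    shown: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for cohort, was_shown in records:
        total[cohort] += 1
        shown[cohort] += int(was_shown)
    return {cohort: shown[cohort] / total[cohort] for cohort in total}


def disparity(rates: dict[str, float]) -> tuple[float, float]:
    """Return (difference, ratio) between the highest and lowest rates."""
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return highest - lowest, ratio


# Illustrative data: cohort A sees the module 40% of the time, cohort B 30%.
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = selection_rates(records)
difference, ratio = disparity(rates)
```

Run the same calculation on conversions as well as exposure, and store the results with the model version so later audits can compare against the baseline.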

5) Cross-channel and sector constraints (Marketing Ops + Legal)

- Keep consent state and preferences synchronized across web, email, in‑app, and ads. Avoid sensitive inferences (e.g., health, sexuality) unless explicit consent and sector rules permit.

- In regulated sectors, check additional gates: for example, marketing uses of PHI usually require prior authorization under HIPAA; see HHS HIPAA Privacy Rule. For financial services, align with GLBA sharing restrictions and emerging data rights under CFPB’s Section 1033 rulemaking (2024).
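Keeping consent synchronized across channels needs an explicit rule for resolving conflicting records. The sketch below assumes one possible policy: the most recent decision wins, a tie resolves to denial, and no record means no consent. The `ConsentRecord` type and channel names are hypothetical; your legal basis may call for a different rule.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentRecord:
    channel: str  # e.g. "web", "email", "in_app"
    granted: bool
    recorded_at: datetime


def effective_consent(records: list[ConsentRecord]) -> bool:
    """Return the personalization consent that applies across all channels."""
    if not records:
        return False  # default deny when nothing is on file
    # Sort key: newest timestamp first; on a tie, prefer the denial.
    latest = max(records, key=lambda r: (r.recorded_at, not r.granted))
    return latest.granted


history = [
    ConsentRecord("web", True, datetime(2024, 3, 1, tzinfo=timezone.utc)),
    ConsentRecord("email", False, datetime(2024, 5, 1, tzinfo=timezone.utc)),
]
allowed = effective_consent(history)  # the later email opt-out applies everywhere
```

Resolving consent in one place and pushing the result to web, email, in‑app, and ads avoids the case where a user opts out in one channel but keeps receiving personalized content in another.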

Toolbox: Ethical personalization stack (selection criteria by context)

- QuickCreator Features: Block‑level content personalization with real‑time analytics and hosting controls suitable for SMBs and content teams; supports privacy‑first setups (per product documentation). Disclosure: We build QuickCreator.

- Adobe Target: Enterprise‑grade testing and personalization with robust governance and integrations; fit for large organizations with complex stacks and mature compliance needs.

- Dynamic Yield: Strong retail/ecommerce focus with privacy‑first guidance and advanced decisioning; good for multi‑catalog complexity and merchandising‑driven journeys.

Selection tips: choose based on team size, governance maturity, data residency needs, and channel footprint. Pilot with one critical use case and privacy KPIs alongside performance KPIs.

Practical workflow example: Launching a privacy‑first blog personalization pilot

Objective: Increase engagement on your resource hub without collecting sensitive attributes.

- Prepare: Configure your CMP for explicit personalization consent and honor GPC. Define disallowed signals (e.g., health inference).

- Build: Use a platform that supports block-level personalization to swap recommended articles and CTAs based on on‑site behavior and declared preferences. For SMB teams, QuickCreator Features can do this through content blocks and real‑time analytics; enterprise teams may prefer Adobe Target or Dynamic Yield orchestrations.

- Explain: Add “Why you’re seeing this” next to the module, linking to a preference center.

- Measure: Track opt‑in rate, CTR lift, conversion lift, opt‑out rate, and complaints. Run a fairness check comparing exposure and CTR across major audience cohorts using AIF360/Fairlearn offline.

- Iterate: Remove any feature that increases complaints or disparity beyond your pre‑set thresholds; document changes in a model card.
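For the Measure step, CTR lift against a control group can be checked with a two-proportion z-test. This is a minimal sketch using only the standard library. The traffic numbers are invented, and the test assumes independent users and samples large enough for the normal approximation.

```python
import math


def ctr_lift(
    control_clicks: int,
    control_views: int,
    variant_clicks: int,
    variant_views: int,
) -> tuple[float, float, float]:
    """Return (relative_lift, z_score, two_sided_p_value) for variant vs. control."""
    if control_views <= 0 or variant_views <= 0 or control_clicks <= 0:
        raise ValueError("need positive view counts and a nonzero control CTR")
    p_control = control_clicks / control_views
    p_variant = variant_clicks / variant_views
    pooled = (control_clicks + variant_clicks) / (control_views + variant_views)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_views + 1 / variant_views))
    z = (p_variant - p_control) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    lift = (p_variant - p_control) / p_control
    return lift, z, p_value


# Illustrative pilot: 2.0% CTR in control vs. 2.5% in the personalized variant.
lift, z, p_value = ctr_lift(200, 10_000, 250, 10_000)
```

Decide the significance threshold and minimum sample size before the pilot starts, so a lift is not declared on early noise.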

Bias mitigation and explainability checklist (ready to implement)

- Define protected attributes or reasonable proxies for your context; decide fairness criteria (e.g., equal opportunity for exposure).

- Establish baselines with AIF360 fairness metrics and Fairlearn’s assessment dashboard; save versioned reports.

- Select and apply mitigation: re‑weighting, re‑sampling, thresholding, or post‑processing; record performance/fairness trade‑offs.

- Produce model cards and data sheets summarizing intended use, limitations, and known risks; align with NIST AI RMF documentation practices.

- Set monitoring cadences (e.g., pre‑launch, 30 days post‑launch, then quarterly) and regression gates.

- Provide layered user explanations consistent with GDPR’s transparency duties (Articles 13–15), the safeguards in Articles 21–22, and the ICO AI guidance.
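The regression gates in this checklist can be expressed as a simple pre-release check. The sketch below assumes hypothetical metric names and limits (`LIMITS`); set your own thresholds before launch rather than adopting these numbers.

```python
# Hypothetical limits: metric name -> ("max" or "min", bound).
LIMITS: dict[str, tuple[str, float]] = {
    "exposure_disparity": ("max", 0.10),
    "complaint_rate": ("max", 0.002),
    "opt_in_rate": ("min", 0.20),
}


def failed_gates(metrics: dict[str, float]) -> list[str]:
    """Return the names of metrics that are missing or outside their limits."""
    failures = []
    for name, (kind, bound) in LIMITS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(name)  # missing evidence blocks the release
        elif kind == "max" and value > bound:
            failures.append(name)
        elif kind == "min" and value < bound:
            failures.append(name)
    return failures


blocked_by = failed_gates({"exposure_disparity": 0.18, "complaint_rate": 0.001})
```

Treating a missing metric as a failure keeps a release from passing simply because nobody ran the fairness report.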

Metrics that prove privacy helps performance

Track both trust and growth metrics to validate ethical personalization:

Trust and compliance

- Opt‑in rate to personalization; opt‑out rate and GPC honor rate

- Complaint rate; DSAR time‑to‑close; policy version coverage

- Evidence logs for consent, vendor disclosures, and honors of “Do Not Sell/Share”

Performance and fairness

- CTR and conversion lift for personalized modules vs. control

- Retention or subscription uplift; content depth/return visits

- Fairness disparity indices (exposure and conversion) across cohorts
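Several of the trust metrics above can be derived from a request-level event log. The sketch below assumes a hypothetical log schema with boolean fields `prompted`, `opted_in`, `opted_out`, `gpc`, and `personalized`; adapt the field names to whatever your CMP and server logs actually record.

```python
from typing import Optional


def rate(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator / denominator, or None when there is nothing to measure."""
    return numerator / denominator if denominator else None


def trust_metrics(events: list[dict]) -> dict[str, Optional[float]]:
    """Compute opt-in, opt-out, and GPC honor rates from log rows."""
    prompted = [e for e in events if e.get("prompted")]
    gpc = [e for e in events if e.get("gpc")]
    return {
        "opt_in_rate": rate(sum(1 for e in prompted if e.get("opted_in")), len(prompted)),
        "opt_out_rate": rate(sum(1 for e in prompted if e.get("opted_out")), len(prompted)),
        # A GPC request counts as honored when it was NOT personalized.
        "gpc_honor_rate": rate(sum(1 for e in gpc if not e.get("personalized")), len(gpc)),
    }


events = [
    {"prompted": True, "opted_in": True},
    {"prompted": True, "opted_out": True},
    {"gpc": True, "personalized": False},
    {"gpc": True, "personalized": True},  # a miss worth investigating
]
metrics = trust_metrics(events)
```

Under the policy assumed here, a GPC honor rate below 100% points to a CMP or server integration gap.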

Why it matters: The Cisco 2025 Data Privacy Benchmark Study associates privacy investment with tangible business benefits (e.g., reduced sales friction, strengthened loyalty). Meanwhile, Deloitte’s research suggests that personalized experiences can unlock substantial ROI when anchored in first‑party data and responsible design, per Deloitte’s AI‑powered growth brief (2024). Treat these as directional; validate in your own environment.

Sector playbook notes

SaaS/B2B content

- Prioritize first‑party declared preferences and in‑product behavior; avoid sensitive inferences; implement clear opt‑outs in app and email.

Retail/ecommerce

- Balance merchandising relevance with fairness checks for exposure; ensure cookie consent is properly captured for ads and personalization under IAB TCF v2.2.

Healthcare

- Treat any PHI‑adjacent personalization as high risk; confirm HIPAA marketing authorization requirements with HHS HIPAA guidance.

Financial services

- Align with GLBA privacy notices and safeguards; watch evolving portability and consent expectations under CFPB’s 1033 rule.

Global operations

- Localize consent and disclosures for GDPR, LGPD, PDPA, etc.; update vendor lists and purposes by market.

Common failure patterns (and how to fix them)

Ignoring universal opt‑out signals

- Symptom: Users report seeing personalized content after setting GPC. Fix: Test GPC handling, log server‑side decisions, and audit CMP integration—lessons reinforced by the Sephora enforcement case (2022).

Dark patterns in consent flows

- Symptom: Confusing toggles or “accept all” bias. Fix: Adopt layered notices, equal‑weight choices, and plain language aligned with the FTC 2024 update’s guidance on deceptive design.

Unaudited algorithmic bias

- Symptom: Exposure or conversion gaps across cohorts go unmeasured until users or regulators raise them. Fix: Establish fairness baselines with AIF360 or Fairlearn, set disparity thresholds, apply mitigation, and re‑check on a fixed cadence.

Over‑collection and purpose creep

- Symptom: Expanding trackers without explicit justification. Fix: Re‑run DPIA/AIPD, prune data to purpose, and apply retention limits; verify alignment with GDPR profiling safeguards.

No human in the loop where stakes are high

- Symptom: Automated decisions with significant effects and no appeal. Fix: Introduce manual review channels and user contestation consistent with GDPR Article 22 and the transparency ethos in the EU AI Act (2024).

Learning from enforcement in ad delivery

- The U.S. DOJ’s action against Meta over housing ad delivery required the retirement of Special Ad Audiences and development of a monitored Variance Reduction System; see the DOJ v. Meta case page (2022–2024). Lesson: build fairness controls into delivery systems and monitor continuously.

Next steps (start small, measure, scale)

- Run a 60‑day pilot on one high‑impact module (e.g., related articles), with explicit consent, explainers, PETs where feasible, a fairness baseline, and joint KPIs for trust and performance. If you need a fast way to prototype block‑level, privacy‑first content personalization, platforms like QuickCreator can help.

Appendix: Quick reference checklists

Pre‑launch

- DPIA/AIPD screening complete; legal basis documented

- CMP configured for TCF v2.2; GPC honored; disclosures localized

- Data minimization verified; PETs evaluated (on‑device/FL, DP where applicable)

- Fairness baseline computed; thresholds set; model card drafted

- Transparency microcopy added; preference center linked

Post‑launch (30/90 days)

- Opt‑in/opt‑out and complaint trends reviewed; DSAR time‑to‑close within target

- CTR/conversion lift measured; fairness metrics within thresholds

- Audit log exported (consents, vendor list, GPC decisions); iterate or roll back as needed