CTV Incrementality: What It Means, How to Measure It, and Why It Matters

Connected TV (CTV) gives marketers the precision of digital with the storytelling power of TV. But a question persists: beyond impressions and completion rates, how much business impact did those CTV ads actually cause? That’s the job of CTV incrementality.

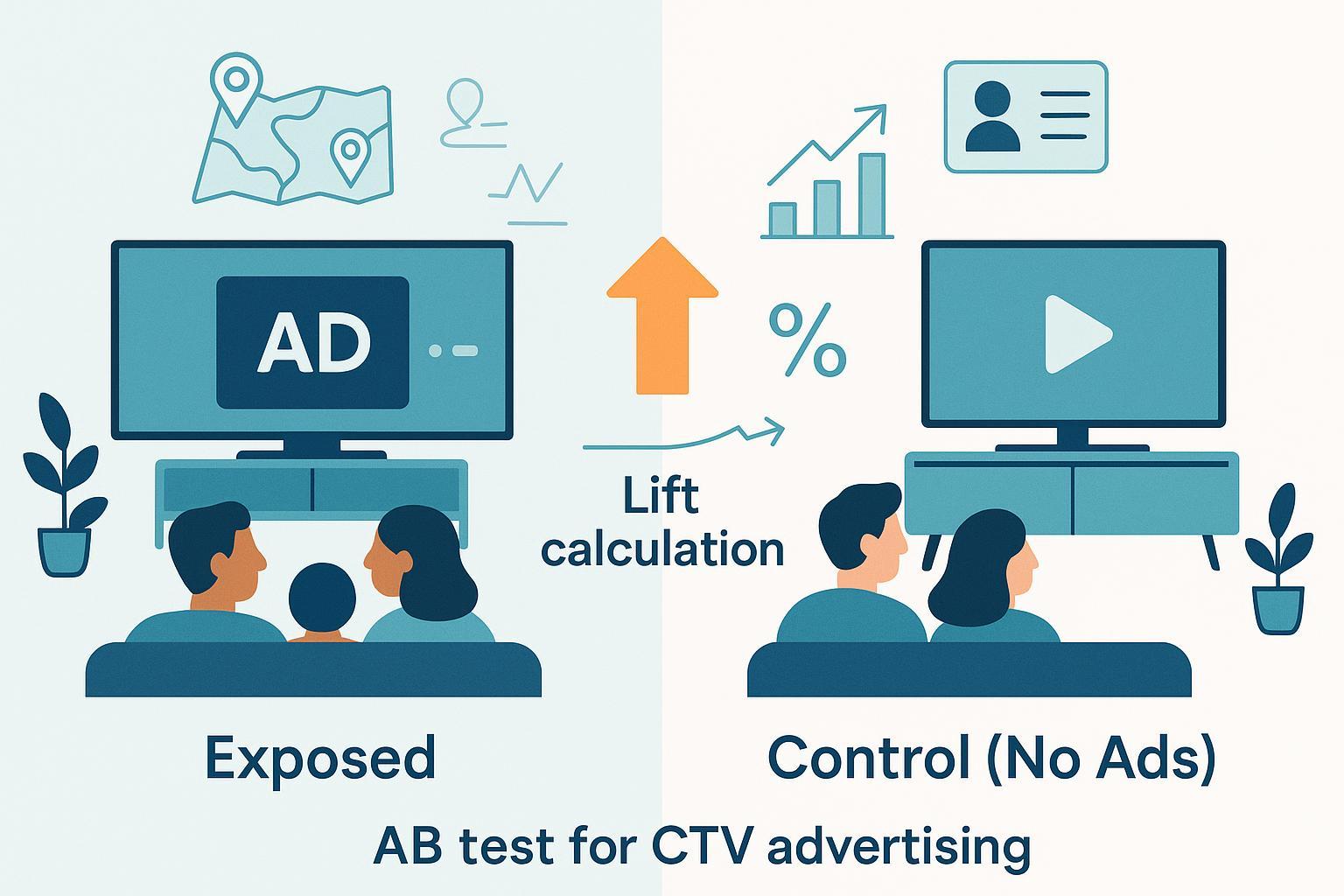

In plain English, CTV incrementality is the causal lift your CTV ads create: what changed because people saw your ads, compared to what would have happened without them. Think of two similar groups of households: one sees your ad (exposed), the other doesn’t (control). If the exposed group buys at a higher rate, the difference is your incremental lift.

Key takeaways

- Incrementality measures causal impact, not just exposure or correlation. It’s different from incremental reach.

- Clean test vs. control design (randomization and exclusion integrity) matters more than fancy modeling.

- Choose a method you can execute well on CTV (platform holdouts or geo-experiments are common).

- Report uncertainty, not only point estimates; plan enough time and scale for power.

- Use incrementality to optimize frequency, creative, and channel mix—not just to “prove” ROI.

What CTV incrementality is—and isn’t

- Working definition: Incrementality is the measured, causal lift in outcomes (sales, conversions, revenue, or brand metrics) due to CTV advertising, relative to the counterfactual with no ads. This causal framing matches industry usage: Nielsen’s incremental lift explainer (2019) describes it as “the lift in sales or KPIs caused by marketing efforts above native demand.”

- What it is not:

  - Not incremental reach. Incremental reach is an audience metric (how many unique viewers CTV adds beyond other channels), distinct from causal sales lift, as highlighted in IAB’s outcomes guidance (2024).

  - Not attribution alone. MMM/MTA assign credit; incrementality testing establishes causality via control vs. exposed designs. Google’s modern measurement guidance (2023–2024) explicitly positions experiments alongside MMM/MTA for this reason.

Authoritative context and standards help set the stage for valid CTV testing, including IAB Tech Lab’s digital video & CTV ad format guidelines (2022) and IAB Europe’s CTV targeting and measurement guide (2022).

- See: Nielsen’s explainer on incremental lift (2019) for the definition “the lift in sales or KPIs caused by marketing efforts above native demand.”

- See: IAB’s distinction between audience expansion (incremental reach) and outcomes/lift in its 2024 retail media outcomes explainer.

- See: Google’s Modern Measurement Playbook (2023) on how incrementality testing complements MMM and MTA.

- See: IAB Tech Lab’s Digital Video & CTV Ad Format Guidelines (2022) and IAB Europe’s CTV Targeting & Measurement Guide (2022) for delivery/measurement foundations that underpin valid tests.

Why CTV makes incrementality tricky

CTV blends app ecosystems, devices, and household co-viewing. That creates measurement challenges:

- Identity fragmentation: multiple devices per home, app-level IDs, and limited person-level identifiers complicate clean holdouts and cross-device controls. Nielsen has documented the need for consistent, cross-platform measurement in its recent analyses.

- Walled gardens and privacy constraints: platform-run experiments may be required; cross-publisher deduplication is hard.

- Exposure quality and deduplication: valid lift depends on accurate exposure qualification and SIVT filtration under standards like the MRC’s cross-media audience measurement (2019).

- Frequency management: it’s easy to over-serve ads without increasing lift; frequency distribution matters more than averages.

- See: Nielsen’s discussion of fragmentation and the need for consistent cross-platform measurement (2023–2024).

- See: MRC’s Cross-Media Audience Measurement Standards for Video (2019) on exposure quality and deduplication requirements.

Methods to measure CTV incrementality

Choose based on scale, data access, and platform constraints. In all cases, predefine your hypothesis and primary KPI.

- Randomized audience holdouts (user/household-level): Randomly exclude a control cohort from ad delivery and measure the difference in outcomes. Precise and causal when identity and exclusion are clean. Google and other platforms describe lift experiments and how they sit alongside MMM/MTA in modern stacks.

- Platform RCTs/withholding (e.g., conversion lift): The ad platform randomly withholds bids to form a control group on decisioned media, a pattern documented in The Trade Desk’s best practices for conversion lift (2023).

- Geo-experiments (matched markets): Treat comparable regions as test vs. control and estimate lift with time-based regression or paired designs. Google Research details geo methods suitable for privacy-safe, cookie-independent testing (2017).

- Synthetic control/predictive counterfactuals: Use modeled controls when true holdouts aren’t feasible. Useful but relies on transparency and validation against experiments.

- PSA/placebo ads: Show unrelated ads to control groups. Helps benchmark exposure effects but costs more.

- On/off time-series tests: Alternate campaign periods. Simple but sensitive to seasonality and external shocks.

Methods at a glance

| Method | When it fits | Strengths | Watch-outs |
|---|---|---|---|
| Audience holdout (RCT) | Clean ID/household exclusion possible | Strong internal validity; precise | Requires robust identity; avoid contamination |
| Platform withholding (lift) | Buying via platforms that support random withholding | Efficient; platform-integrated | Platform-dependent; audit transparency |
| Geo-experiment | Large budgets; multiple comparable markets | Privacy-safe; no user IDs needed | Regional confounders; planning complexity |
| Synthetic control | Always-on campaigns; holdouts impractical | Flexible; continuous | Model bias; transparency and validation needed |
| PSA/placebo | Budget for control media | Benchmarks exposure effect | Additional spend; creative neutrality |
| On/off time-series | Early directional read | Simple to execute | Seasonality and shocks can bias results |

- See: Google Research on geo-experiments (2017) for time-based regression methods; The Trade Desk’s conversion lift best practices (2023) for platform withholding designs; Google’s Modern Measurement Playbook (2023) for experiment role in the stack.
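To make the geo-experiment idea concrete, here is a minimal sketch of a time-based regression read. It is a simplified illustration, not the full method from the Google Research paper (which also quantifies uncertainty). It fits the test markets' outcome against the control markets' outcome over a pre-period, then uses that fit to predict what the test markets would have done during the campaign. The function names and the weekly numbers are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def geo_lift(pre_control, pre_test, post_control, post_test):
    """Estimate incremental outcomes in test geos during the campaign.

    pre_*  : outcomes per period before the campaign (no ads in either group)
    post_* : outcomes per period during the campaign (ads in test geos only)
    """
    intercept, slope = fit_line(pre_control, pre_test)
    # Counterfactual: what test geos would have done without ads,
    # predicted from what the control geos actually did.
    counterfactual = [intercept + slope * c for c in post_control]
    incremental = sum(post_test) - sum(counterfactual)
    return incremental, counterfactual


# Hypothetical weekly conversions (illustrative only).
pre_control = [100, 110, 120, 130]
pre_test = [210, 230, 250, 270]
post_control = [140, 150]
post_test = [320, 345]

incremental, counterfactual = geo_lift(pre_control, pre_test, post_control, post_test)
print(f"Estimated incremental conversions: {incremental:.1f}")
```

A real analysis would use many more pre-period observations and report an interval around the estimate, since a point estimate alone says nothing about whether the lift is distinguishable from noise.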

What to measure (KPIs) and how to compute lift

For sales/conversion outcomes:

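- Incremental Conversions (absolute) = Conversions_exposed − Conversions_control. When the groups differ in size, first scale the control count to the exposed group’s size (Conversions_control × Exposed size ÷ Control size); otherwise the difference mostly reflects group size, not lift.

- Relative Lift (%) = (Incremental Conversions ÷ scaled Conversions_control) × 100.

- Incremental Conversion Value = Value_exposed − Value_control, with the control value scaled the same way.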

- Incremental ROAS (iROAS) = Incremental Conversion Value ÷ Ad Spend.

- Incremental CPA = Ad Spend ÷ Incremental Conversions.
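The formulas above can be sketched as a small helper. This is an illustrative sketch, not a platform's reporting logic: the names `LiftResult` and `lift_metrics` are hypothetical, and it assumes a single average value per conversion rather than separately measured conversion value for each group. It scales the control group to the exposed group's size before differencing, so unequal group sizes do not distort the result.

```python
from dataclasses import dataclass


@dataclass
class LiftResult:
    incremental_conversions: float
    relative_lift_pct: float
    incremental_value: float
    iroas: float
    incremental_cpa: float


def lift_metrics(exposed_n, exposed_conv, control_n, control_conv,
                 value_per_conversion, ad_spend):
    """Compute lift KPIs from exposed/control counts.

    Assumption: every conversion is worth `value_per_conversion` on average.
    """
    control_rate = control_conv / control_n
    # Conversions the exposed group would be expected to produce without ads.
    baseline_conv = control_rate * exposed_n
    incremental = exposed_conv - baseline_conv
    relative_lift_pct = incremental / baseline_conv * 100
    incremental_value = incremental * value_per_conversion
    iroas = incremental_value / ad_spend
    # Incremental CPA is only meaningful when lift is positive.
    cpa = ad_spend / incremental if incremental > 0 else float("inf")
    return LiftResult(incremental, relative_lift_pct, incremental_value, iroas, cpa)


# Hypothetical inputs: equal-sized groups of 100,000 households.
result = lift_metrics(
    exposed_n=100_000, exposed_conv=2_300,
    control_n=100_000, control_conv=2_000,
    value_per_conversion=50.0, ad_spend=10_000.0,
)
print(result)
```

Returning infinity for incremental CPA when lift is zero or negative is a design choice for this sketch; a reporting pipeline might prefer to flag the result as "no measurable lift" instead.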

Google’s lift documentation (updated through 2024) describes these reporting conventions and emphasizes running long enough for statistical power and reporting uncertainty.

Brand outcomes (via brand lift studies): ad recall, awareness, consideration, intent. Treat these as causal brand effects when the study uses valid controls.

Media hygiene (diagnostics, not proof of causality): reach, frequency distribution, completion rate, viewability and SIVT filtration under MRC standards.

- See: Google Ads Help on conversion lift and geography-based lift (2024) for KPI definitions and statuses.

- See: MRC Outcomes and Data Quality Standards for outcomes study quality and disclosure requirements.

Step-by-step: designing a CTV incrementality test

- Frame a clear hypothesis and primary KPI. Example: “CTV prospecting will increase new-customer conversions by at least 5% (relative lift) at iROAS ≥ 1.5.”

- Pick an executable design. If your platform supports lift with random withholding, start there; otherwise consider geo-experiments with matched markets.

- Ensure identity integrity and exclusion. Use household-level exclusion where possible; leverage device graphs or clean rooms to minimize contamination and to deduplicate outcomes across devices.

- Power and duration. Estimate required sample size and budget; avoid over-targeting that limits reach and power. Pre-register your stopping rules.

- Run and monitor hygiene. Track reach/frequency, viewability, and invalid traffic filtering; maintain creative consistency across test/control if relevant.

- Analyze with uncertainty. Compute absolute and relative lift, confidence intervals, iROAS, and incremental CPA; inspect heterogeneity by audience and frequency tiers.

- Translate to decisions. Reallocate budget toward audiences, publishers, and frequency ranges with positive iROAS; fix waste (e.g., high frequency with flat lift).

- See: IAB Tech Lab’s Identity Solutions Guidance (2024) and Data Clean Room Guidance (2023) for identity and privacy-safe data joining; Google’s lift docs (2024) for power and reporting conventions.
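For the power and duration step, a rough sample-size estimate helps judge whether a test is feasible before launch. The sketch below uses the standard normal-approximation formula for comparing two proportions with equal-sized groups. The function name `households_per_group`, the 2% baseline conversion rate, and the default significance and power levels are assumptions for illustration; the 5% relative lift echoes the example hypothesis above.

```python
import math
from statistics import NormalDist


def households_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate households needed in EACH group (two-sided test,
    normal approximation, equal group sizes)."""
    treated_rate = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance_sum = (baseline_rate * (1 - baseline_rate)
                    + treated_rate * (1 - treated_rate))
    effect = treated_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance_sum / effect ** 2)


# Hypothetical planning inputs: 2% baseline conversion rate.
for lift in (0.05, 0.10, 0.20):
    n = households_per_group(baseline_rate=0.02, relative_lift=lift)
    print(f"Relative lift {lift:.0%}: about {n:,} households per group")
```

Because the required sample grows with the inverse square of the effect size, halving the lift you want to detect roughly quadruples the households needed. That is why narrow targeting and short flights so often produce inconclusive reads.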

Common CTV pitfalls—and how to avoid them

- Selection bias in observational reads: Comparing “exposed vs. unexposed” without randomization or strong synthetic controls is not causal. Favor RCTs or geo designs.

- Contamination across devices/households: A control device in the same home as an exposed device can see spillover. Prefer household-level randomization/exclusion where possible.

- Underpowered tests: Too-short durations or narrow targeting produce inconclusive results. Plan for adequate reach and time.

- Frequency mismanagement: More impressions don’t guarantee more lift. Examine lift by frequency tier and cap accordingly.

- Misreading incremental reach as incremental sales: Both matter, but they’re different metrics. Keep definitions straight in reporting.

- See: Think with Google on experiment rigor and roles alongside MMM/MTA (2023); MRC and IAB standards on exposure quality and deduplication.
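One way to reduce cross-device contamination is to randomize at the household level and have every device inherit its household's assignment. The sketch below does this with a salted hash, which keeps assignment deterministic and repeatable without storing a lookup table. It assumes you already have a device-to-household mapping (for example, from a device graph); the function name, the IDs, the salt, and the 25% control share are all hypothetical.

```python
import hashlib


def assign_household(household_id: str, experiment_salt: str,
                     control_share: float = 0.25) -> str:
    """Deterministically assign a household to 'control' or 'exposed'."""
    key = f"{experiment_salt}:{household_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "control" if bucket < control_share else "exposed"


# Hypothetical device graph: several devices can share one household.
device_to_household = {
    "tv-001": "hh-A",
    "tablet-002": "hh-A",
    "tv-003": "hh-B",
}

for device, household in device_to_household.items():
    arm = assign_household(household, experiment_salt="ctv-test-q3")
    print(device, household, arm)
```

Changing the salt per experiment gives a fresh, independent split, so the same households are not held out of every test.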

Choosing the right approach (quick decision guide)

- You have platform support for lift with random withholding and solid household IDs → Use platform RCT/withholding.

- You lack user-level IDs but have sizable budgets across multiple comparable regions → Run a geo-experiment.

- You need always-on reads and can’t form true holdouts → Consider synthetic control, validated against periodic experiments.

- You need a placebo benchmark and have budget → PSA/placebo control.

- Everything is blocked and you need a directional read → On/off time-series (control for seasonality rigorously).

Worked mini-example (numbers)

- Setup: You run a 6-week CTV campaign with platform withholding. Control cohort: 500,000 eligible households; Exposed cohort: 1,500,000 households.

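- Outcomes: Control converts 10,000 times (a 2.0% rate per household); Exposed converts 33,000 times (2.2%). Because the exposed cohort is three times the size of the control cohort, scale the control to the same base first: expected conversions without ads = 10,000 × (1,500,000 ÷ 500,000) = 30,000. Incremental Conversions = 33,000 − 30,000 = 3,000.

- Relative Lift (%) = 3,000 ÷ 30,000 × 100 = 10%.

- If average order value is $40, Incremental Conversion Value = 3,000 × $40 = $120,000.

- If CTV ad spend was $600,000, iROAS = $120,000 ÷ $600,000 = 0.20. Incremental CPA = $600,000 ÷ 3,000 = $200.

- Decision: If your goal iROAS is ≥1.5, this falls well short despite a real 10% lift. Before scaling spend, inspect lift by audience and frequency to find where the buy is and isn’t working.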

Note: Report confidence intervals and power diagnostics; platform reports and geo frameworks provide guidance on significance and data sufficiency as of 2024–2025.
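As a rough illustration of reporting uncertainty, the sketch below puts a confidence interval around the incremental conversions for the cohort sizes and conversion counts in the setup above. It compares conversion rates, so the unequal group sizes are handled automatically. It is a simple normal-approximation (Wald) interval that assumes households convert independently and at most once; platform reports may use different methods. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def incremental_conversions_ci(exposed_n, exposed_conv, control_n, control_conv,
                               confidence=0.95):
    """Point estimate and confidence interval for incremental conversions
    among exposed households (normal approximation)."""
    p_exposed = exposed_conv / exposed_n
    p_control = control_conv / control_n
    rate_diff = p_exposed - p_control
    std_err = math.sqrt(p_exposed * (1 - p_exposed) / exposed_n
                        + p_control * (1 - p_control) / control_n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    low = rate_diff - z * std_err
    high = rate_diff + z * std_err
    # Express the rate difference as conversions among exposed households.
    return rate_diff * exposed_n, low * exposed_n, high * exposed_n


point, low, high = incremental_conversions_ci(
    exposed_n=1_500_000, exposed_conv=33_000,
    control_n=500_000, control_conv=10_000,
)
print(f"Incremental conversions: {point:,.0f} (95% CI {low:,.0f} to {high:,.0f})")
```

If the interval excludes zero, the lift is distinguishable from noise at the chosen confidence level; dividing ad spend by each end of the interval gives a matching range for incremental CPA.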

How incrementality complements MMM and attribution

Use incrementality tests to ground-truth and calibrate MMM and MTA. Google recommends a stacked approach: plan budgets with MMM, validate causal effects with experiments, and optimize in-flight with attribution—each method filling different roles in a privacy-first environment.

- See: Google’s Modern Measurement Playbook (2023) and incrementality testing articles (2023) for how to integrate experiments, MMM, and MTA.

Related concepts—what to use when

| Concept | What it answers | Primary data | Strengths | Limitations |
|---|---|---|---|---|
| Incrementality (lift test) | Did CTV ads cause additional outcomes? | Experimental or quasi-experimental | Causal inference; directly actionable for spend decisions | Needs controls, adequate power; limited granularity if small |
| Incremental reach | How many new unique viewers did CTV add? | Deduplicated audience measurement | Cross-channel audience expansion view | Not causal for sales; exposure-only |
| MMM (media mix modeling) | How do channels contribute over time? | Aggregated time-series | Strategic budgeting; channel trade-offs | Coarse-level; requires calibration |
| MTA (multi-touch attribution) | Which touches correlate with conversions? | User-level paths | Granular, near real-time | Correlational; ID loss; can misassign credit |
| Brand lift | Did the ad shift awareness/intent? | Controlled surveys/panels | Measures upper-funnel impact | Not direct sales; survey limits |

- See: IAB Europe’s CTV measurement guide (2022) for audience concepts; Google (2023) for MMM/MTA roles alongside experiments.

Real-world references and case notes

- Platform best practices for conversion lift and randomized withholding on decisioned media are documented by The Trade Desk (2023), providing a practical blueprint for CTV lift tests.

- Geo-experiment methods are peer-reviewed and documented by Google Research (2017), supporting privacy-safe CTV testing without user-level IDs.

- Vendor case studies report outcome lifts on CTV; for instance, a PMG quick-service restaurant case measured incremental sales using InnovidXP and Affinity Solutions (2024). Treat vendor-reported results as directional and context-dependent.

- See: The Trade Desk’s best practices for better conversion lift (2023); Google Research on geo experiments (2017); Innovid’s PMG case study (2024).

FAQs

- Is incremental reach the same as incrementality? No. Incremental reach is about unique audience added; incrementality is causal outcome lift. IAB’s outcomes materials (2024) draw this line clearly.

- How long should I run a lift test? Long enough to achieve statistical power; platforms often provide status indicators (“not enough data”). Google’s lift documentation (2024) outlines data sufficiency guidance.

- Can I do lift testing without user-level IDs? Yes—use geo-experiments with matched markets and appropriate regression frameworks (see Google Research, 2017).

- What if my brand only cares about awareness? Run a brand lift study with valid controls; treat results as causal for brand metrics, and connect to sales with MMM where appropriate.

- How do I prevent contamination? Randomize at the household level when possible and use identity/clean-room solutions to deduplicate and enforce exclusions.

Summary

CTV incrementality tells you what your CTV ads truly caused—not just what they touched. Start with a clear hypothesis and KPI, pick a method you can execute cleanly (platform lift or geo-experiments are great defaults), ensure identity integrity, and plan for enough power. Report lift with uncertainty, translate it to iROAS and incremental CPA, and use the results to refine reach, frequency, creative, and mix. Done well, incrementality testing becomes a reliable decision engine rather than a post-campaign report card.

—

References (selected, canonical)

- Nielsen – Incremental lift explainer (2019): https://www.nielsen.com/insights/2019/the-importance-of-incremental-lift/

- IAB/MRC – Retail Media Measurement Guidelines Explainer (2024): https://www.iab.com/wp-content/uploads/2024/01/IAB-MRC-Retail-Media-Measurement-Guidelines-Explainer-January-2024.pdf

- IAB Tech Lab – Digital Video & CTV Ad Format Guidelines (2022): https://iabtechlab.com/wp-content/uploads/2022/03/Ad-Format-Guidelines_DV-CTV.pdf

- IAB Europe – CTV Targeting & Measurement Guide (2022): https://iabeurope.eu/wp-content/uploads/2022/01/IAB-Europe-Guide-to-Targeting-and-Measurement-in-CTV-2022-FINAL.pdf

- Think with Google – Modern Measurement Playbook (2023): https://www.thinkwithgoogle.com/_qs/documents/18393/For_pub_on_TwG___External_Playbook_Modern_Measurement.pdf

- Google Ads Help – Conversion Lift (2024): https://support.google.com/google-ads/answer/12003020?hl=en

- Google Ads Help – Geography-based conversion lift (2024): https://support.google.com/google-ads/answer/14102986?hl=en

- Google Research – Geo experiments TBR framework (2017): https://research.google/pubs/estimating-ad-effectiveness-using-geo-experiments-in-a-time-based-regression-framework/

- MRC – Cross-Media Audience Measurement Standards for Video (2019): https://mediaratingcouncil.org/sites/default/files/News/General-Announcements/MRC%20Issues%20Final%20Version%20of%20Cross-Media%20Audience%20Measurement%20Standards%20For%20Video%20-%20FINAL.pdf

- MRC – Outcomes and Data Quality Standards (Final): https://mediaratingcouncil.org/sites/default/files/Standards/MRC%20Outcomes%20and%20Data%20Quality%20Standards%20(Final).pdf

- The Trade Desk – Best practices for better conversion lift (2023): https://www.thetradedesk.com/resources/best-practices-for-better-conversion-lift

- IAB Tech Lab – Identity Solutions Guidance (2024): https://iabtechlab.com/wp-content/uploads/2024/05/Identity-Solutions-Guidance-FINAL.pdf

- IAB Tech Lab – Data Clean Room Guidance (2023): https://iabtechlab.com/blog/wp-content/uploads/2023/06/Data-Clean-Room-Guidance_Version_1.054.pdf

- Innovid – PMG case study (2024): https://www.innovid.com/resources/case-studies/pmg-taps-innovid-to-measure-consumer-transaction-outcomes-proving-roas-for-qsr