Winning Visibility and ROI in AI-Powered Search (2025): Best Practices for Strategic SMB Marketers

1) The shift: why stable rankings can still mean less traffic in 2025

If your keyword rankings look fine but traffic is sliding, you’re not alone. As AI-powered results expand, users get answers above (or instead of) the blue links.

Prevalence: In March 2025, AI Overviews appeared on about 13% of U.S. desktop searches, and 88% of the queries that triggered them were informational, per Semrush's analysis of 10M+ keywords. Search Engine Land reported the same rate, along with rapid category spikes during the March 2025 core update (Semrush AI Overviews study; Search Engine Land 13% coverage; SEL March 2025 spikes).

CTR decline: Independent 2025 analyses show meaningfully lower click-through when AI summaries appear. Ahrefs found that position-one CTR is substantially lower on queries that trigger AI Overviews, and Pew Research (2025) observed that users are less likely to click links when an AI summary appears (Ahrefs on reduced clicks; Pew Research 2025 on fewer clicks). Search Engine Land also highlighted BrightEdge data showing impressions up but CTR down roughly 30% YoY in 2025, a decline attributed to AI Overviews pushing organic results lower (SEL summarizing BrightEdge 2025).

Sector impacts: Entertainment, restaurant, and travel queries saw AI Overview (AIO) coverage surge during the March 2025 update, intensifying zero- and low-click behavior (SEL on category surges, 2025).

The takeaway: traditional rankings no longer equal visibility. To win, you need to be cited in AI answers and convert from those surfaces.

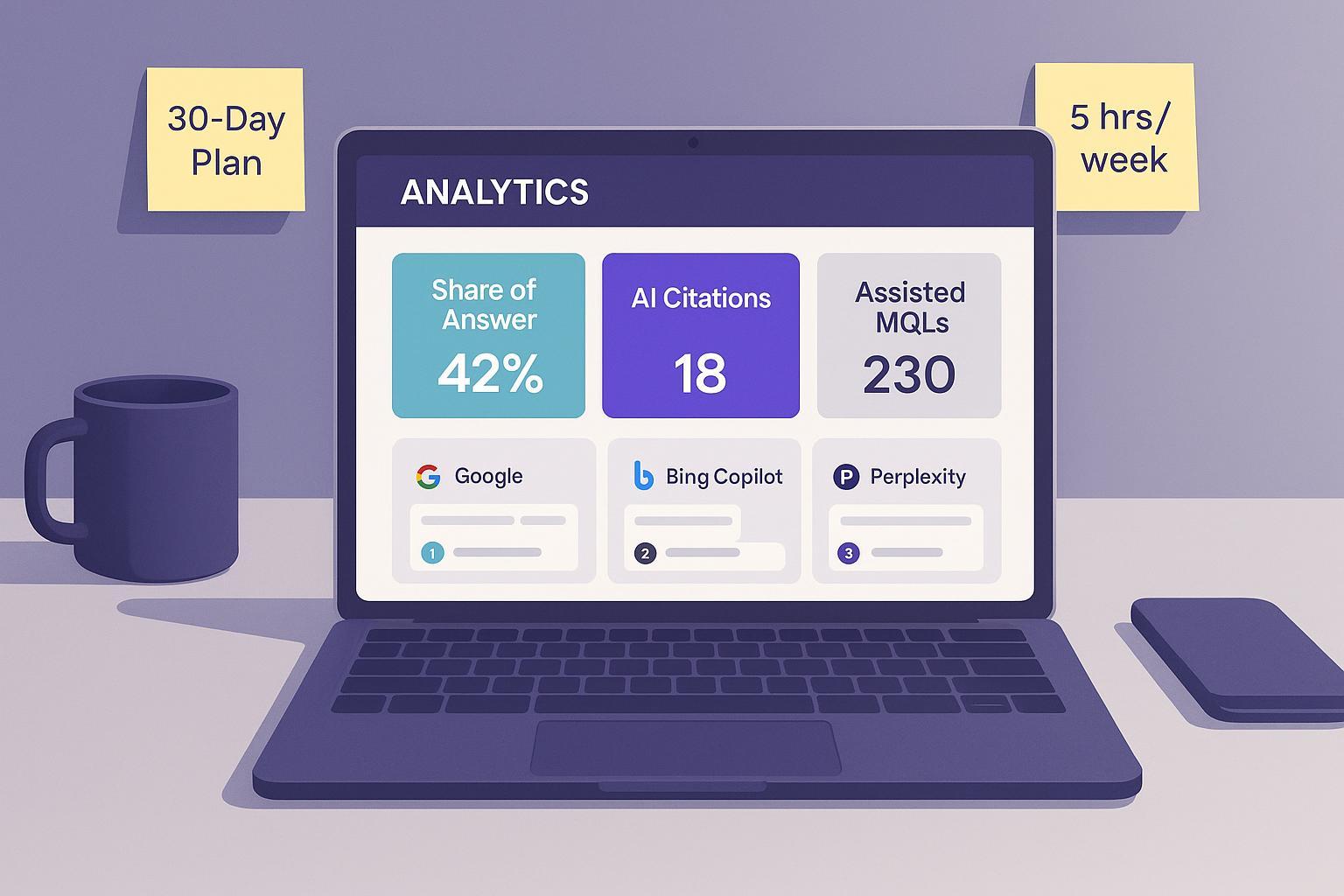

2) The new success metric: Share of Answer tied to assisted MQLs

Classic rank tracking misses what matters now: are you named, linked, and clicked from AI answers?

Definition: Share of Answer (SoA) is the share of AI answers for your target queries (Google AI Overviews/AI Mode, Bing Copilot, Perplexity) that cite your brand or page. The KPI has been proposed and elaborated in 2024–2025 discussions of answer engine optimization (AEO) and Google's Search Generative Experience (SGE) (see the 2025 perspective in Seobymarta on generative SEO KPIs and the methodology framing from Exposure Ninja on AI share of voice).

Formula: SoA (%) = (# of AI answers citing you ÷ # of AI answers analyzed for your target query set) × 100. Track by topic cluster, weekly.

Tie to pipeline: Pair SoA with “Assisted MQLs by Cluster”—the count (and rate) of Marketing Qualified Leads that touched AI-cited content. Use GA4 events + CRM fields to capture these touches.

Expect volatility: AI answers change often. That’s normal. Manage it with a routine, not one-off checks.
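The formula and the pipeline tie-in can be sketched in a few lines of Python. This is a minimal illustration, assuming a weekly prompt-check log and a CRM export; the `PromptCheck` record, the dictionary keys, and the engine labels are hypothetical placeholders for whatever your sheet or tracker produces. Only checks where an AI answer actually appeared count toward the denominator, matching "AI answers analyzed" in the formula.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PromptCheck:
    """One check of one prompt on one AI surface (field names are hypothetical)."""
    cluster: str
    prompt: str
    engine: str            # illustrative labels: "google_aio", "copilot", "perplexity"
    ai_answer_shown: bool  # did an AI answer appear at all?
    cited_us: bool         # did that answer cite your brand or page?


def share_of_answer(checks):
    """SoA (%) per cluster = AI answers citing you / AI answers analyzed * 100.

    Checks where no AI answer appeared are skipped, so they do not
    count toward the denominator.
    """
    analyzed, cited = Counter(), Counter()
    for check in checks:
        if check.ai_answer_shown:
            analyzed[check.cluster] += 1
            cited[check.cluster] += int(check.cited_us)
    return {c: round(100 * cited[c] / n, 1) for c, n in analyzed.items()}


def assisted_mqls_by_cluster(mqls, ai_cited_urls):
    """Return {cluster: (assisted_count, assisted_rate_pct)}.

    mqls: dicts with hypothetical keys "cluster" and "content_touches" (URLs),
    e.g. from a CRM export. URLs must be normalized the same way as in
    ai_cited_urls (no UTM parameters, consistent trailing slashes).
    """
    totals, assisted = Counter(), Counter()
    for lead in mqls:
        totals[lead["cluster"]] += 1
        # "Assisted" = at least one touch landed on an AI-cited page.
        if any(url in ai_cited_urls for url in lead["content_touches"]):
            assisted[lead["cluster"]] += 1
    return {c: (assisted[c], round(100 * assisted[c] / n, 1)) for c, n in totals.items()}


week = [
    PromptCheck("pricing", "how much does X cost", "google_aio", True, True),
    PromptCheck("pricing", "X vs Y price", "perplexity", True, False),
    PromptCheck("pricing", "is X worth it", "copilot", False, False),
    PromptCheck("setup", "how to set up X", "google_aio", True, False),
]
print(share_of_answer(week))  # {'pricing': 50.0, 'setup': 0.0}

mqls = [
    {"cluster": "pricing", "content_touches": ["/pricing-guide", "/demo"]},
    {"cluster": "pricing", "content_touches": ["/blog/other-post"]},
    {"cluster": "setup", "content_touches": ["/setup-how-to"]},
]
print(assisted_mqls_by_cluster(mqls, {"/pricing-guide", "/setup-how-to"}))
# {'pricing': (1, 50.0), 'setup': (1, 100.0)}
```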

3) Measure what matters: a lightweight AI visibility + ROI stack

You don’t need enterprise software to get clarity. Here’s the SMB-ready setup.

Sources and cadence (weekly):

Manual checks: Pin 15–30 priority prompts across Google (AI Overviews/AI Mode), Bing Copilot, and Perplexity. Log whether you’re cited, which page, and who else appears.

Affordable tracker: If budget allows, add one AI visibility tool to reduce manual work, and validate its outputs with spot checks.

Google Search Console: Watch emerging queries and Discover performance, and annotate algorithm updates and content changes so you can explain shifts later.

Metrics to log:

SoA by cluster (core KPI)

Entity Coverage: number of subtopics/entities with at least one AI citation

AI-Cited Pages: number of your pages cited in AI answers, tracked week-over-week

Gaps: queries where AI answers appear but you’re missing

Assisted MQLs/SQLs by cluster (CRM)
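A sketch of how the remaining metrics can fall out of the same weekly log, assuming one row per prompt check with hypothetical keys (`query`, `entity`, `ai_answer_shown`, `cited_page`). Here a query counts as a gap only if an AI answer appeared and none of that week's checks cited you; that rule is an assumption you may want to tighten to per-engine gaps.

```python
def weekly_metrics(rows, last_week_cited_pages):
    """Entity Coverage, AI-Cited Pages (with WoW change), and Gaps for one week.

    rows: one dict per prompt check, with hypothetical keys "query", "entity",
          "ai_answer_shown" (bool) and "cited_page" (URL of your cited page, or None).
    last_week_cited_pages: set of your URLs cited in AI answers the week before.
    """
    cited_pages = {r["cited_page"] for r in rows if r["cited_page"]}
    covered_entities = {r["entity"] for r in rows if r["cited_page"]}
    shown_queries = {r["query"] for r in rows if r["ai_answer_shown"]}
    cited_queries = {r["query"] for r in rows if r["cited_page"]}
    return {
        "entity_coverage": len(covered_entities),
        "ai_cited_pages": len(cited_pages),
        "ai_cited_pages_wow": len(cited_pages) - len(last_week_cited_pages),
        # Gap = an AI answer appeared for the query, but no check cited you.
        "gaps": sorted(shown_queries - cited_queries),
    }


rows = [
    {"query": "what is x", "entity": "x", "ai_answer_shown": True, "cited_page": "/x-guide"},
    {"query": "x pricing", "entity": "pricing", "ai_answer_shown": True, "cited_page": None},
    {"query": "x setup", "entity": "setup", "ai_answer_shown": False, "cited_page": None},
    {"query": "x vs y", "entity": "comparison", "ai_answer_shown": True, "cited_page": "/x-vs-y"},
]
print(weekly_metrics(rows, last_week_cited_pages={"/x-guide"}))
# {'entity_coverage': 2, 'ai_cited_pages': 2, 'ai_cited_pages_wow': 1, 'gaps': ['x pricing']}
```

The gaps list feeds the "Queries with AIO presence where we're missing" widget directly.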

5-widget dashboard (one page):

SoA by cluster (bar or line, weekly)

AI-cited pages (count, WoW)

Queries with AIO presence where we’re missing (list)

Assisted MQLs by cluster (stacked)

Competitive SoA snapshot (top 3 rivals)

Tracking setup (1–2 hours):

UTMs: Standardize on lowercase values for utm_source, utm_medium, utm_campaign, and utm_content. Keep a single sheet as the source of truth and generate links with a builder; GA4 recognizes these parameters by default (MeasureSchool on GA4 UTMs; GA4 collection docs).

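GA4: Configure content grouping by cluster (GA4 reads it from the content_group parameter), and mark generate_lead as a key event. For scroll depth, enhanced measurement already logs a scroll event at 90%; add GTM scroll-depth triggers only if you need other thresholds (GA support: key events for leads).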

CRM: Add fields—Content Touches (URL), Cluster (picklist), and First AI-Cited Touch (boolean). Map UTMs into contact records; use HubSpot or Salesforce workflows for association (HubSpot GA integration guide; Salesforce data integration overview).
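As a rough sketch of this tracking setup, the Python below normalizes UTM values before a link goes out and derives the three CRM fields from a contact's page touches. The URL-to-cluster table, the example URLs, and the attribution rules in `crm_content_fields` are assumptions for illustration; in GA4 you would typically express the same prefix-to-cluster mapping as a content group value, for example through a GTM lookup table variable.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical URL-prefix -> cluster table; one table can feed the GA4
# content group value and the CRM "Cluster" picklist so they never drift apart.
CLUSTER_BY_PREFIX = {
    "/guides/ai-search": "ai-search",
    "/guides/local-seo": "local-seo",
}


def build_utm_url(base_url, source, medium, campaign, content=None):
    """Append lowercase, trimmed UTM parameters, keeping any non-UTM params."""
    utm = {"utm_source": source, "utm_medium": medium, "utm_campaign": campaign}
    if content:
        utm["utm_content"] = content
    # "Newsletter" and "newsletter " would otherwise split into separate GA4 rows.
    utm = {k: v.strip().lower().replace(" ", "-") for k, v in utm.items()}
    parts = urlsplit(base_url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if not k.startswith("utm_")]
    query.extend(utm.items())
    return urlunsplit(parts._replace(query=urlencode(query)))


def cluster_for(path):
    """Return the cluster whose prefix the URL path starts with, or None."""
    for prefix, cluster in CLUSTER_BY_PREFIX.items():
        if path.startswith(prefix):
            return cluster
    return None


def crm_content_fields(touches, ai_cited_paths):
    """Derive the three CRM fields from a contact's page touches, oldest first.

    Assumed rules (adjust to your attribution model): Cluster comes from the
    first touch that maps to a cluster; First AI-Cited Touch is True when the
    very first content touch was a page cited in AI answers.
    """
    return {
        "content_touches": list(touches),
        "cluster": next((c for c in map(cluster_for, touches) if c), None),
        "first_ai_cited_touch": bool(touches) and touches[0] in ai_cited_paths,
    }


print(build_utm_url("https://example.com/guide?ref=home",
                    "Newsletter", "Email", "AI Search Q3", "Hub CTA"))
# https://example.com/guide?ref=home&utm_source=newsletter&utm_medium=email&utm_campaign=ai-search-q3&utm_content=hub-cta
print(crm_content_fields(["/guides/ai-search/soa", "/pricing"], {"/guides/ai-search/soa"}))
# {'content_touches': ['/guides/ai-search/soa', '/pricing'], 'cluster': 'ai-search', 'first_ai_cited_touch': True}
```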

Governance and cadence:

Weekly: Update SoA, identify gaps, review pages gaining/losing AI citations.

Monthly: Report assisted MQLs/SQLs by cluster, plus time-to-MQL for AI-cited journeys vs. non.

Decision rules: Double down on clusters where SoA and assisted MQLs are rising; refresh or consolidate pages with impressions but no AI citations after 6–8 weeks.
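The decision rules translate directly into two small checks. This sketch assumes "rising" means the latest weekly value is above the first value in the review window, and that a page is due for review after 8 weeks (the upper end of the 6–8 week range); the trend definition and the "hold" and "keep monitoring" labels are hypothetical choices, not a standard.

```python
def is_rising(series):
    """True if the latest value in the review window beats the first one."""
    return len(series) >= 2 and series[-1] > series[0]


def cluster_action(soa_by_week, assisted_mqls_by_week):
    """Double down only when both SoA and assisted MQLs are rising."""
    if is_rising(soa_by_week) and is_rising(assisted_mqls_by_week):
        return "double down"
    return "hold"


def page_action(weeks_live, impressions, ai_citations, review_after_weeks=8):
    """Flag pages with impressions but no AI citations after the review window."""
    if weeks_live >= review_after_weeks and impressions > 0 and ai_citations == 0:
        return "refresh or consolidate"
    return "keep monitoring"


print(cluster_action([5.0, 12.5, 20.0], [3, 4, 6]))  # double down
print(page_action(weeks_live=8, impressions=1200, ai_citations=0))  # refresh or consolidate
```

A first-versus-last comparison is deliberately crude; with volatile AI answers, a rolling average over three or four weeks is a reasonable alternative.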

4) Build to be cited: the AI-friendly content workflow

AI systems are far more likely to pick up content that is structured for extraction and backed by visible credibility signals. In our hands-on work, the end-to-end process below has consistently improved the odds of inclusion.

Research (intent-first):

Start with real questions from People Also Ask, community threads, support tickets, and sales calls. Map to journey stages.

Create an entity map: primary entity, related entities, synonyms. Keep names consistent across the cluster.

Plan a cluster: 1 hub (definitive guide) + 6–10 spokes (how-tos, comparisons, pitfalls, tools), each answering one sub-intent.

Briefing checklist:

Answer-first outline: TL;DR, numbered steps, decision tree, pros/cons, cost/time/tools, risks, examples.

Evidence plan: screenshots, original data, quotes from SMEs, fresh external citations with dates.

E-E-A-T scaffold: author credentials, org credentials, byline date, update log, references.

Creation principles:

Write for conversation: anticipate 5–10 follow-up questions; include an FAQ section to absorb them.

Structure for extraction: short paragraphs, lists, tables, and clear H2/H3s with direct claims.

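Schema: Use Article with author/org, plus FAQPage and HowTo where applicable; validate with Google's Rich Results Test or the Schema Markup Validator (Google structured data: Article; FAQPage guidelines). Since 2023, Google shows FAQ rich results only for well-known, authoritative government and health sites and no longer shows HowTo rich results, so treat this markup as a comprehension aid rather than a route to rich results.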

Internal links: Cross-link hub↔spokes with entity-rich anchors and clear next-step CTAs.
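For the Article + FAQPage combination, here is a minimal Python sketch that emits JSON-LD for a `<script type="application/ld+json">` tag. The headline, names, dates, and Q&A pairs are placeholders; the property names follow schema.org's Article and FAQPage types, but check Google's structured data documentation for currently recommended fields and run the output through a validator before shipping.

```python
import json


def article_faq_jsonld(headline, author, org, published, modified, faqs):
    """Return Article + FAQPage JSON-LD as one @graph document.

    faqs: list of (question, answer) pairs that also appear visibly on the page.
    Dates are ISO 8601 strings, e.g. "2025-06-01".
    """
    article = {
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "publisher": {"@type": "Organization", "name": org},
        "datePublished": published,
        "dateModified": modified,
    }
    faq_page = {
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(
        {"@context": "https://schema.org", "@graph": [article, faq_page]}, indent=2
    )


print(article_faq_jsonld(
    "Share of Answer: a practical guide",
    "Jane Doe",      # placeholder author
    "Example Co",    # placeholder organization
    "2025-06-01",
    "2025-07-15",
    [("What is Share of Answer?", "The share of AI answers for your target queries that cite you.")],
))
```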

Optimization and publication:

Add jump links to answer-first sections; include a mini glossary of key entities.

Cite sources inline with dates for freshness; include author bio and update log for trust (E-E-A-T aligns with Google’s “helpful content” principles: Google 2025 guidance).

Technical quality: mobile-first, fast, HTTPS, sitemap, canonical, clean URLs, descriptive alt text (How Search works essentials).

Post-publication iteration:

Prompt tests: Mirror real user questions in Google AI Overviews, Bing Copilot, and Perplexity; log if/where your page is cited.

Close gaps: Expand FAQs and sections to cover missing sub-answers; update schema accordingly.

Notes and trade-offs:

Structured data helps understanding but doesn’t guarantee AI Overview inclusion (Google AI features doc, 2025).

If you must limit excerpting, page-level snippet controls (max-snippet, nosnippet) apply to AI features too—use sparingly and strategically (Google robots meta tag specs).

5) From queries to clusters: long-tail and conversational coverage

Long-tail hasn’t died; it’s evolved into conversational clusters. Users ask multi-step questions; answer engines stitch sources into a single overview. Your job is to cover the complete conversation efficiently.

Cluster composition (repeatable):

Hub: “What X is, who it’s for, when to use it, outcomes, pitfalls.”

Spokes: how-to, checklist/SOP, comparison/alternatives, pricing/cost factors, tools, implementation pitfalls, case examples.

FAQs: 10–20 questions that reflect follow-ups you see in PAA and sales calls.

Internal linking map:

Every spoke links to the hub and at least two other spokes; the hub links down to all spokes.

Use entity-focused anchor text consistently (your entity map is the source of truth).
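The linking rules are easy to check automatically once you have a crawl export. This sketch assumes a hypothetical `links` mapping from each page URL to the set of internal URLs it links to (most site crawlers can export something equivalent) and reports every rule the cluster breaks.

```python
def check_link_map(hub, spokes, links):
    """Return rule violations for a hub-and-spoke cluster.

    links: dict mapping each page URL to the set of internal URLs it links to.
    Rules: every spoke links to the hub and to at least two other spokes;
    the hub links to every spoke.
    """
    problems = []
    spoke_set = set(spokes)
    hub_links = links.get(hub, set())
    for spoke in spokes:
        out = links.get(spoke, set())
        if spoke not in hub_links:
            problems.append(f"hub does not link to {spoke}")
        if hub not in out:
            problems.append(f"{spoke} does not link to the hub")
        sibling_links = (out & spoke_set) - {spoke}
        if len(sibling_links) < 2:
            problems.append(f"{spoke} links to {len(sibling_links)} other spoke(s); needs at least 2")
    return problems


links = {
    "/hub": {"/a", "/b", "/c"},
    "/a": {"/hub", "/b", "/c"},
    "/b": {"/hub", "/a", "/c"},
    "/c": {"/hub", "/a"},
}
print(check_link_map("/hub", ["/a", "/b", "/c"], links))
# ['/c links to 1 other spoke(s); needs at least 2']
```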

Schema map:

Hub: Article + FAQPage where relevant.

How-tos: HowTo schema with steps, tools, and time estimates.

Comparisons: Article with clear tables; consider Product schema only if you meet guidelines.
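A HowTo block with steps, tools, and a time estimate might be generated like this. It is a sketch under the assumption that each step is a (name, text) pair; `totalTime` uses an ISO 8601 duration such as `PT30M`, and the tool and step contents shown are placeholders.

```python
import json


def howto_jsonld(name, total_minutes, tools, steps):
    """Return HowTo JSON-LD; steps is a list of (step_name, step_text) pairs."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "HowTo",
            "name": name,
            "totalTime": f"PT{total_minutes}M",  # ISO 8601 duration, e.g. PT30M
            "tool": [{"@type": "HowToTool", "name": tool} for tool in tools],
            "step": [
                {"@type": "HowToStep", "position": i, "name": step_name, "text": text}
                for i, (step_name, text) in enumerate(steps, start=1)
            ],
        },
        indent=2,
    )


print(howto_jsonld(
    "How to track Share of Answer weekly",
    30,
    ["Google Sheets", "Google Search Console"],
    [
        ("Pin prompts", "List 15-30 priority prompts per cluster."),
        ("Run checks", "Check each prompt in AI Overviews, Copilot, and Perplexity."),
        ("Log results", "Record whether you are cited, which page, and who else appears."),
    ],
))
```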

This approach builds topical authority, which practitioners and Google emphasize over isolated keywords (Search Engine Land topical authority primer).

6) Fixing the drop: a 30-day recovery plan

A focused month is enough to stabilize and start growing again.

Week 1 — Measure and choose: Audit SoA across 15–30 priority prompts in AI Overviews/Copilot/Perplexity; set up UTMs, GA4 key events, and CRM fields; pick one high-impact cluster.

Week 2 — Ship first spoke: Publish a how-to with answer-first intro, screenshots, and FAQ. Add Article + HowTo + FAQPage schema; cross-link from a hub placeholder page.

Week 3 — Add comparison/alternatives: Publish a comparison with criteria, pros/cons, and decision checklist. Strengthen author bio and references; add glossary terms.

Week 4 — Fill gaps: Run prompt checks; expand FAQs and missing sub-answers; improve internal links; validate schema. Start tracking pages cited by AI week-over-week.

Success criteria by Day 30:

SoA > 10% in the pilot cluster (from 0%)

At least 1–3 pages cited in AI answers

Assisted MQLs attributable to the cluster begin to register in CRM

7) Mini case (composite, disclosed)

Disclosure: This is a composite SMB case built from multiple real projects with similar patterns; numbers are representative, not a single client.

Situation: A SaaS SMB saw organic traffic fall 18% YoY despite stable top-3 rankings and had zero AI citations on money-intent questions.

Actions: Built 1 hub + 7 spokes; added answer-first intros, FAQs, HowTo + FAQ schema, author bios, original screenshots; tightened internal links; ran weekly prompt checks and filled gaps.

Results (8–10 weeks): SoA rose from 0% to 28% in the target cluster; AI-cited pages grew from 0 to 6; organic stabilized (+6% MoM); assisted MQLs from the cluster +35%; demo requests +22% with higher qualification rate.

These outcomes reflect the shift from rank-first to answer-first structures and consistent measurement.

8) The 5-hour-per-week plan for Alex (good-enough v1)

A sustainable operating rhythm you can actually keep.

Hour 1: Update the SoA sheet (15–30 prompts), note gaps, and pick this week’s sub-intent.

Hour 2: Draft or refine one answer-first section (TL;DR + steps + FAQs) for a spoke.

Hour 3: Add or validate schema (Article + FAQPage; HowTo where applicable); fix internal links.

Hour 4: Refresh E-E-A-T elements: author bio, update log, citations with dates, glossary entries.

Hour 5: Distribute with UTMs; review GA4 events; tag new CRM contacts with Cluster and First AI-Cited Touch if applicable.

Every 4 weeks: Publish at least two spokes and one comparison; review assisted MQLs and SoA trends; decide whether to double down or consolidate.

9) Toolkit: SMB-friendly options to execute faster

All-in-one accelerator: QuickCreator combines AI drafting with integrated SEO scaffolding, E-E-A-T cues, and managed publishing to reduce tool-switching for SMB workflows. Disclosure: We may have an affiliate or commercial relationship with QuickCreator; evaluate independently for fit.

Standalone optimizers: SurferSEO for on-page optimization depth and content scoring; MarketMuse for entity/topic modeling and content gap analysis.

AI visibility trackers: Orbitwise or Ahrefs Brand Radar AI (where available) to log AI Overview citations and competitors side-by-side.

Selection tip: If you need speed with minimal tooling overhead, an all-in-one helps. If your team already has a stable CMS and point tools, pick the best-in-class gaps.

Micro-example: shipping one spoke end-to-end

Here’s a realistic way to publish a spoke in one working session. Use an all-in-one like QuickCreator to generate an answer-first draft (TL;DR + steps + pitfalls). Inject your first-hand screenshots and add an FAQ covering 6–10 follow-ups. Apply Article + HowTo + FAQPage schema, validate, and publish to a fast, mobile-first template. Cross-link from the hub and two related spokes using entity-rich anchors. Distribute with UTMs to your newsletter and community posts. In a week, run prompt checks (AI Overviews, Copilot, Perplexity) and log citations. Iterate the FAQ to close the gaps you’re seeing.

FAQs: practical questions marketers ask in 2025

How does Google decide which links appear in AI Overviews?

Google says eligibility follows its overall quality and relevance principles, and its AI features use "query fan-out" to surface a diverse set of helpful links. Google has not disclosed any ranking factor specific to AI Overviews (Google AI features overview, 2025; Google blog on AI in Search).

How do Bing Copilot citations work?

Copilot grounds answers with Bing Search or Custom Search, then composes an answer and includes linked citations and the underlying search query inside the chat thread (Microsoft docs on grounding; Copilot privacy/protections).

Can I block AI from quoting my pages?

You can limit snippets with page-level controls (max-snippet, nosnippet, data-nosnippet). Use cautiously; it can reduce classic snippet visibility too (Google robots meta tag specs).

What about Perplexity’s crawler?

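Perplexity lists its crawler user agents (PerplexityBot, plus Perplexity-User for user-initiated fetches) in its documentation, but it publishes far less detail than Google or Microsoft about how sources are chosen for answers. Verify visits through server logs and contact the vendor for specifics (Perplexity blog on agents vs bots).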
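Server logs are the most direct way to see whether Perplexity is fetching your pages. The sketch below counts requests per user agent and path from Combined Log Format lines; the user-agent substrings (`PerplexityBot`, `Perplexity-User`) and the log format are assumptions, so confirm both against your server configuration and the vendor's current guidance. User-agent strings can be spoofed, so treat the counts as indicative rather than verified.

```python
import re
from collections import Counter

# Assumed user-agent substrings; confirm current values with the vendor.
PERPLEXITY_AGENTS = ("PerplexityBot", "Perplexity-User")

# Combined Log Format: ip ident user [time] "METHOD path PROTO" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def perplexity_hits(lines):
    """Count requests per (agent, path) for the assumed Perplexity user agents."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in Combined Log Format
        for agent in PERPLEXITY_AGENTS:
            if agent in match["ua"]:
                hits[(agent, match["path"])] += 1
                break
    return hits


sample = [
    '203.0.113.7 - - [10/Oct/2025:13:55:36 +0000] "GET /guides/ai-search HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '198.51.100.2 - - [10/Oct/2025:13:57:12 +0000] "GET /pricing HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
]
print(perplexity_hits(sample))
```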

Does structured data guarantee inclusion?

No; it helps search engines understand content, but inclusion depends on overall relevance and quality (Google AI features and your website).

Closing: your next sprint

Pick one cluster. Set up the SoA sheet, GA4 events, and CRM fields. Publish one spoke this week with answer-first structure, schema, and FAQs. In 30 days, you should have early citations, momentum, and a story the business will care about.

Soft CTA: Want less tooling overhead? Try QuickCreator to ship clustered, AI-friendly content faster.