How AI-Powered Sentiment Analysis Is Revolutionizing Personalized Content Marketing in 2025

Why this shift matters right now

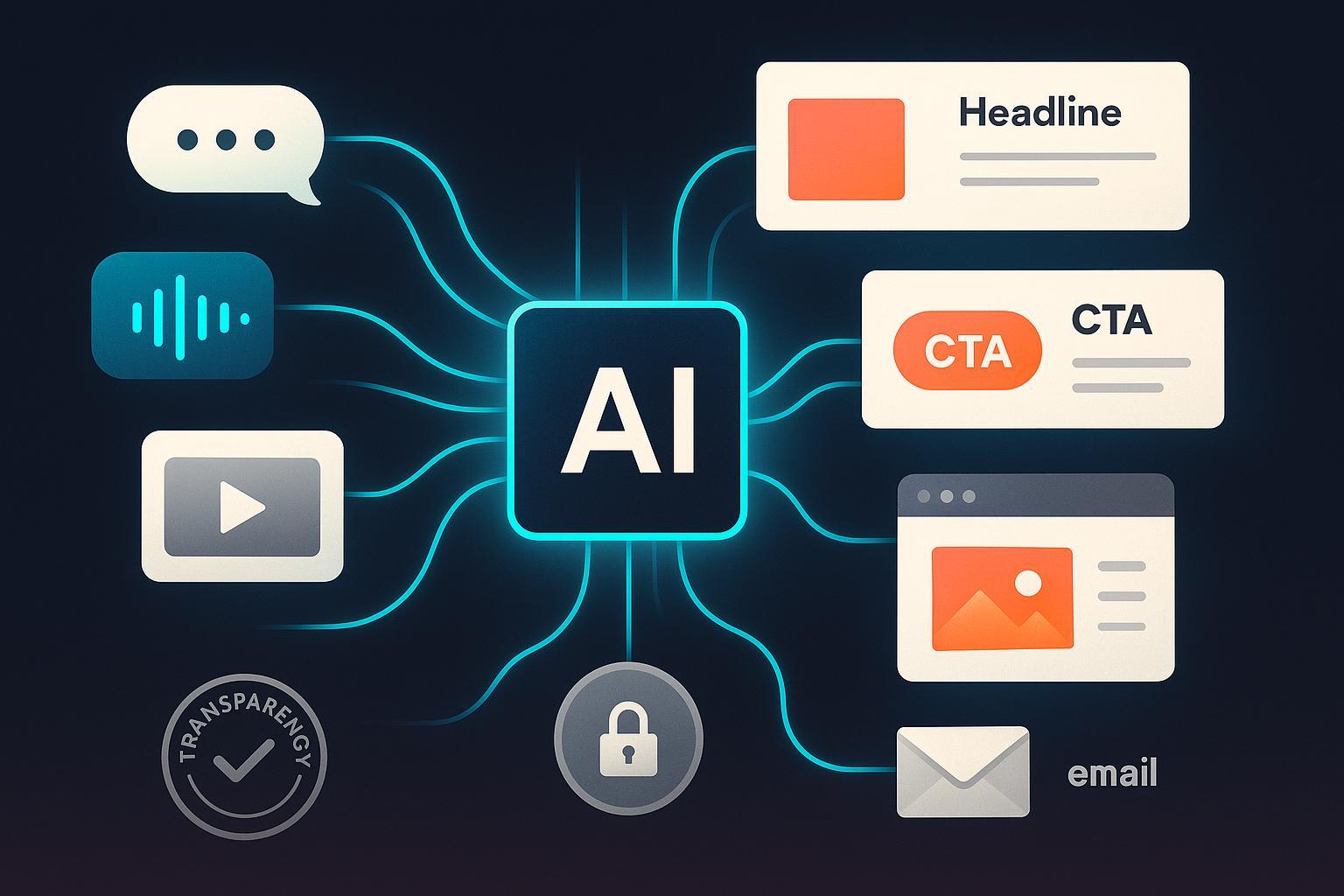

Two forces are converging in 2025 to reshape personalized content marketing: breakthroughs in AI-driven sentiment analysis and the maturing reality of privacy-first activation. Multimodal models that read text, voice, and visuals are getting better at recognizing not just overall polarity but the specific aspects people feel strongly about—shipping, price, UX friction, a feature you launched last week. At the same time, the post-cookie landscape rewards brands that can personalize using first-party and contextual signals without invasive tracking.

For marketing leaders, the takeaway is practical: when you can detect what a customer feels about a particular moment or feature, you can choose more relevant copy, creative, and help content—on the fly and within compliance guardrails. That’s the difference between dashboards that look smart and content experiences that drive measurable lift.

What changed in 2025: the tech and the rules

On the technical side, multimodal sentiment models have advanced meaningfully. A tri-modal dynamic attention approach reported accuracy and F1 gains over text-only baselines on the CMU‑MOSEI benchmark, showing how audio and video cues complement text for emotion and sentiment prediction, according to the arXiv 2025 dynamic attention study. Separately, a multi‑layer feature fusion framework demonstrated strong precision and recall for multimodal emotion recognition in peer‑reviewed work from Nature Scientific Reports (2025), underscoring the value of cross‑modal feature integration. These aren’t vendor demos; they’re part of a broader evaluation push that is improving robustness and explainability.

On the regulatory side, the EU AI Act began enforcing key provisions in 2025. AI systems that infer emotions from biometric data are prohibited in workplaces and educational institutions (with narrow medical and safety exceptions), while text-only sentiment analysis of written content generally falls outside those bans. Marketers should still observe transparency obligations when AI shapes user experiences, per the European Parliament’s AI Act explainer (updated 2025) and the European Commission’s early guidance summarized in WSGR’s prohibited practices note (Feb 2025). In parallel, Google’s 2025 pivot kept third‑party cookies available via user choice while continuing development of Privacy Sandbox APIs. Marketers should treat the Topics and Attribution Reporting APIs as complementary tools alongside first-party and contextual signals, not as replacements for them, as outlined in the Privacy Sandbox “Next steps” update (Apr 2025).

Bottom line: better models and clearer guardrails make it feasible to use sentiment for personalization—provided you design for privacy and measure rigorously.

Text vs. multimodal: a decision framework you can use

Not every use case needs video and voice. Use these criteria to decide:


Start text-only when:

- You primarily analyze written sources (reviews, surveys, support tickets, on‑site feedback).

- You need fast deployment without new data collection.

- You operate in regions or contexts where biometric signals introduce risk or friction.


Consider multimodal when:

- You lawfully collect audio/video with explicit consent (e.g., call‑center QA or opted‑in research panels).

- Non‑text cues materially improve outcomes (e.g., detecting frustration in a support call that text alone misses).

- You can absorb the added engineering and governance overhead (secure storage, retention limits, DPIAs).


Upgrade to aspect-level sentiment (ABSA) in either path:

- Extract sentiments tied to specific aspects (e.g., “checkout speed,” “pricing transparency,” “refund experience”).

- Use LLM‑assisted ABSA to reduce labeling needs and scale to new categories/languages, building on 2025 research trajectories discussed in ACL venues.
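To make aspect-level output concrete, here is a minimal Python sketch of what ABSA results can look like. It is a toy, not a model: the hypothetical `ASPECT_KEYWORDS` and `POLARITY_WORDS` lexicons stand in for a trained or LLM-assisted ABSA model, and every aspect mentioned in a clause inherits that clause's polarity. The part worth reusing is the output shape (aspect, polarity, evidence), which downstream decision rules can consume.

```python
import re
from dataclasses import dataclass

# Hypothetical aspect vocabulary; real systems learn or prompt for aspects.
ASPECT_KEYWORDS = {
    "checkout speed": ["checkout", "payment page"],
    "pricing transparency": ["price", "pricing", "fee"],
    "refund experience": ["refund", "return"],
}

# Toy polarity lexicon standing in for a sentiment model.
POLARITY_WORDS = {
    "fast": 1, "quick": 1, "easy": 1, "clear": 1,
    "slow": -1, "confusing": -1, "hidden": -1, "forever": -1,
}


@dataclass
class AspectSentiment:
    aspect: str
    polarity: int  # -1 negative, 0 neutral, +1 positive
    evidence: str  # clause that produced the match


def extract_aspects(text: str) -> list[AspectSentiment]:
    """Split text into clauses; tag each aspect a clause mentions with its polarity."""
    results = []
    for clause in re.split(r"[.!?;,]|\bbut\b", text.lower()):
        words = re.findall(r"[a-z']+", clause)
        score = sum(POLARITY_WORDS.get(w, 0) for w in words)
        polarity = (score > 0) - (score < 0)  # sign of the clause score
        for aspect, keywords in ASPECT_KEYWORDS.items():
            # Whole-word match with optional plural "s" ("fee" matches "fees", not "feedback").
            if any(re.search(rf"\b{re.escape(k)}s?\b", clause) for k in keywords):
                results.append(AspectSentiment(aspect, polarity, clause.strip()))
    return results


for item in extract_aspects("Checkout was fast, but the refund took forever and fees were hidden."):
    print(item.aspect, item.polarity, "|", item.evidence)
```

In this example, "pricing transparency" and "refund experience" share one clause and therefore one polarity; separating sentiment per aspect within a clause is exactly what a real ABSA model adds over this lexicon stand-in.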

From insight to activation: a cookieless-friendly workflow

Here’s a practical, compliance-aware playbook to turn sentiment into personalized content that performs—even without cross‑site identifiers.

Ingest and classify

- Sources: first‑party text (on‑site feedback, CRM, chat logs, email replies), public reviews, and social listening feeds where terms of use and consent are clear.

- Modeling: start with text-based sentiment; add ABSA to map emotions to concrete aspects; evaluate multimodal only where consent and ROI justify it.

Create modular content variants

- Build swappable blocks for headlines, proof points, FAQs, objection handlers, and help content aligned with common sentiment patterns and aspects. If your team needs a fast way to assemble and iterate on modular content, the QuickCreator AI – Comprehensive Review gives a helpful overview of its block-based editor and AI writing workflow, including how to structure reusable sections.

- Activate: deploy those variants directly in a CMS or editor. For example, QuickCreator can help teams generate sentiment-aligned variants and set up A/B tests without heavy engineering. Disclosure: QuickCreator is our product.

Define decision rules (privacy-first)

- Real-time: if incoming signals indicate negative sentiment around “refund experience,” prioritize empathetic copy and clear policy guidance; if positive around “feature X,” surface advanced tips or social proof.

- Session-level (cookieless): swap on-page modules using first‑party, consented signals from the current session or account context—no cross‑site tracking required.

- Audience building: roll up aspect-level signals into first‑party segments (e.g., “power users praising feature Y,” “price-sensitive churn risk”) and activate them via email and on-site experiences and, where relevant, through privacy-preserving options such as the Topics API or data clean rooms.

- On-site and in-app: tailor benefit blocks, FAQs, and CTA tone to match sentiment clusters.

- Email/CRM: trigger nurturing paths for neutral/curious cohorts; send resolution content for negative service moments.

- Paid media: where allowed, use clean-room audiences or privacy APIs to align creative tone with dominant sentiment themes (avoid personal profiling in restricted contexts).
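The real-time and session-level rules above can be expressed as a small lookup with two guardrails: an opt-out check and a confidence threshold. The sketch below assumes the sentiment model emits an aspect, a polarity, and a confidence score for each in-session signal; `SessionSignal`, `choose_module`, the module IDs, the `RULES` table, and the 0.8 threshold are all hypothetical placeholders for your own content blocks and validated values.

```python
from dataclasses import dataclass

# Hypothetical module IDs for swappable CMS blocks.
DEFAULT_MODULE = "standard_benefits"

# (aspect, polarity) -> module, mirroring the example rules in the text.
RULES = {
    ("refund experience", -1): "empathetic_refund_policy",
    ("feature x", 1): "advanced_tips_social_proof",
}

# Assumed threshold; validate against labeled data before relying on it.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class SessionSignal:
    aspect: str
    polarity: int      # -1 negative, 0 neutral, +1 positive
    confidence: float  # model confidence in [0, 1]


def choose_module(signals: list[SessionSignal], opted_out: bool) -> str:
    """Choose a content module from first-party, in-session signals only.

    Returns the default module when the visitor has opted out of
    personalization or when no confident signal matches a rule.
    """
    if opted_out:
        return DEFAULT_MODULE
    confident = [s for s in signals if s.confidence >= CONFIDENCE_THRESHOLD]
    # Try the most confident signals first.
    for signal in sorted(confident, key=lambda s: s.confidence, reverse=True):
        module = RULES.get((signal.aspect, signal.polarity))
        if module is not None:
            return module
    return DEFAULT_MODULE


signals = [
    SessionSignal("refund experience", -1, 0.92),
    SessionSignal("feature x", 1, 0.85),
]
print(choose_module(signals, opted_out=False))  # empathetic_refund_policy
```

Falling back to a default module rather than guessing keeps opted-out or low-confidence sessions on neutral content, and nothing in the function needs a cross-site identifier.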

Measure and learn

- KPIs by funnel stage: CTR, scroll depth, and time on content; lead capture; conversion; 30/60/90‑day retention; and CSAT/NPS deltas.

- Experiment design: use A/B tests or multi‑armed bandits for tone and offer; use geo experiments or holdout groups to measure ad lift where platforms allow; validate with marketing mix modeling.

- Model guardrails: track false positives/negatives; set confidence thresholds for when automated swaps are allowed; review model drift monthly.
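For the monthly drift review, one common check is the population stability index (PSI), which compares this month's distribution of predicted sentiment labels with a baseline month. Below is a minimal sketch, assuming three labels and non-empty samples; the label names and example counts are illustrative, not real data.

```python
from collections import Counter
from math import log

LABELS = ("negative", "neutral", "positive")


def label_shares(labels, categories=LABELS, eps=1e-4):
    """Share of each category; eps floors empty bins so the log stays finite."""
    counts = Counter(labels)
    total = len(labels)  # assumes a non-empty sample
    return {c: max(counts[c] / total, eps) for c in categories}


def population_stability_index(baseline, current, categories=LABELS):
    """PSI = sum over categories of (actual - expected) * ln(actual / expected)."""
    expected = label_shares(baseline, categories)
    actual = label_shares(current, categories)
    return sum(
        (actual[c] - expected[c]) * log(actual[c] / expected[c]) for c in categories
    )


# Hypothetical monthly samples of predicted labels.
baseline = ["negative"] * 20 + ["neutral"] * 50 + ["positive"] * 30
current = ["negative"] * 35 + ["neutral"] * 40 + ["positive"] * 25
print(round(population_stability_index(baseline, current), 3))  # 0.115
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a major shift, but thresholds are best set against your own history. A rising PSI can also mean customer sentiment genuinely changed rather than the model degrading, so it pairs well with periodic checks against human-labeled samples.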

Evidence to keep your team honest

- Multimodal effectiveness: The 2025 studies cited earlier support cross‑modal attention and fusion: the arXiv dynamic attention paper (2025) reported gains over text-only baselines, and Nature Scientific Reports’ 2025 multi‑layer fusion work showed the value of cross‑modal feature integration. Treat vendor claims carefully and corroborate them with your own A/B tests.

- ROI realism: Personalization drives value when measured with rigor. McKinsey’s 2025 personalization insight emphasizes disciplined KPI frameworks and testing for revenue impact; use it as a guidepost for methodology rather than a source of one‑size‑fits‑all lift numbers.

- Privacy posture: With Google maintaining cookies via user choice and advancing Sandbox APIs, adopt a blended approach that leans on first‑party/contextual signals and treats Topics/Attribution as complementary tools per the Privacy Sandbox update (Apr 2025). In the EU, steer clear of biometric emotion recognition in prohibited contexts and maintain clear disclosures guided by the EU Parliament AI Act explainer (2025) and WSGR’s 2025 analysis.

Compliance and governance checklist (2025-ready)

- Lawful basis and consent: use first‑party text data with explicit consent where needed; document terms for any social/public data ingestion.

- Biometric caution: avoid emotion inference from facial, voice, or physiological data in prohibited EU contexts; conduct DPIAs for any multimodal pipeline.

- Transparency: add plain-language notices such as “We use AI to tailor content based on your feedback,” and provide an opt-out.

- Privacy by design: minimize data retention; prefer on‑device/in‑browser computation where possible; vet vendor subprocessors and cross‑border transfers.

- Bias and fairness: audit sentiment skew across languages, dialects, and demographics; include human-in-the-loop review for high-impact decisions.

- Documentation: keep a change-log for model updates affecting user experience; record evaluation metrics and error analyses.
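A skew audit can start as simply as comparing accuracy by group on a human-labeled evaluation sample. The sketch below assumes records of (group, true label, predicted label), grouped here by language; the `audit_by_group` helper, the 0.05 gap tolerance, and the sample records are hypothetical, and a real audit would need far larger samples per group.

```python
from collections import defaultdict


def audit_by_group(records, max_gap=0.05):
    """Per-group accuracy of a sentiment model on human-labeled data.

    records: iterable of (group, true_label, predicted_label).
    Returns (accuracy_by_group, flagged_groups); a group is flagged when its
    accuracy trails the best-performing group by more than max_gap.
    """
    total = defaultdict(int)
    correct = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    if not total:
        return {}, []
    accuracy = {g: correct[g] / total[g] for g in total}
    best = max(accuracy.values())
    flagged = sorted(g for g, acc in accuracy.items() if best - acc > max_gap)
    return accuracy, flagged


# Hypothetical labeled sample, grouped by language.
sample = [
    ("en", "negative", "negative"), ("en", "positive", "positive"),
    ("en", "neutral", "neutral"), ("en", "negative", "negative"),
    ("es", "negative", "neutral"), ("es", "positive", "positive"),
    ("es", "negative", "negative"), ("es", "neutral", "neutral"),
]
accuracy, flagged = audit_by_group(sample)
print(accuracy, flagged)
```

Accuracy alone can hide skew within a single class, so a fuller audit would also compare per-class recall (for instance, how often genuinely negative feedback is caught in each language) and record the results alongside the model change-log.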

What “good” looks like in activation

- A consumer electronics brand identifies aspect-level frustration around “setup complexity.” On the product page, users showing those signals see a simplified quick-start module plus a short video; neutral users see social proof; power users see advanced tips. The team observes improved time-on-page and a statistically significant lift in add‑to‑cart among the “frustration” cohort after two weeks.

- A B2B SaaS company finds strong positive sentiment around “time-to-value” in reviews. It swaps case study blocks to emphasize rapid deployment and adds a comparison table for the “evaluation” segment. Follow-up emails switch to action‑oriented CTAs rather than long nurture copy, improving reply rates and qualified demo conversions.

These examples are representative patterns, not vendor-attributed case studies. Treat them as starting templates for your own experiments.
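A claim like "statistically significant lift in add-to-cart" implies a concrete test. For conversion-style metrics, a two-proportion z-test is a standard starting point. The sketch below uses the pooled-variance normal approximation; the `two_proportion_z_test` helper and the session and conversion counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Uses the pooled-variance normal approximation, so it assumes reasonably
    large samples. Returns (absolute lift of B over A, z statistic, p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return p_b - p_a, z, p_value


# Hypothetical counts: add-to-carts / sessions for control (A) and the
# quick-start module shown to the "frustration" cohort (B).
lift, z, p = two_proportion_z_test(conv_a=200, n_a=4000, conv_b=260, n_b=4000)
print(f"lift={lift:.2%}, z={z:.2f}, p={p:.4f}")
```

Fixing the sample size and test duration before launch, and evaluating once at the end, matters here: checking a running test repeatedly and stopping at the first significant result inflates false positives.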

Looking ahead: search and discovery will reward emotional relevance

As large language model–infused search and content experiences evolve, content that aligns with user intent and emotion will gain an edge. If you’re planning for this shift, you’ll want modular, high-quality assets that can be recombined to match sentiment and intent states. For additional context on how AI-driven discovery is changing visibility dynamics, see this overview of LLMO (large language model optimization) in marketing and why it matters.

Next steps for your team

- Scope a 6–8 week pilot: start with text-based ABSA on first‑party data; build three modular variants for your top two pages; define KPIs and a testing plan.

- Establish compliance guardrails: add transparency language, confirm consent flows, and set retention limits.

- Instrument measurement: set up A/B or bandit tests, confidence thresholds, and a weekly review rhythm.

- Tooling: If you need an efficient way to produce and test sentiment-aligned content modules without extra engineering, consider using QuickCreator’s block-based editor and AI workflow to accelerate production and iteration.
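If the pilot uses a bandit rather than a fixed A/B split, Thompson sampling is one common way to allocate traffic among tone or offer variants. Below is a minimal sketch with uniform Beta(1, 1) priors; `thompson_pick`, `record`, and `simulate` are hypothetical helpers, and the variant names and true conversion rates exist only to drive the simulation.

```python
import random


def thompson_pick(stats, rng=random):
    """Pick a variant by Thompson sampling.

    stats maps variant -> (successes, failures). Each variant's conversion rate
    has a Beta(1 + successes, 1 + failures) posterior; draw once from each and
    serve the variant with the highest draw.
    """
    draws = {v: rng.betavariate(1 + s, 1 + f) for v, (s, f) in stats.items()}
    return max(draws, key=draws.get)


def record(stats, variant, converted):
    """Update success/failure counts after observing one outcome."""
    s, f = stats[variant]
    stats[variant] = (s + 1, f) if converted else (s, f + 1)


def simulate(true_rates, rounds=5000, seed=0):
    """Simulate traffic against hypothetical true conversion rates."""
    rng = random.Random(seed)
    stats = {v: (0, 0) for v in true_rates}
    served = {v: 0 for v in true_rates}
    for _ in range(rounds):
        v = thompson_pick(stats, rng)
        served[v] += 1
        record(stats, v, rng.random() < true_rates[v])
    return served


served = simulate({"neutral": 0.04, "empathetic": 0.10, "urgent": 0.06})
print(served)  # most traffic should flow to "empathetic"
```

Bandits shift traffic toward the leading variant as evidence accumulates, which lowers the cost of exploring weak variants but makes an unbiased lift estimate harder to read off; a fixed-split A/B test remains the simpler way to report a single lift number.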

—

Updated: October 2025