AI-Powered Search Intent Mapping Guide

If your content plan still treats “keywords = pages,” you’re leaving opportunity on the table. Here’s the deal: modern SERPs—and AI-driven answer surfaces—telegraph intent in plain sight. This guide shows you a repeatable, AI-assisted workflow to decode those signals, label large keyword sets with precision, and ship content that satisfies real user jobs-to-be-done.

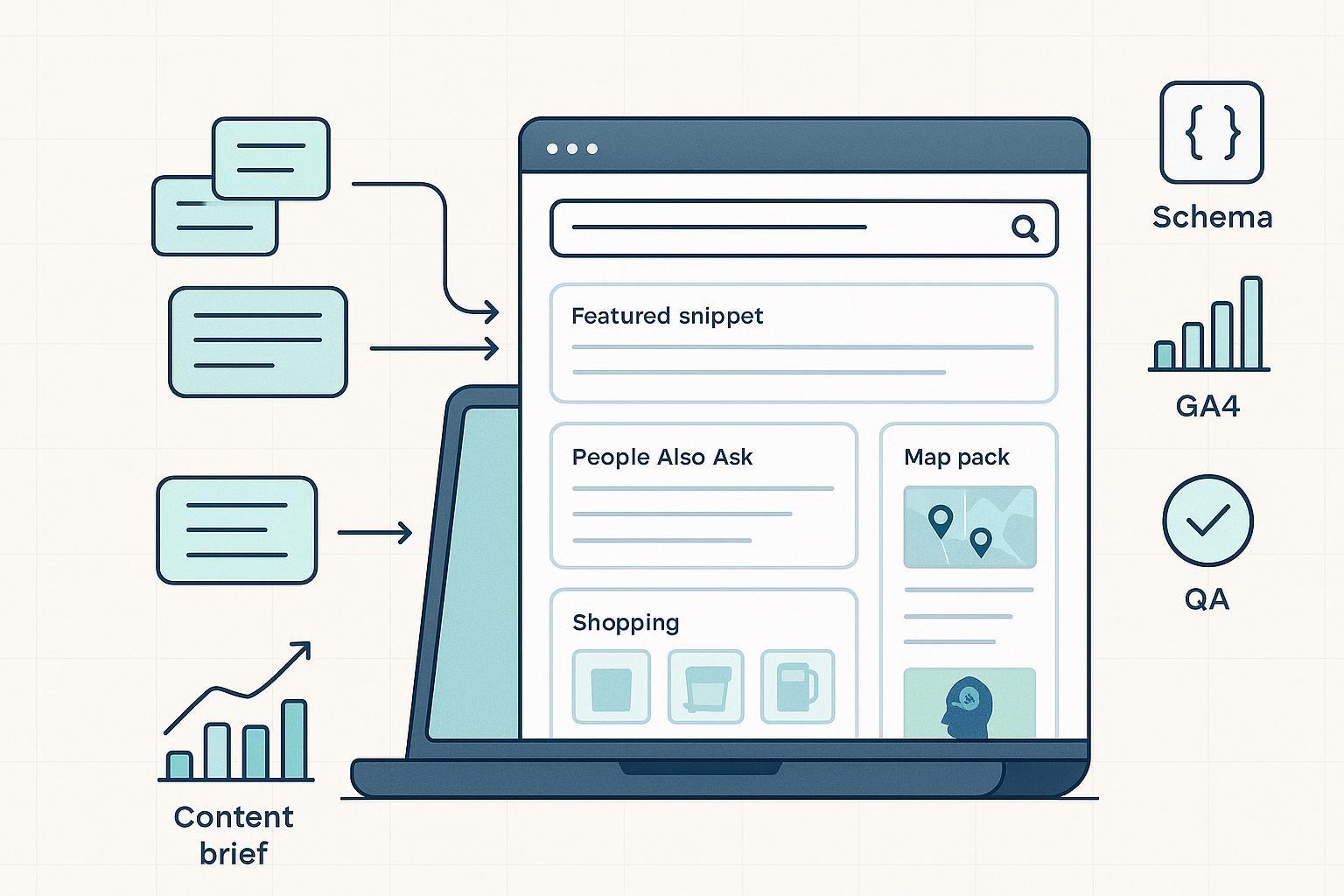

We’ll move from data intake to clustering, from split-or-combine decisions to content briefs and structured data, and we’ll close the loop with GA4/GSC measurement. Along the way, we’ll reference current guidance on succeeding in AI search from Google and recent studies on SERP features and engagement.

1) Intent that fits how SERPs actually work

Search intent still anchors on four buckets (informational, navigational, commercial investigation, transactional), but the SERP often blends them. The practical rule is simple: validate intent by reading today’s SERP, not a template. As a 2025 overview of SERP features explains, snippets, PAA boxes, reviews, and shopping modules are direct intent signals when you read them in the context of the surrounding result types, not in isolation (see the feature taxonomy in Nightwatch’s 2025 overview of what SERP data reveals).

Mixed intent is normal. When should you ship one page versus multiple? Think of it this way:

- Combine when the SERP intermixes formats and a single, well-structured page can guide users from comparison to a gentle CTA without whiplash. Studies and practitioner guides note this pattern is common with tech SaaS and consumer electronics.

- Split when the SERP segregates formats—e.g., distinct “pricing” results versus “how-to” guides—or when the secondary intent is a different job entirely. Several operational guides recommend confirming the dominant intent via top-10 content type and angle before deciding to split or combine (see Surfer’s 2025 primer on search intent and page types).

2) Decode SERP signals (including AI Overviews)

SERP features are not just ornaments; they’re a blueprint for content type, format, and schema. Google’s 2025 guidance on AI features emphasizes unique, well-structured content, strong sourcing, and machine readability—clear sectioning, tables, definitions, and supported structured data improve parsing and the chance of citation in AI experiences (see Google’s note on succeeding in AI search).

Below is a practical mapping you can use during planning.

| SERP feature | Likely intent | Content format cues | Structured data to consider |
|---|---|---|---|
| Featured snippet, PAA | Informational | Clear definitions, step-by-step sections, concise Q&A blocks | Article, FAQPage, HowTo (where eligible and aligned to content) |
| Knowledge panel/entity | Navigational/brand | Canonical entity/about page, brand FAQs, trust signals | Organization, WebSite, sameAs links |
| Local pack/maps | Local transactional | Local landing pages, NAP accuracy, reviews, hours | LocalBusiness, Organization |
| Reviews/ratings | Commercial investigation | Pros/cons, UGC highlights, comparison sections | Product, Review, AggregateRating |
| Pricing/comparison modules, shopping | Commercial/transactional | Comparison tables, specs, availability | Product, Offer, Merchant listings fields |
| AI summaries/AI Mode elements | Multi-faceted info/commercial | Comprehensive resource with tables, definitions, citations | Article/Product + relevant, valid JSON-LD |
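If you want to apply the mapping above programmatically, a lookup table is usually enough. The sketch below is one possible encoding, not a standard: the feature keys (`featured_snippet`, `paa`, and so on) and the `read_serp` helper are hypothetical names chosen for illustration, while the intents and schema types mirror the table.

```python
from collections import Counter

# Hypothetical feature keys; intents and schema types mirror the table above.
SERP_FEATURE_MAP = {
    "featured_snippet": ("Informational", ["Article", "FAQPage", "HowTo"]),
    "paa": ("Informational", ["Article", "FAQPage", "HowTo"]),
    "knowledge_panel": ("Navigational/brand", ["Organization", "WebSite"]),
    "local_pack": ("Local transactional", ["LocalBusiness", "Organization"]),
    "reviews": ("Commercial investigation", ["Product", "Review", "AggregateRating"]),
    "shopping": ("Commercial/transactional", ["Product", "Offer"]),
    "ai_summary": ("Multi-faceted info/commercial", ["Article", "Product"]),
}


def read_serp(features):
    """Return (intent vote counts, de-duplicated schema candidates)."""
    votes = Counter()
    schema = []
    for feature in features:
        if feature not in SERP_FEATURE_MAP:
            continue  # unknown features are skipped rather than guessed
        intent, types = SERP_FEATURE_MAP[feature]
        votes[intent] += 1
        for schema_type in types:
            if schema_type not in schema:
                schema.append(schema_type)
    return votes, schema


votes, schema = read_serp(["featured_snippet", "paa", "reviews"])
print(votes.most_common(1), schema)
```

Treat the vote count as a starting hypothesis only; the top-10 content types still decide the final label.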

Two realities matter. First, AI summaries occupy a meaningful share of desktop queries in 2025 and often correlate with reduced clicks to traditional listings; the magnitude varies by cohort and study, but zero-click behavior rises when summaries appear. Second, local-intent queries still predominantly surface local packs. AI elements may appear above the pack on hybrid queries, so monitor each vertical separately.

3) Build the data pipeline (inputs you can trust)

Start with queries and enrich them before you ever write a line.

- Pull query data from Google Search Console and your preferred keyword tools. Add device and country where relevant. If you like SERP overlays in-browser for spot checks, you can configure them; if needed, here’s a neutral setup explainer for the SEOquake extension.

- Scrape or record SERP features and top-10 content types for each representative keyword. A lightweight crawler or manual sampling works; just log the method you used so the results can be reproduced.

- Bring in GA4 engagement context by page type where available—engaged sessions, average engagement time, scroll depth, and key events are a solid baseline. This helps later when you assess intent fulfillment.

Use a compact schema to keep your rows auditable.

keyword,seed_intent,serp_features,top10_content_types,llm_intent,confidence,split_or_combine,page_type,recommended_schema,kpis

"best email tools","commercial","snippet|paa|reviews","listicles|comparisons|vendor pages","Commercial Investigation",0.82,"combine","comparison hub","Product|Review","engaged sessions, return rate, demo requests"

"mailchimp pricing","transactional","pricing|shopping","pricing pages|faq","Transactional",0.9,"split","pricing page","Product|Offer","click to pricing, conversion rate"
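To keep rows like these auditable, it helps to parse and validate them before they enter the pipeline. Here is a minimal sketch using Python's standard `csv` module. It assumes that pipe characters separate multi-value fields (as in the sample rows) and that `split_or_combine` only takes the two values shown; the `load_rows` helper is a hypothetical name.

```python
import csv
import io

SAMPLE = '''keyword,seed_intent,serp_features,top10_content_types,llm_intent,confidence,split_or_combine,page_type,recommended_schema,kpis
"best email tools","commercial","snippet|paa|reviews","listicles|comparisons|vendor pages","Commercial Investigation",0.82,"combine","comparison hub","Product|Review","engaged sessions, return rate, demo requests"
"mailchimp pricing","transactional","pricing|shopping","pricing pages|faq","Transactional",0.9,"split","pricing page","Product|Offer","click to pricing, conversion rate"
'''

# Assumption: these columns hold pipe-delimited lists, as in the sample rows.
PIPE_FIELDS = ("serp_features", "top10_content_types", "recommended_schema")


def load_rows(text):
    """Parse the CSV text and fail loudly on rows that break the schema."""
    rows = []
    for raw in csv.DictReader(io.StringIO(text)):
        row = dict(raw)
        for field in PIPE_FIELDS:
            row[field] = row[field].split("|")
        row["kpis"] = [kpi.strip() for kpi in row["kpis"].split(",")]
        row["confidence"] = float(row["confidence"])
        if not 0.0 <= row["confidence"] <= 1.0:
            raise ValueError(f"confidence out of range for {row['keyword']!r}")
        if row["split_or_combine"] not in {"split", "combine"}:
            raise ValueError(f"unexpected decision for {row['keyword']!r}")
        rows.append(row)
    return rows


rows = load_rows(SAMPLE)
for row in rows:
    print(row["keyword"], row["llm_intent"], row["confidence"])
```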

4) LLM-assisted clustering and labeling (with guardrails)

LLMs can cluster and label at scale, but you need thresholds and a human review loop. A common pattern is to group by semantic similarity and SERP overlap, label with dominant intent, then route low-confidence items to manual checks. In practice, teams set acceptance thresholds—e.g., SERP overlap ≥70%, similarity ≥0.75, and ≥60% dominant intent within a cluster—and send anything fuzzier to a human-in-the-loop pass. This mirrors 2025 playbooks for search teams operationalizing LLM outputs safely, emphasizing logs and re-evaluation against fresh SERP data every quarter.
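The acceptance thresholds above translate directly into a routing check. This is a sketch under stated assumptions: the `ClusterStats` fields and the `route_cluster` function are hypothetical names, and how you compute overlap and similarity upstream is up to your tooling. Only the three threshold values come from the example in the text.

```python
from dataclasses import dataclass

# Threshold values taken from the example above; tune them for your own data.
MIN_SERP_OVERLAP = 0.70
MIN_SIMILARITY = 0.75
MIN_DOMINANT_SHARE = 0.60


@dataclass
class ClusterStats:
    serp_overlap: float           # share of top-10 URLs shared across keywords (0..1)
    similarity: float             # mean semantic similarity within the cluster (0..1)
    dominant_intent_share: float  # share of keywords with the most common label (0..1)


def route_cluster(stats):
    """Return ("accept" or "human_review", list of failed checks)."""
    failures = []
    if stats.serp_overlap < MIN_SERP_OVERLAP:
        failures.append("serp_overlap")
    if stats.similarity < MIN_SIMILARITY:
        failures.append("similarity")
    if stats.dominant_intent_share < MIN_DOMINANT_SHARE:
        failures.append("dominant_intent_share")
    return ("accept" if not failures else "human_review", failures)


print(route_cluster(ClusterStats(0.82, 0.79, 0.66)))
print(route_cluster(ClusterStats(0.55, 0.79, 0.66)))
```

Returning the failed checks alongside the decision gives reviewers a reason for each escalation, which also makes the logs easier to audit later.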

Here’s a prompt pattern you can adapt.

Role: Senior SEO analyst.

Task: For each keyword, classify intent (Informational, Navigational, Commercial Investigation, Transactional). Infer the dominant intent from top-10 result types and SERP features. List 2 latent subqueries. Output JSON with fields: keyword, intent, rationale, latent_subqueries, confidence.

Constraints:

- If SERP signals conflict, default to the intent represented by the majority of top-10 results.

- Flag low confidence (<0.7) for human review.

- Never invent features or brands; base rationale on observed patterns.

Examples:

- "how to write a press release" → Informational; how-to guides dominate; latent: "press release template", "press release example".

- "[brand] login" → Navigational; brand domain + login pages dominate; latent: "password reset", "2FA".
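Whatever model you use, validate its JSON before trusting it. The sketch below checks one response against the fields and the 0.7 review threshold named in the prompt. The `check_label` helper is a hypothetical name, and the exact shape of the model's response is an assumption based on the prompt's output spec.

```python
import json

ALLOWED_INTENTS = {
    "Informational",
    "Navigational",
    "Commercial Investigation",
    "Transactional",
}
REQUIRED_FIELDS = ("keyword", "intent", "rationale", "latent_subqueries", "confidence")
REVIEW_THRESHOLD = 0.7  # from the prompt's low-confidence constraint


def check_label(raw_json):
    """Parse one LLM response; return (record, needs_review, problems)."""
    try:
        record = json.loads(raw_json)
    except json.JSONDecodeError:
        return None, True, ["invalid JSON"]
    if not isinstance(record, dict):
        return None, True, ["not a JSON object"]

    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in record]
    if record.get("intent") not in ALLOWED_INTENTS:
        problems.append("unknown intent")

    confidence = record.get("confidence")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        problems.append("confidence is not a number")
        low_confidence = True
    else:
        low_confidence = confidence < REVIEW_THRESHOLD

    # Anything malformed or below the threshold goes to a human.
    return record, bool(problems) or low_confidence, problems


sample = json.dumps({
    "keyword": "how to write a press release",
    "intent": "Informational",
    "rationale": "how-to guides dominate",
    "latent_subqueries": ["press release template", "press release example"],
    "confidence": 0.91,
})
print(check_label(sample)[1:])
```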

Why the rigor? Because intent is contextual. Google’s 2025 AI search notes emphasize machine-readable structure and verifiable claims; your labeling needs to echo that precision at the query level so you build the right page types in the first place.

5) Worked mini-example: From cluster to brief

Disclosure: QuickCreator is our product.

Scenario: Your cluster “email marketing software” has 120 keywords. SERP checks show comparisons, reviews, and a few vendor pages in the top results, plus snippets and PAA. Your LLM labeling yields “Commercial Investigation” with an average confidence of 0.78; roughly 15% of the set leans transactional (pricing/discount) and gets flagged for splitting.

Action plan:

- Decision: Combine for the main comparison hub, split out a dedicated pricing page. The hub covers evaluation jobs; pricing handles decision-stage specifics.

- Page type: Comparison hub with tabled specs, pros/cons, and vendor-verified details. Include a short “Who this is for” per tool and gentle CTAs.

- Schema: Product and Review markup for the table entries where content is visible and compliant; Organization for the site entity.

- Outline: Definitions up top for “what to expect from email software,” a sortable comparison table, scenario-based picks, FAQs harvested from PAA, and transparent methodology.

Example output workflow: After clustering, generate a draft brief with an LLM. Many teams use a writing platform to centralize SERP-informed outlines and schema notes; for instance, teams can use QuickCreator to support SERP-inspired outlines and embed FAQs and tables, then export to their CMS. Keep it replicable: the same brief could be assembled in any editor if you preserve the data columns and the outline logic.

6) Structured data priorities that matter in 2025

Structured data won’t rank a page on its own, but it helps machines understand your content and can make you eligible for special features, including some AI experiences. Google’s 2025 updates also removed support for several legacy structured data types; stick to what’s meaningful and supported, validate with the Rich Results Test, and mirror visible content. For commerce and review-heavy pages, ensure Product, Offer, and Review fields are accurate and match what is visible on the page; for brand and navigation, keep Organization and WebSite clean and corroborated.
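As a concrete illustration, here is a minimal sketch that builds Product JSON-LD from values you already show on the page. The `product_jsonld` helper and the sample values are hypothetical; the property names (`offers`, `aggregateRating`, `priceCurrency`, and so on) are standard schema.org vocabulary. Check Google's current documentation for which properties a given rich result requires.

```python
import json


def product_jsonld(name, price, currency, rating_value, review_count):
    """Build a Product JSON-LD string; every value should mirror visible page content."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
print(product_jsonld("Example Mailer", 19, "USD", 4.4, 128))
```

Generating the markup from the same data source that renders the page is one way to keep the two from drifting apart.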

7) Measurement: Prove intent fulfillment

Measure whether the page solved the job, not just whether it ranked.

- GA4: Track engaged sessions, engagement time, scroll depth, and key events per intent cluster. Funnel Exploration helps you see drop-offs by content type.

- GSC: Monitor queries and pages by mapped intent; watch impressions, clicks, and position as you refine content. Tag your pages with cluster IDs in your data warehouse for easier joins.

- Satisfaction proxies: Engagement time, return rate to the SERP, and “last/longest click” style patterns are helpful heuristics when used carefully. Treat them as proxies for satisfaction to guide optimization, not as ranking mechanics.
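The cluster-ID join mentioned above can be prototyped in a few lines before you build it in a warehouse. This sketch assumes a flattened GSC export where each row is a dict with `page`, `clicks`, and `impressions` keys, plus a hypothetical `page_to_intent` mapping that you maintain yourself.

```python
from collections import defaultdict


def ctr_by_intent(gsc_rows, page_to_intent):
    """Aggregate clicks, impressions, and CTR per mapped intent."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for row in gsc_rows:
        # Pages without a mapping are kept visible instead of silently dropped.
        intent = page_to_intent.get(row["page"], "unmapped")
        totals[intent]["clicks"] += row["clicks"]
        totals[intent]["impressions"] += row["impressions"]

    report = {}
    for intent, t in totals.items():
        ctr = t["clicks"] / t["impressions"] if t["impressions"] else 0.0
        report[intent] = {**t, "ctr": round(ctr, 4)}
    return report


# Illustrative rows, not real data.
rows = [
    {"page": "/email-tools", "clicks": 30, "impressions": 1000},
    {"page": "/email-tools", "clicks": 20, "impressions": 1000},
    {"page": "/pricing", "clicks": 10, "impressions": 100},
]
mapping = {"/email-tools": "Commercial Investigation", "/pricing": "Transactional"}
print(ctr_by_intent(rows, mapping))
```

A growing "unmapped" bucket is a useful signal in itself: it shows you pages that are earning impressions without an assigned intent.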

What about AI summaries? As of late 2025, there isn’t a universally available GSC dimension that isolates AI Overview presence and clicks. Rely on manual tracking and pattern recognition; correlate performance shifts with observed AI elements on your priority queries.

8) QA and governance you can live with

Great teams don’t just ship; they supervise. Implement a light but firm governance layer:

- Sampling plan: Manually review 10–20% of low-confidence labels and a smaller slice of high-confidence ones to catch drift.

- Versioning: Store cluster versions with timestamps, thresholds used, and human edits. Re-run clustering quarterly or after major SERP shifts.

- SERP spot-check rubric: Codify how you read the top-10—content types, angles, and features—so reviewers assess consistently.
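The sampling plan can be made reproducible with a seeded random draw. In this sketch, the 15% low-confidence rate sits inside the 10–20% range suggested above, while the 5% high-confidence rate and the 0.7 cutoff are assumptions you should adjust. `review_sample` is a hypothetical helper name.

```python
import math
import random


def review_sample(labels, threshold=0.7, low_rate=0.15, high_rate=0.05, seed=42):
    """Pick labels for manual review: a larger slice of low-confidence items,
    a smaller slice of high-confidence ones. A fixed seed keeps audits repeatable."""
    rng = random.Random(seed)
    low = [item for item in labels if item["confidence"] < threshold]
    high = [item for item in labels if item["confidence"] >= threshold]

    def pick(pool, rate):
        if not pool:
            return []
        # Always review at least one item from a non-empty pool.
        k = min(len(pool), max(1, math.ceil(len(pool) * rate)))
        return rng.sample(pool, k)

    return pick(low, low_rate), pick(high, high_rate)


# Illustrative labels, not real data.
labels = [{"keyword": f"kw{i}", "confidence": 0.5} for i in range(40)]
labels += [{"keyword": f"kw{i}", "confidence": 0.9} for i in range(40, 100)]
low_sample, high_sample = review_sample(labels)
print(len(low_sample), len(high_sample))
```

Store the seed and rates with each cluster version so a later reviewer can regenerate the same sample.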

A final note: keep your outlines transparent. Unique, well-sourced content with clear structure consistently correlates with stronger visibility and engagement in both traditional and AI-enhanced search experiences.

9) Your reusable toolkit

Two assets you can copy into your workflow today.

- Brief generator seed prompt

Given: intent, SERP features, top-10 content types, and user jobs-to-be-done.

Produce: an outline with H2/H3s, a comparison table spec (columns + data), a 6–8 question FAQ aligned to PAA, and recommended JSON-LD types.

Rules: keep sections skimmable; include definitions up top; add a methodology section; note which claims require citations.

- KPI stack by stage

  - Awareness (informational): impressions per cluster, engagement time, scroll depth.

  - Consideration (commercial): return rate to the hub, internal path into comparisons, tool/calculator interactions.

  - Decision (transactional): pricing clicks, demo requests/add-to-cart, conversion and assisted conversion share.

Want a hand pairing ideation with mapped intent? This explainer on AI-powered blog topic suggestions can help you move from topics to clusters without losing the user’s job-to-be-done.

10) Put it all together (and keep iterating)

You’ve got the pipeline: collect and enrich data, decode SERP signals, cluster and label with guardrails, decide when to split or combine, pick page type and schema, generate briefs, instrument measurement, and run your QA loop. Will every query behave neatly? Of course not—so let your thresholds route ambiguity to humans, and let your dashboards tell you when intent fulfillment is slipping. Ready to map a messy keyword universe into a clean plan you can defend?

If you want a central place to build SERP-informed outlines and ship consistently, you can use a platform like QuickCreator to support that workflow while keeping everything auditable and portable.