© Copyright 2023 Quick Creator - All Rights Reserved

Boost Conversion Rates with eCommerce A/B Testing & Site Optimization

Introduction

In today's fast-paced eCommerce industry, a poorly structured website can be detrimental to any business. Many online businesses struggle with low conversion rates because their sites are poorly optimized. This is where A/B testing comes into play. By conducting A/B tests, businesses can compare two variations of their website and determine which one performs better in terms of engagement and conversions. However, not all eCommerce owners have the expertise or resources to carry out these tests effectively. Fortunately, solutions such as Quick Creator now provide easy-to-use tools for optimizing websites without requiring extensive technical knowledge.

As more consumers turn towards online shopping, it has become increasingly important for eCommerce sites to have an effective digital presence that offers a seamless browsing experience and encourages purchases. While many businesses may already offer quality products or services, they may still struggle with converting visitors into customers due to issues related to site design or performance.

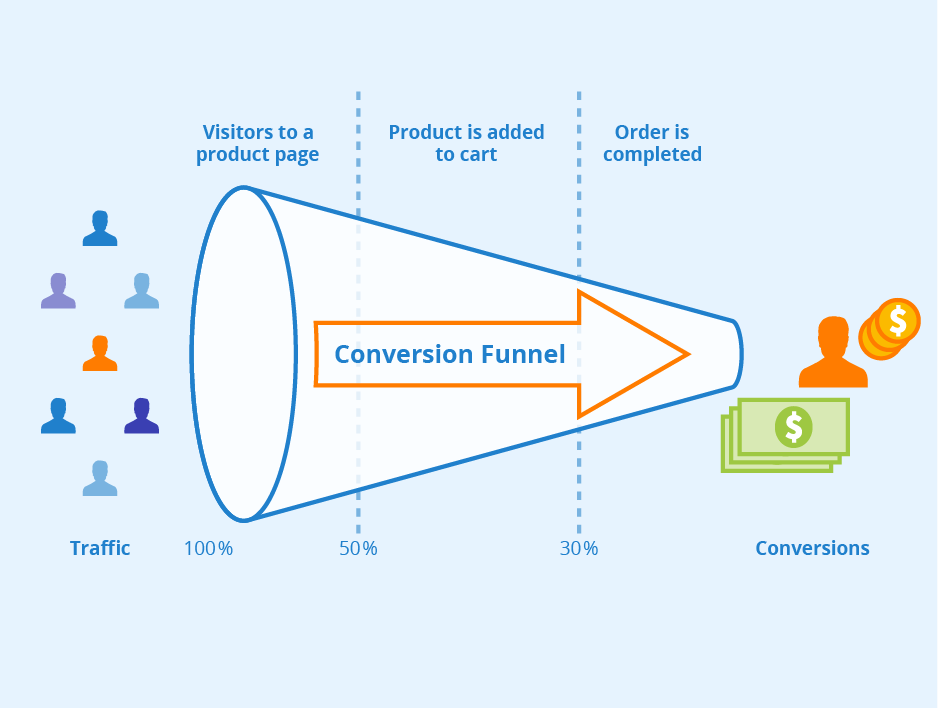

A common example is when potential customers abandon their shopping carts before completing a purchase due to difficulties navigating through the checkout process or finding relevant information about products on the site.

A/B testing helps here: by comparing different versions of a web page against each other using real user data collected from visits (such as click-through rates), businesses can identify which changes they need to make in order to increase conversions and improve overall customer satisfaction.

However, carrying out successful A/B tests requires technical skills, such as coding or analytics, that many business owners lack.

Thankfully, platforms like Quick Creator now make it easier for anyone, regardless of technical know-how, to create optimized websites through simple drag-and-drop interfaces. Their built-in automatic testing features help businesses stay ahead of the competition by delivering top-notch customer experiences at all times, even during peak periods when traffic volumes spike significantly!

What is A/B Testing?

A/B testing is a process of comparing two versions (A and B) of a webpage or an app to determine which one performs better in achieving the desired outcome. In eCommerce, A/B testing can be used to optimize conversion rates by testing different design elements such as headlines, images, colors, call-to-action buttons, and even page layout. By measuring user behavior through metrics like click-through rates and bounce rates, businesses can gain insights into what works for their target audience.
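In practice, the split between versions A and B is usually made per visitor, so that a returning shopper keeps seeing the same version. Below is a minimal sketch of one common approach, hashing a visitor ID together with an experiment name. The function name, experiment name, and visitor IDs are hypothetical, and this is not necessarily how Quick Creator or any particular testing tool assigns traffic.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one variant of an experiment.

    Hashing the experiment name together with the visitor ID means a returning
    visitor always sees the same variant, while separate experiments split
    traffic independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical visitor IDs and experiment name, for illustration only.
split = Counter(assign_variant(f"user-{i}", "cta-color") for i in range(10_000))
print(split)  # expect roughly half of the visitors in each variant
```

Because the assignment depends only on the IDs, no lookup table of "who saw what" is needed, and the same visitor gets a consistent experience across page loads.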

Benefits of A/B Testing for eCommerce sites

One major benefit of A/B testing is that it enables online business owners to make data-driven decisions instead of relying on guesswork or assumptions. By experimenting with different versions of their website or app, they can identify what resonates with users and adjust accordingly. This not only improves conversion rates but also enhances overall user experience.

Another advantage is that A/B tests allow businesses to identify potential roadblocks in the customer journey that may be hindering conversions. For example, if visitors are abandoning their carts at checkout due to complicated forms or unclear payment options, these areas can be optimized through targeted A/B tests.

Additionally, eCommerce companies often have multiple product pages with varying levels of traffic; using A/B tests allows them to optimize each page individually based on its unique audience behaviors rather than making blanket changes across all pages.

Examples of testable elements for A/B testing

There are numerous design elements that can undergo an A/B test depending upon your business goals:

Headlines: Test variations in wording, length, or tone.

Images: Test different visuals, sizes, colors, or positions.

Calls to action (CTAs): Experiment with CTA text, button color, or location.

Forms: Simplify form fields, reduce required information, or change label positioning.

Page Layouts: Try out different layouts, positions, or designs for content blocks, menus, pop-ups, etc.

Setting Up A/B Tests

A/B testing is an essential technique to optimize your eCommerce website and increase conversion rates. In this section, we'll discuss how to set up A/B tests for your online store.

Choosing Testable Elements

The first step in setting up A/B tests is choosing the elements that you want to test on your website. These elements can be anything from the color of the "Add to Cart" button to the placement of product images. When selecting testable elements, it's important to focus on those that have a significant impact on user behavior or sales conversions.

To identify which elements are worth testing, start by analyzing data from Google Analytics or other analytics tools. Look for areas where users are dropping off or abandoning their carts and consider whether changes in specific design elements could improve these metrics.
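Once you have step-by-step visitor counts from your analytics tool, the drop-off between funnel steps is simple arithmetic. The sketch below assumes you have already exported those counts; the function name, step names, and numbers are made up for illustration.

```python
def funnel_dropoff(steps):
    """Return (step, next_step, drop-off rate) for each consecutive pair of steps.

    `steps` is an ordered list of (step_name, visitor_count) tuples.
    """
    report = []
    for (name, count), (next_name, next_count) in zip(steps, steps[1:]):
        rate = 1 - next_count / count if count else 0.0
        report.append((name, next_name, rate))
    return report


# Hypothetical visitor counts exported from an analytics tool.
funnel = [
    ("Product page", 10_000),
    ("Add to cart", 2_500),
    ("Checkout", 1_200),
    ("Purchase", 600),
]

for name, next_name, rate in funnel_dropoff(funnel):
    print(f"{name} -> {next_name}: {rate:.0%} drop-off")
```

With these made-up numbers, the biggest loss (75%) happens between viewing a product and adding it to the cart, so elements on the product page would be the first candidates for testing.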

Selecting a Testing Tool

Once you've identified what you want to test, you need a tool that can help you perform A/B tests effectively. There are numerous A/B testing tools available online, both free and paid options.

When selecting a testing tool, look for features like easy integration with your eCommerce platform; the ability to run multiple tests at once; support for different types of experiments (such as split URL or multivariate tests); detailed reporting capabilities; and flexible targeting options based on visitor attributes such as location or device type.

It's also crucial that any chosen tool has reliable customer service so that if issues arise during setup or implementation, they can provide timely assistance.

Determining Sample Size

The final step in setting up an A/B test is determining the sample size: how many visitors should see each variation? Larger samples produce more reliable results but take longer to collect, while small samples may not reflect actual performance accurately.

To determine the sample size correctly, use a statistical power analysis. Given your baseline conversion rate, the smallest difference you want to detect, the significance level, and the desired statistical power, it calculates the minimum number of visitors needed in each group before you can reliably detect a difference between the control group (original version) and the experimental group (variant).
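The power calculation can be sketched with Python's standard library. This version uses the usual normal-approximation formula for comparing two conversion rates with equal group sizes. The function name and the 10% to 12% example are assumptions for illustration, and dedicated calculators or testing tools may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group to detect a change from p_control to
    p_variant with a two-sided two-proportion z-test (normal approximation,
    equal group sizes)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2            # average rate under an equal split
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_control - p_variant) ** 2)


# Hypothetical goal: detect a lift from a 10% to a 12% conversion rate.
print(sample_size_per_group(0.10, 0.12))
```

For that hypothetical 10% to 12% lift, the formula asks for roughly 3,800 visitors per variation. Smaller expected lifts push the number up quickly, because the difference between the two rates is squared in the denominator.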

Interpreting A/B Test Results

After running an A/B test, it's important to know how to interpret the results in order to make data-driven decisions for site optimization. One key factor is statistical significance, which indicates how unlikely the observed difference between two variations would be if it were due to chance alone. Typically, a p-value of less than 0.05 (5%) is considered statistically significant.

For example, let's say we ran an A/B test comparing two different call-to-action buttons on our website, one green and one blue. In variation A (green button), 100 out of 1,000 visitors made a purchase, while in variation B (blue button), 130 out of 1,000 visitors made a purchase. A two-sided two-proportion z-test on these numbers gives a p-value of about 0.035, which means that if the two buttons truly performed the same, a difference at least this large would show up only about 3.5% of the time.

In this case, we can conclude that the blue button performed better at driving purchases than the green button, with statistical significance. This suggests that implementing the blue version may lead to an increase in conversions or sales.
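A p-value like this can be computed with a two-proportion z-test, one standard way to compare two conversion rates. The minimal sketch below uses only Python's standard library; the function name is hypothetical, and your testing tool may use a different test (for example, a chi-square test or a Bayesian method).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if A and B were identical
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided test
    return z, p_value


z, p = two_proportion_z_test(100, 1_000, 130, 1_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

For 100 purchases out of 1,000 visitors versus 130 out of 1,000, this sketch gives a z of about 2.1 and a two-sided p-value of roughly 0.035.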

However, it's also important to keep potential errors in mind when interpreting results, such as false positives, false negatives, and sample-size bias.

A false positive occurs when you declare a difference “significant” but it actually isn’t, meaning your conclusion was based on random chance rather than a meaningful difference between versions. A false negative occurs when you conclude there’s no difference between versions when there really is one, meaning you miss an opportunity for improvement.
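One way to see what a false positive rate means in practice is to simulate A/A tests, where both groups share the same true conversion rate, so any "significant" result is a false positive by construction. The sketch below is an illustration under assumed parameters (a 10% conversion rate, 1,000 visitors per group, a 0.05 threshold), not a procedure from any specific tool; the function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions at all (or all converted): nothing to compare
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulated_false_positive_rate(true_rate=0.10, visitors=1_000, runs=1_000,
                                  alpha=0.05, seed=42) -> float:
    """Run many A/A tests (both groups share the same true conversion rate)
    and return the share that are wrongly declared significant."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        conv_a = sum(rng.random() < true_rate for _ in range(visitors))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors))
        if p_value(conv_a, visitors, conv_b, visitors) < alpha:
            false_positives += 1
    return false_positives / runs


print(simulated_false_positive_rate())  # should land close to alpha, i.e. about 0.05
```

The takeaway is that a 0.05 threshold accepts roughly a 1-in-20 chance of a false positive on every test, which adds up if you run many tests or check results repeatedly.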

Let’s take another example: suppose we are testing two home page designs. Variation A has product images above the headlines, while Variation B has no product images at all. The A/B test showed a conversion rate of 7% for Variation A compared to 6% for Variation B. The p-value was 0.08, which is above the 0.05 threshold, so the result is not statistically significant.

On closer inspection, however, we discovered that some users with slower internet connections were having trouble loading the variation pages, which left the variations with unequal sample sizes and skewed the test results. So the non-significant result could be a false negative.

In order to avoid such errors, it's essential to have a large enough sample size for the A/B test. When the sample size is too small, there's a higher risk of sampling bias or random variation affecting the results. Additionally, it’s important to ensure that both groups are representative of your target audience.
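Unequal sample sizes like the ones in the home page example can be caught early with a sample ratio mismatch (SRM) check: a chi-square test comparing the number of visitors each variation actually received with the planned split. The sketch below assumes a planned 50/50 split and uses hypothetical visitor counts and a hypothetical function name. Many practitioners treat a very small p-value here (for example, below 0.001) as a sign that assignment or tracking is broken and the test results should not be trusted.

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) comparing the
    observed visitor split with the planned split. A very small p-value
    suggests traffic did not reach the variations as intended."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # With 1 degree of freedom, P(chi-square > chi2) equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical counts: a planned 50/50 split that ended up uneven.
print(srm_p_value(5_000, 4_600))
```

Running this check before looking at conversion rates helps separate "the variation performed worse" from "the variation never loaded properly for some visitors."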

Overall, interpreting A/B test results requires careful consideration of statistical significance and potential errors in order to make informed decisions for site optimization. By testing different variations of specific elements of your website, you can identify what works best for your customers and drive more conversions as well as revenue growth.

Using A/B Test Insights to Optimize Your Site

A/B testing is an excellent way to get data on how changes to your website affect user behavior. But it's not enough just to run A/B tests and look at the results; you need to use those insights to make data-driven decisions about optimizing your eCommerce site's structure. Here are some steps you can take:

Analyze Your Data

The first step in using A/B test insights for optimization is analyzing your data. You should be looking at conversion rates, click-through rates, bounce rates, and other metrics that indicate how users are interacting with your site.
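If your analytics export gives you one record per session, these per-variant metrics are straightforward to compute. The sketch below assumes a simple hypothetical record format (the `Session` class and `summarize` function are illustrative names) and treats a single-page session as a bounce, which is one common definition. Analytics tools differ in how they define bounces and click-throughs, so match the definitions your tool uses.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Session:
    variant: str       # "A" or "B"
    pageviews: int     # pages viewed during the session
    clicked_cta: bool  # clicked the call-to-action at least once
    converted: bool    # completed a purchase


def summarize(sessions):
    """Return conversion, click-through, and bounce rates per variant."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0, "clicks": 0, "bounces": 0})
    for s in sessions:
        t = totals[s.variant]
        t["sessions"] += 1
        t["conversions"] += int(s.converted)
        t["clicks"] += int(s.clicked_cta)
        t["bounces"] += int(s.pageviews == 1)  # single-page session treated as a bounce
    return {
        variant: {
            "conversion_rate": t["conversions"] / t["sessions"],
            "click_through_rate": t["clicks"] / t["sessions"],
            "bounce_rate": t["bounces"] / t["sessions"],
        }
        for variant, t in totals.items()
    }


# Hypothetical sessions, for illustration only.
sessions = [
    Session("A", pageviews=1, clicked_cta=False, converted=False),
    Session("A", pageviews=4, clicked_cta=True, converted=True),
    Session("B", pageviews=2, clicked_cta=True, converted=False),
    Session("B", pageviews=5, clicked_cta=True, converted=True),
]
print(summarize(sessions))
```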

For example, let's say you ran an A/B test where one group of users saw a pop-up asking them to sign up for your email list while another group didn't see the pop-up. If the conversion rate was higher for the group that saw the pop-up, it might be worth considering adding this feature permanently.
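Because a higher conversion rate in a single test can still be noise, it helps to report the difference together with a confidence interval before making a change permanent. The sketch below uses a simple normal-approximation (Wald) interval for the difference between two conversion rates; the function name and the counts for the pop-up and no-pop-up groups are hypothetical.

```python
import math
from statistics import NormalDist


def lift_with_ci(conv_control: int, n_control: int,
                 conv_variant: int, n_variant: int, confidence: float = 0.95):
    """Absolute difference in conversion rate (variant minus control) with a
    Wald confidence interval based on the normal approximation."""
    p_c = conv_control / n_control
    p_v = conv_variant / n_variant
    diff = p_v - p_c
    se = math.sqrt(p_c * (1 - p_c) / n_control + p_v * (1 - p_v) / n_variant)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return diff, (diff - z * se, diff + z * se)


# Hypothetical results: no pop-up (control) vs. email sign-up pop-up (variant).
diff, (low, high) = lift_with_ci(200, 5_000, 250, 5_000)
print(f"difference = {diff:.2%}, 95% CI = ({low:.2%}, {high:.2%})")
```

With these made-up numbers, the pop-up group converts at 5% versus 4%, and the 95% interval for the difference (roughly 0.2 to 1.8 percentage points) stays above zero, which supports keeping the pop-up. An interval that crosses zero would suggest collecting more data first.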

Identify High-Impact Changes

Once you have analyzed your data from multiple A/B tests, start identifying high-impact changes that could improve your site's performance. These might be small tweaks or major redesigns depending on what works best for your audience.

One successful example of a high-impact change comes from Basecamp, which tested its pricing page by removing unnecessary distractions such as social proof elements and focusing solely on product features and benefits. As a result of this change, it increased free trial sign-ups by 102%!

Prioritize Your Optimization Efforts

After identifying high-impact changes, prioritize the ones that will deliver the most value, weighing ease of implementation against the impact each change is likely to generate if implemented correctly.
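One lightweight way to weigh impact against ease of implementation is to score each candidate change and sort by the score. The sketch below multiplies two hypothetical 1-to-10 ratings; scoring frameworks such as ICE (impact, confidence, ease) follow the same idea with an extra factor. The class, function, candidate changes, and ratings are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    impact: int  # estimated impact, 1 (low) to 10 (high)
    ease: int    # ease of implementation, 1 (hard) to 10 (easy)

    @property
    def score(self) -> int:
        return self.impact * self.ease


def prioritize(candidates):
    """Sort candidate changes from highest to lowest impact x ease score."""
    return sorted(candidates, key=lambda c: c.score, reverse=True)


# Hypothetical backlog of changes identified from earlier tests.
backlog = [
    Candidate("Simplify pricing page", impact=8, ease=6),
    Candidate("Rewrite product copy", impact=4, ease=8),
    Candidate("Redesign checkout flow", impact=9, ease=3),
]
for c in prioritize(backlog):
    print(f"{c.score:>3}  {c.name}")
```

The ratings are judgment calls, so treat the ranking as a starting point for discussion rather than a precise answer.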

Continuing the Basecamp example above, prioritizing efforts would mean focusing resources on improving pricing features rather than experimenting with copywriting or marketing messages, since changes to pricing have a higher potential impact than copywriting experiments alone.

Implement Changes Based On Insights

Finally, implement these high-value optimizations, using designs informed by the insights and data gathered from A/B testing. Consider running additional experiments after implementing the changes to confirm that these optimizations are effective.

Conclusion

In conclusion, A/B testing is a crucial tool for eCommerce sites to boost conversion rates and optimize website structure. By testing different variations of design elements, content, and calls-to-action, businesses can identify what works best for their audience and make data-driven decisions to improve the user experience. It’s important for online business owners and marketers to start testing as soon as possible in order to stay competitive in today's digital landscape. Additionally, tools like Quick Creator can help streamline the optimization process by providing insights into website structure that may be hindering conversions. Overall, A/B testing should be an ongoing process that is integrated into any site optimization strategy for maximum success.